Author: Denis Avetisyan

A novel optimization approach leverages the power of quantum-inspired computing to build more robust and cost-effective supply chain networks.

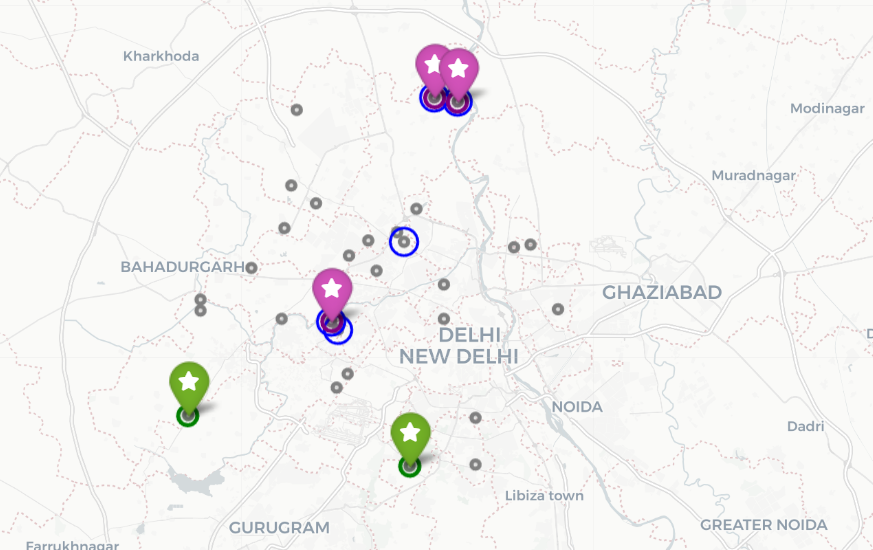

This review demonstrates a quantum-classical hybrid methodology for solving the facility location problem, achieving improved risk diversification and performance in a Delhi NCR case study.

Optimizing supply chain networks in dense urban environments presents a fundamental challenge: traditional methods excel at meeting demand but often incur prohibitive costs due to facility overlap and market cannibalization. This is addressed in ‘Bridging the Linear-Quadratic Gap: A Quantum-Classical Hybrid Approach to Robust Supply Chain Design’, which demonstrates that a quantum-inspired optimization approach can significantly reduce operational risk and improve network resilience. Using a high-fidelity digital twin of the Delhi NCR, the study reveals a 35.8% improvement in overlap risk compared to classical greedy algorithms, achieved by strategically diversifying facility locations beyond traditional high-density central areas. Could this hybrid approach offer a pathway towards more robust and spatially balanced supply chains capable of navigating the complexities of modern megacities?

Decoding Supply Chain Complexity: Patterns of Resilience

Conventional supply chain strategies, designed for predictable, linear systems, increasingly falter when confronted with the intricacies of contemporary networks. These modern systems are characterized by globalization, just-in-time inventory, and a proliferation of interconnected suppliers, distributors, and consumers. This inherent complexity introduces substantial vulnerabilities; a disruption at any single node can cascade rapidly, impacting production, increasing costs, and delaying deliveries. Traditional models, often prioritizing cost minimization above all else, lack the agility to swiftly reroute shipments, diversify sourcing, or absorb unexpected shocks. Consequently, businesses face heightened risks from geopolitical instability, natural disasters, and even localized events, highlighting the urgent need for more robust and adaptable supply chain designs that prioritize resilience alongside efficiency.

The Delhi National Capital Region (NCR) presents a compelling case study in modern supply chain difficulties, largely due to its unprecedented and multifaceted expansion. This sprawling urban agglomeration, encompassing Delhi and surrounding cities, experiences a uniquely complex logistical landscape characterized by a high density of manufacturers, distributors, and consumers. The sheer volume of goods moving through the region, coupled with a rapidly evolving infrastructure struggling to keep pace, creates persistent bottlenecks and vulnerabilities. Unlike more established and predictable supply chains, the Delhi NCR network is defined by informal sectors, variable road conditions, and a constant influx of migrant labor – all contributing to unpredictability and hindering efforts to implement standardized optimization strategies. Consequently, the region serves as a microcosm of the challenges facing supply chains globally, demanding innovative solutions focused on adaptability and real-time responsiveness rather than simply minimizing costs.

Modern supply chain optimization transcends simple cost reduction, necessitating a fundamental shift towards prioritizing resilience and adaptability. Networks are no longer evaluated solely on efficiency, but on their capacity to withstand – and rapidly recover from – disruptions like geopolitical instability, natural disasters, or unexpected demand fluctuations. This requires proactive strategies such as diversifying sourcing, building buffer stocks of critical components, and investing in real-time visibility technologies. Furthermore, truly adaptable supply chains leverage data analytics and predictive modeling to anticipate potential vulnerabilities and dynamically re-route resources, ensuring continued operation even under duress. The focus is moving from ‘just-in-time’ to ‘just-in-case’, recognizing that the cost of disruption often far outweighs the expense of building in redundancy and flexibility.

Facility Location: Uncovering the Geometry of Optimization

The Facility Location Problem (FLP) is a fundamental optimization challenge within supply chain management focused on minimizing the costs associated with serving customers from a network of potential facility locations. This involves determining the optimal number, location, and capacity of facilities – such as warehouses, distribution centers, or retail stores – to efficiently meet customer demand. The problem considers various cost factors including fixed facility opening costs, transportation costs from facilities to customers, and potentially, inventory holding costs. Formally, the FLP seeks to minimize a total cost function subject to constraints ensuring all demand is satisfied. Effective solutions directly impact logistical expenses, delivery times, and overall supply chain responsiveness, making it a core component of strategic network design.
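To make the cost function concrete, here is a minimal sketch of the uncapacitated variant, in which each customer is simply served by its cheapest open site. The instance (`fixed_cost`, `transport_cost`) and the function names are hypothetical and are not taken from the study; inventory and capacity terms are left out.

```python
from itertools import combinations

# Hypothetical toy instance: 3 candidate sites, 4 customers.
fixed_cost = [10.0, 12.0, 8.0]           # cost of opening each site
transport_cost = [                        # transport_cost[site][customer]
    [2.0, 9.0, 7.0, 8.0],
    [8.0, 3.0, 2.0, 9.0],
    [9.0, 8.0, 6.0, 1.0],
]

def total_cost(open_sites):
    """Fixed costs of the open sites plus each customer's cheapest open site."""
    if not open_sites:
        return float("inf")               # with no open site, demand cannot be served
    opening = sum(fixed_cost[i] for i in open_sites)
    n_customers = len(transport_cost[0])
    serving = sum(min(transport_cost[i][j] for i in open_sites)
                  for j in range(n_customers))
    return opening + serving

def brute_force():
    """Enumerate every non-empty subset of sites; only viable for tiny instances."""
    sites = range(len(fixed_cost))
    subsets = (c for k in range(1, len(fixed_cost) + 1)
               for c in combinations(sites, k))
    return min(subsets, key=total_cost)

best = brute_force()
print(best, total_cost(best))
```

Enumeration is used here only because the instance is tiny; the number of subsets doubles with every additional candidate site.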

Linear Mathematical Optimisation (LMO) and Greedy Algorithms represent initial solution approaches to the Facility Location Problem, but their efficacy diminishes with increasing complexity. LMO, while capable of finding globally optimal solutions, experiences computational intractability – exponential increases in processing time – as the number of potential facility locations and demand points grows. Greedy Algorithms offer faster, heuristic solutions by iteratively selecting the ‘best’ location at each step; however, this local optimization approach often fails to identify the globally optimal configuration, resulting in suboptimal total costs. Specifically, scenarios involving fixed facility costs, varying transportation costs, and capacity constraints exacerbate the limitations of these foundational methods, necessitating more advanced techniques to achieve realistic and scalable solutions.
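The gap between a greedy choice and the global optimum can be seen on a deliberately small example. The sketch below assumes a coverage-style objective (count of demand points served by two sites); the `coverage` sets are invented for illustration, and this is not the greedy baseline used in the study.

```python
from itertools import combinations

# Hypothetical coverage sets: which demand points each candidate site can serve.
coverage = {
    "A": {1, 2, 3, 4},
    "B": {1, 2, 5},
    "C": {3, 4, 6},
}

def greedy_select(coverage, k):
    """Pick k sites, each time adding the one that covers the most new demand."""
    chosen, covered = [], set()
    for _ in range(k):
        candidates = [s for s in coverage if s not in chosen]
        best = max(candidates, key=lambda s: len(coverage[s] - covered))
        chosen.append(best)
        covered |= coverage[best]
    return chosen, covered

def exhaustive_select(coverage, k):
    """Check every k-subset; exact, but the number of subsets grows combinatorially."""
    def covered_by(combo):
        return set().union(*(coverage[s] for s in combo))
    best = max(combinations(coverage, k), key=lambda c: len(covered_by(c)))
    return list(best), covered_by(best)

greedy_sites, greedy_cov = greedy_select(coverage, 2)
exact_sites, exact_cov = exhaustive_select(coverage, 2)
print(greedy_sites, len(greedy_cov))
print(exact_sites, len(exact_cov))
```

Greedy commits to site A because it looks best in isolation, after which neither remaining site adds much; the exhaustive search finds that B and C together cover more.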

The Linear-Quadratic Gap in facility location optimization arises because minimizing the sum of individual facility costs and transportation costs (a linear objective) does not necessarily yield the same solution as an objective that also considers pairwise interactions between facilities, which introduces quadratic terms. A linear objective scores each facility independently, so it cannot express that two nearby facilities compete for the same customers; the resulting overlap and market cannibalization only appear once products of pairs of location decisions enter the objective. As a result, a configuration that is optimal under the linear model can perform noticeably worse once those interactions are priced in. Addressing this gap requires solution techniques capable of handling the quadratic terms, such as branch-and-bound algorithms, or alternative formulations that more accurately reflect the non-linear cost structure of the problem.
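A small numeric sketch shows how a pairwise term can change the preferred configuration. The `demand` and `overlap` values, the site names, and the `penalty` weight are all hypothetical; the study's actual objective is not reproduced here.

```python
from itertools import combinations

# Hypothetical numbers: standalone demand per site and pairwise catchment overlap.
demand = {"central_1": 100, "central_2": 95, "suburb": 80}
overlap = {
    ("central_1", "central_2"): 60,   # the two central sites share most customers
    ("central_1", "suburb"): 5,
    ("central_2", "suburb"): 5,
}

def linear_value(sites):
    """Linear view: each site is scored on its own."""
    return sum(demand[s] for s in sites)

def quadratic_value(sites, penalty=1.0):
    """Quadratic view: subtract a penalty for each pair of chosen sites that overlap."""
    pairs = combinations(sorted(sites), 2)
    return linear_value(sites) - penalty * sum(overlap[p] for p in pairs)

candidates = list(combinations(sorted(demand), 2))
best_linear = max(candidates, key=linear_value)
best_quadratic = max(candidates, key=quadratic_value)
print(best_linear, linear_value(best_linear))
print(best_quadratic, quadratic_value(best_quadratic))
```

Under the linear score the two central sites win; once the overlap term is included, pairing one central site with the suburban one scores higher.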

Demand capture, in the context of facility location, refers to the proportion of potential customer demand served by each facility in the network. Accurately modeling this requires understanding customer behavior, including travel distances, service level expectations, and competitor locations. Simplified models often assume all demand within a radius is captured, but real-world scenarios involve probabilistic capture rates dependent on factors like price, brand preference, and service quality. Inaccurate demand capture estimations lead to suboptimal facility placement, potentially resulting in underutilized capacity, increased transportation costs, and reduced customer satisfaction. Advanced models incorporate techniques like discrete choice modeling and spatial econometrics to more realistically predict demand allocation across facilities, improving the overall responsiveness and efficiency of the supply chain network.
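The difference between radius-based and probabilistic capture can be sketched as follows. The second function uses a Huff-style distance-decay share as one possible probabilistic model; the exponent `beta`, the attractiveness values, and the coordinates are assumptions for illustration and are not taken from the study.

```python
import math

def radius_capture(customer, facilities, radius):
    """Simplified model: a customer is fully captured if any facility is within radius."""
    return 1.0 if any(math.dist(customer, f) <= radius for f in facilities) else 0.0

def huff_shares(customer, facilities, attractiveness, beta=2.0):
    """Huff-style shares: the pull of each facility falls off with distance**beta."""
    utilities = [a / max(math.dist(customer, f), 1e-9) ** beta
                 for f, a in zip(facilities, attractiveness)]
    total = sum(utilities)
    return [u / total for u in utilities]

customer = (0.0, 0.0)
ours, rival = (1.0, 0.0), (2.0, 0.0)

captured = radius_capture(customer, [ours], radius=1.5)
shares = huff_shares(customer, [ours, rival], [1.0, 1.0])
print(captured)   # radius model: all-or-nothing
print(shares)     # probabilistic model: demand is split with the rival
```

The radius model credits the nearby facility with the whole customer, while the share-based model splits that demand once a competitor is present.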

Quantum-Inspired Computing: Charting a New Course for Supply Chain Resilience

Quantum-inspired computing applies concepts from quantum mechanics – such as superposition and tunneling – to develop algorithms for classical computers. These algorithms are particularly suited to addressing complex combinatorial optimization problems, including the Facility Location Problem (FLP). Unlike traditional methods that can become computationally intractable with increasing problem size, quantum-inspired techniques aim to efficiently explore a vast solution space. This is achieved through algorithms that probabilistically converge towards optimal or near-optimal solutions, offering a potential advantage in scenarios requiring rapid decision-making under uncertainty and complex constraints.

Simulated Annealing and Reverse Annealing are probabilistic metaheuristic techniques utilized to approximate solutions to complex optimization problems, including the Facility Location Problem (FLP). Both methods efficiently explore the solution space by accepting not only improving solutions but, with a certain probability, also solutions that worsen the current objective function – a mechanism designed to escape local optima. The Quadratic Unconstrained Binary Optimization (QUBO) formulation is frequently employed to represent the FLP in a manner suitable for these algorithms, transforming the problem into finding the minimum energy state of a spin glass. This QUBO representation allows for leveraging specialized hardware and algorithms designed for solving quadratic binary optimization problems, accelerating the search process and enabling efficient navigation of the potentially vast solution space.
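As an illustration of the acceptance rule described above, here is a minimal simulated-annealing sketch over a four-variable QUBO. It is plain simulated annealing with single-bit flips and geometric cooling, not reverse annealing and not the solver used in the study; the matrix `Q`, the cooling parameters, and the seed are hypothetical.

```python
import math
import random
from itertools import product

# Hypothetical 4-site QUBO: diagonal = -(demand gain), off-diagonal = overlap penalty.
Q = {
    (0, 0): -3.0, (1, 1): -3.0, (2, 2): -2.0, (3, 3): -2.0,
    (0, 1): 4.0,  (0, 2): 0.5,  (0, 3): 0.5,
    (1, 2): 0.5,  (1, 3): 0.5,  (2, 3): 3.0,
}

def energy(x):
    """QUBO energy: sum of Q[i, j] * x[i] * x[j] over the upper triangle."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def simulated_annealing(n, steps=2000, t_start=5.0, t_end=0.01, seed=0):
    rng = random.Random(seed)
    x = [rng.randint(0, 1) for _ in range(n)]
    current = energy(x)
    best_x, best_e = x[:], current
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1                                   # propose a single bit flip
        candidate = energy(x)
        delta = candidate - current
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current = candidate                     # accept, possibly uphill
            if current < best_e:
                best_x, best_e = x[:], current
        else:
            x[i] ^= 1                               # reject: undo the flip
    return best_x, best_e

best_x, best_e = simulated_annealing(4)
exact = min(energy(x) for x in product((0, 1), repeat=4))
print(best_x, best_e, exact)
```

The brute-force `exact` value is included only as a check on this toy instance; on realistic problem sizes that enumeration is not available, which is the reason for using a heuristic in the first place.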

Branch-and-Bound is a deterministic algorithm used to find the optimal solution to combinatorial optimization problems. It operates by systematically enumerating candidate solutions, dividing the problem into subproblems, and discarding branches of the search tree that provably cannot lead to a better solution than the current best. While this approach guarantees identification of the globally optimal solution, the computational cost can increase exponentially with problem size due to the exhaustive search. The algorithm’s efficiency is heavily influenced by the quality of the bounding function used to prune the search space; a tighter bound leads to more effective pruning and reduced computation, but may be difficult to derive for complex problems.
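The pruning idea can be sketched on a small selection problem: choose exactly `k` sites to maximize standalone gain minus pairwise overlap penalties. The `gain` and `penalty` values are hypothetical, and the bounding function is a simple optimistic one chosen for clarity (add the largest remaining gains and ignore further penalties), which is valid only because the penalties are non-negative.

```python
gain = [100, 95, 80, 60]     # hypothetical standalone demand per site
penalty = {(0, 1): 60, (0, 2): 5, (0, 3): 10,
           (1, 2): 5, (1, 3): 10, (2, 3): 20}   # hypothetical pairwise overlap

def branch_and_bound(k):
    """Choose exactly k sites maximizing gain minus pairwise overlap penalties."""
    n = len(gain)
    best = {"value": float("-inf"), "sites": None, "nodes": 0}

    def upper_bound(index, chosen, value):
        # Optimistic: take the largest remaining gains and ignore further penalties.
        remaining = sorted(gain[index:], reverse=True)
        return value + sum(remaining[: k - len(chosen)])

    def visit(index, chosen, value):
        best["nodes"] += 1
        if len(chosen) == k:
            if value > best["value"]:
                best["value"], best["sites"] = value, tuple(chosen)
            return
        if n - index < k - len(chosen):
            return                       # not enough sites left to reach k
        if upper_bound(index, chosen, value) <= best["value"]:
            return                       # prune: this branch cannot beat the incumbent
        # Branch 1: open site `index`, paying overlap with every already-open site.
        added = gain[index] - sum(penalty[(j, index)] for j in chosen)
        visit(index + 1, chosen + [index], value + added)
        # Branch 2: skip site `index`.
        visit(index + 1, chosen, value)

    visit(0, [], 0)
    return best

result = branch_and_bound(2)
print(result["sites"], result["value"], result["nodes"])
```

A tighter bound that also accounted for unavoidable penalties would prune more branches, at the price of a more involved bounding function.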

Quantum-inspired computing methods, when applied to supply chain optimization, demonstrate improved resilience through efficient adaptation to fluctuating demand and network disruptions. Performance metrics indicate a 21.8% reduction in operational risk, as quantified by an overlap score of 3.26, when compared to traditional exact solvers which achieved an overlap score of 4.17. This suggests that while exact solvers may identify optimal solutions in ideal conditions, the quantum-inspired approaches provide a more robust solution in dynamic, real-world scenarios, balancing optimality with adaptability and risk mitigation.

Digital Twins: Mirroring Reality for Proactive Supply Chain Management

A Digital Twin functions as a dynamic virtual representation of a physical supply chain, moving beyond static mapping to offer continuous, real-time monitoring of operational performance. This technology ingests data from various sources – including logistics providers, sensor networks, and market demands – to create a living model of the entire network. Consequently, stakeholders gain unprecedented visibility into key metrics such as inventory levels, transit times, and potential bottlenecks. More importantly, the Digital Twin isn’t simply observational; it allows for proactive what-if simulations. By virtually testing the impact of disruptions – a port closure, a supplier failure, or a sudden surge in demand – the system identifies vulnerabilities and enables preemptive adjustments to maintain a resilient and efficient flow of goods. This capability moves supply chain management from reactive problem-solving to proactive risk mitigation and performance optimization.

The foundation of a robust digital twin for supply chain management relies heavily on access to comprehensive and accurate geospatial data, and OpenStreetMap (OSM) increasingly serves as a critical resource. This collaboratively edited map database offers a wealth of information (road networks, points of interest, and geographic boundaries) essential for constructing detailed virtual representations of physical supply chains. Unlike proprietary mapping solutions, OSM’s openly licensed data allows for customization and integration with other data streams, enabling businesses to model complex logistics with greater precision. The granular detail available within OSM facilitates not only visualization of transportation routes but also the analysis of potential disruptions, delivery times, and the impact of geographical factors on network performance. By leveraging the continuously updated data within OSM, organizations can build dynamic digital twins that accurately reflect real-world conditions and support proactive risk management.

The power of a digital twin supply chain lies in its ability to proactively address logistical challenges, and algorithms like Dijkstra’s Algorithm are central to this capability. By mapping the supply chain as a network of nodes and connections, this algorithm efficiently calculates the shortest and most cost-effective routes between any two points, considering factors like distance, traffic, and transportation costs. Beyond simple route planning, the digital twin leverages Dijkstra’s Algorithm for disruption analysis; should a link in the network fail – a port closure, a road blockage, or a supplier issue – the algorithm rapidly identifies alternative pathways and quantifies the impact on delivery times and costs. This allows for swift adjustments to mitigate disruptions, rerouting shipments and proactively informing stakeholders, ultimately enhancing the resilience and responsiveness of the entire supply chain operation.
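The routing and disruption analysis described above can be sketched with a standard heap-based Dijkstra implementation. The road network, node names, and travel times below are hypothetical, and `without_edge` is an illustrative helper for simulating a closure rather than part of any digital-twin toolkit.

```python
import heapq

def dijkstra(graph, source):
    """Shortest travel cost from source to every reachable node (non-negative weights)."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if d > dist.get(node, float("inf")):
            continue                          # stale queue entry
        for neighbor, weight in graph.get(node, {}).items():
            nd = d + weight
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                heapq.heappush(heap, (nd, neighbor))
    return dist

def without_edge(graph, u, v):
    """Copy of the graph with the undirected link u-v removed (a simulated closure)."""
    return {n: {m: w for m, w in nbrs.items() if {n, m} != {u, v}}
            for n, nbrs in graph.items()}

# Hypothetical road network; weights are travel times in minutes.
roads = {
    "warehouse": {"junction": 10, "ring_road": 25},
    "junction": {"warehouse": 10, "customer": 15},
    "ring_road": {"warehouse": 25, "customer": 20},
    "customer": {"junction": 15, "ring_road": 20},
}

baseline = dijkstra(roads, "warehouse")["customer"]
blocked = without_edge(roads, "junction", "customer")
disrupted = dijkstra(blocked, "warehouse")["customer"]
print(baseline, disrupted, disrupted - baseline)
```

Re-running the search on the modified graph gives both the alternative route cost and the delay attributable to the closure. Real-time factors such as traffic would enter through the edge weights.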

Supply chain resilience is significantly improved by applying principles of Modern Portfolio Theory, traditionally used in finance, to resource distribution. This approach strategically diversifies network dependencies, minimizing the impact of localized disruptions – much like a diversified investment portfolio reduces financial risk. Recent studies quantify this benefit using the Overlap Score, a metric that assesses the degree of shared vulnerability between different supply chain pathways; a quantum-inspired resource allocation strategy achieved an Overlap Score of 3.26, demonstrating effective diversification. Importantly, this enhanced resilience came with a minimal trade-off – a mere 3.2% decrease in overall demand capture, reducing fulfilled orders from 465 to 450 – suggesting that proactive risk mitigation doesn’t necessarily equate to significant logistical losses.
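The reported percentages can be checked directly from the quoted figures. The sketch below uses only numbers stated in this article (Overlap Scores of 4.17 for the exact solver and 3.26 for the quantum-inspired approach, and demand capture of 465 versus 450); `relative_change` is an illustrative helper, and the definition of the Overlap Score itself is not reproduced here.

```python
def relative_change(before, after):
    """Fractional change from `before` to `after` (negative means a decrease)."""
    return (after - before) / before

risk_change = relative_change(4.17, 3.26)     # Overlap Score: exact solver vs quantum-inspired
capture_change = relative_change(465, 450)    # fulfilled demand

print(f"overlap score change: {risk_change:.1%}")
print(f"demand capture change: {capture_change:.1%}")
```

Both results match the figures quoted in the text: roughly a 21.8% lower Overlap Score in exchange for about 3.2% less captured demand.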

The pursuit of robust supply chain design, as detailed in this study, echoes a sentiment often attributed to Ernest Rutherford: “If you can’t explain it to your grandmother, you don’t understand it well enough.” This principle applies directly to the complex interplay of factors influencing supply chain resilience. The research bridges the gap between linear and quadratic optimization, simplifying a traditionally convoluted problem to reveal underlying dependencies. By employing a quantum-inspired approach, the study illuminates how facility location and risk diversification can be balanced, a clarity crucial for practical implementation. Every deviation from expected outcomes, as the analysis shows, offers an opportunity to refine the model and enhance its predictive power.

Beyond the Horizon

The demonstrated utility of quantum-inspired optimization within supply chain design, while promising, merely sketches the edges of a far more complex landscape. The current work rightly focuses on facility location, but real-world networks are dynamic, multi-echelon systems. Future investigations should rigorously examine how these approaches scale to encompass time-varying demand, transportation complexities, and the interplay between multiple, interconnected facilities. Any such extension should also validate the boundaries of its input data to avoid spurious patterns; a beautifully optimized solution is worthless if it rests on flawed input.

A significant limitation lies in the reliance on a digital twin – a simplification of reality. The fidelity of this twin directly dictates the quality of the resulting supply chain. Research must address the challenges of maintaining an accurate and up-to-date digital representation, particularly in volatile environments. Furthermore, the interplay between risk diversification and cost optimization deserves deeper consideration; a truly robust network might necessitate a degree of redundancy that conventional cost-benefit analyses fail to capture.

The Delhi NCR case study provides a valuable anchor, but generalization remains a concern. The inherent characteristics of that region – its infrastructure, economic profile, and regulatory environment – may not translate seamlessly to other contexts. Exploring alternative geographical settings, and systematically varying model parameters, will be crucial for establishing the broader applicability – and ultimate utility – of this quantum-inspired methodology.

Original article: https://arxiv.org/pdf/2601.04095.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-08 12:40