Author: Denis Avetisyan

Researchers have developed NAVe, a formal verification system designed to proactively identify vulnerabilities in zero-knowledge circuits built with the Noir programming language.

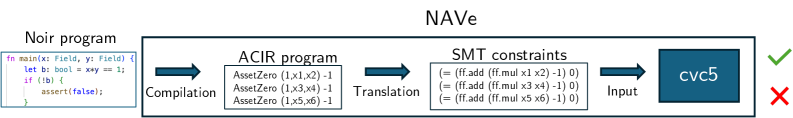

NAVe translates Noir’s ACIR intermediate representation into SMT-LIB constraints, enabling the use of powerful solvers to detect underconstrained code and other potential bugs.

Despite the growing adoption of zero-knowledge (ZK) proofs for privacy and security, ensuring the correctness of complex ZK programs remains a significant challenge. This paper introduces NAVe, a formal verification framework for programs written in Noir, a domain-specific language that simplifies the development of ZK circuits. NAVe translates Noir’s intermediate representation (ACIR) into SMT-LIB constraints and uses SMT solvers to check rigorously for underconstrained code and other potential bugs, offering a practical approach to verifying the behavior of ZK programs. Evaluation on diverse sets of Noir programs demonstrates NAVe’s applicability and identifies areas where the formal verification of finite-field arithmetic needs optimization, leaving open which further improvements could unlock more robust and scalable ZK systems.

Unveiling the Zero-Knowledge Horizon

The burgeoning Web3 ecosystem, built on the principles of decentralization and user empowerment, is simultaneously facing increasing demands for both privacy and scalability. Traditional blockchain architectures, while revolutionary in their transparency, often expose user data and struggle to handle large transaction volumes efficiently. This creates a fundamental tension – the desire for open, verifiable systems clashes with the need for confidential transactions and rapid processing. Consequently, developers are actively seeking solutions that can reconcile these competing priorities, enabling a new generation of decentralized applications (dApps) capable of supporting mass adoption without compromising individual privacy. The pursuit of these solutions is driving innovation in areas like zero-knowledge proofs and other privacy-enhancing technologies, essential for realizing the full potential of a truly decentralized web.

Zero-knowledge proof systems represent a paradigm shift in data verification: they make it possible to confirm that a statement is true without exposing the information behind it. These systems achieve this through cryptographic protocols in which one party, the prover, convinces another, the verifier, of a statement’s validity. Crucially, the verifier gains no additional knowledge beyond the truth of the statement; the underlying data remains concealed. This capability is particularly relevant in contexts that demand privacy, such as secure authentication, confidential transactions, and private data analysis, offering an alternative to traditional methods that require full disclosure of data for verification. The implications include stronger security, reduced exposure to data breaches, and greater trust in data-sensitive applications.

Central to zero-knowledge systems is a carefully constructed interaction between a Prover and a Verifier. The Prover aims to convince the Verifier that a statement is valid, not by disclosing the data that supports it, but by producing a cryptographic proof. The proof may be an interactive exchange of messages or, in many deployed systems, a single non-interactive message; either way, it is designed so that a prover who does not actually know the supporting data can convince the Verifier only with negligible probability. Crucially, the Verifier learns that the statement is true but gains no knowledge of the specific data that makes it true. This combination enables secure authentication, confidential transactions, and private data verification, all without disclosing the underlying information.
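To make the commit, challenge, response pattern concrete, the sketch below implements the Schnorr identification protocol, a classic interactive proof that the prover knows the secret exponent x behind a public value h = G^x. It is not the proof system used by Noir or analyzed by NAVe, and the toy group parameters (P = 23, Q = 11, G = 4) are assumptions chosen for readability; real deployments use groups of roughly 256 bits. A prover who does not know x can pass a round only by guessing the challenge in advance, which succeeds with probability 1/Q per round, and the protocol is zero-knowledge against an honest verifier.

```python
import secrets

# Toy group parameters (assumption, illustration only):
# G = 4 generates a subgroup of prime order Q = 11 inside the integers mod P = 23.
P, Q, G = 23, 11, 4

def public_key(x: int) -> int:
    """Public value h = G^x mod P; the exponent x is the prover's secret."""
    return pow(G, x, P)

def prover_commit() -> tuple[int, int]:
    """Step 1: pick a random nonce r, keep it secret, and send t = G^r."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)

def verifier_challenge() -> int:
    """Step 2: the verifier replies with a random challenge c in [0, Q)."""
    return secrets.randbelow(Q)

def prover_respond(r: int, c: int, x: int) -> int:
    """Step 3: respond with s = r + c*x mod Q; the random nonce r masks x."""
    return (r + c * x) % Q

def verifier_check(h: int, t: int, c: int, s: int) -> bool:
    """Accept iff G^s == t * h^c (mod P), which holds when s was built from the real x."""
    return pow(G, s, P) == (t * pow(h, c, P)) % P
```

A full round runs commit, challenge, respond, and check; repeating k independent rounds drives a cheating prover's success probability down to (1/Q)^k.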

The core of a zero-knowledge proof system is a verifiable relation between publicly available information, termed the ‘public input’, and privately held data known as the ‘witness’. The goal is not simply to prove that something is true, but to demonstrate that the concealed witness satisfies a predefined relation together with the public input. Consider a function f with f(x) = y: the public input is ‘y’, the witness is ‘x’, and the relation is ‘f(x) = y’. The prover demonstrates knowledge of an ‘x’ satisfying this equation without ever revealing the value of ‘x’ itself. This balance, proving knowledge without disclosure, is the foundation on which privacy-preserving applications are built, enabling trust and verification without compromising sensitive information.
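A relation is simply a predicate over a public input and a witness. The sketch below uses a standard illustration, knowledge of a SHA-256 preimage, rather than anything specific to NAVe: the public input y is a digest and the witness x is a message that hashes to it. Evaluating the predicate directly, as done here, requires x; a zero-knowledge proof lets the prover convince a verifier that such an x is known without sending it.

```python
import hashlib

def relation(public_y: bytes, witness_x: bytes) -> bool:
    """R(y; x): true exactly when the secret witness x hashes to the public digest y."""
    return hashlib.sha256(witness_x).digest() == public_y

# The statement (the digest) is public; the witness (the message) stays with the prover.
public_y = hashlib.sha256(b"correct horse battery staple").digest()
```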

Deconstructing Computation: The Arithmetic Circuit Foundation

Arithmetic circuits are foundational to Zero-Knowledge (ZK) proofs because they decompose a computation into a series of constraints – mathematical equations that must hold true for the computation to be valid. These circuits consist of arithmetic operations – addition, multiplication – performed on values within a finite field. Each operation becomes a constraint, and the entire computation is expressed as a set of these constraints. A ZK proof then verifies that these constraints are satisfied without revealing the specific input values used in the computation. The efficiency and security of a ZK proof are directly related to how effectively the original computation can be represented as a minimal and well-structured arithmetic circuit; a smaller circuit generally translates to a faster and more efficient proof.
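To illustrate how a computation becomes constraints, the sketch below flattens y = x^3 + x + 5 into one operation per constraint, introducing intermediate wires t1 and t2. The modulus P = 101 is a toy assumption and the dictionary-based witness is a hypothetical format; real compilers emit their own representations (ACIR, in Noir’s case). A witness is valid exactly when every constraint evaluates to zero modulo P.

```python
P = 101  # toy prime modulus (assumption); real ZK fields are ~254 bits

# Flattened form of y = x**3 + x + 5, one operation per constraint:
#   t1 = x * x
#   t2 = t1 * x
#   y  = t2 + x + 5
def constraints(w: dict) -> list[int]:
    """Each entry must be 0 (mod P) for the witness w to be valid."""
    return [
        w["t1"] - w["x"] * w["x"],
        w["t2"] - w["t1"] * w["x"],
        w["y"] - (w["t2"] + w["x"] + 5),
    ]

def satisfied(w: dict) -> bool:
    return all(c % P == 0 for c in constraints(w))

def honest_witness(x: int) -> dict:
    """Compute every wire the way an honest prover would."""
    t1 = x * x % P
    t2 = t1 * x % P
    return {"x": x, "t1": t1, "t2": t2, "y": (t2 + x + 5) % P}
```

Tampering with a single wire, for example setting t2 to 28 in the witness for x = 3, makes at least one constraint nonzero, so the witness is rejected.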

Specialized domain-specific languages (DSLs) such as Noir, Cairo, and Circom facilitate the creation of arithmetic circuits by letting developers express complex computations in a high-level, human-readable form. These languages abstract away the intricacies of working directly with finite-field arithmetic and constraint systems. Their compilers translate source code into a set of arithmetic constraints, equations built from field additions and multiplications (subtraction being addition of a negated value), that define the relationships among input, output, and intermediate variables. The resulting constraints form the core of the circuit, and their satisfaction verifies the correctness of the original computation. Each language takes a different approach to circuit construction, affecting expressiveness, performance, and ease of use, but all serve the same function: converting program logic into a verifiable mathematical representation.

Arithmetic circuit design requires distinguishing between constrained and unconstrained code. Constrained code translates directly into arithmetic constraints, equations that any valid proof must satisfy, and forms the core of the verifiable computation. Unconstrained code (in Noir, functions marked `unconstrained`) runs only on the prover’s side and produces values, often called hints, that the circuit then consumes; this is useful because some results, such as a field inverse, are expensive to compute inside a circuit but cheap to check. Because no constraint ties a hint to the computation that produced it, a malicious prover can substitute any value it likes. The circuit must therefore add constraints that check every hint it relies on; omitting such a check leaves the circuit underconstrained, meaning it accepts witnesses, and therefore proofs, for incorrect results. Detecting this class of bug is a central goal of NAVe.
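The classic is-zero gadget shows how a missing check produces an underconstrained circuit. The prover supplies a hint inv (the inverse of x when x is nonzero, typically computed in unconstrained code), and the circuit sets out = 1 - x*inv and asserts x*out = 0, so that out is 1 exactly when x is 0. This brute-force sketch, over a hypothetical toy field of size P = 7, enumerates every hint a prover could supply and collects the outputs the constraints accept. It illustrates the bug class only: NAVe reasons symbolically with an SMT solver rather than enumerating, and real fields are far too large to enumerate.

```python
P = 7  # toy prime modulus (assumption); Noir circuits use a ~254-bit field

def is_zero_constraints(x: int, inv: int, out: int, include_second: bool = True) -> bool:
    """True if the witness (inv, out) satisfies the is-zero gadget for input x."""
    c1 = (out - (1 - x * inv)) % P == 0  # out = 1 - x*inv
    c2 = (x * out) % P == 0              # x*out = 0
    return c1 and (c2 or not include_second)

def possible_outputs(x: int, include_second: bool = True) -> set[int]:
    """Every value of out that some choice of hint inv makes the constraints accept."""
    return {out for inv in range(P) for out in range(P)
            if is_zero_constraints(x, inv, out, include_second)}

def is_underconstrained(include_second: bool) -> bool:
    """Underconstrained if some input admits more than one accepted output."""
    return any(len(possible_outputs(x, include_second)) > 1 for x in range(P))
```

With both constraints, every input admits exactly one output. Dropping x*out = 0 lets a prover make out take any field value for any nonzero x, a flaw that tests run with honestly computed hints do not expose.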

Range check constraints are essential in arithmetic circuits because ZK computations are performed in a finite field, where every value is an integer modulo a prime p and therefore already lies in [0, p-1]. Field arithmetic wraps around modulo p: in the field, 0 - 1 equals p - 1, a very large number. Programs, however, usually reason about bounded integer types such as u8 or u32. Without range checks, a value meant to be an 8-bit integer could silently wrap around and take any field value, yielding incorrect results that still produce a valid proof. A range check constrains a value to a smaller interval, typically [0, 2^k - 1] for a k-bit type, so that integer overflow and underflow are caught rather than absorbed by modular wraparound. Range checks account for a significant share of the constraints in many ZK circuits, increasing proving cost.
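The sketch below shows both the wraparound problem and one common way to range-check a value: decompose it into k bits, constrain each bit to be 0 or 1 with b*(b - 1) = 0, and constrain the weighted sum of the bits to equal the value. The modulus is the BN254 scalar field, assumed here as a typical choice for Noir backends; the helper names are hypothetical. Because k boolean bits can only represent values below 2^k, no witness satisfies these constraints for a wrapped-around value such as 3 - 5 = P - 2.

```python
# BN254 scalar field modulus (assumed here as a typical field for Noir backends).
P = 21888242871839275222246405745257275088548364400416034343698204186575808495617

def field_sub(a: int, b: int) -> int:
    """Field subtraction: wraps around modulo P instead of going negative."""
    return (a - b) % P

def decompose(value: int, num_bits: int) -> list[int]:
    """Honest prover's hint: the low num_bits bits of value, least significant first."""
    return [(value >> i) & 1 for i in range(num_bits)]

def range_check(value: int, num_bits: int, bits: list[int]) -> bool:
    """Constraints of a bit-decomposition range check for value < 2**num_bits:
    every bit satisfies b*(b - 1) == 0, and sum(b_i * 2**i) == value (mod P)."""
    if len(bits) != num_bits:
        return False
    all_boolean = all(b * (b - 1) % P == 0 for b in bits)
    recomposed = sum(b << i for i, b in enumerate(bits)) % P
    return all_boolean and recomposed == value % P
```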

Formal Verification: Proving Logic with NAVe and Nargo

Formal verification of Noir programs uses mathematical techniques to establish the correctness of the compiled code, reducing the risk of vulnerabilities and improving reliability. It differs from traditional testing, which can only demonstrate the presence of errors for the specific inputs tried; formal verification aims to prove the absence of certain classes of errors for all possible inputs. By rigorously analyzing the program’s logic against a formal specification, potential flaws, such as integer overflows, division by zero, or incorrect state transitions, can be identified and eliminated before deployment. This assurance is crucial in security-sensitive applications, where a single vulnerability can have significant consequences.
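The difference between testing and proving the absence of errors shows up even at toy scale. The hypothetical circuit below adds two u8 values in the field without range-checking the result. A few hand-picked tests pass, while checking the property “the result fits in 8 bits” over all 65,536 input pairs finds a counterexample. Exhaustive enumeration is only a stand-in for illustration: it is feasible for two 8-bit inputs, whereas tools such as NAVe use SMT solvers to reason about all inputs symbolically.

```python
P = 2**31 - 1  # toy prime modulus (assumption); large enough that u8 sums never wrap

def add_u8_unchecked(a: int, b: int) -> int:
    """Hypothetical buggy circuit: field addition of two u8 inputs, no range check on the sum."""
    return (a + b) % P

def fits_u8(v: int) -> bool:
    return 0 <= v < 256

def run_tests(cases) -> bool:
    """Testing: check the property only on the chosen inputs."""
    return all(fits_u8(add_u8_unchecked(a, b)) for a, b in cases)

def find_counterexample():
    """Exhaustive check over every pair of u8 inputs; returns the first violation or None."""
    for a in range(256):
        for b in range(256):
            if not fits_u8(add_u8_unchecked(a, b)):
                return a, b
    return None
```

Here `run_tests([(1, 2), (10, 20), (100, 27)])` passes, yet `find_counterexample()` returns `(1, 255)`, whose sum 256 no longer fits in a u8.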

NAVe performs formal verification using Satisfiability Modulo Theories (SMT) solving. It translates the ACIR (Abstract Circuit Intermediate Representation) of a compiled Noir program into SMT-LIB, a standardized input language for SMT solvers, and then uses the cvc5 solver to determine whether the program satisfies the properties being checked. Our implementation and testing show that NAVe can rigorously examine ACIR and either establish that a property holds or, crucially, produce counterexamples that expose potential vulnerabilities in the Noir program’s logic.
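As a rough illustration of this translation, the sketch below turns quadratic constraints of the shape ACIR uses for its arithmetic (assert-zero) opcodes, as we understand it (a sum of multiplication terms, linear terms, and a constant, asserted equal to zero), into SMT-LIB for cvc5’s finite-field theory. It also builds one common query for underconstrained circuits: duplicate every non-input wire, keep input wires shared, and ask whether both copies can satisfy all constraints while disagreeing on an output. A `sat` answer yields a counterexample; `unsat` rules the bug out for that output. The `Expr` type and function names are hypothetical, the encoding is not claimed to match NAVe’s, and we have not run these queries against cvc5; the `QF_FF` logic, `(_ FiniteField p)` sort, `#f<value>m<p>` literals, and `ff.add`/`ff.mul` operators reflect cvc5’s documented finite-field syntax as we understand it.

```python
from dataclasses import dataclass, field

@dataclass
class Expr:
    """Hypothetical stand-in for an ACIR-style arithmetic constraint:
    sum(q * a * b for mul_terms) + sum(q * w for linear) + q_c == 0 (mod p)."""
    mul_terms: list = field(default_factory=list)  # (coefficient, wire_a, wire_b)
    linear: list = field(default_factory=list)     # (coefficient, wire)
    q_c: int = 0

def ff_lit(value: int, p: int) -> str:
    """cvc5 finite-field literal; Python's % maps negative coefficients into [0, p)."""
    return f"#f{value % p}m{p}"

def fold(op: str, args: list) -> str:
    """Nest a binary operator so the output does not rely on n-ary ff.add/ff.mul."""
    out = args[0]
    for a in args[1:]:
        out = f"({op} {out} {a})"
    return out

def expr_to_smt(e: Expr, p: int, name) -> str:
    """Render one constraint as an SMT-LIB assertion; `name` maps wire names to SMT constants."""
    terms = [fold("ff.mul", [ff_lit(q, p), name(a), name(b)]) for q, a, b in e.mul_terms]
    terms += [fold("ff.mul", [ff_lit(q, p), name(w)]) for q, w in e.linear]
    terms.append(ff_lit(e.q_c, p))
    return f"(assert (= {fold('ff.add', terms)} {ff_lit(0, p)}))"

def underconstrained_query(constraints, p, wires, inputs, output) -> str:
    """SMT-LIB asking: can two witnesses agree on `inputs` yet differ on `output`?"""
    def copy(suffix):
        return lambda w: w if w in inputs else f"{w}_{suffix}"
    lines = ["(set-logic QF_FF)", f"(define-sort F () (_ FiniteField {p}))"]
    declared = []
    for suffix in ("1", "2"):
        for w in wires:
            n = copy(suffix)(w)
            if n not in declared:
                declared.append(n)
                lines.append(f"(declare-const {n} F)")
    for suffix in ("1", "2"):
        lines += [expr_to_smt(c, p, copy(suffix)) for c in constraints]
    lines.append(f"(assert (not (= {copy('1')(output)} {copy('2')(output)})))")
    lines.append("(check-sat)")
    return "\n".join(lines)
```

For example, the is-zero gadget with only its first constraint, out + x*inv - 1 = 0, is `Expr(mul_terms=[(1, "x", "inv")], linear=[(1, "out")], q_c=-1)`; passing it to `underconstrained_query` with `inputs={"x"}` and `output="out"` yields a query a solver should report as `sat`, since out is not determined by x.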

NAVe operates on ACIR (Abstract Circuit Intermediate Representation), a design choice that decouples verification from the source language. ACIR serves as an intermediary: Noir code is first compiled into ACIR, and NAVe then performs formal verification on that representation. As a result, any language whose compiler targets ACIR could in principle be verified with NAVe without modifying the verifier itself. Building on a shared intermediate representation thus promotes broader compatibility and opens the door to verifying programs written in languages other than Noir.

Nargo, the package manager and build tool for Noir, supports formal verification through direct integration with NAVe. The integration automates compiling Noir programs to ACIR and submitting the result to NAVe for analysis. In testing on the Noir infrastructure, this integrated workflow verified the correctness of multiple test programs and, where vulnerabilities existed, identified them and presented counterexamples detailing the failing conditions. The automation reduces the manual effort of verification and accelerates the development of secure Noir applications.

The Ripple Effect: Implications and Future Trajectories

Zero-knowledge proof systems represent a paradigm shift in blockchain technology, moving beyond simple transaction recording toward sophisticated, private computation. Traditionally, blockchains required all data to be publicly visible for verification, limiting their use in scenarios demanding confidentiality. These systems, however, allow one party to prove the validity of a statement to another without revealing any information beyond the truth of the statement itself, using cryptographic techniques that verify computations performed on hidden data. Consequently, blockchains can support applications such as private transactions, confidential voting, and secure decentralized identity, while maintaining the integrity and immutability that define the technology. The potential for transforming decentralized finance and data management hinges on this capacity for verifiable yet private on-chain computation.

The integration of Noir, NAVe, and Nargo makes possible a streamlined development experience for zero-knowledge (ZK) applications. Noir serves as a DSL for defining ZK circuits with an emphasis on developer ergonomics; NAVe formally verifies the compiled circuits, using SMT solvers to detect underconstrained code and other bugs; and Nargo, the package manager and CLI tool, simplifies building, testing, and deploying circuits and brings NAVe’s checks into that workflow. Together, they let developers focus on the logic of their applications rather than on the complexities of cryptographic implementation and constraint-level correctness, lowering the barrier to entry for building privacy-focused decentralized applications and fostering innovation within the ZK ecosystem. The tooling supports rapid prototyping and efficient testing, and ultimately the creation of scalable, verifiable computations on blockchain platforms.

Zero-knowledge proof systems are poised to reshape decentralized finance and identity management by offering solutions that simultaneously enhance scalability and protect user privacy. Current blockchain designs often force a trade-off between these two aspects; this technology, however, allows transactions and data to be verified without revealing the underlying information. This capability enables confidential financial transactions, such as private payments and shielded lending, and lets individuals prove claims about themselves, such as age or credentials, without disclosing the data itself. This has significant implications for self-sovereign identity, reducing the risks of centralized data storage and giving individuals greater control over personal information, ultimately fostering more secure and efficient systems across a range of applications.

Performance evaluation of the SMT solver configurations used with NAVe reveals a nuanced landscape in which no single configuration consistently dominates. Across programs of varying complexity, solver efficacy proved highly context-dependent, which suggests deploying the configurations as complementary tools, choosing or combining them according to the program at hand. A significant bottleneck emerged for programs requiring extensive range checks, which are common wherever integer-typed values appear: their verification time increased substantially. This underscores the need for higher-level abstractions and optimized techniques that reduce the verification burden of such constraint systems, paving the way for greater scalability in ZK applications.
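One way to act on this complementarity is a portfolio: run several solver configurations on the same query in parallel and keep the first definitive answer. The sketch below shows the control flow with Python threads; the configuration functions are hypothetical stand-ins that return "sat", "unsat", or "unknown", and we do not claim NAVe works this way. A real portfolio would launch separate solver processes so that the losing runs can be killed, since Python threads cannot be forcibly stopped.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

def run_portfolio(configs, query, timeout=None):
    """Run every configuration on the same query in parallel and return
    (config_name, verdict) for the first definitive verdict ("sat" or "unsat"),
    or (None, "unknown") if no configuration gives one before the timeout."""
    pool = ThreadPoolExecutor(max_workers=len(configs))
    pending = {pool.submit(solve, query): name for name, solve in configs.items()}
    deadline = None if timeout is None else time.monotonic() + timeout
    try:
        while pending:
            remaining = None if deadline is None else max(0.0, deadline - time.monotonic())
            done, _ = wait(pending, timeout=remaining, return_when=FIRST_COMPLETED)
            if not done:
                break  # deadline reached with nothing finished
            for future in done:
                name = pending.pop(future)
                verdict = future.result()
                if verdict in ("sat", "unsat"):
                    return name, verdict
        return None, "unknown"
    finally:
        # Do not block on the remaining runs; cancel any that have not started.
        pool.shutdown(wait=False, cancel_futures=True)

# Hypothetical stand-in configurations, for demonstration only.
def slow_config(query):
    time.sleep(0.3)
    return "unsat"

def gives_up_config(query):
    return "unknown"

def medium_config(query):
    time.sleep(0.05)
    return "unsat"
```

With all three stand-ins, the portfolio skips the immediate "unknown", returns the medium configuration’s "unsat", and never waits for the slow one.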

The approach taken here, exemplified by NAVe’s translation of ACIR into SMT-LIB constraints, reflects a fundamental tenet of rigorous inquiry. It is not enough to believe that a Noir program behaves as intended; its behavior must be demonstrably proven, with every constraint subjected to relentless logical scrutiny. This echoes David Hilbert’s assertion: “We must be able to answer yes or no to those questions that are allowed to be asked.” NAVe, in essence, builds a system for asking those questions, formally verifying the absence of underconstrained code and safeguarding the integrity of zero-knowledge proofs by translating the program into a language that logic can definitively assess. Reality, much like a complex Noir program, is open source; NAVe offers a tool to begin reading it.

Where Do We Go From Here?

The exercise of formally verifying code, particularly within the constrained yet rapidly evolving landscape of zero-knowledge proofs, reveals a fundamental truth: perfect correctness is a moving target. NAVe establishes a method for probing Noir programs by translating their internal logic into the rigid language of SMT solvers. Yet the act of translation introduces its own potential distortions. A bug reported by NAVe is not necessarily a flaw in the intent of the Noir code; it may instead be a consequence of how that intent is mapped onto a fundamentally different computational model. The system is only as strong as its weakest interpretive layer.

Future work will inevitably push beyond the current limitations of SMT solving. As zero-knowledge circuits grow in complexity, as demand ensures they will, the computational cost of formal verification will escalate. The challenge is not simply scaling existing techniques but exploring alternative approaches, perhaps embracing probabilistic verification or using machine learning to identify likely bug candidates. The pursuit of absolute certainty, it seems, will be perpetually outpaced by the ingenuity of those designing ever more intricate systems.

Ultimately, NAVe and its successors will function less as bug detectors and more as stress tests. The real value lies not in eliminating all errors, but in systematically revealing the boundaries of what’s verifiable, forcing a continual refinement of both the code and the tools used to examine it. Error, after all, is often a more instructive teacher than flawless execution.

Original article: https://arxiv.org/pdf/2601.09372.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-16 05:45