Author: Denis Avetisyan

Researchers have developed Multiverse, a transactional memory system that intelligently balances optimistic and multiversioned concurrency control to boost performance across diverse workloads.

Multiverse dynamically combines unversioned and multiversioned approaches to efficiently support both short transactions and long-running range queries, offering significant gains over existing STM implementations.

While software transactional memory (STM) simplifies concurrent data structure design, existing systems struggle to efficiently support both short, frequent transactions and long-running reads accessing frequently updated data. This paper introduces Multiverse: Transactional Memory with Dynamic Multiversioning, a novel opaque STM that dynamically combines unversioned and multiversioned approaches to overcome this limitation. Our experiments demonstrate that Multiverse achieves state-of-the-art performance for common-case workloads while significantly outperforming existing STMs on applications with long-running range queries. Could this dynamic approach unlock new levels of concurrency and scalability in shared-memory parallel programming?

The Inevitable Friction of Concurrency

Conventional Software Transactional Memory (STM) systems promise a simpler approach to concurrent programming, but they frequently hit performance limits when many threads compete intensely for the same shared data. Traditional STM implementations typically rely on locking or versioning schemes to ensure consistency, and the overhead of these schemes grows with concurrency. As more threads access and modify the same data, abort rates rise and throughput degrades. Consequently, applications built on these systems often fail to scale and cannot fully exploit multi-core processors. The core issue is that these systems cannot efficiently manage the conflicts that naturally occur in highly concurrent scenarios, which restricts parallel execution and overall application throughput.

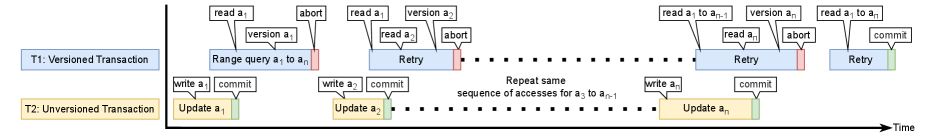

Conventional Software Transactional Memory (STM) systems, regardless of whether they employ optimistic or pessimistic concurrency control, invariably confront a fundamental trade-off impacting application performance. Optimistic approaches, which assume conflicts are rare, can suffer from high abort rates when contention does occur, as transactions repeatedly retry upon detecting conflicts – this leads to wasted computational effort. Conversely, pessimistic STM implementations, which lock resources preemptively, minimize aborts but introduce contention that can serialize access to shared data, effectively negating the benefits of concurrency. This inherent tension means that simply choosing between optimism and pessimism isn’t sufficient; instead, system designers must carefully balance the probability of conflicts with the cost of retries or the potential for blocking, and often tailor their approach to specific application workloads to achieve acceptable responsiveness and scalability.
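The trade-off can be made concrete with a toy example. The sketch below is illustrative only and is not taken from Multiverse: `VersionedCell`, `optimistic_update`, `pessimistic_update`, and the `interfere` hook are hypothetical names, and the "concurrent" writer is simulated deterministically rather than run on a real thread.

```python
import threading


class VersionedCell:
    """A shared value with a version counter and a lock (hypothetical helper)."""

    def __init__(self, value=0):
        self.value = value
        self.version = 0
        self.lock = threading.Lock()


def pessimistic_update(cell, fn):
    """Lock first, then read and write. Never aborts, but serializes access."""
    with cell.lock:
        cell.value = fn(cell.value)
        cell.version += 1


def optimistic_update(cell, fn, interfere=None):
    """Read without locking, validate at commit, retry on conflict.

    `interfere` is an assumed test hook that simulates a concurrent writer
    sneaking in between the read and the commit of the first attempt.
    Returns the number of aborted attempts.
    """
    aborts = 0
    while True:
        seen_version = cell.version      # read the version before the value
        new_value = fn(cell.value)       # speculative work, no lock held
        if interfere is not None:
            interfere()
            interfere = None
        with cell.lock:
            if cell.version == seen_version:   # nobody committed in between
                cell.value = new_value
                cell.version += 1
                return aborts
        aborts += 1                      # conflict detected: the work is wasted


cell = VersionedCell(10)
aborts = optimistic_update(
    cell,
    lambda v: v + 1,
    interfere=lambda: pessimistic_update(cell, lambda v: v + 100),
)
print(aborts, cell.value)
```

The optimistic path wastes one attempt when the simulated writer intervenes, while the pessimistic path never retries but holds the lock for the whole update.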

The inherent difficulties in scaling traditional Software Transactional Memory (STM) systems necessitate a shift towards novel designs for concurrent data management. Existing STM approaches, while conceptually elegant, frequently encounter performance cliffs when multiple threads aggressively compete for the same shared resources. This contention often manifests as increased abort rates – where transactions are repeatedly forced to retry – or as serialization bottlenecks that diminish the benefits of parallelism. Consequently, research is increasingly focused on STM variants that prioritize contention management through techniques such as hybrid locking, optimistic concurrency with adaptive contention resolution, and transactional memory designs that leverage hardware support for finer-grained synchronization. These innovative approaches aim to strike a more effective balance between minimizing contention, reducing abort overhead, and maximizing throughput in highly concurrent environments, ultimately unlocking the full potential of multi-threaded applications.

A Hybrid Architecture: Embracing the Inevitable

Multiverse utilizes a hybrid architecture that integrates the benefits of both unversioned Software Transactional Memory (STM) and Multiversion Concurrency Control (MVCC). Traditional unversioned STM offers low latency and high throughput due to its direct, in-place data modification, but can suffer from contention and livelocks. Conversely, MVCC provides high concurrency by allowing read operations to access consistent snapshots of data without blocking, but introduces overhead related to version management and garbage collection. By combining these approaches, Multiverse aims to deliver the performance of unversioned STM while mitigating contention through the use of MVCC-style techniques when necessary, resulting in a system optimized for both speed and concurrency.
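To illustrate what a "consistent snapshot" means in MVCC-style reads, here is a minimal sketch of a per-address version chain. It assumes monotonically increasing commit timestamps; the class and method names (`VersionChain`, `install`, `read_at`) are hypothetical and do not describe Multiverse's actual data layout.

```python
import bisect


class VersionChain:
    """Versions of one address, ordered by commit timestamp (illustrative)."""

    def __init__(self, initial_value):
        self.timestamps = [0]            # timestamp 0 holds the initial value
        self.values = [initial_value]

    def install(self, commit_ts, value):
        """Append a new committed version; timestamps must strictly increase."""
        if commit_ts <= self.timestamps[-1]:
            raise ValueError("commit timestamps must increase")
        self.timestamps.append(commit_ts)
        self.values.append(value)

    def read_at(self, snapshot_ts):
        """Return the newest value committed at or before snapshot_ts (>= 0)."""
        idx = bisect.bisect_right(self.timestamps, snapshot_ts) - 1
        return self.values[idx]


chain = VersionChain("a")
chain.install(5, "b")
chain.install(9, "c")
print(chain.read_at(8))   # a reader whose snapshot is 8 ignores the write at 9
```

A long-running reader that fixed its snapshot at timestamp 8 keeps seeing `"b"` no matter how many later versions are installed, which is why it never has to block or abort because of concurrent writers. The cost is the extra storage and the eventual need to reclaim old versions.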

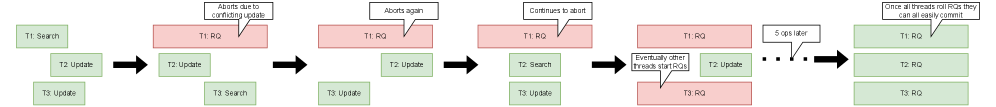

Multiverse utilizes a dual-mode transaction system, dynamically switching between ‘U’ and ‘Q’ modes to optimize performance. ‘U’ mode, representing unversioned STM, prioritizes speed by modifying data directly and suits short transactions under low contention. ‘Q’ mode employs multiversion concurrency control (MVCC): older versions of data are retained so that a long-running reader can work from a consistent snapshot instead of being blocked or aborted by concurrent updates. The system evaluates transaction characteristics and workload patterns to choose a mode for each transaction, allowing for adaptive concurrency management and improved resource utilization.

Multiverse reduces contention and abort rates by adjusting dynamically to workload characteristics. Unversioned optimistic STMs can abort a long-running read again and again when the data it touches is frequently updated, while multiversioned designs pay version-management overhead even on short transactions that do not need it. Multiverse addresses this by allowing transactions to operate in either the ‘Unversioned’ (U) or the ‘Query’ (Q) mode. The ‘Unversioned’ mode prioritizes speed for low-contention scenarios, while the ‘Query’ mode leverages multiversioning to reduce contention in high-contention environments. This dynamic selection, based on observed transaction behavior, reduces abort rates and improves throughput, enhancing the overall performance and scalability of the system.
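The article does not spell out the policy that decides when a transaction changes mode. Purely as an illustration, the sketch below assumes a simple heuristic: try the unversioned mode a few times and fall back to the versioned mode after repeated aborts. The names `Mode`, `TxnAborted`, `run_adaptive`, and the thresholds are all hypothetical and should not be read as Multiverse's actual mechanism.

```python
from enum import Enum


class Mode(Enum):
    U = "unversioned"
    Q = "versioned"


class TxnAborted(Exception):
    """Raised by a transaction body when it detects a conflict."""


def run_adaptive(body, max_u_attempts=3, max_attempts=10):
    """Run `body(mode)` until it commits.

    Assumed policy: the first `max_u_attempts` tries use Mode.U, and any
    further tries use Mode.Q. Returns (result, mode_used, attempts).
    """
    for attempt in range(1, max_attempts + 1):
        mode = Mode.U if attempt <= max_u_attempts else Mode.Q
        try:
            return body(mode), mode, attempt
        except TxnAborted:
            continue                      # retry, possibly in the other mode
    raise TxnAborted(f"gave up after {max_attempts} attempts")


def long_range_query(mode):
    """Stand-in for a long read that keeps losing to updaters in U mode."""
    if mode is Mode.U:
        raise TxnAborted("read set invalidated by a concurrent update")
    return "snapshot result"


print(run_adaptive(long_range_query))
```

A short update that commits on its first try never leaves the unversioned fast path, so under this assumed policy the versioning cost is paid only by transactions that have demonstrated they need it.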

Optimized Mechanisms: The Cost of Consistency

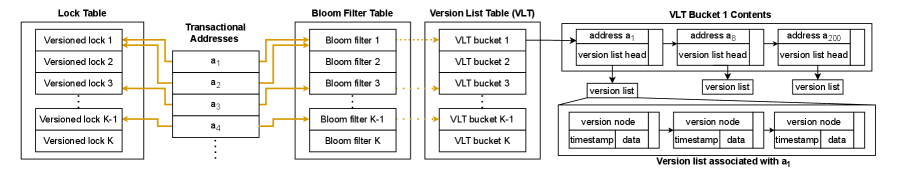

Multiverse employs a Bloom Filter and a Version List Table to manage versioned addresses with reduced overhead. The Bloom Filter serves as a probabilistic data structure to quickly determine if an address has been versioned, avoiding costly lookups in the Version List Table when no prior version exists. The Version List Table then stores the chain of versions for each address that has been versioned, facilitating efficient access to historical data. This two-tiered approach minimizes memory consumption and lookup times compared to storing version histories for all addresses, optimizing performance for long-running transactional applications.
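The two-tier lookup can be sketched as follows. This is a minimal illustration under stated assumptions: the filter size, the number of hash functions, the use of `hashlib.blake2b`, and the names `BloomFilter`, `VersionListTable`, and `table_probes` are choices made for this example, not details from the paper.

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0                     # a Python int used as a bit set

    def _positions(self, address):
        for i in range(self.num_hashes):
            digest = hashlib.blake2b(
                f"{i}:{address}".encode(), digest_size=8
            ).digest()
            yield int.from_bytes(digest, "big") % self.num_bits

    def add(self, address):
        for pos in self._positions(address):
            self.bits |= 1 << pos

    def might_contain(self, address):
        return all((self.bits >> pos) & 1 for pos in self._positions(address))


class VersionListTable:
    """Maps versioned addresses to their version lists, gated by the filter."""

    def __init__(self):
        self.filter = BloomFilter()
        self.chains = {}                  # address -> [(timestamp, value), ...]
        self.table_probes = 0             # how often the slow path was taken

    def add_version(self, address, timestamp, value):
        self.filter.add(address)
        self.chains.setdefault(address, []).append((timestamp, value))

    def versions_of(self, address):
        if not self.filter.might_contain(address):
            return []                     # fast path: definitely unversioned
        self.table_probes += 1            # slow path: may be a false positive
        return self.chains.get(address, [])


table = VersionListTable()
print(table.versions_of(0x20), table.table_probes)   # filter is empty: no probe
table.add_version(0x10, 1, "a")
table.add_version(0x10, 2, "b")
print(table.versions_of(0x10))
```

Because a Bloom filter never reports a false negative, skipping the table on a negative answer is always safe. A false positive only costs one unnecessary table probe, which is why the slow path still has to handle a missing entry.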

Lock Tables within the system manage concurrent access to data, differentiated by transaction versioning. Unversioned transactions utilize encounter time locking, granting access based on immediate availability and preventing conflicts during read/write operations. Conversely, versioned transactions employ deferred commit time locking, postponing lock acquisition until the commit phase. This approach minimizes lock contention during transaction execution, enhancing concurrency, and only definitively reserving resources when data modification is confirmed. The combination of these locking strategies optimizes performance based on whether transactions require multi-version concurrency control or operate on current data only.
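The difference between the two locking disciplines is easiest to see side by side. The following sketch is a simplification under assumed names (`LockTable`, `EncounterTimeTxn`, `CommitTimeTxn`, `Conflict`): it covers writes only, omits read validation, and aborts on any lock conflict instead of waiting.

```python
import threading


class Conflict(Exception):
    """Raised when a needed lock is already held by another transaction."""


class LockTable:
    """Fixed array of locks; addresses hash to slots (illustrative)."""

    def __init__(self, size=64):
        self.locks = [threading.Lock() for _ in range(size)]

    def slot(self, address):
        return hash(address) % len(self.locks)


class EncounterTimeTxn:
    """Locks on first write, updates in place, keeps an undo log."""

    def __init__(self, memory, table):
        self.memory, self.table = memory, table
        self.held, self.undo = set(), []

    def write(self, address, value):
        slot = self.table.slot(address)
        if slot not in self.held:
            if not self.table.locks[slot].acquire(blocking=False):
                self.abort()
                raise Conflict(address)
            self.held.add(slot)
        self.undo.append((address, self.memory[address]))
        self.memory[address] = value          # visible in memory immediately

    def commit(self):
        self._release()

    def abort(self):
        for address, old in reversed(self.undo):
            self.memory[address] = old        # roll back in-place writes
        self._release()

    def _release(self):
        for slot in self.held:
            self.table.locks[slot].release()
        self.held.clear()
        self.undo.clear()


class CommitTimeTxn:
    """Buffers writes and acquires locks only inside commit()."""

    def __init__(self, memory, table):
        self.memory, self.table = memory, table
        self.write_set = {}

    def write(self, address, value):
        self.write_set[address] = value       # memory is untouched for now

    def commit(self):
        acquired = []
        try:
            for slot in sorted({self.table.slot(a) for a in self.write_set}):
                if not self.table.locks[slot].acquire(blocking=False):
                    raise Conflict(slot)
                acquired.append(slot)
            self.memory.update(self.write_set)
        finally:
            for slot in acquired:
                self.table.locks[slot].release()


memory, table = {1: "x", 2: "y"}, LockTable()
t1 = EncounterTimeTxn(memory, table)
t1.write(1, "a")                              # lock taken now, memory[1] == "a"
t2 = CommitTimeTxn(memory, table)
t2.write(1, "b")                              # no lock yet, memory unchanged
```

In this sketch the commit-time transaction holds its locks only for the duration of `commit()`, whereas the encounter-time transaction holds them from its first write until it finishes, in exchange for skipping the write buffer.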

Epoch Based Reclamation manages memory within the Multiverse system by dividing the lifetime of data into sequential epochs. Each epoch represents a period during which new data is allocated and older data is considered potentially reclaimable. During each epoch transition, the system identifies and releases data no longer referenced by active transactions or snapshots, effectively preventing memory leaks and reducing fragmentation. This approach is particularly beneficial for long-running applications where continuous allocation and deallocation of resources can lead to significant performance degradation if not efficiently managed. Reclamation occurs in discrete phases tied to epoch boundaries, minimizing the overhead associated with continuous garbage collection and ensuring predictable memory management behavior.
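A single-threaded sketch may help make the epoch rule concrete. It assumes the simplest possible scheme: an object retired in epoch e can be freed once every active reader entered in a later epoch. The names (`EpochManager`, `enter`, `exit`, `retire`, `advance`) are hypothetical, and a real concurrent implementation needs atomic operations and usually a more conservative grace period.

```python
class EpochManager:
    """Toy epoch-based reclamation, simulated without real threads."""

    def __init__(self):
        self.global_epoch = 0
        self.active = {}        # reader id -> epoch in which it entered
        self.retired = []       # (epoch of retirement, object)
        self.reclaimed = []     # objects that have been freed, in order

    def enter(self, reader_id):
        self.active[reader_id] = self.global_epoch

    def exit(self, reader_id):
        del self.active[reader_id]

    def retire(self, obj):
        """Mark an unlinked object (e.g. an old version) as garbage."""
        self.retired.append((self.global_epoch, obj))

    def advance(self):
        """Move to the next epoch and free whatever is provably unreachable."""
        self.global_epoch += 1
        # Readers that entered in epoch e may still hold objects retired in e
        # or later, so only objects retired strictly before the oldest active
        # reader's epoch are safe to free.
        safe_before = min(self.active.values(), default=self.global_epoch)
        keep = []
        for epoch, obj in self.retired:
            if epoch < safe_before:
                self.reclaimed.append(obj)
            else:
                keep.append((epoch, obj))
        self.retired = keep


em = EpochManager()
em.enter("r1")              # reader r1 starts in epoch 0
em.retire("v1")             # v1 is retired in epoch 0; r1 may still see it
em.advance()                # r1 is still active, so nothing is freed
em.exit("r1")
em.enter("r2")              # r2 starts in epoch 1 and cannot reach v1
em.retire("v2")
em.advance()                # v1 is freed; v2 must wait for r2
print(em.reclaimed)
```

The key property is that reclamation decisions need only the oldest active epoch, not per-object reference counts, which keeps the per-read overhead low.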

Opacity guarantees that every transaction, including one that later aborts, observes a consistent snapshot of shared memory. Transactions only observe committed data; uncommitted modifications made by concurrent transactions are invisible. If a transaction aborts, its changes are rolled back and its effects are never visible to other transactions, preserving data consistency. Because even a doomed transaction never acts on a mix of old and new values, it cannot, for example, loop forever or follow an invalid pointer before the abort takes effect. This isolation rules out anomalies such as dirty reads, non-repeatable reads, and phantom reads, simplifying application development by providing a predictable execution environment regardless of transaction outcome.
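One well-known way to obtain this guarantee is to validate every read against a global version clock, in the style of the TL2 algorithm. The sketch below shows that technique only as an illustration of the property; it is not a description of how Multiverse enforces opacity, and `Store`, `ReadTxn`, and `Aborted` are hypothetical names.

```python
class Aborted(Exception):
    """Raised as soon as a read would expose an inconsistent state."""


class Store:
    """Shared memory where each address carries the version that wrote it."""

    def __init__(self, initial):
        self.clock = 0
        self.data = {addr: (value, 0) for addr, value in initial.items()}

    def commit_writes(self, writes):
        """Atomically publish a writer's updates under a new clock value."""
        self.clock += 1
        for addr, value in writes.items():
            self.data[addr] = (value, self.clock)


class ReadTxn:
    """Read-only transaction that validates each read when it happens."""

    def __init__(self, store):
        self.store = store
        self.read_version = store.clock   # snapshot point of this transaction

    def read(self, addr):
        value, version = self.store.data[addr]
        if version > self.read_version:
            # Written after our snapshot: abort now instead of returning a
            # value that is inconsistent with what we have already read.
            raise Aborted(addr)
        return value


store = Store({"x": 5, "y": -5})          # invariant: x + y == 0
txn = ReadTxn(store)
x = txn.read("x")                          # sees x == 5
store.commit_writes({"x": 7, "y": -7})     # a writer commits in between
try:
    y = txn.read("y")                      # would give 5 + (-7) != 0
except Aborted:
    y = None                               # the transaction must restart
print(x, y)
```

Without the version check the reader would have observed `x == 5` and `y == -7`, a state that never existed. With it, the transaction aborts before that value can reach application code.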

Performance and Scalability: The Illusion of Control

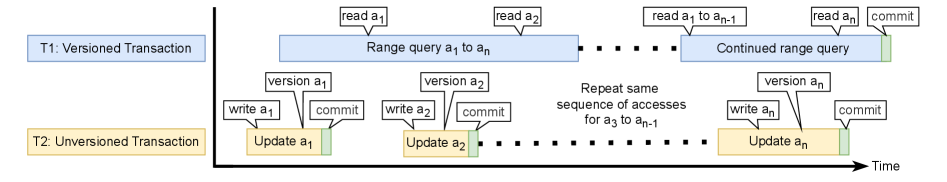

Multiverse distinguishes itself through robust support for range queries, a critical capability for applications demanding complex analytical processing. Unlike many concurrent data structures which struggle with queries spanning large datasets, Multiverse efficiently retrieves data within specified ranges without incurring substantial performance penalties. This is achieved through a novel transaction model that minimizes contention during read operations, allowing multiple transactions to concurrently access and process data within different ranges. Consequently, applications like financial modeling, scientific simulations, and real-time data analysis benefit from significantly faster query execution and improved overall responsiveness, even when dealing with massive, rapidly changing datasets. The system’s ability to efficiently handle range queries positions it as a powerful solution for data-intensive analytical workloads where timely access to specific data subsets is paramount.

To rigorously test its limits, Multiverse employs dedicated updater threads which intentionally create high-contention scenarios – situations where multiple transactions repeatedly attempt to modify the same data. This approach allows researchers to move beyond theoretical benchmarks and evaluate the system’s real-world performance under stress. Results consistently demonstrate Multiverse’s robustness; even with intense competition for resources, the system maintains stability and predictable performance. Crucially, these simulations also highlight Multiverse’s scalability, showcasing its ability to efficiently manage increasing workloads and transaction rates without significant performance degradation, positioning it as a viable solution for demanding concurrent applications.

Multiverse distinguishes itself through a unique ability to dynamically adjust its operational parameters in response to varying workload demands, notably achieving up to 50 times greater energy efficiency than competing Software Transactional Memory (STM) systems when processing extended read-only queries. This efficiency isn’t a static characteristic; rather, the system intelligently analyzes the nature of incoming requests – identifying long-running reads – and proactively optimizes resource allocation to minimize energy consumption. This adaptive approach circumvents the typical energy drain associated with continuously tracking and managing transactions that primarily involve data retrieval, offering a substantial benefit in data-intensive applications and analytical workloads where read operations heavily outweigh write operations.

Multiverse consistently matches or surpasses the transaction processing rates of established Software Transactional Memory (STM) systems when handling typical workloads devoid of extended read-only operations. However, the system truly distinguishes itself when coupled with dedicated updaters (components designed to simulate high-contention environments) and when leveraging range queries for data retrieval. These combined features unlock substantial performance gains, demonstrating Multiverse’s ability to not only maintain parity with existing STMs but to significantly outperform them in scenarios demanding efficient handling of complex data access patterns and concurrent modifications. This outperformance underscores the system’s adaptability and robustness in real-world, high-demand applications.

The pursuit of transactional memory, as demonstrated in Multiverse, echoes a fundamental truth about complex systems. Optimizations intended to address present limitations often introduce unforeseen constraints. This work’s dynamic multiversioning, which balances unversioned and multiversioned approaches, isn’t a solution, but rather a calculated trade-off. As Ada Lovelace observed, “The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform.” Multiverse doesn’t solve concurrency; it executes a precisely defined strategy. Scalability, it seems, is simply the word used to justify the inherent complexity of managing state across parallel operations, a prophecy of future failure neatly contained within a carefully constructed architecture.

What Lies Beyond?

Multiverse, in its attempt to tame the inherent chaos of concurrent access, offers a momentary reprieve, a localized decrease in entropy. It acknowledges, implicitly, that the pursuit of a universally optimal concurrency control mechanism is a fool’s errand. There are no best practices – only survivors. The dynamic adjustment between unversioned and multiversioned approaches is a tacit admission that any fixed architecture will eventually succumb to unforeseen workloads. This is not a solution, but a postponement of inevitable failure.

The true challenge, then, doesn’t lie in optimizing existing transactional memory paradigms, but in recognizing them as inherently fragile. Future work must confront the reality that order is merely a cache between two outages. Investigating adaptive systems that learn from contention patterns – systems that can dynamically reconfigure themselves at runtime, perhaps even evolving their internal data structures – offers a more promising, though significantly more complex, path.

Ultimately, the field must move beyond the question of “how do we prevent conflicts?” and embrace the more fundamental question of “how do we tolerate them?” The goal isn’t to eliminate inconsistency, but to contain it, to make it transient and localized. Architecture isn’t about building structures; it’s about postponing chaos.

Original article: https://arxiv.org/pdf/2601.09735.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-18 09:47