Author: Denis Avetisyan

A new study explores the potential to uncover ultraheavy diquarks decaying into multijet final states at the upcoming High-Luminosity Large Hadron Collider.

This research details a machine learning approach to identifying color-sextet scalar diquarks and vectorlike quarks via the $uχ$ decay channel.

Despite the success of the Standard Model, fundamental questions regarding the origin of mass and the nature of dark matter remain open, motivating searches for physics beyond its confines. This paper, ‘HL-LHC sensitivity to an ultraheavy $S_{uu}$ diquark in the $uχ$ channel’, investigates the potential to discover color-sextet scalar diquarks and vectorlike quarks at the High-Luminosity LHC through an analysis of multijet final states originating from the $S_{uu} \to u\chi$ decay channel. Employing machine learning techniques, the authors demonstrate improved sensitivity to these exotic particles in regions of parameter space where the branching ratio to this channel is significant, offering a complementary search strategy at the HL-LHC. Could this approach unlock new insights into the nature of strong interactions and the existence of previously undetected particles?

Beyond the Standard Model: Seeking a More Complete Universe

Despite its remarkable predictive power and consistent validation through decades of experimentation, the Standard Model of particle physics remains incomplete. This foundational theory, which describes the fundamental forces and particles of the universe, fails to account for several observed phenomena, including the existence of dark matter and dark energy, the origin of neutrino masses, and the matter-antimatter asymmetry. These shortcomings suggest the existence of physics beyond the Standard Model, prompting researchers to pursue new theoretical frameworks and experimental searches for undiscovered particles and interactions. The quest extends beyond simply patching the existing model; it aims to uncover a more complete and fundamental understanding of the universe, potentially revealing hidden symmetries, extra dimensions, or entirely new forces governing the cosmos.

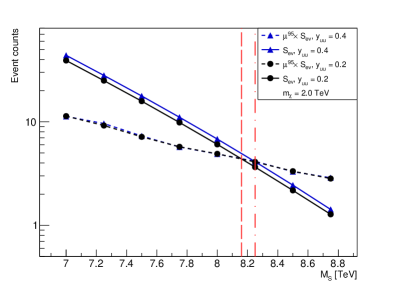

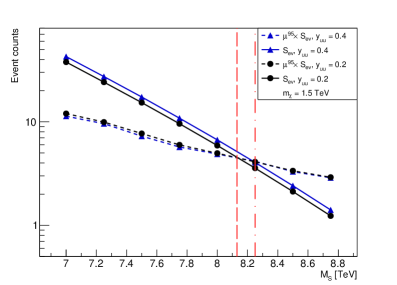

The search for physics beyond the Standard Model increasingly focuses on hypothetical particles that could address these open questions. Among these, scalar diquarks are a compelling possibility. They are hypothetical spin-0 bosons that couple to pairs of quarks and carry color charge, here in the sextet representation of the QCD color group. Searches at the Large Hadron Collider, and projections for its high-luminosity upgrade, aim to probe such particles at masses of several TeV; this study projects sensitivity up to about 8.2 TeV. The detection strategy relies on identifying distinctive decay signatures arising from the diquark's interactions. These would appear as resonances in collision data, that is, localized excesses in the invariant-mass distribution of the decay products. A discovery would validate theoretical predictions and open a new window into the fundamental forces governing the universe and the nature of strong interactions.

Simulating the Invisible: Recreating Particle Interactions

Monte Carlo simulations are fundamental to high-energy physics research because they predict the outcomes of particle collisions, which are governed by probabilistic processes described by quantum field theory. Computing complete final states analytically is intractable for realistic collisions. Instead, these simulations generate large samples of individual collision events drawn from the predicted probability distributions. They model particle production and decay, sampling the relevant interaction pathways according to their probabilities, and allow physicists to compare theoretical predictions with data collected by detectors such as those at the Large Hadron Collider. The resulting simulated distributions are used to estimate signal and background rates, enabling the discovery and characterization of new particles and phenomena.
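The core idea is to draw events at random from predicted distributions and then count them. A deliberately crude toy can show this. The sketch below is not a physics generator. It assumes a Gaussian resonance peak on a falling exponential background, with all masses (in GeV), widths, and event counts chosen purely for illustration. It then counts how many events of each type land in a mass window around the peak.

```python
import random

def sample_invariant_masses(n_signal, n_background, m_res, width, slope, m_min, rng):
    """Toy event generator: a Gaussian resonance on a falling exponential background.

    All shapes and numbers are hypothetical placeholders, not the paper's model.
    """
    signal = [rng.gauss(m_res, width) for _ in range(n_signal)]
    # Exponential background starting at m_min with mean offset `slope`
    background = [m_min + rng.expovariate(1.0 / slope) for _ in range(n_background)]
    return signal, background

def count_in_window(masses, lo, hi):
    """Number of events whose mass lies in [lo, hi]."""
    return sum(lo <= m <= hi for m in masses)

rng = random.Random(42)  # fixed seed so the toy is reproducible
sig, bkg = sample_invariant_masses(
    n_signal=500, n_background=50_000, m_res=8000.0, width=400.0,
    slope=800.0, m_min=2000.0, rng=rng)
lo, hi = 7200.0, 8800.0  # +/- 2 widths around the hypothetical peak
s = count_in_window(sig, lo, hi)
b = count_in_window(bkg, lo, hi)
print(f"signal in window: {s}, background in window: {b}")
```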

MadGraph5_aMC@NLO and Pythia8 are software frameworks used in high-energy physics to simulate particle collisions. MadGraph5_aMC@NLO computes the matrix elements that give the probabilities of the hard-scattering processes, at leading or next-to-leading order in perturbative quantum chromodynamics (pQCD), and generates parton-level events from them. Pythia8 then takes these events and simulates their subsequent evolution. This covers the parton shower, hadronization (in which quarks and gluons form observable hadrons), the underlying event, and multiple parton interactions. Together these tools account for complexities such as quantum interference and the decays of unstable particles. They produce event samples that approximate the conditions observed at the Large Hadron Collider.
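Generators handle decays of unstable particles with full matrix elements, spin correlations, and boosts to the laboratory frame. The minimal sketch below covers only the simplest ingredient: an isotropic two-body decay of a particle at rest. An example is $S_{uu} \to u\chi$ with the up quark treated as massless. The 8 TeV and 3 TeV masses are hypothetical illustration values, not parameters from the paper.

```python
import math
import random

def two_body_momentum(M, m1, m2):
    """Daughter momentum magnitude in the rest frame of a parent of mass M (GeV)."""
    term = (M ** 2 - (m1 + m2) ** 2) * (M ** 2 - (m1 - m2) ** 2)
    if term < 0:
        raise ValueError("decay is kinematically forbidden")
    return math.sqrt(term) / (2.0 * M)

def decay_at_rest(M, m1, m2, rng):
    """Isotropic two-body decay at rest; returns two (E, px, py, pz) tuples."""
    p = two_body_momentum(M, m1, m2)
    cos_t = rng.uniform(-1.0, 1.0)  # uniform in cos(theta) gives an isotropic direction
    sin_t = math.sqrt(1.0 - cos_t ** 2)
    phi = rng.uniform(0.0, 2.0 * math.pi)
    px, py, pz = p * sin_t * math.cos(phi), p * sin_t * math.sin(phi), p * cos_t
    d1 = (math.hypot(p, m1), px, py, pz)     # E = sqrt(p^2 + m^2)
    d2 = (math.hypot(p, m2), -px, -py, -pz)  # back to back: total momentum is zero
    return d1, d2

# Hypothetical masses: an 8 TeV diquark decaying to a massless u quark and a 3 TeV chi.
rng = random.Random(1)
u, chi = decay_at_rest(8000.0, 0.0, 3000.0, rng)
print(two_body_momentum(8000.0, 0.0, 3000.0))  # (M^2 - m^2) / (2M) = 3437.5
```

Energy and momentum conservation hold by construction. The two daughter energies always sum to the parent mass, whatever direction is drawn.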

High-energy physics simulations rely heavily on accurate input parameters to predict collision outcomes. Parton distribution functions (PDFs) are especially important. They give the probability of finding a quark or gluon carrying a fraction $x$ of the proton's momentum, and therefore directly set the predicted production cross sections. This analysis uses the NNPDF23LO set, a leading-order PDF set from the NNPDF collaboration. Probing masses up to approximately 8 TeV puts particular weight on the PDFs. Producing such heavy states requires partons carrying a large fraction of the proton's momentum, where PDFs are small and less well constrained. Inaccuracies there translate directly into uncertainties on the predicted signal rate and hence on the reach of the search.
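At leading order the hadronic cross section factorizes as $\sigma = \sum_{ij} \int dx_1\, dx_2\, f_i(x_1,\mu)\, f_j(x_2,\mu)\, \hat\sigma_{ij}(x_1 x_2 s)$. A resonance of mass $M$ produced at rapidity $y$ therefore needs $x_1 x_2 = M^2/s$ and $x_1/x_2 = e^{2y}$. The short sketch below evaluates these momentum fractions. It assumes the HL-LHC collision energy of $\sqrt{s} = 14$ TeV and uses the 8.2 TeV scale quoted in this article. At central rapidity both partons must carry close to 59% of their proton's momentum, deep in the large-$x$ region.

```python
import math

def momentum_fractions(mass, sqrt_s, y):
    """Leading-order parton momentum fractions for a state of given mass and rapidity.

    Uses x1 * x2 = tau = mass^2 / s and x1 / x2 = exp(2y).
    """
    tau = (mass / sqrt_s) ** 2
    y_max = -0.5 * math.log(tau)  # kinematic limit on |y| (keeps x1, x2 <= 1)
    if abs(y) > y_max:
        raise ValueError("rapidity outside kinematic range")
    x1 = math.sqrt(tau) * math.exp(y)
    x2 = math.sqrt(tau) * math.exp(-y)
    return x1, x2

# Assumed HL-LHC energy of 14 TeV; 8.2 TeV is the mass scale quoted in the text (GeV units).
x1, x2 = momentum_fractions(8200.0, 14000.0, 0.0)
print(f"x1 = {x1:.3f}, x2 = {x2:.3f}")
```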

Reconstructing Collisions: Modeling Detector Response

The Delphes framework is a fast, parametrized detector-simulation program; here it models the response of the ATLAS detector at the Large Hadron Collider. It takes as input the four-momenta and identities of the particles produced in simulated collisions. It then propagates them through a parametrized model of the detector's subsystems, including the tracker, calorimeters, and muon spectrometer. Rather than simulating each interaction with the detector material, it applies efficiencies and resolutions that capture the net effect of those interactions. The output of Delphes is a set of reconstructed objects such as jets, leptons, and photons that mimic what would be observed in real data. This allows analysis strategies to be evaluated and systematic uncertainties estimated before data are collected.
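Delphes configuration cards describe calorimeter resolutions as functions of energy and pseudorapidity. A minimal sketch of the idea smears a few made-up jet energies. It assumes a common stochastic-plus-constant parametrization $\sigma_E/E = a/\sqrt{E} \oplus b$. The coefficients $a = 0.5\ \sqrt{\text{GeV}}$ and $b = 0.03$ are hypothetical, not values from any actual Delphes card.

```python
import math
import random

def relative_resolution(energy, stochastic=0.5, constant=0.03):
    """sigma_E / E = stochastic / sqrt(E) (+) constant, added in quadrature (E in GeV).

    The default coefficients are hypothetical, not taken from a Delphes card.
    """
    return math.sqrt(stochastic ** 2 / energy + constant ** 2)

def smear_energy(true_energy, rng, **kwargs):
    """Return a Gaussian-smeared 'reconstructed' energy, clipped at zero."""
    sigma = true_energy * relative_resolution(true_energy, **kwargs)
    return max(0.0, rng.gauss(true_energy, sigma))

rng = random.Random(7)
true_jets = [3500.0, 2100.0, 900.0, 450.0]  # made-up jet energies in GeV
reco_jets = [smear_energy(e, rng) for e in true_jets]
for t, r in zip(true_jets, reco_jets):
    print(f"true {t:7.1f} GeV -> reco {r:7.1f} GeV ({relative_resolution(t):.3%} resolution)")
```

The relative resolution improves with energy until the constant term dominates. This is why multi-TeV jets are measured to a few percent.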

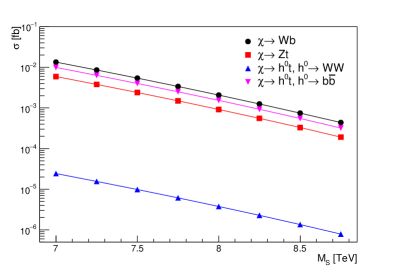

The investigation of the $S_{uu}$ particle centers on its decay modes. Specifically, it examines two channels involving vectorlike quarks (VLQs), labeled VLQ and uVLQ. The uVLQ channel corresponds to the decay $S_{uu} \to u\chi$ into an up quark and a vectorlike quark $\chi$. Both decay pathways produce final states with four jets, collimated sprays of hadrons produced by the fragmentation of quarks and gluons. Observing such four-jet events and analyzing their properties statistically (for example, jet masses and angular separations) provides a way to search for $S_{uu}$ production and to constrain its mass and coupling strengths. The kinematic signatures of these decay channels are crucial for distinguishing a potential $S_{uu}$ signal from Standard Model background processes.
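The two observables named above can be computed directly from jet four-momenta. The sketch below assumes jets given as $(E, p_x, p_y, p_z)$ in GeV and uses a made-up, perfectly balanced four-jet event. If all four jets came from the diquark decay, their combined invariant mass would estimate the $S_{uu}$ mass. The separation $\Delta R = \sqrt{\Delta y^2 + \Delta\phi^2}$ measures how far apart two jets are.

```python
import math

def invariant_mass(*four_vectors):
    """Invariant mass of the summed four-momenta (E, px, py, pz)."""
    E, px, py, pz = (sum(v[k] for v in four_vectors) for k in range(4))
    m2 = E ** 2 - px ** 2 - py ** 2 - pz ** 2
    return math.sqrt(max(m2, 0.0))  # clip tiny negative values from rounding

def rapidity_phi(v):
    """Rapidity y and azimuth phi of a four-vector; assumes E > |pz|."""
    E, px, py, pz = v
    return 0.5 * math.log((E + pz) / (E - pz)), math.atan2(py, px)

def delta_r(v1, v2):
    """Angular separation Delta R = sqrt(Delta y^2 + Delta phi^2)."""
    y1, phi1 = rapidity_phi(v1)
    y2, phi2 = rapidity_phi(v2)
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi  # wrap into [-pi, pi)
    return math.hypot(y1 - y2, dphi)

# A made-up, perfectly balanced four-jet event (GeV).
jets = [(1000.0, 1000.0, 0.0, 0.0), (1000.0, -1000.0, 0.0, 0.0),
        (500.0, 0.0, 500.0, 0.0), (500.0, 0.0, -500.0, 0.0)]
m4j = invariant_mass(*jets)       # 3000.0: energies add up and momenta cancel
dr12 = delta_r(jets[0], jets[1])  # back-to-back jets: Delta R = pi
print(f"m_4j = {m4j:.1f} GeV, Delta R(j1, j2) = {dr12:.3f}")
```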

Jet reconstruction is a critical step in the analysis, and the anti-kt algorithm is used to cluster the particles produced in proton-proton collisions into jets. The algorithm repeatedly finds the smallest of the pairwise distances $d_{ij} = \min(p_{T,i}^{-2}, p_{T,j}^{-2})\,\Delta R_{ij}^2/R^2$ and the beam distances $d_{iB} = p_{T,i}^{-2}$. If a pairwise distance is smallest, the two objects are merged; if a beam distance is smallest, that object is declared a jet and removed. The distance is weighted by the inverse squared transverse momentum of the harder object, so soft particles cluster around hard ones. This produces roughly conical jets of radius $R$ that trace the energetic quarks and gluons. In searches for new particles such as $S_{uu}$, identifying the four-jet final states from its decay relies on accurate jet reconstruction. Its precision directly affects how well signal events can be separated from background, and thus the sensitivity of the experiment and the achievable exclusion limits.
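The clustering rule can be written out in a few dozen lines. What follows is a naive, O(N^3) sketch of anti-kt with four-vector (E-scheme) recombination, written for clarity rather than speed. Real analyses use an optimized implementation such as FastJet. The radius $R = 0.4$ and the three input particles are illustrative assumptions, not the paper's settings.

```python
import math

def pt(v):
    """Transverse momentum of a four-vector (E, px, py, pz)."""
    return math.hypot(v[1], v[2])

def rap_phi(v):
    """Rapidity and azimuth; assumes E > |pz|."""
    E, px, py, pz = v
    return 0.5 * math.log((E + pz) / (E - pz)), math.atan2(py, px)

def delta_r2(a, b):
    """Squared angular distance in the (rapidity, azimuth) plane."""
    ya, pa = rap_phi(a)
    yb, pb = rap_phi(b)
    dphi = (pa - pb + math.pi) % (2.0 * math.pi) - math.pi  # wrap into [-pi, pi)
    return (ya - yb) ** 2 + dphi ** 2

def anti_kt(particles, R=0.4):
    """Cluster (E, px, py, pz) particles into jets, returned in decreasing pt order."""
    objs = [tuple(p) for p in particles]
    jets = []
    while objs:
        # Smallest beam distance d_iB = 1/pt_i^2 belongs to the hardest object.
        best_i = max(range(len(objs)), key=lambda i: pt(objs[i]))
        best_d = pt(objs[best_i]) ** -2
        pair = None
        for i in range(len(objs)):
            for j in range(i + 1, len(objs)):
                d_ij = (min(pt(objs[i]) ** -2, pt(objs[j]) ** -2)
                        * delta_r2(objs[i], objs[j]) / R ** 2)
                if d_ij < best_d:
                    best_d, pair = d_ij, (i, j)
        if pair is None:
            jets.append(objs.pop(best_i))  # beam distance is smallest: final jet
        else:
            i, j = pair
            merged = tuple(a + b for a, b in zip(objs[i], objs[j]))  # E-scheme sum
            objs = [o for k, o in enumerate(objs) if k not in (i, j)] + [merged]
    return sorted(jets, key=pt, reverse=True)

def massless(pt_, y, phi):
    """Massless four-vector from transverse momentum, rapidity and azimuth."""
    return (pt_ * math.cosh(y), pt_ * math.cos(phi), pt_ * math.sin(phi), pt_ * math.sinh(y))

# Two nearby particles and one on the opposite side of the detector (GeV).
particles = [massless(100.0, 0.0, 0.0), massless(50.0, 0.0, 0.1), massless(80.0, 0.0, math.pi)]
jets = anti_kt(particles, R=0.4)
for jet in jets:
    print(f"jet: E = {jet[0]:.1f} GeV, pt = {pt(jet):.1f} GeV")
```

With $R = 0.4$ the two nearby particles merge into one jet and the isolated particle forms a second. A very large radius would sweep all three into a single jet.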

Statistical Rigor: Defining the Boundaries of Discovery

The analysis of particle physics data hinges on robust statistical methods, and RooFit and RooStats provide a powerful toolkit for this purpose. These packages, built on the ROOT data analysis framework, let researchers build statistical models of the expected signal and background in a dataset. With them, researchers perform hypothesis tests, quantifying how compatible the observed data are with a given hypothesis, such as background only or signal plus background. The framework supports flexible definitions of probability density functions $p(x;\theta)$, where $x$ represents the observed data and $\theta$ the model parameters. This enables estimates of those parameters and their uncertainties. The capability is crucial both for establishing the existence of new particles and for setting limits on their properties.
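RooFit and RooStats build such likelihoods for full models with many bins and nuisance parameters. The code below is a stripped-down sketch and does not use the RooFit/RooStats API. It writes out the Poisson likelihood for a single counting bin with expected yield $\mu s + b$. It also gives the profile-likelihood-ratio test statistics for exclusion and discovery, evaluated on Asimov (expected) data. In the asymptotic approximation, the significance is $Z \approx \sqrt{q}$. The yields $s$ and $b$ are hypothetical, and systematic uncertainties are ignored.

```python
import math

def poisson_nll(n, mu, s, b):
    """-ln L for one counting bin with expectation mu*s + b (constant ln n! dropped)."""
    lam = mu * s + b
    return lam - n * math.log(lam)

def q_mu(n, mu, s, b):
    """Upper-limit statistic -2 ln[L(mu)/L(mu_hat)], mu_hat >= 0; zero if mu_hat > mu."""
    mu_hat = max(0.0, (n - b) / s)
    if mu_hat > mu:
        return 0.0
    return 2.0 * (poisson_nll(n, mu, s, b) - poisson_nll(n, mu_hat, s, b))

def q0(n, s, b):
    """Discovery statistic -2 ln[L(0)/L(mu_hat)]; zero if mu_hat <= 0."""
    mu_hat = (n - b) / s
    if mu_hat <= 0.0:
        return 0.0
    return 2.0 * (poisson_nll(n, 0.0, s, b) - poisson_nll(n, mu_hat, s, b))

# Hypothetical yields after selection: s signal events, b background events.
s, b = 10.0, 100.0
z_excl = math.sqrt(q_mu(b, 1.0, s, b))  # Asimov data n = b (background only)
z_disc = math.sqrt(q0(s + b, s, b))     # Asimov data n = s + b
print(f"expected exclusion Z = {z_excl:.3f}, expected discovery Z = {z_disc:.3f}")
```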

Identifying rare signals amid overwhelming backgrounds requires sophisticated discrimination techniques. This analysis uses a machine learning algorithm, the Random Forest. A Random Forest trains many decision trees, each on a bootstrap resample of the training data, with each split restricted to a random subset of the input variables. It then averages their predictions into a single classification score. This approach captures complex relationships among many observables. The algorithm can thus pick up subtle differences between signal events (those originating from the hypothesized new particle) and the far more abundant background processes. By exploiting these discriminating features, the Random Forest enhances the sensitivity of the search. It extends the range of particle masses and couplings that can be excluded beyond what simpler selections would allow.
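The ingredients are bootstrap resampling, random feature subsets at each split, and averaging over trees. To make them concrete, here is a compact pure-Python sketch trained on a synthetic two-variable dataset. It illustrates the technique only. It does not reproduce the paper's input variables, number of trees, or depth. A production analysis would more likely use a library such as scikit-learn's `RandomForestClassifier`.

```python
import random

def build_tree(X, y, depth, max_depth, n_feat, rng):
    """Grow one classification tree; each split considers only n_feat random features.

    Internal nodes are tuples (feature, threshold, left, right); leaves are the
    fraction of signal (label 1) events that reached them.
    """
    n, n_sig = len(y), sum(y)
    if depth == max_depth or n_sig in (0, n):
        return n_sig / n
    best = None  # (weighted impurity, feature, threshold)
    for f in rng.sample(range(len(X[0])), n_feat):
        order = sorted(range(n), key=lambda i: X[i][f])
        left_sig = 0
        for k in range(n - 1):
            left_sig += y[order[k]]
            if X[order[k]][f] == X[order[k + 1]][f]:
                continue  # no valid cut between identical values
            nl, nr = k + 1, n - k - 1
            pl, pr = left_sig / nl, (n_sig - left_sig) / nr
            score = (nl * pl * (1 - pl) + nr * pr * (1 - pr)) / n  # Gini impurity / 2
            if best is None or score < best[0]:
                best = (score, f, X[order[k]][f])
    if best is None:  # every sampled feature is constant in this node
        return n_sig / n
    _, f, thr = best
    left = [i for i in range(n) if X[i][f] <= thr]
    right = [i for i in range(n) if X[i][f] > thr]
    return (f, thr,
            build_tree([X[i] for i in left], [y[i] for i in left], depth + 1, max_depth, n_feat, rng),
            build_tree([X[i] for i in right], [y[i] for i in right], depth + 1, max_depth, n_feat, rng))

def predict_tree(node, x):
    """Follow the splits down to a leaf and return its signal fraction."""
    while isinstance(node, tuple):
        f, thr, left, right = node
        node = left if x[f] <= thr else right
    return node

def fit_forest(X, y, n_trees=25, max_depth=4, n_feat=1, seed=0):
    """Train each tree on a bootstrap resample (drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(y)) for _ in range(len(y))]
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], 0, max_depth, n_feat, rng))
    return forest

def predict_forest(forest, x):
    """Average the trees' signal fractions into a score in [0, 1]."""
    return sum(predict_tree(t, x) for t in forest) / len(forest)

# Synthetic data: "signal" centred at (+1, +1), "background" at (-1, -1).
rng = random.Random(123)
X = ([[rng.gauss(1.0, 1.0), rng.gauss(1.0, 1.0)] for _ in range(300)]
     + [[rng.gauss(-1.0, 1.0), rng.gauss(-1.0, 1.0)] for _ in range(300)])
y = [1] * 300 + [0] * 300
forest = fit_forest(X, y)
accuracy = sum((predict_forest(forest, x) > 0.5) == bool(t) for x, t in zip(X, y)) / len(y)
print(f"training accuracy: {accuracy:.2f}")
```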

This analysis projects exclusion limits on the mass of a color-sextet scalar diquark $S_{uu}$ decaying into a $u\chi$ final state. Through statistical modeling of the expected HL-LHC data, the study finds a projected 95% confidence level (C.L.) lower bound of approximately 8.2 TeV on the diquark mass. In scenarios with larger Yukawa couplings, the projected reach extends to about 9.2 TeV, a further gain of roughly 1 TeV. This demonstrates the power of the employed techniques to probe physics beyond the Standard Model and constrain new physics.
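A mass limit follows from a yield limit. The predicted number of selected signal events is the cross section times integrated luminosity times selection efficiency. The excluded mass is where this yield drops below the smallest yield that the expected data can exclude. The sketch below finds that smallest yield by bisection for a single counting bin. It uses the Asimov exclusion significance $Z = \sqrt{2[s - b\ln(1 + s/b)]}$ and the one-sided 95% threshold $Z = 1.645$. The background value is hypothetical and systematics are ignored. LHC analyses usually quote the more conservative CLs limit instead.

```python
import math

def z_exclusion(s, b):
    """Asimov significance for excluding a signal s given background b (no systematics)."""
    return math.sqrt(max(0.0, 2.0 * (s - b * math.log1p(s / b))))

def min_excluded_signal(b, z_target=1.645, tol=1e-9):
    """Smallest expected signal yield excluded at the given one-sided Z (1.645 ~ 95% CL)."""
    lo, hi = 0.0, 1.0
    while z_exclusion(hi, b) < z_target:  # grow the bracket until it contains the root
        hi *= 2.0
    while hi - lo > tol * max(1.0, hi):   # z_exclusion increases with s, so bisect
        mid = 0.5 * (lo + hi)
        if z_exclusion(mid, b) < z_target:
            lo = mid
        else:
            hi = mid
    return hi

b = 100.0  # hypothetical expected background after selection
s_min = min_excluded_signal(b)
print(f"signal yields above {s_min:.2f} events are excluded at ~95% CL (Asimov)")
```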

The pursuit of physics beyond the Standard Model, as in this analysis of diquark signatures at the High-Luminosity LHC, calls for careful consideration of methodology. The study's reliance on machine learning for signal selection, while promising, highlights the importance of responsible innovation. As John Stuart Mill observed, “It is better to be a human being dissatisfied than a pig satisfied; better to be Socrates dissatisfied than a fool satisfied.” The sentiment resonates here. Applying algorithms blindly, without understanding their limitations or potential biases, risks mistaking statistical flukes for genuine discoveries, even in the search for fundamental particles. Ensuring fairness and transparency in these analytical tools is not merely a technical detail but a crucial aspect of the engineering discipline itself.

What Lies Ahead?

The pursuit of phenomena beyond the Standard Model inevitably reveals not just gaps in knowledge, but also the inherent limitations of the questions asked. This analysis, focused on a specific diquark decay channel, demonstrates a capability to probe theoretical landscapes. Yet it simultaneously encodes an assumption: that the universe reveals itself through precisely the signatures the algorithms are designed to detect. The increasing reliance on machine learning, while offering enhanced sensitivity, demands concurrent scrutiny of the encoded biases, the worldview implicit in feature selection and model design.

Future work must move beyond simply maximizing discovery potential. A critical examination of the search strategy itself is required. What remains hidden by the insistence on specific decay modes? How do the chosen kinematic selections privilege certain interpretations over others? The field needs to embrace a more holistic approach, developing methods to systematically assess the completeness – or deliberate incompleteness – of the search.

Ultimately, the exploration of exotic particles like diquarks is less about confirming theoretical predictions and more about understanding the boundaries of current observation. The universe does not owe anyone a discovery; it merely exists. It is the construction of the measuring apparatus, the framing of the search, that creates the ‘signal’. With each iteration, one must ask: what is being excluded, and at what cost?

Original article: https://arxiv.org/pdf/2601.11181.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-21 08:20