Author: Denis Avetisyan

A new framework uses Monte Carlo Tree Search to schedule syndrome measurement circuits so that they spread fewer errors, sharply reducing logical error rates in a critical step of protecting quantum information.

AlphaSyndrome leverages MCTS to minimize logical error rates in stabilizer codes by optimizing the scheduling of syndrome measurements for quantum error correction.

While quantum error correction (QEC) is vital for scalable quantum computing, the overhead associated with repeated syndrome measurements significantly impacts performance. This work presents ‘AlphaSyndrome: Tackling the Syndrome Measurement Circuit Scheduling Problem for QEC Codes’, an automated framework leveraging Monte Carlo Tree Search (MCTS) to optimize syndrome measurement scheduling for general commuting-stabilizer codes. AlphaSyndrome reduces logical error rates by up to 96.2% compared to standard approaches by shaping error propagation based on code structure and decoder feedback. Could this framework unlock more efficient QEC strategies and accelerate the development of fault-tolerant quantum computers?

The Fragile Foundation of Quantum Information

The promise of quantum computation, solving problems intractable for even the most powerful classical computers, is shadowed by an inherent fragility. Classical bits hold stable values of 0 or 1. Quantum bits, or qubits, instead exploit superposition and entanglement: a qubit can occupy a weighted combination of 0 and 1 whose complex amplitudes, including their relative phase, carry the information. This quantum state is exceptionally sensitive to environmental disturbances such as stray electromagnetic fields, temperature fluctuations, or unwanted interactions with other particles. Such disturbances cause decoherence, in which the qubit's phase relationships leak into the environment and its state degrades into an effectively classical mixture. Protecting quantum information therefore requires isolating qubits from their surroundings, a considerable engineering challenge and a central hurdle in realizing practical quantum computers.

Because isolation can never be perfect, practical machines also need Quantum Error Correction (QEC), a suite of techniques that safeguards quantum states and keeps computations intact. Quantum information cannot simply be copied, because the no-cloning theorem forbids it. QEC therefore does not duplicate data the way the simplest classical schemes do. Instead, it uses entanglement to spread the information of one logical qubit across many physical qubits. Errors can then be detected and corrected by measuring collective properties of those qubits rather than the encoded information itself, which would destroy the quantum state. This redundant encoding lets the original state be reconstructed even when some physical qubits are corrupted, paving the way for fault-tolerant quantum computing.
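The idea of detecting an error without reading the encoded value already appears in the 3-qubit bit-flip code. The sketch below is a classical stand-in that tracks bit flips only, so it ignores phase errors and superposition. The names `encode`, `syndrome`, and `correct` are illustrative. The two parity checks play the role of measuring Z1Z2 and Z2Z3. Their outcome identifies which bit flipped without revealing whether the logical value is 0 or 1.

```python
# Classical sketch of the 3-qubit bit-flip (repetition) code.
# Assumption: only single bit-flip (X) errors occur; phase errors are ignored.


def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]


def syndrome(bits: list[int]) -> tuple[int, int]:
    """Parities of bits (1,2) and (2,3), analogous to measuring Z1Z2 and Z2Z3."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


# Each non-trivial syndrome points to the single bit most likely to have flipped.
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(bits: list[int]) -> list[int]:
    """Flip back the bit indicated by the syndrome, if any."""
    flipped = LOOKUP[syndrome(bits)]
    fixed = list(bits)
    if flipped is not None:
        fixed[flipped] ^= 1
    return fixed


if __name__ == "__main__":
    for logical in (0, 1):
        for k in range(3):
            word = encode(logical)
            word[k] ^= 1  # inject one bit flip
            # The syndrome depends only on where the flip happened, not on the logical bit.
            print(logical, k, syndrome(word), correct(word))
```

Running it shows that the same flip position gives the same syndrome for logical 0 and logical 1. This is the property that lets the error be found without learning the encoded value.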

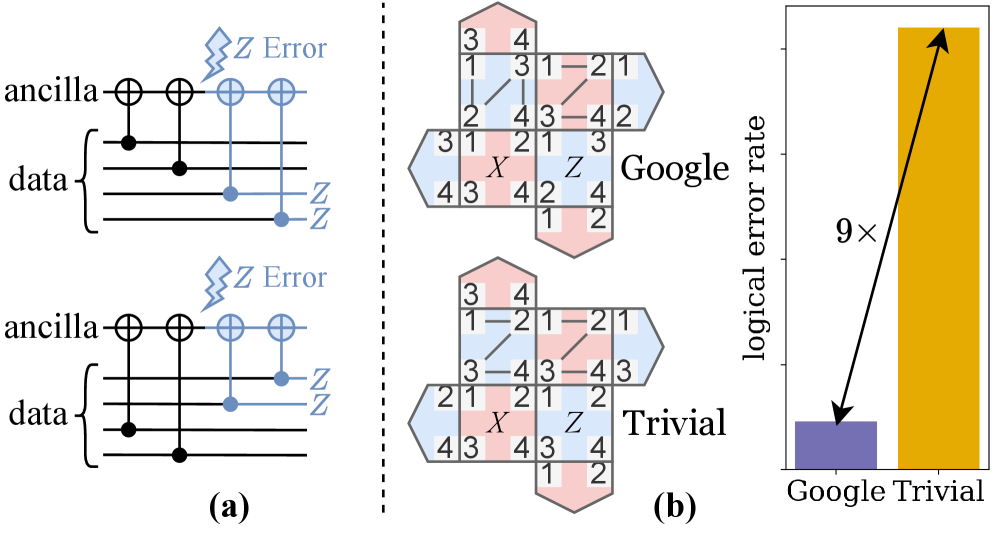

Quantum error correction detects and diagnoses errors through syndrome measurement, which reveals information about errors without revealing the encoded quantum information. The approach has a catch: the circuits that measure the syndrome are built from the same imperfect gates as everything else. Each stabilizer is usually measured by entangling an ancilla qubit with several data qubits through a sequence of two-qubit gates such as CNOTs, and a fault partway through that sequence does not stay put. A CNOT copies an X error on its control onto its target, and a Z error on its target onto its control, so a single fault on an ancilla can spread to several data qubits. Through this error propagation, one localized fault can become a multi-qubit error that corrupts more of the encoded data and, in the worst case, defeats the error correction scheme. Researchers are actively investigating more robust measurement circuits and codes that limit this effect. Because the affected data qubits depend on the order in which the gates are applied, the scheduling of these circuits is one of the available levers.
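The propagation rules above can be checked with a small Pauli-frame sketch. It records which qubits carry an X or Z component (ignoring signs and phases) and applies the standard CNOT conjugation rules. It then shows a "hook" error: an X fault on the ancilla of a weight-4 X-type stabilizer strikes after two of the four CNOTs and lands on the two data qubits still to be visited. Changing the gate order changes which pair is hit. The qubit labels, the `fault_after` parameter, and the function names are illustrative assumptions.

```python
# Sketch: push a Pauli fault through CNOT gates using sets of qubits carrying X or Z.
# Signs and global phases are ignored; qubit labels are hypothetical.


def propagate(x: set[int], z: set[int], cnots: list[tuple[int, int]]) -> tuple[set[int], set[int]]:
    """Conjugate an X/Z error pattern by a sequence of CNOT(control, target) gates."""
    x, z = set(x), set(z)
    for control, target in cnots:
        if control in x:
            x ^= {target}      # X on the control copies onto the target
        if target in z:
            z ^= {control}     # Z on the target copies onto the control
    return x, z


def hook_error(order: list[int], ancilla: int, fault_after: int) -> set[int]:
    """Data qubits hit by an X fault on the ancilla of an X-type stabilizer measurement.

    The ancilla is the control of one CNOT per data qubit, applied in `order`;
    the fault strikes after the first `fault_after` of those CNOTs.
    """
    remaining = [(ancilla, q) for q in order[fault_after:]]
    x, _ = propagate({ancilla}, set(), remaining)
    return x - {ancilla}


if __name__ == "__main__":
    ANCILLA = 99  # hypothetical label for the measurement qubit
    print(sorted(hook_error([0, 1, 2, 3], ANCILLA, fault_after=2)))  # [2, 3]
    print(sorted(hook_error([0, 2, 1, 3], ANCILLA, fault_after=2)))  # [1, 3]
```

In surface codes, a hook error aligned with a logical operator can lower the effective code distance. This is why gate orders inside syndrome extraction circuits are chosen with care.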

Stabilizer Codes: The Architecture of Resilience

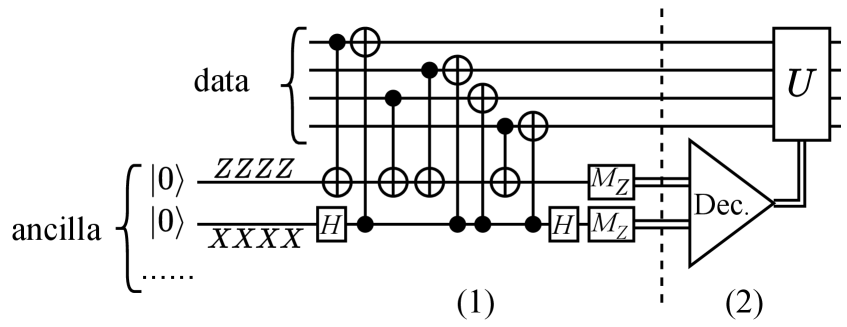

Stabilizer codes are a major class of quantum error correction (QEC) codes. They are defined by a set of commuting Pauli operators, the stabilizer generators, which generate an abelian group called the stabilizer group. The code space is the subspace of Hilbert space left invariant by every element of this group: each valid encoded state is a +1 eigenstate of all the stabilizers. Measuring the generators therefore leaves encoded states undisturbed while extracting the error syndrome. An error is detected when it anticommutes with one or more generators, because it flips the outcome of those measurements from +1 to −1. The pattern of flipped outcomes indicates the presence and, to a degree, the location of the error. The choice of stabilizers determines which errors the code can correct and how well it tolerates noise.
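The commutation test described above is easy to compute in the binary symplectic representation. There, an n-qubit Pauli operator becomes a pair of bit vectors (x, z), and two operators anticommute exactly when x₁·z₂ + z₁·x₂ is odd. The sketch below applies this to the well-known five-qubit [[5,1,3]] code, whose generators are cyclic shifts of XZZXI. That code is chosen only as a compact example and is not one of the codes discussed in this article. The function names are illustrative.

```python
# Sketch: syndromes of Pauli errors via the binary symplectic representation.
# The [[5,1,3]] code serves only as a small, well-known example.


def to_symplectic(pauli: str) -> tuple[list[int], list[int]]:
    """Map a Pauli string such as 'XZZXI' to binary vectors (x, z); Y sets both bits."""
    x = [1 if p in "XY" else 0 for p in pauli]
    z = [1 if p in "ZY" else 0 for p in pauli]
    return x, z


def anticommutes(p: str, q: str) -> int:
    """Return 1 if the Pauli strings anticommute, 0 if they commute."""
    (x1, z1), (x2, z2) = to_symplectic(p), to_symplectic(q)
    # Symplectic inner product: x1.z2 + z1.x2 (mod 2).
    return sum(a * d + b * c for a, b, c, d in zip(x1, z1, x2, z2)) % 2


STABILIZERS = ["XZZXI", "IXZZX", "XIXZZ", "ZXIXZ"]


def syndrome(error: str) -> tuple[int, ...]:
    """One bit per generator: 1 means the error flips that measurement outcome."""
    return tuple(anticommutes(error, s) for s in STABILIZERS)


if __name__ == "__main__":
    # The generators must commute pairwise to define a valid stabilizer group.
    assert all(anticommutes(a, b) == 0 for a in STABILIZERS for b in STABILIZERS)
    singles = ["I" * k + p + "I" * (4 - k) for k in range(5) for p in "XYZ"]
    table = {syndrome(e): e for e in singles}
    print(len(table))  # 15 distinct non-trivial syndromes
```

All 15 single-qubit errors give distinct, non-trivial syndromes. That is why a distance-3 code like this one can correct any single-qubit error with a simple lookup table.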

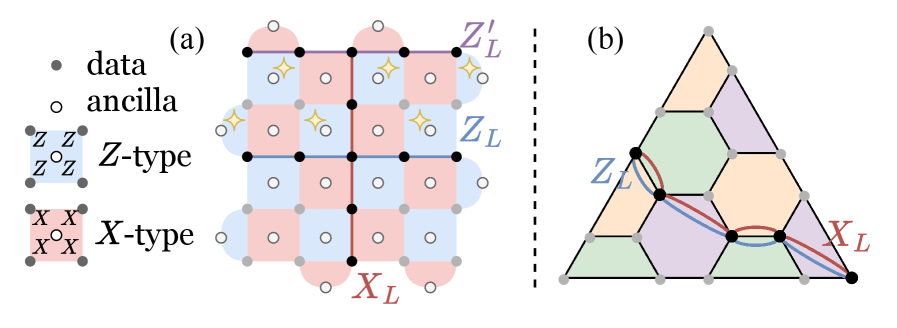

Surface codes and color codes are prominent families of stabilizer codes, valued for their fault-tolerance properties. Surface codes are defined on a two-dimensional lattice with only local stabilizers. They have a high error threshold, meaning they can tolerate a relatively high physical error rate while still protecting the encoded information. Color codes use a different lattice structure and also correct errors robustly. Their main attraction is that they allow the full Clifford group to be implemented transversally, although they are generally harder to decode than surface codes. Both families are actively researched for implementation on a range of quantum computing architectures, with ongoing efforts to improve their performance and reduce encoding and decoding overhead. Their topological nature makes them resilient against local errors, a crucial property for building practical quantum computers.

Syndrome measurement is the central diagnostic procedure in stabilizer-code quantum error correction. It measures each stabilizer generator, typically by entangling an ancilla qubit with the data qubits in that stabilizer's support and then measuring the ancilla. This extracts information about errors without measuring the encoded logical qubit itself. The resulting outcomes, collectively called the syndrome, are passed to a decoder, which infers the most likely error and the corrective action. Accurate syndrome measurement is critical: faults in the extraction circuit can produce misleading syndromes or spread onto the data, causing logical errors and degrading code performance. Efficient implementations keep the measurement circuitry simple and the measurement time short, because gate and idling errors accumulate while the circuit runs. The fidelity of syndrome extraction therefore largely determines the achievable logical error rate and, with it, the effectiveness of the whole error correction scheme.
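Each measurement round can itself be wrong, so syndrome measurement is normally repeated over many rounds. Decoders then often work with detection events, defined as the XOR of a stabilizer's outcome in consecutive rounds. This is standard practice in QEC simulation rather than something specific to AlphaSyndrome. The sketch below covers a single stabilizer, and its function name and data are illustrative. A data error flips the outcome permanently and produces one detection event, while a one-off measurement error produces a pair of events in consecutive rounds, so the two can be told apart.

```python
# Sketch: turn repeated syndrome rounds into detection events (XOR of consecutive rounds).
# Assumption: a single stabilizer; outcome 0 stands for the +1 eigenvalue.


def detection_events(rounds: list[int]) -> list[int]:
    """Mark rounds whose outcome differs from the previous round (round 0 is compared to 0)."""
    previous = 0
    events = []
    for outcome in rounds:
        events.append(outcome ^ previous)
        previous = outcome
    return events


if __name__ == "__main__":
    data_error = [0, 0, 1, 1, 1]   # data qubit flips before round 2; syndrome stays flipped
    meas_error = [0, 0, 1, 0, 0]   # round 2 outcome misread once
    print(detection_events(data_error))  # [0, 0, 1, 0, 0]
    print(detection_events(meas_error))  # [0, 0, 1, 1, 0]
```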

Intelligent Scheduling with AlphaSyndrome

AlphaSyndrome uses a Monte Carlo Tree Search (MCTS) framework to search for a good order of operations in the syndrome measurement circuits of a Quantum Error Correction (QEC) code. MCTS explores the space of possible measurement schedules as a tree. It balances exploration of untried sequences against exploitation of those that have produced low estimated logical error rates so far. Each node represents a partial schedule. The algorithm repeatedly selects a promising path, expands it, estimates the quality of completed schedules through simulation, and feeds the result back up the tree. In this way AlphaSyndrome favors the orderings that minimize the overall logical error rate, a departure from static, predefined approaches such as Lowest Depth Scheduling. The implementation uses UCT (Upper Confidence bounds applied to Trees) for selection, and a backpropagation step refines each node's value as simulation results accumulate.
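The four MCTS phases described above (selection with UCT, expansion, simulated rollout, and backpropagation) can be sketched generically over orderings of a few items. This is a minimal illustration under stated assumptions, not AlphaSyndrome's implementation. The `PENALTY` table and `reward` function are invented stand-ins for the simulated logical error rate a real scheduler would evaluate. The exploration constant `c`, the iteration count, and all names are also hypothetical.

```python
import math
import random

# Hypothetical pairwise penalties between four schedule items. This is only a
# stand-in for the simulated logical error rate a real scheduler would evaluate.
PENALTY = [
    [0.0, 0.9, 0.2, 0.6],
    [0.9, 0.0, 0.7, 0.1],
    [0.2, 0.7, 0.0, 0.8],
    [0.6, 0.1, 0.8, 0.0],
]
ITEMS = list(range(len(PENALTY)))


def reward(schedule: list[int]) -> float:
    """Higher is better: the negated penalty summed over consecutive items."""
    return -sum(PENALTY[a][b] for a, b in zip(schedule, schedule[1:]))


class Node:
    def __init__(self, schedule, parent=None):
        self.schedule = schedule   # partial ordering of items
        self.parent = parent
        self.children = {}         # next item -> Node
        self.visits = 0
        self.total = 0.0           # sum of rollout rewards seen through this node

    def untried(self):
        return [i for i in ITEMS if i not in self.schedule and i not in self.children]

    def uct_child(self, c):
        # UCB1 score: mean reward plus an exploration bonus for rarely visited children.
        return max(
            self.children.values(),
            key=lambda ch: ch.total / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def mcts(iterations: int = 2000, c: float = 1.4, seed: int = 0) -> list[int]:
    rng = random.Random(seed)
    root = Node([])
    for _ in range(iterations):
        node = root
        # 1. Selection: follow UCT while the node is fully expanded and has children.
        while not node.untried() and node.children:
            node = node.uct_child(c)
        # 2. Expansion: attach one untried next item, if any remain.
        options = node.untried()
        if options:
            item = rng.choice(options)
            child = Node(node.schedule + [item], parent=node)
            node.children[item] = child
            node = child
        # 3. Rollout: finish the schedule at random and score it.
        rest = [i for i in ITEMS if i not in node.schedule]
        rng.shuffle(rest)
        value = reward(node.schedule + rest)
        # 4. Backpropagation: update statistics on the path back to the root.
        while node is not None:
            node.visits += 1
            node.total += value
            node = node.parent
    # Read out the most-visited path, then append any items the tree never reached.
    node = root
    while node.children:
        node = max(node.children.values(), key=lambda ch: ch.visits)
    return node.schedule + [i for i in ITEMS if i not in node.schedule]


if __name__ == "__main__":
    best = mcts()
    print(best, reward(best))
```

With only 4! = 24 complete orderings, the result can be checked against brute force using `itertools.permutations`. MCTS becomes worthwhile when the schedule space is far too large to enumerate.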

Lowest Depth Scheduling aims to minimize the number of time steps needed to complete all syndrome measurements, without considering how faults that occur during those steps propagate. AlphaSyndrome instead takes the noise model into account. It evaluates candidate schedules by how errors spread through the resulting circuit and how well the decoder copes with them, and it adjusts the measurement order accordingly. Rather than following a fixed, depth-driven order, it favors schedules whose propagated errors remain correctable. This mitigates harmful error propagation and improves the overall effectiveness of Quantum Error Correction (QEC).
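Depth-driven scheduling can be illustrated with a simple sketch that packs syndrome-extraction CNOTs into layers as soon as possible, so that no qubit takes part in two gates in the same layer. It is a greedy illustration, not necessarily depth-optimal and not the exact Lowest Depth baseline used in the paper. The qubit names are hypothetical. The example uses the two checks of a 3-bit repetition code and shows that reordering the same four gates changes the depth from 4 to 2.

```python
# Sketch: as-soon-as-possible (ASAP) layering of syndrome-extraction CNOTs.
# Each gate goes in the earliest layer after the last layer touching either of its
# qubits, which preserves every qubit's gate order and never uses a qubit twice per layer.


def asap_layers(gates: list[tuple[str, str]]) -> list[list[tuple[str, str]]]:
    last: dict[str, int] = {}   # qubit -> index of the last layer that used it
    layers: list[list[tuple[str, str]]] = []
    for a, b in gates:
        slot = max(last.get(a, -1), last.get(b, -1)) + 1
        if slot == len(layers):
            layers.append([])
        layers[slot].append((a, b))
        last[a] = last[b] = slot
    return layers


if __name__ == "__main__":
    # Hypothetical Z-type checks: ancilla a0 measures d0 d1, ancilla a1 measures d1 d2.
    order_a = [("d0", "a0"), ("d1", "a0"), ("d1", "a1"), ("d2", "a1")]
    order_b = [("d0", "a0"), ("d1", "a1"), ("d1", "a0"), ("d2", "a1")]
    print(len(asap_layers(order_a)), len(asap_layers(order_b)))  # 4 2
```

Nothing in this procedure considers how a fault in one layer would propagate. That is the blind spot of depth-only scheduling described above.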

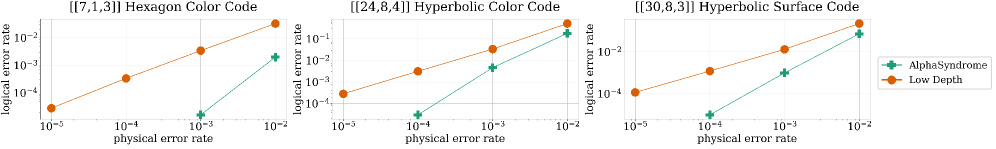

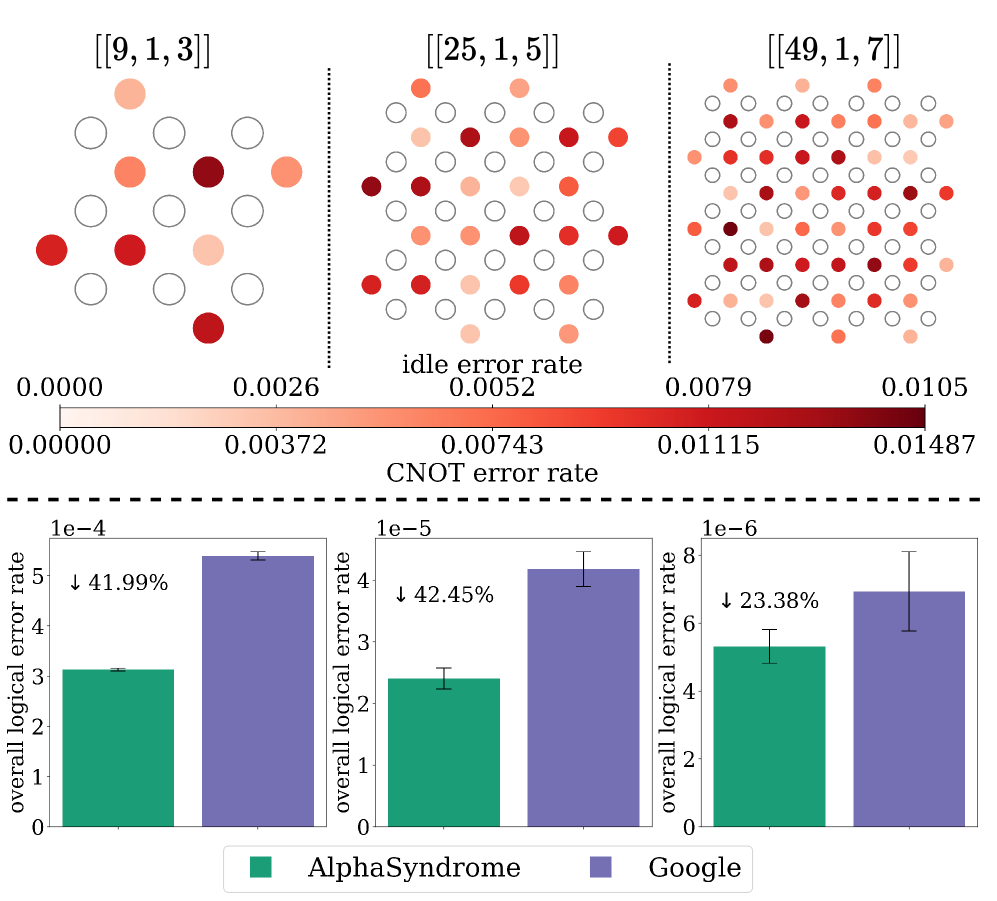

AlphaSyndrome’s data-driven approach to syndrome measurement scheduling yields significant performance gains in Quantum Error Correction (QEC). Evaluations across multiple QEC codes and decoders show an average 80.6% reduction in logical error rate compared with conventional scheduling. The gains also translate into lower resource overhead. AlphaSyndrome reduces space-time volume (roughly, qubit count multiplied by circuit duration) by 20-90%, because a lower logical error rate lets a smaller code distance reach the same level of protection. This represents a key advance for practical QEC implementation.
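A toy calculation shows how a lower logical error rate can translate into a smaller space-time volume. It assumes the widely used heuristic p_L(d) ≈ A·(p/p_th)^((d+1)/2) for a rotated surface code and a volume of (2d² − 1)·d qubit-rounds. As a simplification, it also assumes the reported 80.6% average reduction applies uniformly at every distance. The values of `A`, `RATIO`, and `TARGET` are hypothetical. The output therefore illustrates the mechanism only and does not reproduce the paper's 20-90% figures.

```python
# Worked example with hypothetical numbers: a lower logical error rate can buy a smaller distance.
# Assumes p_L(d) ≈ A * (p / p_th) ** ((d + 1) / 2) for a rotated surface code, and that a
# better schedule scales p_L by the same constant factor at every distance.

A = 0.1                    # hypothetical prefactor
RATIO = 0.2                # hypothetical ratio of physical error rate to threshold, p / p_th
TARGET = 1e-9              # hypothetical target logical error rate per round
IMPROVEMENT = 1 - 0.806    # reported average 80.6% reduction, applied uniformly (assumption)


def logical_error(d: int, factor: float = 1.0) -> float:
    return factor * A * RATIO ** ((d + 1) / 2)


def min_distance(factor: float = 1.0) -> int:
    """Smallest odd distance whose estimated logical error rate meets TARGET."""
    d = 3
    while logical_error(d, factor) > TARGET:
        d += 2
    return d


def spacetime_volume(d: int) -> int:
    # Rotated surface code: d*d data qubits plus d*d - 1 measure qubits, run for about d rounds.
    return (2 * d * d - 1) * d


if __name__ == "__main__":
    d_base, d_new = min_distance(), min_distance(IMPROVEMENT)
    v_base, v_new = spacetime_volume(d_base), spacetime_volume(d_new)
    print(d_base, d_new)                 # 23 21
    print(f"{1 - v_new / v_base:.1%}")   # 23.9%
```

With these made-up parameters, the target is met at distance 21 instead of 23, which trims the volume by about 24%. Other noise levels, targets, or improvement factors give different savings.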

Real-World Impact and Performance

Quantum error correction aims to reduce the rate of logical errors, meaning those that affect the encoded quantum information, during computation. The AlphaSyndrome results show that optimized scheduling, paired with a conventional classical decoder, substantially lowers this logical error rate. The gains are demonstrated in circuit simulations across a range of code configurations. By making syndrome extraction less error-prone, AlphaSyndrome improves the reliability of quantum computations and brings fault-tolerant, qubit-based quantum computing closer to practical realization.

AlphaSyndrome is especially effective under non-uniform error models. These matter because real quantum hardware is rarely uniform: unlike the simplified assumptions of many simulations, error rates vary across qubits and operations. In these more realistic scenarios AlphaSyndrome performs strongly, reaching performance comparable to Google’s established schedule for rotated surface codes, a key benchmark in quantum error correction. This adaptability makes it a promising tool for coping with imperfect quantum hardware and for building more robust and reliable quantum computers.

The improvements are particularly notable for the Bivariate Bicycle code. There, AlphaSyndrome reduces the logical error rate by 44% with BP-OSD decoding and by 10% with Union-Find decoding. Across all tested configurations, the largest reduction in logical error rate reached 96.2%, pointing toward more robust and practical quantum systems.

The pursuit of efficient quantum error correction, as detailed in this work, necessitates a holistic approach to system design. AlphaSyndrome’s MCTS framework embodies this principle, optimizing syndrome measurement scheduling not as an isolated task, but as an integral component of minimizing the logical error rate. This mirrors a fundamental tenet of robust system architecture – that structure dictates behavior. As Vinton Cerf observed, “The Internet treats everyone the same.” This applies equally to quantum systems; a well-structured scheduling algorithm, like a streamlined network protocol, ensures equitable and efficient processing of information, ultimately enhancing the reliability of quantum computation.

Where Do We Go From Here?

The pursuit of efficient syndrome measurement scheduling, as demonstrated by AlphaSyndrome, reveals a fundamental truth: optimization within quantum error correction is not merely a computational challenge, but a topological one. Reducing the logical error rate is less about finding the fastest schedule and more about sculpting a pathway for information to survive the noise. If a system survives only on duct tape and clever heuristics, it is probably more complicated than it needs to be. The elegance of a truly robust code lies in minimizing complexity, not masking it.

Future work must confront the illusion of control offered by modularity. Optimizing individual measurement circuits in isolation leads at best to a local optimum, because success depends on the global behavior of the code, including error propagation and correlated noise. A holistic approach, perhaps drawing on techniques from network theory or dynamical systems, could reveal emergent properties currently obscured by piecemeal optimization.

Ultimately, the most pressing question isn’t how to schedule measurements, but how to design codes that are intrinsically resilient. A code that demands minimal optimization is not a weakness, but a testament to its inherent structure. The field should shift its focus from squeezing performance out of existing codes to exploring fundamentally new architectures that prioritize simplicity and stability, recognizing that a system’s true strength lies not in its complexity, but in its ability to maintain coherence in the face of inevitable disruption.

Original article: https://arxiv.org/pdf/2601.12509.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-22 06:33