Author: Denis Avetisyan

A new approach to sparse superimposed coding significantly reduces computational complexity for ultra-reliable, low-latency communication systems.

This review details a low-complexity sparse superimposed coding (SSC) scheme that uses a sparse codebook matrix to cut encoding and decoding cost in URLLC applications.

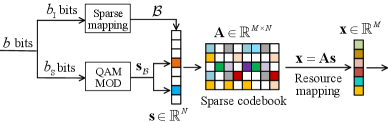

Achieving both ultra-reliability and low latency in modern wireless communication systems presents a fundamental challenge due to the inherent limitations of short-packet transmission. This paper, ‘Low-Complexity Sparse Superimposed Coding for Ultra Reliable Low Latency Communications’, addresses this issue by introducing a sparse superimposed coding (SSC) scheme designed to minimize computational complexity. By leveraging a sparse codebook structure, in which each codeword contains a limited number of non-zero elements, the proposed approach significantly reduces decoding complexity while maintaining acceptable block error rate (BLER) performance. Does this low-complexity design offer a practical pathway towards enabling highly reliable and responsive communication for critical applications?

Whispers of Reliability: The Looming Need for Ultra-Reliable Communication

The proliferation of novel technologies is driving an unprecedented need for communication networks capable of delivering information with both extreme reliability and minimal delay. Applications such as industrial automation, remote surgery, and autonomous vehicles necessitate Ultra-Reliable Low Latency Communication (URLLC) to function safely and effectively; even milliseconds of delay or a single lost packet can have catastrophic consequences. This demand extends beyond simply faster data rates; it requires architectures designed from the ground up to prioritize consistent, dependable delivery over sheer throughput. The current generation of wireless technologies, while offering increased bandwidth, often struggles to guarantee the stringent quality of service required by these time-critical operations, creating a significant impetus for innovative communication paradigms.

Conventional communication systems, designed for high data rates rather than guaranteed delivery, often fall short when tasked with Ultra-Reliable Low Latency Communication (URLLC). These established methods typically prioritize maximizing the amount of information transmitted per unit of bandwidth – a measure known as spectral efficiency – but achieve this at the cost of robustness. The inherent limitations in their error correction mechanisms and susceptibility to interference result in unacceptable rates of data loss for time-critical applications like industrial automation, remote surgery, and autonomous vehicles. Furthermore, the delays introduced by retransmission protocols – necessary to ensure reliability – directly contradict the low-latency demands of URLLC, rendering traditional approaches inadequate for services requiring near-instantaneous and dependable data transfer.

Sparse Vector Coding (SVC) presents a compelling advancement in communication efficiency by fundamentally altering how information is transmitted. Instead of densely packed data streams, SVC encodes data within vectors comprised primarily of zero values, with only a select few non-zero elements carrying critical information – a concept akin to sending a message using only the most essential words. This sparsity dramatically reduces transmission overhead, as fewer data points need to be sent and processed, thereby minimizing energy consumption and maximizing spectral efficiency. The technique proves particularly beneficial in scenarios demanding ultra-reliable, low-latency communication, as the streamlined data transmission minimizes delays and enhances the robustness of the signal against noise and interference. By focusing transmission power on a reduced set of significant data points, SVC offers a pathway toward meeting the stringent demands of emerging applications like industrial automation, autonomous vehicles, and remote surgery.
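To make the idea of carrying information in the positions of a few non-zero entries concrete, here is a minimal sketch. It assumes the message is an integer that selects one of the C(N, K) possible position patterns via the combinatorial number system; that mapping, the function names, and the sizes N = 96 and K = 2 are illustrative assumptions, not details taken from the paper.

```python
from math import comb


def bits_per_block(n: int, k: int) -> int:
    """Whole bits that a choice of k active positions out of n can carry."""
    return comb(n, k).bit_length() - 1  # exact floor(log2(C(n, k)))


def message_to_support(msg: int, n: int, k: int) -> list[int]:
    """Map an integer message to k distinct positions in range(n)."""
    if not 0 <= msg < comb(n, k):
        raise ValueError("message does not fit in C(n, k) patterns")
    support = []
    for j in range(k, 0, -1):
        c = j - 1  # comb(j - 1, j) == 0, so this starting point always fits
        while comb(c + 1, j) <= msg:
            c += 1
        support.append(c)
        msg -= comb(c, j)
    return sorted(support)


def support_to_message(support: list[int]) -> int:
    """Inverse of message_to_support."""
    return sum(comb(c, j) for j, c in enumerate(sorted(support), start=1))


def sparse_vector(support: list[int], n: int) -> list[int]:
    """Length-n vector with ones at the active positions and zeros elsewhere."""
    active = set(support)
    return [1 if i in active else 0 for i in range(n)]


if __name__ == "__main__":
    n, k = 96, 2  # illustrative sizes, not taken from the paper
    support = message_to_support(1234, n, k)
    print(bits_per_block(n, k), support, support_to_message(support))
```

With these sizes a block carries 12 bits using only two active positions. A receiver that recovers the positions can invert the mapping with `support_to_message`.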

Refining the Signal: Advanced Sparse Coding Techniques

Sparse Regression Codes (SPARC) constitute a significant development within Sparse Vector Coding (SVC) by employing an efficient decoding method based on approximate message passing (AMP). Unlike traditional decoding algorithms which can be computationally expensive, AMP allows for faster convergence and reduced complexity when estimating the sparse code vector. SPARC achieves this by formulating the decoding process as a regression problem, enabling the use of iterative regression techniques to recover the original signal from the received data. This approach improves the scalability of SVC, particularly for high-dimensional data, and facilitates practical implementation in various signal processing applications. The efficiency of AMP decoding directly contributes to the reduced latency and power consumption associated with SPARC-based communication systems.

Generalized SPARC (GSPARC) builds upon SPARC by refining the construction of sparse regression codes to improve decoding performance. Traditional SPARC employs a fixed, randomly generated matrix for codeword creation; GSPARC modifies this matrix construction, specifically by optimizing the distribution of elements to reduce correlations and improve the conditioning of the regression problem. This optimization leads to a lower error floor and improved bit error rate (BER) performance, particularly at high signal-to-noise ratios (SNRs). Furthermore, variations of GSPARC explore different matrix generation techniques, including structured matrices, to reduce computational complexity while maintaining performance gains over standard SPARC implementations.

Enhanced Sparse Vector Coding (ESVC) improves spectral efficiency by using both the indices of the non-zero coefficients and the values of those coefficients to carry data. Specifically, ESVC employs Quadrature Amplitude Modulation (QAM) to encode information onto the non-zero coefficient values, increasing the amount of data conveyed per sparse code vector. This dual encoding, which uses both the location and the value of each active coefficient, allows ESVC to achieve a higher data rate than systems that encode information only through the indices of active coefficients, thereby improving overall spectral efficiency. The technique offers potential gains in bandwidth utilization without requiring an increase in transmission power.
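The gain from putting modulated symbols on the active positions can be estimated with simple counting. The sketch below assumes K active positions out of N, each carrying one M-ary QAM symbol; the function names and the example sizes are hypothetical and chosen only for illustration.

```python
from math import comb


def svc_bits(n: int, k: int) -> int:
    """Bits carried by the choice of k active positions out of n (indices only)."""
    return comb(n, k).bit_length() - 1  # exact floor(log2(C(n, k)))


def esvc_bits(n: int, k: int, qam_order: int) -> int:
    """Index bits plus log2(qam_order) bits on each of the k active positions."""
    if qam_order < 2 or qam_order & (qam_order - 1):
        raise ValueError("qam_order must be a power of two")
    bits_per_symbol = qam_order.bit_length() - 1
    return svc_bits(n, k) + k * bits_per_symbol


if __name__ == "__main__":
    # Illustrative sizes only: 96 positions, 2 active, 4-QAM on each.
    print(svc_bits(96, 2), esvc_bits(96, 2, 4))
```

Under these assumptions, index-only coding carries 12 bits per block, while adding 4-QAM symbols raises that to 16 bits over the same block length.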

Taming Complexity: Sparse Superposition Codes and Their Limits

Block Orthogonal Sparse Superposition Codes (BOSS) and Block Sparse Vector Coding (Block SVC) enhance decoding performance by employing specifically designed mapping patterns between the transmitted data and the superimposed codewords. These patterns exploit orthogonality, which minimizes interference between superimposed signals, and sparsity, which limits the number of non-zero elements. This combination allows the receiver to separate and decode the individual data streams more effectively, even when signals overlap. The design of these mapping patterns focuses on maximizing the Euclidean distance between the superimposed codewords, thereby reducing the probability of decoding errors and improving the overall reliability of the communication system.

While Block Orthogonal Sparse Superposition Codes (BOSS) and Block SVC improve decoding performance, their computational demands scale with block length. The matrix operations inherent in encoding and decoding, specifically the need for multiple vector-matrix multiplications and inversions, contribute to this complexity. As the block length N increases, the size of the matrices involved grows proportionally, leading to a rise in both the time and resources required for processing. This is particularly noticeable in scenarios with high-dimensional data or real-time constraints, where minimizing computational load is critical for practical implementation.

A low-complexity sparse superimposed coding (SSC) scheme reduces computational load by generating its codebook from a Sparse Bernoulli Codebook Matrix. This matrix uses Bernoulli random variables to determine the placement of non-zero elements, resulting in a significantly sparser codebook than traditional methods. Because zero entries require no arithmetic, encoding and decoding need fewer computations, and complexity falls by up to 50%. Performance evaluations show that this low-complexity scheme maintains decoding performance comparable to conventional SSC approaches, offering a beneficial trade-off between computational efficiency and reliability.
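The link between codebook sparsity and arithmetic cost can be illustrated with a small sketch. It assumes each entry is non-zero with probability `p` and, when non-zero, is +1 or -1 with equal probability; the entry values, the function names, and the matrix sizes are assumptions made for illustration, since the text above only states that Bernoulli variables decide where the non-zero elements go.

```python
import random


def sparse_bernoulli_codebook(m, n, p, seed=0):
    """m x n matrix; each entry is non-zero with probability p (assumed +1/-1)."""
    rng = random.Random(seed)
    return [
        [rng.choice((-1, 1)) if rng.random() < p else 0 for _ in range(n)]
        for _ in range(m)
    ]


def count_nonzeros(codebook):
    """Number of entries that actually take part in any multiplication."""
    return sum(1 for row in codebook for a in row if a != 0)


def encode(codebook, support):
    """Superimpose (add up) the codebook columns listed in `support`."""
    return [sum(row[j] for j in support) for row in codebook]


def correlate(codebook, y):
    """Return (A^T y, multiply-accumulates performed), skipping zero entries."""
    out = [0] * len(codebook[0])
    macs = 0
    for row, yi in zip(codebook, y):
        for j, a in enumerate(row):
            if a != 0:
                out[j] += a * yi
                macs += 1
    return out, macs


if __name__ == "__main__":
    half = sparse_bernoulli_codebook(48, 96, p=0.5)
    y = encode(half, [3, 70])
    _, macs = correlate(half, y)
    print(macs, "multiply-accumulates out of", 48 * 96, "for a dense codebook")
```

In this toy model the correlation step, which dominates matching-pursuit style decoders, costs one multiply-accumulate per non-zero entry, so a codebook with about half its entries set to zero needs about half the operations of a dense one.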

Validating the Promise: Performance and Design Considerations

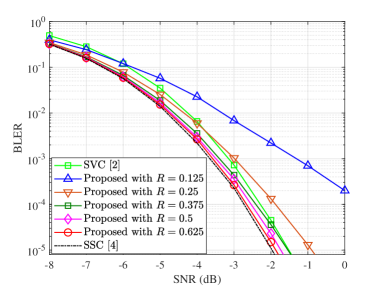

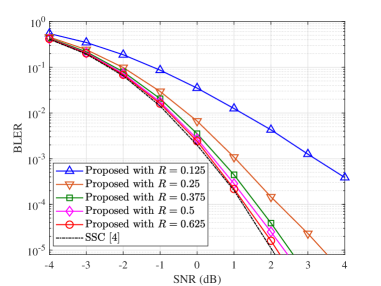

Performance of the proposed low-complexity sparse superimposed coding (SSC) scheme was validated through simulations over Rayleigh fading channels, a common model for wireless environments in which signal strength varies randomly. These simulations assessed the scheme’s ability to transmit data reliably despite fading, demonstrating its robustness under realistic conditions. The Rayleigh channel model allowed the researchers to quantify the block error rate (BLER) achieved by the low-complexity SSC scheme and to benchmark it against conventional SSC approaches. The results indicate that the scheme maintains a high level of performance even when signals are subject to significant random attenuation, a crucial property for practical wireless systems.
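For readers unfamiliar with the channel model, the following sketch shows one common way to simulate flat Rayleigh fading. It assumes block fading (a single coefficient per block), unit-power transmit symbols, and complex Gaussian noise; none of these settings, nor the function names, are confirmed details of the paper's simulation setup.

```python
import math
import random


def rayleigh_coefficient(rng):
    """One fading coefficient h ~ CN(0, 1); |h| is Rayleigh, E[|h|^2] = 1."""
    return complex(rng.gauss(0, 1), rng.gauss(0, 1)) / math.sqrt(2)


def transmit(symbols, snr_db, rng):
    """Return (h, y) with y = h * x + noise for one block-fading realization."""
    h = rayleigh_coefficient(rng)
    noise_power = 10 ** (-snr_db / 10)   # relative to unit signal power
    sigma = math.sqrt(noise_power / 2)   # standard deviation per real dimension
    y = [h * x + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)) for x in symbols]
    return h, y


if __name__ == "__main__":
    rng = random.Random(0)
    h, y = transmit([1, 0, 0, -1], snr_db=10, rng=rng)
    print(abs(h), y)
```

A BLER estimate would repeat this over many independent blocks, decode each received block, and count the fraction decoded incorrectly.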

The proposed low-complexity sparse superimposed coding (SSC) scheme significantly reduces computational demand during both encoding and decoding, achieving up to a 50% complexity decrease compared with conventional SSC. This efficiency comes without substantial performance loss: simulations indicate a degradation of at most 0.2 dB at a target block error rate (BLER) of 10⁻⁵. This small trade-off between complexity and performance positions the scheme as a viable option for resource-constrained environments and latency-critical applications, offering a compelling balance between computational cost and reliable communication.
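A degradation quoted in dB at a fixed BLER target is usually read as the extra signal-to-noise ratio needed to reach that target. Under that reading, which is an assumption here, the figure converts to a linear power ratio as follows.

```python
def db_to_linear(db: float) -> float:
    """Convert a power ratio from decibels to linear scale."""
    return 10 ** (db / 10)


if __name__ == "__main__":
    ratio = db_to_linear(0.2)
    print(f"0.2 dB corresponds to {100 * (ratio - 1):.1f}% more power")
```

So a 0.2 dB penalty corresponds to a little under 5% additional signal power, set against a complexity reduction of up to 50%.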

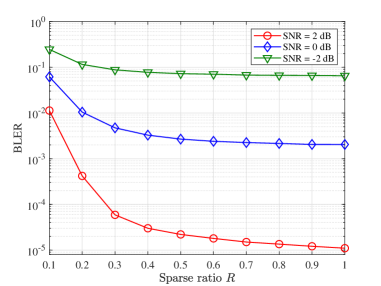

The efficacy of the proposed Low-Complexity SSC scheme hinges on a key adjustable parameter: the Sparsity Factor. This factor directly governs the number of active elements within the signal representation, enabling a nuanced balance between computational demand and achievable performance. A higher Sparsity Factor promotes more comprehensive signal recovery, potentially enhancing accuracy but simultaneously increasing the complexity of both encoding and decoding processes. Conversely, a lower factor reduces computational load, streamlining operations at the cost of potentially sacrificing some degree of signal fidelity. Consequently, careful selection of the Sparsity Factor allows engineers to tailor the scheme’s operation to specific application requirements, optimizing for either minimal complexity in resource-constrained environments or maximized performance where computational resources are less limited.

The proposed scheme relies on Multipath Matching Pursuit (MMP) as its decoding algorithm, reconstructing the transmitted sparse vector from noisy observations. MMP is a greedy sparse-recovery method: rather than committing to a single best index at each iteration, it keeps several candidate support sets (the ‘paths’) alive in parallel, extends each with the indices that correlate most strongly with its residual, and finally selects the candidate that leaves the smallest residual. This approach is particularly effective for sparse signals, where the energy is concentrated in a few non-zero positions, because exploring several paths reduces the chance that one wrong early choice derails recovery under challenging channel conditions. In this way, MMP keeps decoding errors low within the low-complexity sparse superimposed coding system.
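The general idea of multipath matching pursuit can be sketched in a few dozen lines. This is a simplified, real-valued toy under stated assumptions, not the paper's decoder: it expands every candidate support with a fixed number of `branches` (a hypothetical parameter name), performs no pruning, and adds a tiny ridge term so the small least-squares solve never divides by zero.

```python
def solve(G, b):
    """Solve the small square system G z = b by Gaussian elimination."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(G, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def residual(A, y, support):
    """Least-squares fit of y on the columns in `support`; returns y - A_S x_S."""
    if not support:
        return list(y)
    cols = [[row[j] for row in A] for j in support]
    G = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    for i in range(len(G)):
        G[i][i] += 1e-9  # tiny ridge: guards against dependent or zero columns
    b = [sum(u * v for u, v in zip(ci, y)) for ci in cols]
    x = solve(G, b)
    return [yi - sum(xk * ck[i] for xk, ck in zip(x, cols)) for i, yi in enumerate(y)]


def mmp(A, y, k, branches=2):
    """Grow up to branches**k candidate supports of size k; keep the best fit."""
    n = len(A[0])
    candidates = {frozenset()}
    for _ in range(k):
        children = set()
        for s in candidates:
            r = residual(A, y, sorted(s))
            scores = [
                (abs(sum(row[j] * ri for row, ri in zip(A, r))), j)
                for j in range(n) if j not in s
            ]
            scores.sort(key=lambda t: (-t[0], t[1]))  # strongest correlation first
            for _, j in scores[:branches]:
                children.add(s | {j})
        candidates = children

    def energy(s):
        return sum(v * v for v in residual(A, y, sorted(s)))

    return sorted(min(candidates, key=lambda s: (energy(s), sorted(s))))


if __name__ == "__main__":
    # Toy 4 x 6 codebook (rows listed); y is the sum of columns 0 and 1, no noise.
    A = [[1, 0, 1, 0, 1, 0],
         [0, 1, 0, 1, 1, 0],
         [1, 0, -1, 0, 0, 1],
         [0, 1, 0, -1, 0, -1]]
    print(mmp(A, [1, 1, 1, 1], k=2))
```

Setting `branches=1` reduces this sketch to ordinary orthogonal matching pursuit; larger values trade extra computation for a better chance of keeping the true support among the candidates.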

Whispers of the Future: Scaling Towards Next-Generation Communication

The proposed low-complexity sparse superimposed coding (SSC) scheme is a step towards practical Ultra-Reliable Low-Latency Communication (URLLC) systems. By pairing a sparse codebook with the short block lengths that URLLC requires, it strikes a balance between computational complexity and performance. Unlike more demanding coding strategies, the scheme reduces processing overhead while maintaining the high reliability necessary for critical applications such as industrial automation, remote surgery, and autonomous vehicles. The design prioritizes practicality, offering a pathway to deployable URLLC solutions without requiring excessive resources, and paving the way for a new generation of responsive and dependable wireless networks.

This communication scheme prioritizes an equilibrium between computational demands and data throughput, enabling robust performance in challenging applications. By minimizing complexity without sacrificing essential functionality, the approach allows for efficient transmission even with limited resources, a necessity for burgeoning technologies like industrial automation, tactile internet, and remote surgery. The resultant system isn’t simply about achieving high speeds; it’s about maintaining reliable connectivity and responsiveness under real-world constraints, making it a practical solution for deployments where processing power and energy efficiency are paramount. This balance ultimately unlocks the potential for widespread adoption of ultra-reliable low-latency communication (URLLC) in diverse and demanding scenarios.

The reported results show a performance advantage for the proposed low-complexity SSC scheme in a specific regime: it outperforms conventional sparse vector coding (SVC) when the sparsity factor exceeds 0.375. This improvement is attributed to the scheme’s efficient handling of zero-valued entries, which reduces overhead and supports reliable recovery in highly sparse settings of the kind common in sensor networks and emerging machine-to-machine communication. Beyond simply outperforming SVC, this threshold marks the operating region where the scheme’s architectural advantages become most pronounced, suggesting its suitability for applications where sparsity is inherent and reliable communication is paramount.

Continued development of this communication scheme benefits from investigating dynamic sparsity control, allowing the system to intelligently adjust data representation based on channel conditions and data importance. This adaptive approach promises to optimize the trade-off between transmission efficiency and reliability, particularly in fluctuating wireless environments. Simultaneously, exploration of advanced decoding algorithms – potentially leveraging machine learning techniques – could significantly improve the recovery of transmitted information, even under challenging noise levels or interference. Such innovations hold the potential to push the boundaries of URLLC performance, enabling more robust and dependable communication for critical applications and paving the way for increasingly complex future networks.

The pursuit of reduced complexity, as demonstrated by this scheme for sparse vector coding, feels less like engineering and more like a delicate balancing act. It’s an attempt to coax order from the inherent chaos of transmission – to distill signal from noise with the fewest possible rituals. As Bertrand Russell observed, “The whole problem with the world is that fools and fanatics are so confident in their own opinions.” This work doesn’t claim certainty, but rather a pragmatic reduction of computational burden – a carefully constructed spell designed to improve block error rates in the face of unpredictable channels. The sparsity factor isn’t a solution, merely a constraint, a way to momentarily appease the chaotic forces at play.

What Lies Beyond?

The pursuit of low-complexity in sparse coding, as demonstrated, feels less like a destination and more like a carefully negotiated truce with the inevitable. This work offers a pragmatic reduction in computational burden, yet the core tension remains: information demands a price, and simplicity is merely a redirection of that cost. Future iterations will undoubtedly explore the limits of this redirection, probing how aggressively the codebook matrix can be pruned before the whispers of the signal become indistinguishable from the noise.

The assumption of a fixed sparsity factor, while easing implementation, feels… convenient. Real channels aren’t polite enough to adhere to such constraints. A truly adaptive scheme, one that dynamically adjusts sparsity based on channel state and data criticality, would be a compelling, if considerably more chaotic, direction. Such a system wouldn’t solve the problem, of course; it would merely shift the battleground to the realm of efficient adaptation algorithms.

Ultimately, this research serves as a reminder: low-latency communication isn’t about achieving zero error; it’s about managing acceptable loss. The true measure of progress won’t be found in ever-more-complex codes, but in a refined understanding of what constitutes ‘acceptable’ in a world where perfection is a statistical illusion. The errors, after all, are where the truth resides.

Original article: https://arxiv.org/pdf/2601.16012.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-23 16:13