Author: Denis Avetisyan

A new foundation model, THOR, promises to unlock greater insights from diverse Earth observation data, offering improved efficiency and adaptability for climate and societal applications.

THOR unifies multi-sensor Earth observation data using a compute-adaptive patching strategy, achieving state-of-the-art data efficiency and flexibility.

Current Earth observation models struggle with the heterogeneity of satellite data and inflexible deployment options, limiting their real-world impact. This paper introduces ‘THOR: A Versatile Foundation Model for Earth Observation Climate and Society Applications’, a compute-adaptive foundation model designed to unify data from multiple Copernicus Sentinel satellites, processing resolutions from 10 to 1000 meters within a single framework. By employing a novel randomized patching and input size strategy, THOR achieves state-of-the-art performance and enables dynamic trade-offs between computational cost and feature resolution without retraining. Will this flexible architecture unlock new possibilities for data-efficient climate and societal applications, particularly in data-limited scenarios?

The Expanding Frontier of Earth Observation: A Challenge of Scale

Earth observation has entered an era of unprecedented data abundance, yet effectively harnessing this potential presents significant challenges. Traditional methods, designed for smaller datasets, now grapple with the sheer volume and complexity of information streaming from multiple sensors – like those aboard the Sentinel constellation. Analyzing the terabytes of data that arrive each day requires computational infrastructure that is often cost-prohibitive or simply unavailable, and the inherent heterogeneity of multi-sensor data – differing resolutions, spectral bands, and formats – necessitates extensive pre-processing and harmonization. This complexity isn’t merely a matter of storage; it also slows analysis and introduces potential errors, hindering timely insights into dynamic Earth systems and limiting the ability to respond effectively to rapidly evolving environmental conditions.

The influx of data from constellations like Sentinel-1, Sentinel-2, and Sentinel-3 presents a significant computational bottleneck for Earth observation. While these satellites offer unprecedented coverage and revisit times, fully exploiting their potential demands substantial processing power and storage capacity. Often, a trade-off occurs: to manage the data volume, analysts must reduce the spatial resolution, effectively diminishing the detail captured, or accept delays in processing, hindering timely responses to dynamic events. This limitation stems from the sheer scale of the datasets – terabytes are routinely generated daily – and the complexity of algorithms needed to extract meaningful information. Consequently, researchers and operational users frequently face the challenge of balancing data richness with computational feasibility, requiring innovative approaches to data reduction, parallel processing, and algorithmic optimization.

Current Earth observation modeling approaches often struggle with practical application due to a rigidity in handling diverse analytical needs and geographic extents. Many established frameworks are designed for specific resolutions or limited areas, necessitating substantial rework – or complete model rebuilding – when applied to different scales or data types. This inflexibility creates a bottleneck in processing the constant stream of data from modern sensors; a model optimized for regional forest monitoring, for example, may be wholly unsuitable for tracking urban expansion at a continental level, or for utilizing higher-resolution imagery for detailed crop health assessment. The inability to dynamically adjust model parameters and algorithms to match varying data demands not only increases computational costs and processing times, but also limits the potential for extracting timely and actionable insights from the wealth of Earth observation data now available.

THOR: A Foundation Model for Earth Observation – A Paradigm Shift

THOR represents a new approach to Earth Observation (EO) data analysis by functioning as a foundation model, a paradigm shift from task-specific algorithms. Existing foundation models, typically trained on natural language or images, lack the capacity to natively process the multi-spectral and spatio-temporal characteristics of EO data. THOR addresses this limitation by providing a unified framework for diverse EO sources – including optical imagery, radar data, and elevation models – enabling transfer learning across various downstream tasks such as land cover classification, object detection, and change monitoring. This unification facilitates the development of more generalizable and adaptable EO applications, reducing the need for extensive task-specific training and labeled datasets.

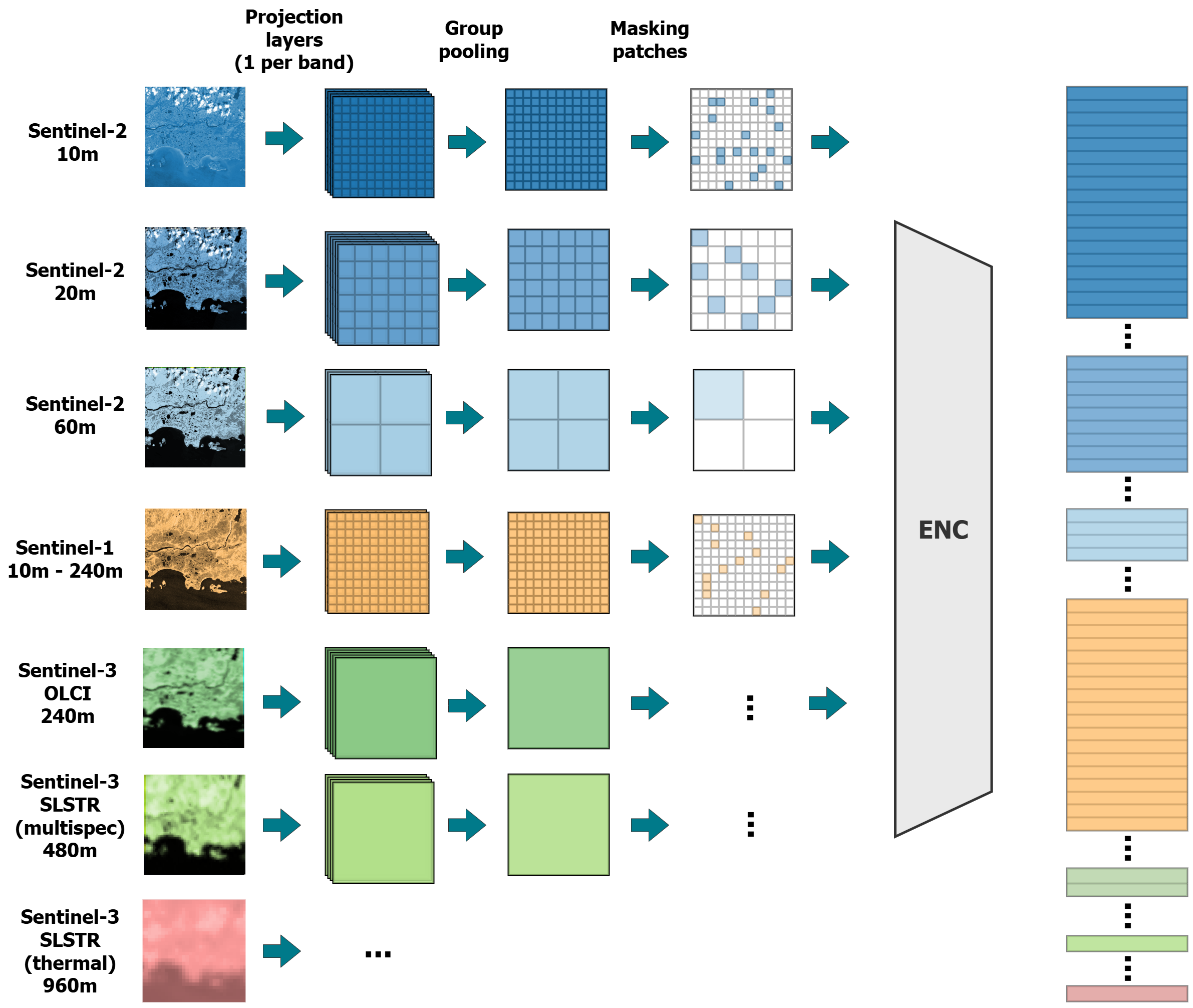

THOR’s foundational architecture is a Vision Transformer (ViT), a neural network leveraging attention mechanisms to process Earth Observation (EO) data. Unlike convolutional neural networks that rely on local receptive fields, ViTs divide an image into a sequence of patches, treating each patch as a “token” similar to words in natural language processing. Self-attention layers then weigh the relationships between these patches, allowing the model to capture long-range dependencies and contextual information within the image. This approach enables robust feature extraction, identifying relevant patterns and characteristics across diverse EO datasets, and improving performance on downstream tasks by focusing on the most informative areas of the input imagery.
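The patch-to-token step described above can be sketched in a few lines. This is a minimal illustration in plain Python, not THOR's implementation: the function name `patchify`, the toy 4×4 two-channel image, and the patch size of 2 are all assumptions chosen for readability.

```python
def patchify(image, patch):
    """Split an H x W x C nested-list image into a sequence of flattened patch tokens."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    tokens = []
    # Walk the image in raster order, one patch at a time.
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            token = []
            for row in range(top, top + patch):
                for col in range(left, left + patch):
                    token.extend(image[row][col])  # append every channel value of this pixel
            tokens.append(token)
    return tokens


# Hypothetical toy input: a 4x4 image with 2 channels, each pixel storing (row, col).
image = [[[r, c] for c in range(4)] for r in range(4)]
tokens = patchify(image, patch=2)
print(len(tokens), len(tokens[0]))  # 4 tokens, each holding 2 * 2 * 2 = 8 values
```

In a real ViT each flattened patch is then passed through a learned linear projection before the self-attention layers; that projection is omitted here.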

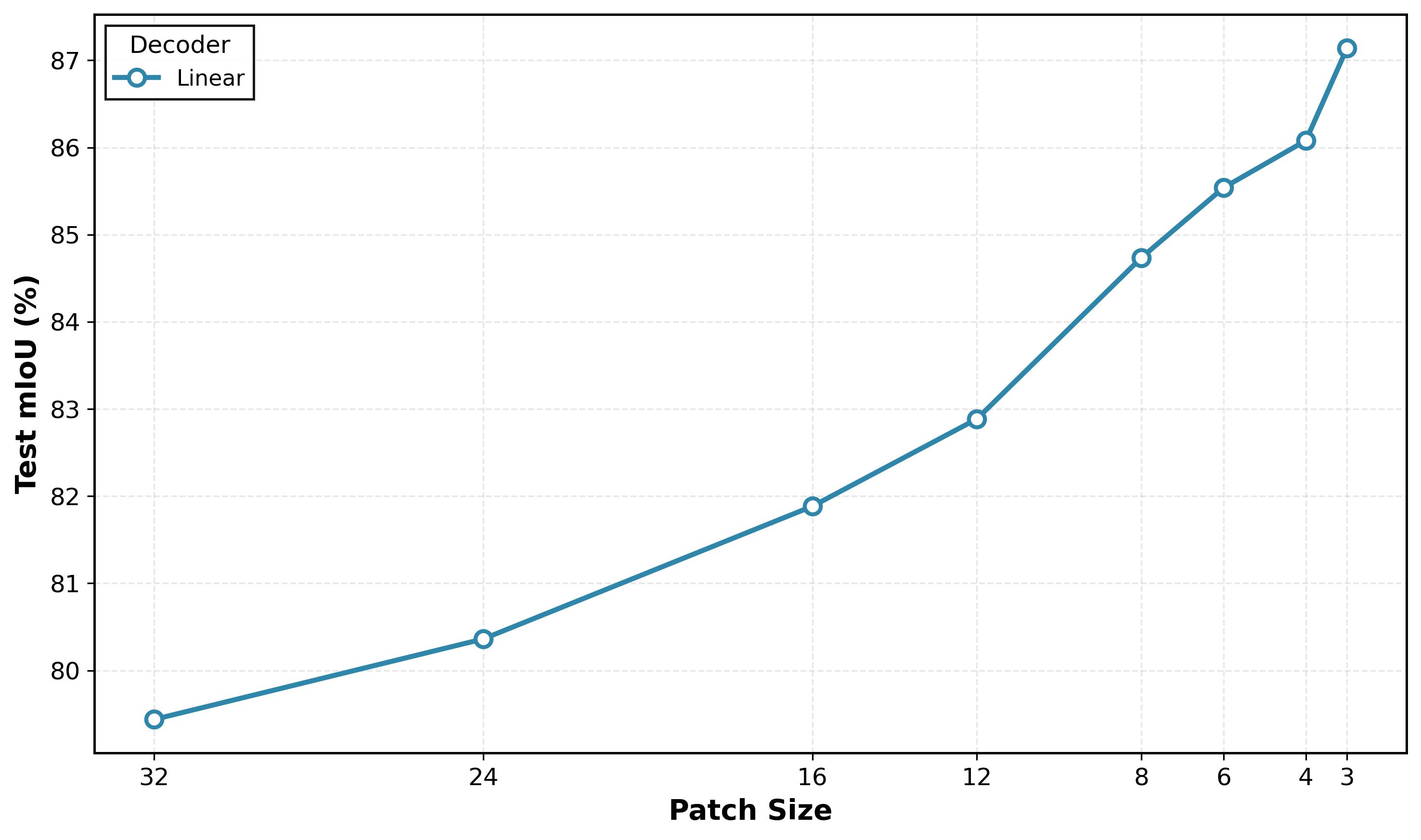

THOR incorporates flexible patching as a mechanism to manage computational load and support multi-resolution Earth Observation (EO) data. Traditional Vision Transformer (ViT) models utilize fixed-size patches for image processing; however, THOR dynamically adjusts patch sizes during operation. This is achieved by allowing the model to process input data with varying granularities, reducing the number of patches – and therefore computational requirements – when dealing with lower-resolution imagery or larger areas. Conversely, higher-resolution data benefits from smaller patch sizes for more detailed feature extraction. This adaptive approach enables THOR to maintain performance across a broad spectrum of EO data characteristics without requiring extensive retraining or architectural modifications.
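To make the cost argument concrete, the sketch below counts tokens for a few patch sizes and compares the resulting self-attention cost, which grows with the square of the token count. The 128-pixel input size and the candidate patch sizes are hypothetical values, not figures from the paper.

```python
def token_count(image_size, patch_size):
    """Number of patch tokens for a square image tiled by square patches."""
    if image_size % patch_size:
        raise ValueError("patch size must divide the image size")
    per_side = image_size // patch_size
    return per_side * per_side


def relative_attention_cost(image_size, patch_size, reference_patch):
    """Self-attention cost relative to a reference patch size (cost scales as tokens squared)."""
    tokens = token_count(image_size, patch_size)
    reference = token_count(image_size, reference_patch)
    return (tokens / reference) ** 2


IMAGE_SIZE = 128  # hypothetical input side length in pixels
for patch in (4, 8, 16):
    print(patch, token_count(IMAGE_SIZE, patch),
          relative_attention_cost(IMAGE_SIZE, patch, reference_patch=16))
# 4  -> 1024 tokens, 256.0x the attention cost of 16-pixel patches
# 8  ->  256 tokens,  16.0x
# 16 ->   64 tokens,   1.0x
```

Halving the patch size quadruples the token count and multiplies the attention cost by sixteen, which is why patch size is such an effective lever for trading detail against compute.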

Advanced Techniques: Unlocking Flexibility and Spatial Understanding

THOR utilizes GSD-aware 2D ALiBi (Attention with Linear Biases) encoding to maintain consistent spatial understanding regardless of variations in Ground Sampling Distance (GSD). Traditional positional encodings struggle when imagery is captured at differing resolutions or altitudes; ALiBi addresses this by adding a penalty proportional to the distance between two positions directly to the attention scores, rather than adding position embeddings to the tokens. This allows the model to extrapolate spatial relationships, so that objects and their relative positions are interpreted consistently even as GSD changes. The 2D implementation specifically focuses on preserving spatial information within the image plane, which is crucial for tasks like semantic segmentation and object detection in geospatial data.
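One way to picture a GSD-aware 2D distance bias is sketched below. The article does not give THOR's exact formula, so this is an assumption: the bias is taken to be the negative of a slope times the Euclidean distance between patch centers, measured in ground meters (grid distance × patch size × GSD). The function name `alibi_2d_bias` and the slope value are hypothetical; in ALiBi-style schemes the slope is typically fixed per attention head.

```python
import math


def alibi_2d_bias(grid_side, patch_size, gsd_m, slope):
    """Return an N x N matrix of attention biases, where N = grid_side ** 2.

    bias[i][j] = -slope * ground distance in meters between patches i and j.
    This scaling by GSD is an illustrative assumption, not the paper's formula.
    """
    meters_per_patch = patch_size * gsd_m
    coords = [(r, c) for r in range(grid_side) for c in range(grid_side)]
    return [
        [-slope * meters_per_patch * math.hypot(ri - rj, ci - cj) for (rj, cj) in coords]
        for (ri, ci) in coords
    ]


# Two hypothetical inputs covering the same ground footprint per patch (40 m):
fine = alibi_2d_bias(grid_side=2, patch_size=4, gsd_m=10.0, slope=0.01)
coarse = alibi_2d_bias(grid_side=2, patch_size=2, gsd_m=20.0, slope=0.01)
print(fine[0][1], coarse[0][1])  # identical biases for identical ground distances
```

Because the bias depends on ground distance rather than pixel index, two patches that are 40 m apart receive the same penalty whether they come from 10 m or 20 m imagery, which is the property the text attributes to GSD-aware encoding.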

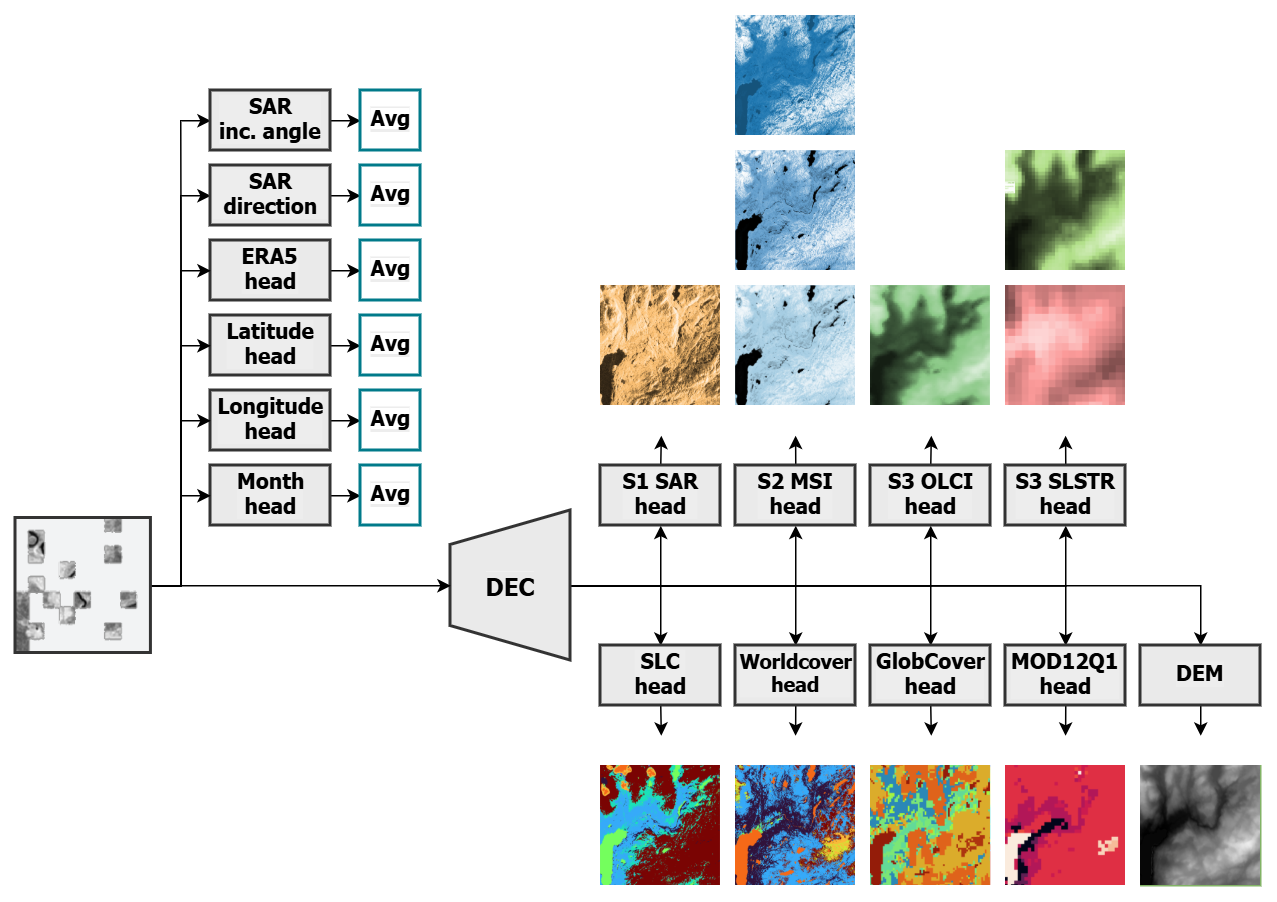

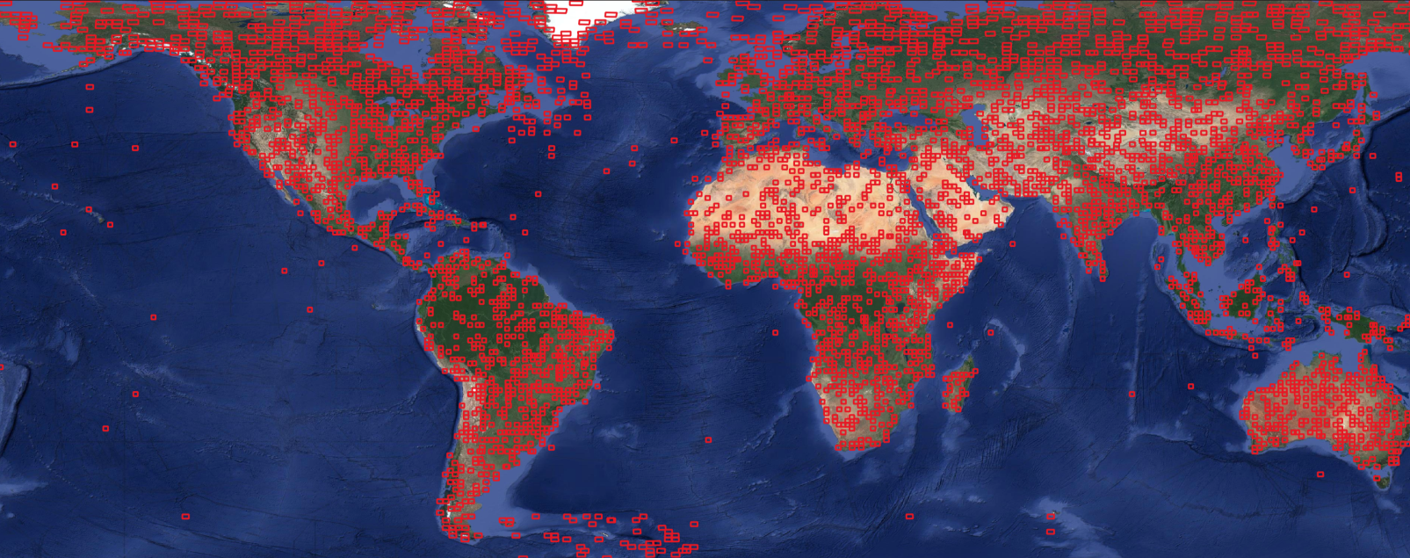

The THOR model utilizes Masked Autoencoders (MAE) for pre-training, a self-supervised learning technique where portions of the input data are masked and the model is trained to reconstruct the missing information. This pre-training phase is conducted on the THOR Pretrain Dataset, a large-scale collection of multi-sensor imagery comprising diverse environments and object configurations. The dataset’s scale and variety enable the model to learn robust feature representations without requiring manual annotations, improving performance and generalization capabilities for downstream tasks. Reconstructing masked regions forces the model to learn the contextual structure of the imagery, since the hidden content can only be inferred from what remains visible.
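The masking step at the heart of MAE pre-training can be sketched as follows. The 75% mask ratio is the default from the original MAE work on natural images; the article does not state the ratio THOR uses, so treat it, along with the function name `random_mask`, as an assumption.

```python
import random


def random_mask(num_tokens, mask_ratio, seed=None):
    """Randomly split token indices into a visible set and a masked set."""
    rng = random.Random(seed)
    num_masked = int(num_tokens * mask_ratio)
    order = list(range(num_tokens))
    rng.shuffle(order)
    masked = sorted(order[:num_masked])
    visible = sorted(order[num_masked:])
    return visible, masked


# Hypothetical example: 16 patch tokens, 75% of them hidden from the encoder.
visible, masked = random_mask(num_tokens=16, mask_ratio=0.75, seed=0)
print(len(visible), len(masked))  # 4 visible tokens, 12 masked tokens
```

During pre-training the encoder sees only the visible tokens, and a lightweight decoder is trained to reconstruct the pixel content of the masked ones.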

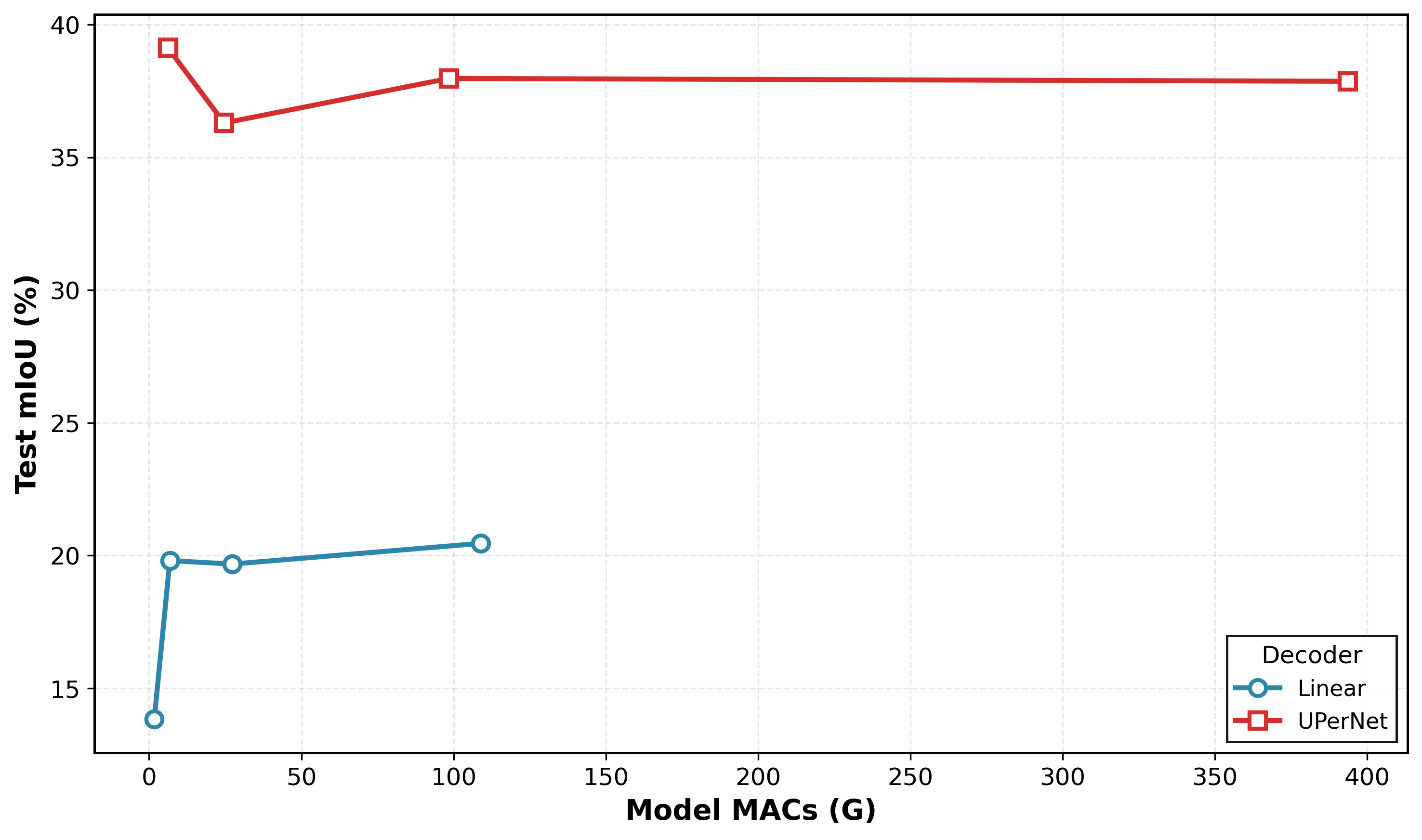

The THOR framework demonstrates versatility through its support for diverse decoder architectures, ranging from single linear layers to more complex networks such as UperNet. This adaptability enables application to a variety of downstream tasks, including semantic segmentation. Quantitative results indicate state-of-the-art performance, achieving a mean Intersection over Union (mIoU) score of 58.63% when utilizing a 4×4 patch size for evaluation. This performance benchmark establishes THOR as a competitive solution for scene understanding tasks requiring robust and flexible decoding capabilities.
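For readers unfamiliar with the metric, mean Intersection over Union can be computed as in the sketch below. It is a minimal per-sample version on flat label lists; benchmark implementations usually accumulate intersections and unions over the whole dataset before averaging. The labels in the example are invented for illustration.

```python
def mean_iou(pred, target, num_classes):
    """Mean Intersection over Union across classes present in pred or target."""
    ious = []
    for cls in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
        union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
        if union:  # skip classes that appear in neither prediction nor ground truth
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical predicted and ground-truth labels for six pixels, three classes.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
score = mean_iou(pred, target, num_classes=3)
print(round(score, 4))  # per-class IoU is 1/3, 2/3 and 1/2, so the mean is 0.5
```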

Multi-Sensor Synergy and Compute-Adaptive Power: Expanding Analytical Horizons

THOR distinguishes itself through sophisticated multi-sensor integration, expertly synthesizing data from a diverse array of Earth observation platforms including the Sentinel-1 radar, Sentinel-2 and Sentinel-3 optical imagery, and supplementary datasets. This synergy isn’t simply a concatenation of information; the model actively learns to correlate signals across these different modalities, extracting complementary insights that would be obscured when analyzing each source in isolation. By intelligently fusing these streams of data, THOR constructs a more robust and nuanced understanding of complex environmental phenomena, improving the reliability and accuracy of its analyses and ultimately enabling more informed decision-making in areas like land cover classification and change detection.

THOR demonstrates a significant advancement in efficient remote sensing through its compute-adaptive inference capabilities. This allows the model to dynamically adjust its computational load, creating a flexible balance between processing speed and analytical precision. Crucially, this adaptability proves invaluable in resource-limited settings, such as deployment on edge devices or in regions with limited bandwidth. Validation on the PANGAEA benchmark reveals that THOR achieves a mean Intersection over Union (mIoU) of 58.63% – a competitive score – while utilizing only 10% of the standard training data, showcasing its data efficiency and potential for rapid deployment and analysis even with minimal resources.
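A deployment-side view of this trade-off might look like the sketch below: given a token budget imposed by the hardware, pick the finest patch size that still fits. The selection rule, the function name `pick_patch_size`, and all numbers are hypothetical; the article does not describe how a patch size is chosen at inference time.

```python
def pick_patch_size(image_size, candidate_patches, max_tokens):
    """Return the smallest candidate patch size whose token count fits the budget."""
    for patch in sorted(candidate_patches):
        if image_size % patch:
            continue  # skip sizes that do not tile the image evenly
        tokens = (image_size // patch) ** 2
        if tokens <= max_tokens:
            return patch
    raise ValueError("no candidate patch size fits the token budget")


# Hypothetical budgets for a 128-pixel input: a workstation versus an edge device.
print(pick_patch_size(128, [4, 8, 16], max_tokens=1024))  # 4: finest features fit the budget
print(pick_patch_size(128, [4, 8, 16], max_tokens=100))   # 16: only the coarsest grid fits
```

The same trained weights serve both cases; only the patch size, and therefore the token count, changes.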

The THOR model significantly enhances its analytical capabilities through the integration of ERA5-Land data, incorporating crucial land surface variables like temperature, soil moisture, and vegetation indices for more comprehensive environmental assessments. This strategic data inclusion is paired with a notable advancement in model efficiency; THOR achieves comparable performance utilizing a mere 24.6 thousand parameters with a linear decoder, roughly 930 times fewer than the 22.9 million parameters required by a traditional U-Net decoder. This parameter reduction not only minimizes computational demands and memory footprint, but also facilitates deployment on resource-constrained platforms without substantial performance loss, representing a key innovation in scalable remote sensing analysis.

The development of THOR exemplifies a pursuit of fundamental truths within complex systems. It’s not merely about achieving high accuracy on benchmark datasets, but establishing a robust and adaptable framework for understanding Earth Observation data. As Niels Bohr stated, “The opposite of trivial is not obvious.” This sentiment resonates deeply with the core innovation of THOR – its compute-adaptive patching strategy. By dynamically adjusting computational resources, the model reveals underlying invariants in multi-sensor data, moving beyond superficial correlations to expose the essential relationships driving Earth’s climate and societal dynamics. If it feels like magic, one hasn’t revealed the invariant, and THOR actively seeks to make those invariants transparent.

What Lies Ahead?

The introduction of THOR represents a necessary, if not entirely surprising, progression. The relentless accumulation of Earth Observation data, a digital deluge, demands architectures transcending simple empirical performance. While current metrics undoubtedly reflect a step forward in data efficiency and multi-modal integration, the underlying challenge remains: correlation is not causation. The model elegantly unifies disparate data streams, yet a provable link between observed patterns and genuine physical phenomena is conspicuously absent. Future work must prioritize establishing such demonstrable connections, moving beyond predictive accuracy to verifiable understanding.

The compute-adaptive patching strategy, while resourceful, hints at a deeper limitation. Reducing computational burden through selective attention is a pragmatic necessity, but it skirts the core issue of algorithmic complexity. A truly elegant solution would derive insight without sacrificing exhaustive analysis, a feat demanding novel mathematical formulations, not merely efficient approximations. The pursuit of scalability should not overshadow the imperative for logical rigor.

In the chaos of data, only mathematical discipline endures. The field now faces a critical juncture: will it continue down the path of increasingly complex empiricism, or will it embrace the uncomfortable necessity of provable, first-principles reasoning? The answer will determine whether these foundation models become tools of genuine scientific discovery, or merely sophisticated instruments for pattern recognition.

Original article: https://arxiv.org/pdf/2601.16011.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-24 13:58