Author: Denis Avetisyan

Researchers are exploring quantum optimization techniques to build more resilient and adaptable networks for unmanned aerial vehicles.

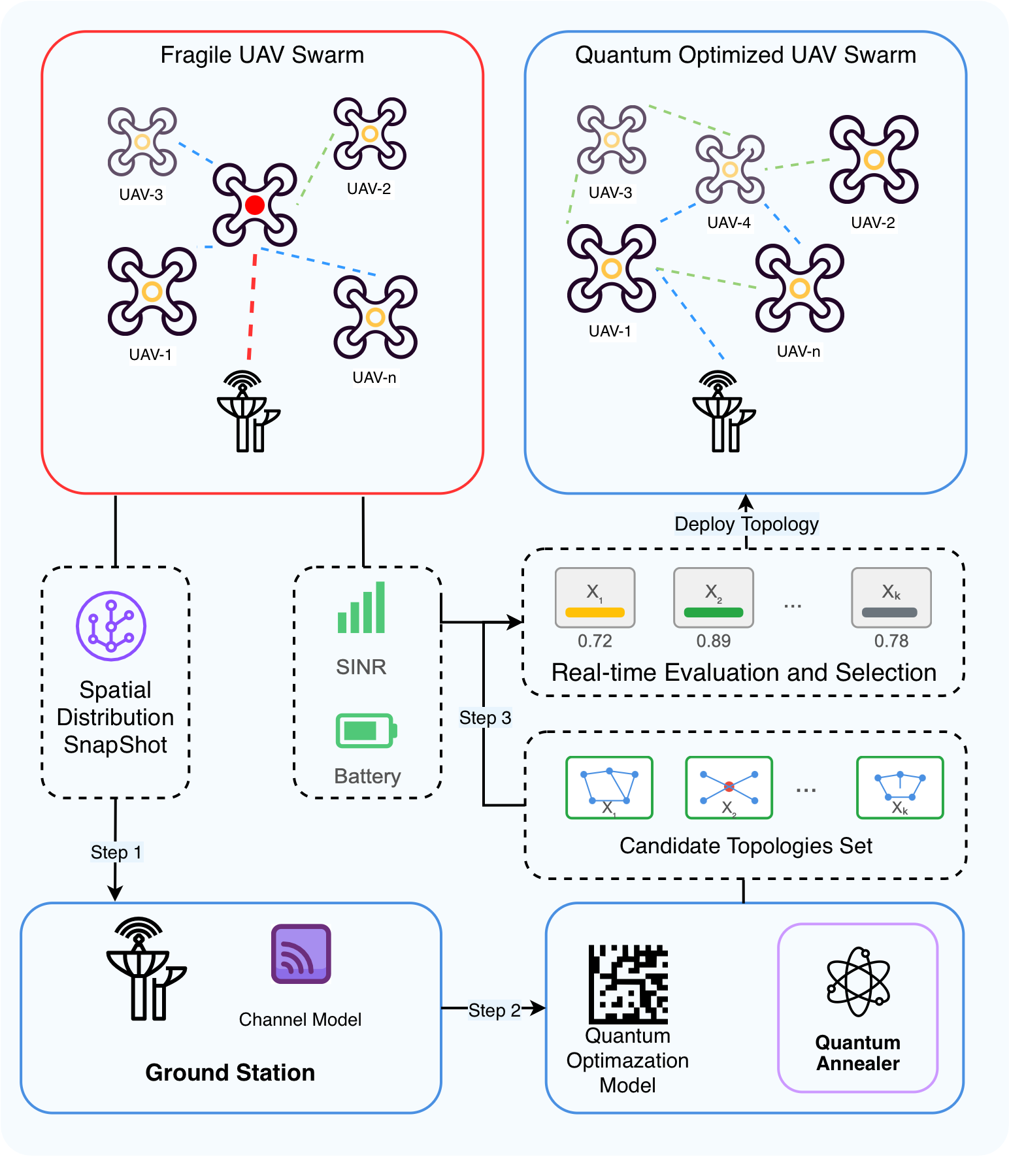

A novel two-stage framework leverages quantum annealing to optimize UAV network topology for improved performance and robustness in dynamic environments.

Maintaining reliable connectivity in rapidly changing Unmanned Aerial Vehicle (UAV) networks presents a significant challenge to traditional topology control methods. This paper, ‘Quantum Takes Flight: Two-Stage Resilient Topology Optimization for UAV Networks’, introduces a novel quantum-assisted framework to address this limitation by efficiently generating and selecting robust network topologies. Leveraging quantum annealing to explore a diverse solution space, the proposed approach demonstrates improved performance retention and objective value compared to classical methods in dynamic environments. Could this quantum-inspired resilience pave the way for more adaptable and dependable next-generation UAV communication networks?

The Inherent Volatility of Dynamic Networks

Contemporary network infrastructures, notably those built on dynamic Unmanned Aerial Vehicle (UAV) networks, are characterized by inherent and persistent topological volatility. This isn’t simply a matter of occasional adjustments; rather, the very structure of the network is in a state of flux, driven by the mobility of the UAVs themselves and by external environmental factors such as wind, obstacles, and signal interference. Each UAV’s movement can force a reconfiguration of the network, creating and dissolving connections in real time. Furthermore, atmospheric conditions and physical barriers can unpredictably alter signal propagation, dynamically reshaping the communication landscape. This constant reshaping presents a fundamental challenge to maintaining stable and reliable connectivity, demanding network designs that anticipate and accommodate these frequent shifts rather than resisting them.

The inherent dynamism of modern networks introduces a critical vulnerability known as network fragility. Traditional network designs, predicated on static topologies, struggle to maintain consistent performance when faced with frequent shifts in connectivity. Each node joining or leaving the network, or even temporary signal obstructions, can create single points of failure or drastically increase communication latency. This susceptibility to disruption isn’t merely a matter of inconvenience; it directly impacts the reliability of data transmission and the overall functionality of the network, potentially leading to complete communication breakdowns – a particularly concerning issue for applications demanding continuous operation, such as those relying on Dynamic UAV Networks or critical infrastructure monitoring. Consequently, networks built on inflexible architectures exhibit significantly reduced resilience in the face of real-world operating conditions.

Reliable communication in dynamic networks hinges on a shift from static configurations to proactive topology management. Instead of relying on pre-defined routes and connections, these networks demand systems capable of continuous monitoring and real-time adaptation. This involves algorithms that predict network shifts – caused by UAV movement or environmental interference – and preemptively adjust routing protocols, bandwidth allocation, and even node positioning. Such a responsive approach minimizes disruption by establishing alternative pathways before failures occur, ensuring consistent connectivity and data transmission. The goal isn’t simply to react to change, but to anticipate it, creating a self-healing network infrastructure resilient to the inherent instability of dynamic environments.

Decoupling Optimization from Real-Time Decision-Making

A two-stage framework is proposed for dynamic topology management to improve responsiveness and scalability. This approach decouples the computationally intensive optimization process from the time-critical decision-making required in dynamic network environments. The framework operates by first performing offline optimization to generate a set of viable network topologies, and subsequently utilizing these pre-computed options for rapid selection during online operation based on current network state. This separation allows for more complex optimization algorithms to be employed offline without impacting real-time performance, and enables faster adaptation to changing network conditions by reducing the computational burden during runtime.

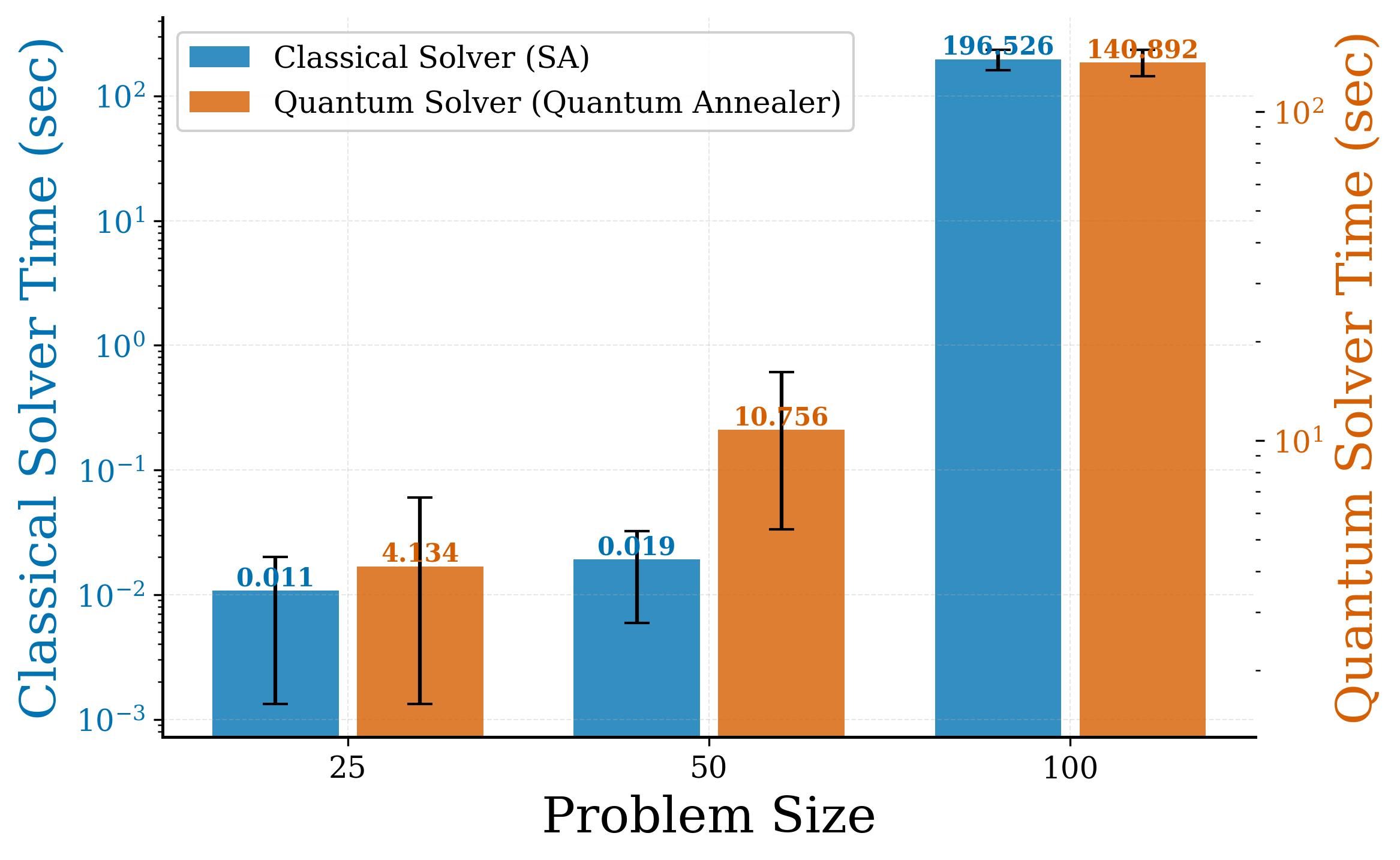

The Offline Stage uses Quantum Annealing (QA) to generate a set of candidate network topologies. The topology problem is encoded as a binary optimization problem (the paper uses a QUBO formulation), and the annealer is sampled repeatedly, with each run returning a low-energy configuration that corresponds to one potential network topology. Because the annealer returns a spread of low-energy samples rather than following a single search trajectory, it can surface diverse topologies that a classical heuristic might miss. The resulting candidate set is then available for rapid assessment during the Online Stage, reducing latency in dynamic topology management.
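
To make the encoding step concrete, here is a minimal sketch of how a topology choice can be written as a QUBO. It is not the paper's formulation, which this article does not reproduce. The toy objective (pick exactly `k` links at minimum total cost), the link costs, the `penalty` weight, and the function names are all assumptions for illustration, and an exhaustive search stands in for the quantum annealer.

```python
from itertools import product

def build_qubo(costs, k, penalty):
    """Toy QUBO: minimise sum(cost_e * x_e) + penalty * (sum(x_e) - k)**2.

    Since x_e is binary, x_e**2 == x_e, so the squared term expands to
    penalty * (1 - 2k) on each diagonal entry and 2 * penalty on each pair.
    The constant penalty * k**2 does not affect the argmin and is dropped.
    """
    n = len(costs)
    Q = {}
    for i in range(n):
        Q[(i, i)] = costs[i] + penalty * (1 - 2 * k)
        for j in range(i + 1, n):
            Q[(i, j)] = 2 * penalty
    return Q

def energy(Q, x):
    """QUBO energy x^T Q x for an upper-triangular Q stored as a dict."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def brute_force(Q, n):
    """Exhaustive search: a classical stand-in for the annealer (tiny n only)."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))

# Hypothetical costs for four candidate links; keep exactly two of them.
costs = [4.0, 1.0, 3.0, 2.0]
Q = build_qubo(costs, k=2, penalty=10.0)
best = brute_force(Q, len(costs))
print(best, energy(Q, best))  # (0, 1, 0, 1) -37.0
```

The two cheapest links are selected, and the penalty term is what keeps the solver from choosing fewer or more than two. On real hardware the dictionary `Q` would be handed to an annealer's sampler instead of `brute_force`.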

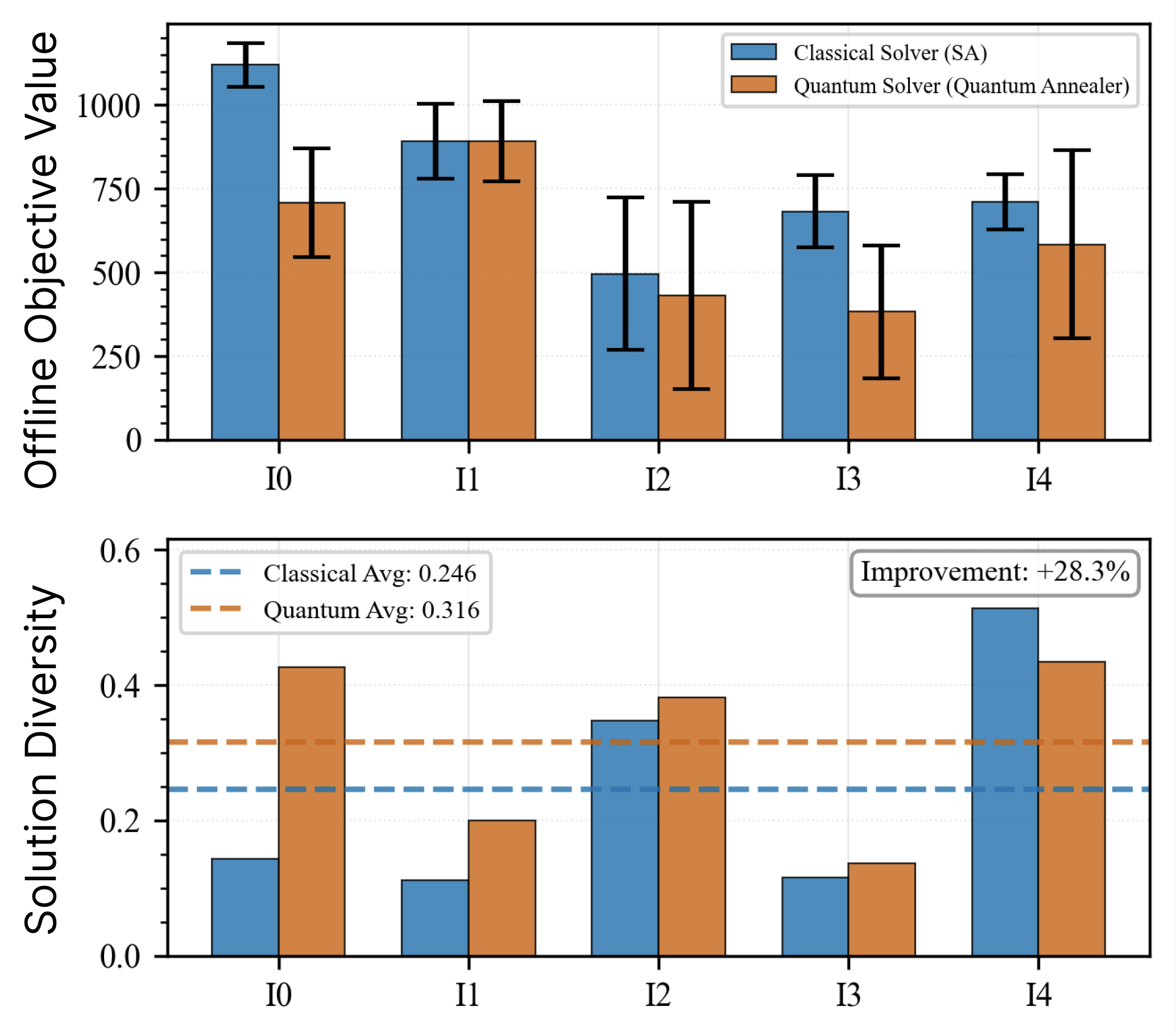

The diversity of candidate topologies generated by the Quantum Annealing (QA) process is enhanced through an Iterative Similarity Penalty mechanism. This mechanism functions by assessing the similarity between newly generated topologies and those already present in the candidate set, applying a penalty to topologies exhibiting high similarity. This iterative process encourages the exploration of a wider range of topological solutions, resulting in a demonstrated 28.3% increase in solution diversity when compared to the performance of simulated annealing. The increased diversity improves the likelihood of identifying a topology optimally suited to dynamic network conditions during the online selection stage.
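
The loop below sketches one way such an iterative penalty could work. The article does not give the paper's similarity measure, so this version assumes a simple one: after each solution is found, every link it uses becomes more expensive by a hypothetical weight `lam`, which discourages the next solution from reusing the same links. The toy QUBO and the brute-force solver are stand-ins, not the authors' setup.

```python
from itertools import product

def energy(Q, x):
    """QUBO energy for an upper-triangular Q stored as {(i, j): weight}."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def solve(Q, n):
    """Exhaustive search, standing in for one annealer call (tiny n only)."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))

def diverse_candidates(Q, n, count, lam):
    """Collect `count` solutions, penalising links used by earlier ones."""
    Q = dict(Q)  # work on a copy so the caller's QUBO is untouched
    found = []
    for _ in range(count):
        x = solve(Q, n)
        found.append(x)
        for i, bit in enumerate(x):
            if bit:  # assumed similarity penalty: reuse of link i costs lam more
                Q[(i, i)] = Q.get((i, i), 0.0) + lam
    return found

# Toy QUBO: choose exactly 2 of 4 links (hypothetical costs, penalty weight 10).
costs = [4.0, 1.0, 3.0, 2.0]
Q = {(i, i): c + 10.0 * (1 - 2 * 2) for i, c in enumerate(costs)}
Q.update({(i, j): 20.0 for i in range(4) for j in range(i + 1, 4)})

candidates = diverse_candidates(Q, n=4, count=3, lam=2.5)
print(candidates)  # [(0, 1, 0, 1), (0, 1, 1, 0), (1, 0, 0, 1)]
```

Without the penalty, every call would return the same optimum. With it, later candidates trade a little objective value for structural difference, which is the property the online stage relies on.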

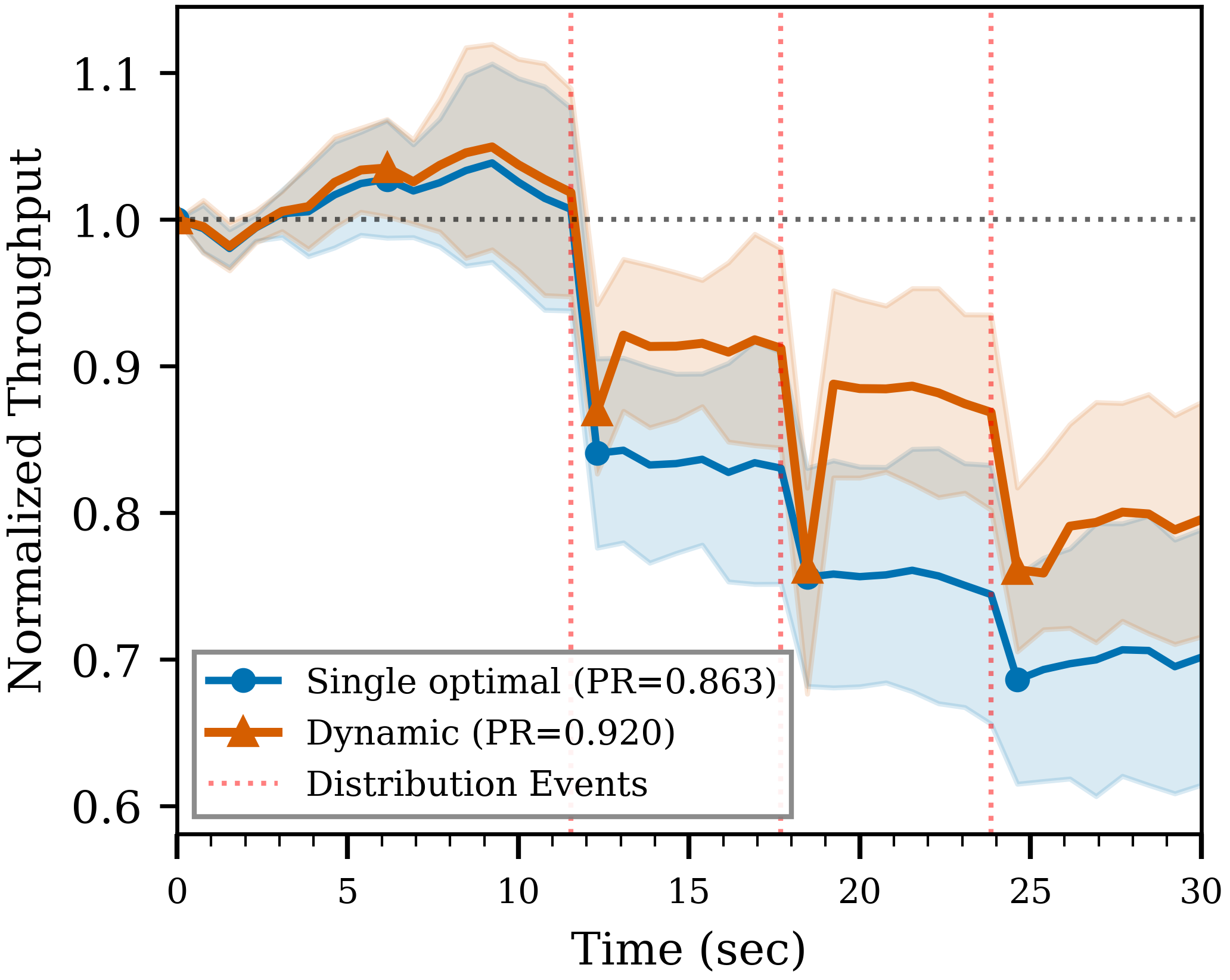

The Online Stage of the Two-Stage Framework leverages pre-computed candidate topologies to facilitate rapid decision-making under dynamic network conditions. Rather than recalculating optimal topologies in response to changing conditions, this stage selects from the diverse set generated during the Offline Stage, significantly reducing computational latency. This approach allows the system to adapt to real-time network fluctuations – such as link failures or shifts in traffic demand – with minimal delay, as the selection process involves evaluating pre-existing solutions rather than solving an optimization problem in real-time. The pre-computation enables a response time that scales with the number of candidate topologies, rather than the complexity of the underlying network optimization problem.
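
A minimal sketch of the online selection step follows. The scoring rule is an assumption, since the article does not specify the paper's criterion: a candidate is preferred if it stays connected after the currently failed links are removed, with ties broken by the number of surviving links. Function names and the example topologies are hypothetical.

```python
def is_connected(nodes, edges):
    """Depth-first search over an undirected edge list."""
    nodes = set(nodes)
    if not nodes:
        return True
    adj = {v: set() for v in nodes}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    seen, stack = set(), [next(iter(nodes))]
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(adj[v] - seen)
    return seen == nodes

def select_topology(nodes, candidates, failed_links):
    """Return the index of the best pre-computed candidate for the current state."""
    failed = {frozenset(e) for e in failed_links}  # links are undirected

    def score(edges):
        alive = [e for e in edges if frozenset(e) not in failed]
        return (is_connected(nodes, alive), len(alive))

    # One linear pass over the candidate set; no optimisation is solved online.
    return max(range(len(candidates)), key=lambda i: score(candidates[i]))

nodes = [0, 1, 2, 3]
candidates = [
    [(0, 1), (1, 2), (2, 3), (3, 0)],  # ring
    [(0, 1), (0, 2), (0, 3)],          # star
    [(0, 1), (1, 2), (2, 3)],          # path
]
print(select_topology(nodes, candidates, failed_links=[(1, 0)]))          # 0
print(select_topology(nodes, candidates, failed_links=[(1, 2), (2, 3)]))  # 1
```

Each candidate costs one graph traversal to score, so the total work grows with the size of the candidate set rather than with the difficulty of the original optimization problem.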

Quantum Annealing for Optimized Network Topology

The Offline Stage of the topology optimization framework uses Quantum Annealing (QA) to address the computational limitations of classical methods. Traditional topology optimization algorithms are constrained by the exponential growth of the solution space with increasing network complexity. QA is a heuristic that exploits quantum-mechanical effects, notably superposition and tunneling, to probabilistically explore this solution space. Tunneling in particular can help the search escape local optima that often trap classical algorithms, which may surface topologies that conventional techniques would struggle to find in practical time.

Quantum Annealing (QA) exhibits a measurable performance advantage over classical Simulated Annealing (SA) when applied to topology optimization for complex networks. Benchmarking demonstrates that QA achieves a 5.15% reduction in objective value compared to SA across a range of network scenarios. This improvement indicates a greater capacity to identify lower-cost, more efficient network topologies using QA, suggesting a more effective exploration of the solution space than is possible with SA for these problem types. The objective value reduction directly correlates to improvements in network performance metrics such as throughput and resilience.
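
For readers unfamiliar with the classical baseline, the sketch below shows a generic simulated annealing loop on a QUBO: single bit flips, Metropolis acceptance, and a geometric cooling schedule. The schedule, step count, and seed are illustrative assumptions and are not the settings used in the paper's benchmark.

```python
import math
import random

def energy(Q, x):
    """QUBO energy for an upper-triangular Q stored as {(i, j): weight}."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def simulated_annealing(Q, n, steps=2000, t_start=5.0, t_end=0.05, seed=0):
    """Return the best state seen and its energy, starting from all zeros."""
    rng = random.Random(seed)
    x = [0] * n
    e = energy(Q, x)
    best_x, best_e = tuple(x), e
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / max(steps - 1, 1))
        i = rng.randrange(n)
        x[i] ^= 1  # propose a single bit flip
        e_new = energy(Q, x)
        # Metropolis rule: always accept improvements, sometimes accept worse moves.
        if e_new <= e or rng.random() < math.exp((e - e_new) / t):
            e = e_new
            if e < best_e:
                best_x, best_e = tuple(x), e
        else:
            x[i] ^= 1  # reject: undo the flip
    return best_x, best_e

# Toy QUBO: choose exactly 2 of 4 links (hypothetical costs, penalty weight 10).
costs = [4.0, 1.0, 3.0, 2.0]
Q = {(i, i): c + 10.0 * (1 - 2 * 2) for i, c in enumerate(costs)}
Q.update({(i, j): 20.0 for i in range(4) for j in range(i + 1, 4)})

state, value = simulated_annealing(Q, n=4)
print(state, value)
```

The thermal acceptance step is SA's only mechanism for leaving a local optimum, which is the point of comparison with the tunneling behaviour attributed to quantum annealing.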

Topology optimization utilizing quantum annealing yields network designs that prioritize maximized data transfer rates, quantified as Network Throughput. These optimized topologies achieve this by strategically configuring network connections to reduce congestion and improve path diversity. Furthermore, the framework actively mitigates the impact of single or multiple node failures through redundant pathways and adaptive routing. This design characteristic enhances overall network resilience, ensuring continued functionality and performance even under adverse conditions. The resulting topologies demonstrably reduce the performance degradation experienced during failures compared to static network configurations.

The topology optimization framework achieves a 6.6% improvement in performance retention compared with a static topology baseline, indicating enhanced network stability under varying conditions. The two stages map onto the two controller tiers of the O-RAN architecture: the Non-Real-Time (Non-RT) and Near-Real-Time (Near-RT) RAN Intelligent Controllers (RICs). The Non-RT RIC hosts the comprehensive pre-computation of optimized topologies, while the Near-RT RIC handles rapid selection and deployment of the most appropriate topology based on current network state, providing a balance between optimization quality and responsiveness.

Towards Inherently Robust and Self-Healing Networks

Network fragility, the susceptibility to disruption from the failure of critical nodes, is addressed through a framework centered on optimized network topologies. This approach moves beyond reactive repair to proactively minimize the presence of single points of failure – those nodes whose collapse precipitates widespread connectivity loss. By intelligently structuring the network’s architecture, the framework ensures alternative pathways for data transmission, diminishing the impact of individual node failures. This isn’t simply about redundancy; the optimized topologies are designed to distribute critical functions, preventing any single node from becoming disproportionately vital. Consequently, the network exhibits heightened resilience, maintaining functionality even under adverse conditions and ensuring continued communication despite localized disruptions.
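
In graph terms, a single point of failure is a node whose removal disconnects the remaining nodes (an articulation point). The sketch below finds such nodes by removing each one in turn and re-checking connectivity. It is a deliberately simple check for small networks, not the paper's resilience metric, and it assumes the topology is connected to begin with.

```python
def connected(nodes, edges):
    """Depth-first search over an undirected edge list."""
    nodes = set(nodes)
    if not nodes:
        return True
    adj = {v: set() for v in nodes}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    seen, stack = set(), [next(iter(nodes))]
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(adj[v] - seen)
    return seen == nodes

def single_points_of_failure(nodes, edges):
    """Nodes whose removal splits the rest of the network."""
    critical = []
    for v in nodes:
        rest = [u for u in nodes if u != v]
        kept = [(a, b) for a, b in edges if v not in (a, b)]
        if not connected(rest, kept):
            critical.append(v)
    return critical

nodes = [0, 1, 2, 3]
star = [(0, 1), (0, 2), (0, 3)]
ring = [(0, 1), (1, 2), (2, 3), (3, 0)]
path = [(0, 1), (1, 2), (2, 3)]
print(single_points_of_failure(nodes, star))  # [0]
print(single_points_of_failure(nodes, ring))  # []
print(single_points_of_failure(nodes, path))  # [1, 2]
```

The ring has no critical node because every pair of nodes is joined by two disjoint routes, which is the kind of structure a resilience-aware objective rewards.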

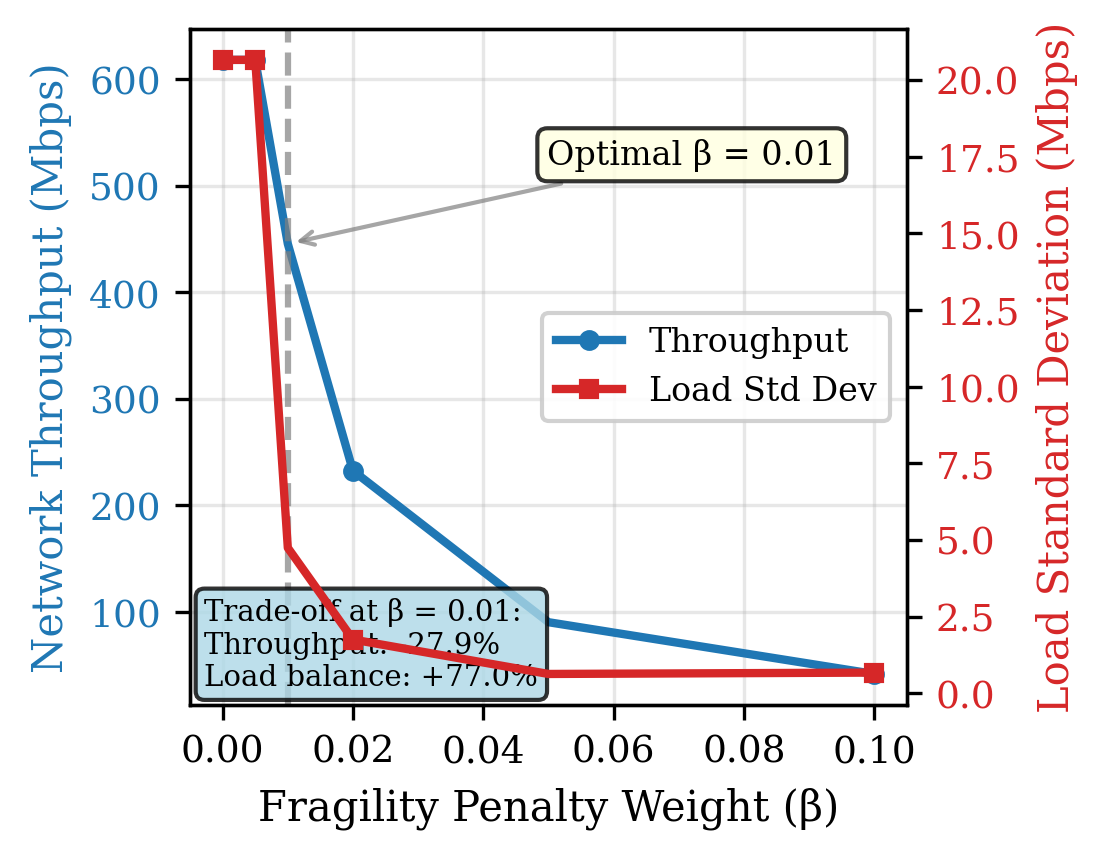

Optimized network topologies inherently facilitate effective load balancing, a critical mechanism for bolstering resilience against disruptions. By distributing traffic more evenly across available links, the framework reduces the strain on individual nodes and helps prevent performance bottlenecks. The paper reports a 77.0% reduction in the standard deviation of nodal load at β = 0.01, indicating a substantially more even distribution of traffic and a lower likelihood of overload. This equalization not only enhances stability under normal operating conditions but also improves the network’s capacity to withstand failures or surges in demand.
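
The load-balance figure can be read as a relative change in the spread of per-node load. The sketch below computes that quantity under two assumptions that the article does not confirm: a node's load is the sum of traffic on its incident links, and the reported percentage is a relative change in the population standard deviation. The example topologies and traffic values are hypothetical.

```python
from statistics import pstdev

def nodal_loads(nodes, link_traffic):
    """Assumed load model: a node carries the traffic of all its incident links."""
    load = {v: 0.0 for v in nodes}
    for (a, b), traffic in link_traffic.items():
        load[a] += traffic
        load[b] += traffic
    return load

def load_std(nodes, link_traffic):
    """Population standard deviation of nodal load (lower means more even)."""
    return pstdev(list(nodal_loads(nodes, link_traffic).values()))

def relative_reduction(baseline, optimized):
    """Fractional drop from baseline to optimized, e.g. 0.77 for 77%."""
    return (baseline - optimized) / baseline

nodes = [0, 1, 2, 3]
star = {(0, 1): 1.0, (0, 2): 1.0, (0, 3): 1.0}  # hub node 0 carries everything
path = {(0, 1): 1.0, (1, 2): 1.0, (2, 3): 1.0}  # load is spread along the chain

s_star = load_std(nodes, star)
s_path = load_std(nodes, path)
print(round(s_star, 4), round(s_path, 4))            # 0.866 0.5
print(round(relative_reduction(s_star, s_path), 4))  # 0.4226
```

In this toy case, moving from the star to the path cuts the load spread by about 42%, because no single node has to relay all of the traffic.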

The network framework demonstrates an inherent capacity for self-regulation in the face of operational challenges. Rather than relying on external intervention, the optimized topology facilitates dynamic adaptation to disruptions, effectively redistributing network traffic and rerouting data around failed links. This proactive resilience isn’t simply about restoring connectivity; it’s about maintaining performance levels even during failures. The system intelligently leverages redundancy and load balancing to minimize the impact of individual node or link outages, ensuring continued functionality and a sustained quality of service. This internal capacity for recovery distinguishes the framework, moving beyond reactive repair to a state of continuous, self-maintained stability, though some trade-offs exist, such as a noted 27.9% reduction in throughput under specific parameters.

The culmination of these network optimizations yields demonstrably more robust and reliable communication systems, a characteristic of paramount importance for applications requiring uninterrupted service. While the framework prioritizes stability and fault tolerance, a trade-off exists: testing reveals a 27.9% reduction in overall throughput at a parameter setting of β = 0.01. This decrease, however, represents a calculated compromise that safeguards against systemic failures and ensures consistent performance even under duress, a critical advantage in scenarios ranging from emergency response networks to real-time financial transactions, where consistent connectivity outweighs maximal data transfer rates.

The pursuit of resilient UAV networks, as detailed in this work, necessitates a shift from empirical testing to provable solutions. The two-stage topology optimization framework, employing quantum annealing, aims to establish a foundation of mathematically sound network configurations. This aligns perfectly with Kernighan’s observation: “If it feels like magic, you haven’t revealed the invariant.” The apparent complexity of quantum optimization is not magic, but a revelation of underlying, provable network properties. By pre-computing diverse, resilient topologies, the system avoids relying on ad-hoc responses to dynamic conditions, instead revealing the inherent invariants that guarantee performance, even amidst network disruptions. This focus on demonstrable correctness, rather than simply ‘working on tests,’ is the hallmark of truly elegant engineering.

What Lies Ahead?

The presented framework, while demonstrating a path toward resilient UAV network topologies, merely scratches the surface of a far deeper challenge. The reliance on offline quantum optimization, specifically a QUBO (quadratic unconstrained binary optimization) formulation, inherently limits adaptability to truly unforeseen network disruptions. The asymptotic complexity of solving increasingly large QUBO instances, even with quantum annealers, remains a significant hurdle, demanding continued investigation into alternative formulations or hybrid classical-quantum approaches. The true test will not be in generating diverse topologies, but in proving their optimality, a feat currently obscured by the heuristic nature of the underlying quantum algorithms.

Future work must address the scalability of this approach beyond the current demonstration. The integration with Software-Defined Networking (SDN) and O-RAN presents opportunities, yet these necessitate a rigorous analysis of the signaling overhead and latency introduced by dynamic topology adjustments. A purely topological solution, however elegant, cannot fully compensate for deficiencies in communication protocols or hardware limitations. The field requires a shift in focus – from simply reacting to network failures, to predicting and preventing them through proactive, mathematically grounded control strategies.

Ultimately, the pursuit of resilient UAV networks is not merely an engineering problem, but a mathematical one. The ephemeral nature of wireless links and the inherent uncertainty of dynamic environments demand solutions rooted in provable correctness, not empirical observation. Only then can one claim to have truly tamed the chaos and achieved a network capable of withstanding the inevitable storms.

Original article: https://arxiv.org/pdf/2601.19724.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-28 15:55