Author: Denis Avetisyan

This research introduces a zero-trust runtime verification framework designed to protect agent-driven payment systems from critical vulnerabilities.

The study demonstrates how strict execution-time authorization checks can mitigate replay and context-binding attacks in agentic payment protocols such as the Agent Payments Protocol (AP2).

While mandate-based authorization offers a promising path towards autonomous agentic commerce, real-world runtime behaviors challenge its inherent assumptions about how mandates are used. This paper, ‘Zero-Trust Runtime Verification for Agentic Payment Protocols: Mitigating Replay and Context-Binding Failures in AP2’, analyzes vulnerabilities in agent-based payment systems and proposes a zero-trust runtime verification framework to enforce strict authorization at execution time. Our approach, which uses dynamically generated time-bound nonces, mitigates replay and context-binding attacks while maintaining low latency at high transaction throughput: approximately 3.8 ms at 10,000 transactions per second. Can this framework pave the way for truly robust and scalable agentic economies, free from the threat of unauthorized transactions?

Deconstructing Authorization: The Rise of Agentic Systems

The increasing prevalence of autonomous agents – systems capable of acting independently to achieve defined goals – fundamentally shifts the landscape of digital security. Traditional authentication methods, focused on verifying the identity of a human user initiating an action, prove inadequate when the actor is a machine. These agents require permissions and the ability to execute transactions on their own, introducing a need for authorization protocols that extend beyond simple user credentials. This presents novel challenges, as securing agentic systems demands a focus on verifying the legitimacy of the agent’s mandate and ensuring actions align with pre-defined parameters, rather than relying on human oversight or direct user confirmation. Consequently, securing these systems requires entirely new approaches to runtime verification and trust establishment, moving beyond the established paradigm of user-centric security.

Agentic systems, increasingly prevalent in decentralized applications, operate on a principle of mandate-based authorization, often through protocols such as the Agent Payments Protocol (AP2). This framework lets agents act on behalf of users, but crucially, it shifts the security burden from traditional user authentication to continuous runtime verification. Unlike typical permission systems, where access is granted upfront and rarely re-examined, these systems must keep checking that the agent adheres to its mandate – the specific parameters of its authorized actions. Without this real-time oversight, vulnerabilities emerge that can let agents exceed their defined scope or execute unintended commands. Robust runtime verification is therefore not merely a safeguard but a fundamental requirement for any agentic system built on mandate-based authorization.

Agentic systems, while promising increased efficiency and automation, present significant vulnerabilities if not properly secured. A critical concern revolves around the potential for malicious actors to exploit the systems through replay attacks, where legitimate, previously authorized requests are intercepted and resubmitted to trigger unintended actions. Equally concerning is the risk of authorization misuse, where agents might exceed their granted permissions or operate outside the scope of their intended mandate. These vulnerabilities stem from the inherent complexities of decentralized, autonomous operations and the reliance on digital signatures and protocols which, if compromised, could allow unauthorized access and manipulation of resources. Mitigating these threats necessitates the implementation of robust runtime verification mechanisms, alongside secure communication protocols and continuous monitoring of agent behavior to detect and prevent malicious activity.

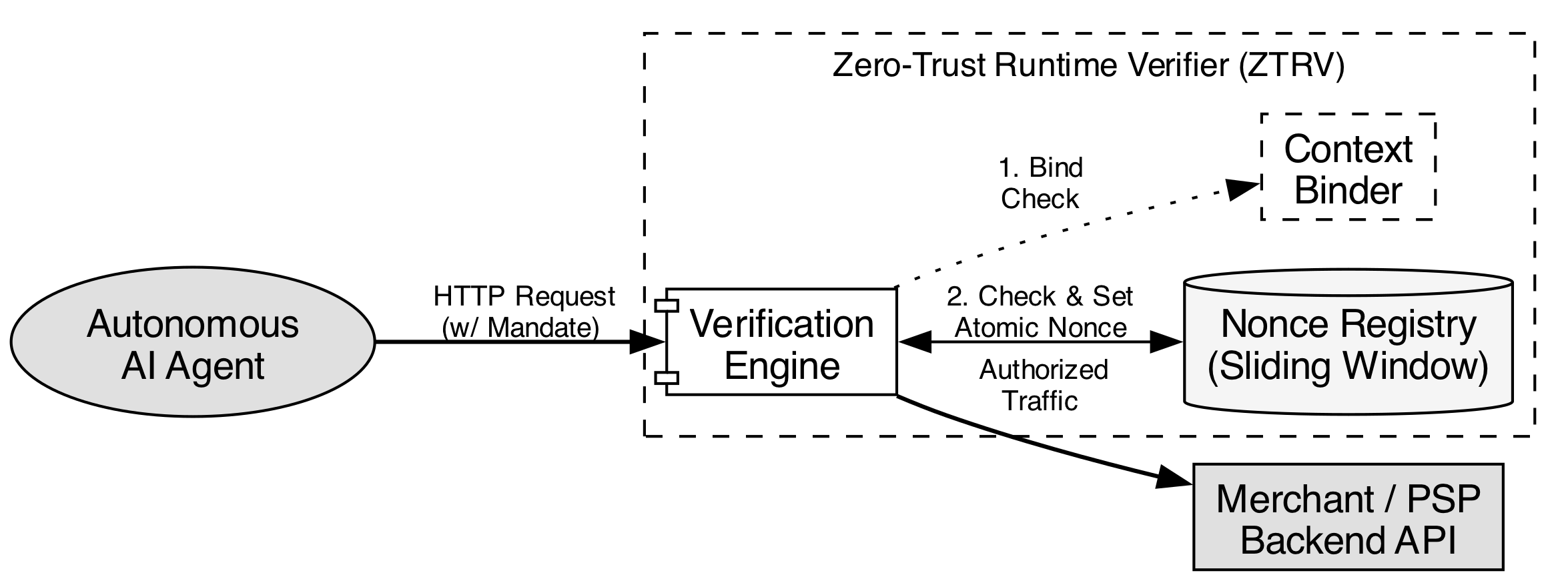

Zero-Trust Runtime Verification: A Contextual Firewall

Zero-Trust Runtime Verification addresses these vulnerabilities through explicit context binding and consume-once semantics. Context binding ensures that a mandate is valid only within a specific, predefined operational context – encompassing parameters like time, location, or authorized agents – and rejects execution attempts outside those boundaries. Consume-once semantics prevent the reuse of a single mandate: once a mandate has executed successfully, it is recorded as consumed (for example, by storing its nonce in a registry), so any later attempt to use it is rejected. Together, these mechanisms mitigate replay attacks and unauthorized mandate use by tying each execution to a validated, single-use context.
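A minimal Python sketch of the two checks follows, under stated assumptions: the `Mandate` and `ExecutionContext` fields, the `RuntimeVerifier` class, and its in-memory set of consumed IDs are hypothetical illustrations, not AP2's schema or the paper's implementation. Context fields are compared first, and only a mandate that passes them is consumed.

```python
from __future__ import annotations

import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Mandate:
    """Hypothetical mandate record; field names are illustrative, not AP2's schema."""
    mandate_id: str
    agent_id: str
    merchant_id: str
    max_amount_cents: int
    expires_at: float  # Unix timestamp after which the mandate is invalid


@dataclass
class ExecutionContext:
    """What the runtime actually observes when the agent tries to execute."""
    agent_id: str
    merchant_id: str
    amount_cents: int
    now: float = field(default_factory=time.time)


class RuntimeVerifier:
    """Hypothetical verifier: checks context binding, then consumes the mandate once.

    Not thread-safe: under concurrency the check-then-add below would need an
    atomic check-and-set (a lock, or a conditional insert in a shared store).
    """

    def __init__(self) -> None:
        self._consumed: set[str] = set()

    def authorize(self, mandate: Mandate, ctx: ExecutionContext) -> tuple[bool, str]:
        # Context binding: the execution context must match what the mandate authorized.
        if ctx.agent_id != mandate.agent_id:
            return False, "agent mismatch"
        if ctx.merchant_id != mandate.merchant_id:
            return False, "merchant mismatch"
        if ctx.amount_cents > mandate.max_amount_cents:
            return False, "amount exceeds mandate"
        if ctx.now >= mandate.expires_at:
            return False, "mandate expired"
        # Consume-once: a mandate that has already executed is never accepted again.
        if mandate.mandate_id in self._consumed:
            return False, "mandate already consumed"
        self._consumed.add(mandate.mandate_id)
        return True, "ok"


verifier = RuntimeVerifier()
m = Mandate("m-1", "agent-A", "shop-42", 5_000, expires_at=time.time() + 60)
print(verifier.authorize(m, ExecutionContext("agent-A", "shop-42", 4_999)))  # (True, 'ok')
print(verifier.authorize(m, ExecutionContext("agent-A", "shop-42", 4_999)))  # (False, 'mandate already consumed')
print(verifier.authorize(m, ExecutionContext("agent-A", "shop-99", 100)))    # (False, 'merchant mismatch')
```

Checking context before consumption is a deliberate choice in this sketch: a request from the wrong context is rejected without burning the mandate, so an attacker cannot invalidate a legitimate mandate simply by presenting it elsewhere.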

Zero-Trust Runtime Verification uses cryptographic hash functions and digital signatures to establish and maintain mandate integrity. A hash function maps any input to a fixed-size digest, so data consistency can be checked: for a collision-resistant hash, any alteration to the original data yields a different digest with overwhelming probability. Digital signatures use asymmetric cryptography, binding a mandate to a specific author via a private key; anyone holding the corresponding public key can verify it. Together, these ensure that a mandate has not been tampered with in transit and confirm the originator’s authenticity. A valid signature alone does not stop replay, however, because an intercepted request remains validly signed; that protection comes from binding each signed mandate to a time-bound nonce that the verifier accepts only once.
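The integrity check can be sketched in Python with only the standard library. The text describes asymmetric signatures; this sketch swaps in HMAC-SHA256, a symmetric message authentication code, purely so it runs without third-party packages, and a deployment following the text would use an asymmetric scheme such as Ed25519 instead. The mandate fields and the helper names `canonical_digest`, `sign`, and `verify` are hypothetical.

```python
import hashlib
import hmac
import json


def canonical_digest(mandate: dict) -> bytes:
    """SHA-256 over a canonical JSON encoding (sorted keys, no extra whitespace)."""
    encoded = json.dumps(mandate, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(encoded).digest()


def sign(mandate: dict, key: bytes) -> str:
    # HMAC-SHA256 stands in for an asymmetric signature in this stdlib-only sketch.
    return hmac.new(key, canonical_digest(mandate), hashlib.sha256).hexdigest()


def verify(mandate: dict, signature: str, key: bytes) -> bool:
    expected = sign(mandate, key)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


key = b"demo-key-not-for-production"
mandate = {"mandate_id": "m-1", "merchant_id": "shop-42", "amount_cents": 4999, "nonce": "n-7f3a"}
sig = sign(mandate, key)
print(verify(mandate, sig, key))                              # True
print(verify({**mandate, "amount_cents": 99999}, sig, key))  # False: a tampered field breaks the check
print(verify(mandate, sig, key))                              # True again: the signature alone does not stop replay
```

The last line is the point of the paragraph above: a resubmitted copy still verifies, so replay protection has to come from the nonce being consumed, not from the signature.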

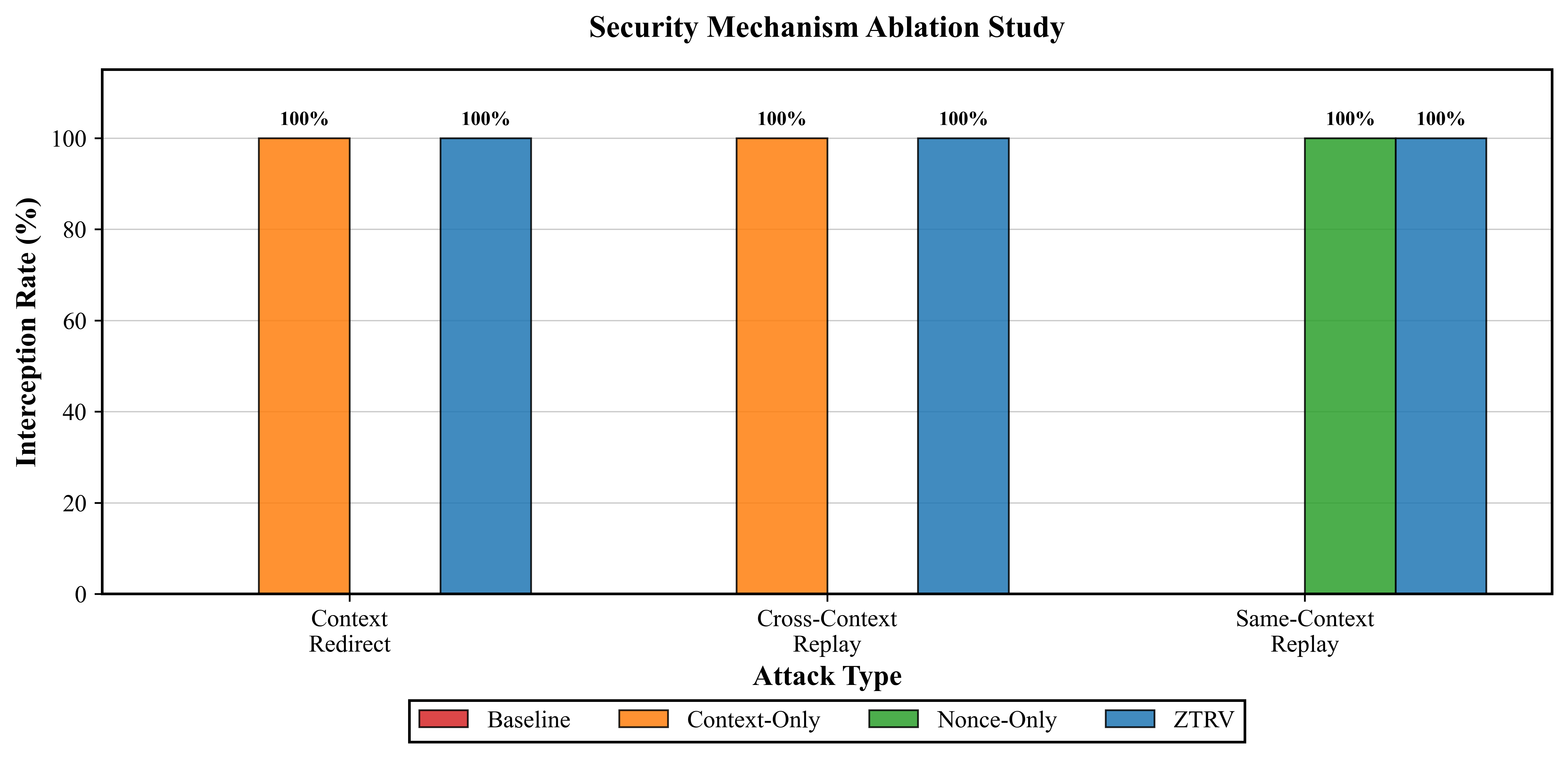

In the evaluated agentic payment execution scenarios, the implemented framework blocked all of the replay and context-binding attacks tested. This is a substantial improvement over conventional authorization techniques, which are susceptible to these vulnerabilities. Performance measurements show a low verification latency of approximately 3.7 milliseconds while sustaining a throughput of 10,000 transactions per second, suggesting suitability for high-volume operational environments.
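As a back-of-the-envelope reading of these figures (our own, not the paper's), Little's law relates throughput λ, mean time in the system W, and the mean number of requests in flight L. Assuming 3.7 ms is the mean verification latency at a steady 10,000 TPS:

```latex
L = \lambda W = \left(10^{4}\ \mathrm{s}^{-1}\right)\left(3.7 \times 10^{-3}\ \mathrm{s}\right) = 37
```

So roughly 37 verifications would be in progress at any instant, which indicates how much concurrency the verifier must sustain at that load.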

Unveiling the Hidden Risks: Context Leakage and Observability

Observability pipelines, critical for system monitoring and debugging, inherently process and transmit data representing application state and user context. This data often includes sensitive “mandate” information – details authorizing specific actions or access rights. While intended for diagnostic purposes, this information can be inadvertently logged, stored, or transmitted in ways that expose it to unauthorized parties. This exposure constitutes context leakage, where the sensitive authorization details are revealed outside the intended secure processing environment. The risk arises not from a flaw in the authorization mechanism itself, but from the unintended consequences of data collection practices within the observability infrastructure.

Context leakage from observability pipelines creates security risks by exposing mandate information that can be leveraged for unauthorized access and replay attacks. Specifically, exposed data lets malicious actors misuse authorization by constructing valid-looking requests from the compromised context. This leakage also enables cross-context replay attacks, in which previously authorized requests are re-executed in a different context (for example, a different session or merchant) with unintended consequences. These attacks bypass typical authorization checks because the compromised context supplies enough credential material for the request to pass validation.
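One mitigation consistent with this argument is to scrub mandate material before it leaves the process. The sketch below is a minimal Python example using the standard `logging` module; the field names in `SENSITIVE_KEYS`, the regular expression, `MandateRedactionFilter`, and the `ListHandler` used for the demo are hypothetical, and regex redaction is a best-effort safeguard rather than a guarantee that nothing leaks.

```python
import logging
import re

# Hypothetical field names assumed to carry mandate material in log messages.
SENSITIVE_KEYS = ("mandate_id", "nonce", "signature")
_PATTERN = re.compile(r'("?(?:' + "|".join(SENSITIVE_KEYS) + r')"?\s*[:=]\s*"?)([^",\s}]+)')


class MandateRedactionFilter(logging.Filter):
    """Masks mandate fields before a record reaches any formatter or exporter."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # apply %-style args first, then scrub
        record.msg = _PATTERN.sub(r"\1[REDACTED]", message)
        record.args = None  # the message is already fully formatted
        return True  # keep the record, just scrubbed


records: list[str] = []


class ListHandler(logging.Handler):
    """Collects formatted messages in memory, standing in for a real exporter."""

    def emit(self, record: logging.LogRecord) -> None:
        records.append(self.format(record))


handler = ListHandler()
handler.addFilter(MandateRedactionFilter())  # on the handler, so propagated records are covered too
log = logging.getLogger("payments")
log.addHandler(handler)
log.setLevel(logging.INFO)

log.info("executing mandate_id=%s nonce=%s amount=%d", "m-1", "n-7f3a", 4999)
print(records[-1])  # executing mandate_id=[REDACTED] nonce=[REDACTED] amount=4999
```

Attaching the filter to the handler rather than to the logger matters: filters on a logger only see records logged directly on that logger, while handler filters also see records propagated from child loggers.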

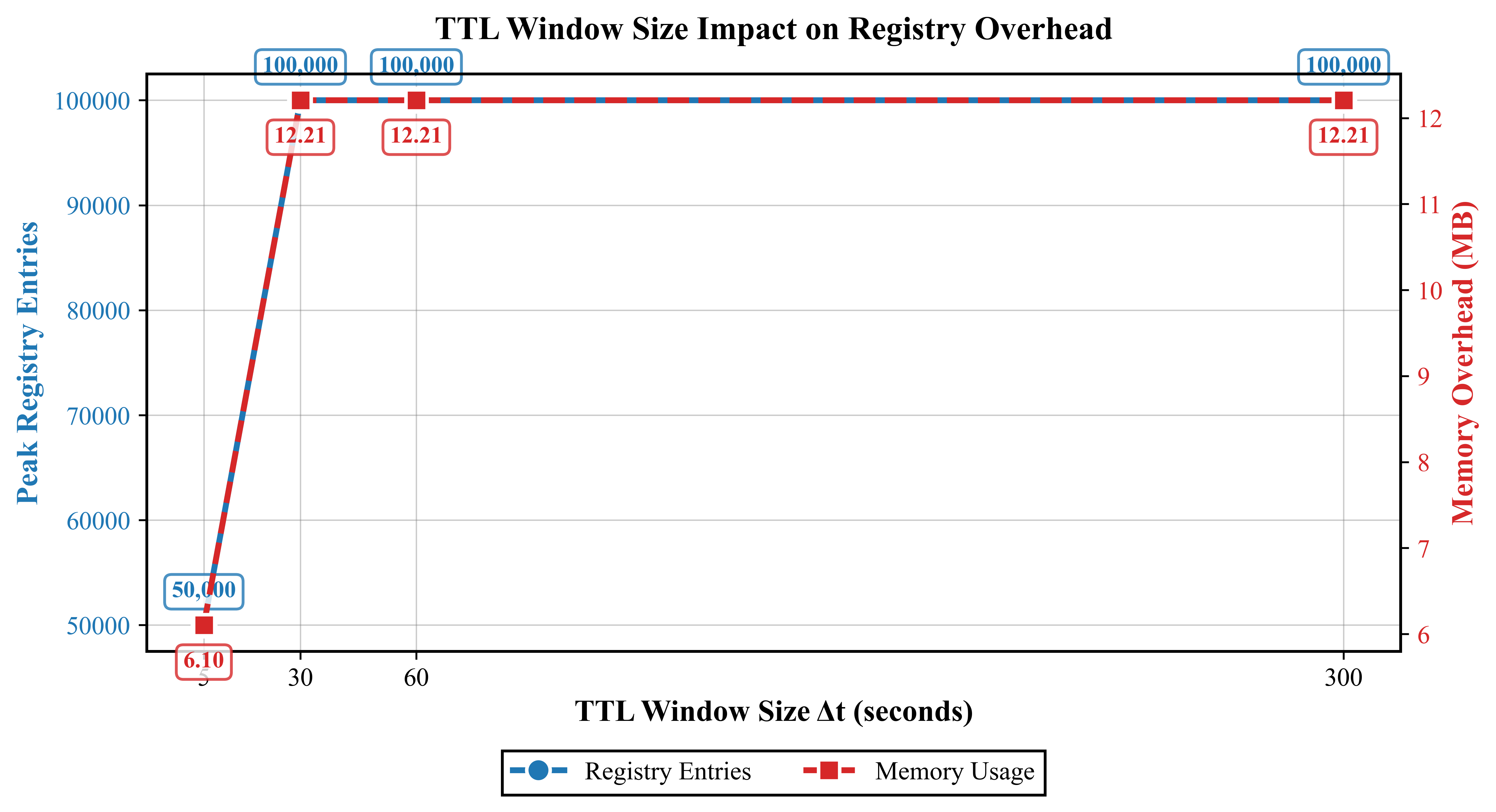

A nonce registry mitigates replay attacks by tracking mandate usage. The registry records identifiers that have already been used and rejects any request that re-submits one. Its resource footprint remains manageable: at a peak rate of 10,000 transactions per second (TPS), the estimated memory usage is approximately 12 MB, suggesting the approach is feasible for high-throughput systems.
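A time-bound nonce registry can be sketched in Python as a set of live nonces plus a min-heap of expiry times, so entries are forgotten as soon as their validity window closes. `NonceRegistry`, `check_and_consume`, and the TTL parameter are hypothetical names for illustration; the source does not specify the window length or data structure.

```python
import heapq
import time
from typing import Optional


class NonceRegistry:
    """Accepts each nonce at most once inside its validity window.

    Assumes nonces are unique random values: a nonce string reused after its
    window has closed would be rejected as stale only if issued_at is honest.
    """

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl = ttl_seconds
        self._seen: set = set()
        self._expiry_heap: list = []  # (expiry time, nonce) pairs, smallest expiry first

    def _evict_expired(self, now: float) -> None:
        # Forget nonces whose window has closed; they would fail the window check anyway.
        while self._expiry_heap and self._expiry_heap[0][0] <= now:
            _, nonce = heapq.heappop(self._expiry_heap)
            self._seen.discard(nonce)

    def check_and_consume(self, nonce: str, issued_at: float, now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        self._evict_expired(now)
        if not (issued_at <= now < issued_at + self.ttl):
            return False  # outside the time-bound window (stale, or issued in the future)
        if nonce in self._seen:
            return False  # replay: this nonce was already consumed
        self._seen.add(nonce)
        heapq.heappush(self._expiry_heap, (issued_at + self.ttl, nonce))
        return True

    def __len__(self) -> int:
        return len(self._seen)


reg = NonceRegistry(ttl_seconds=60)
t0 = 1_000.0
print(reg.check_and_consume("n-1", issued_at=t0, now=t0 + 1))   # True: first use
print(reg.check_and_consume("n-1", issued_at=t0, now=t0 + 2))   # False: replay inside the window
print(reg.check_and_consume("n-2", issued_at=t0, now=t0 + 61))  # False: stale, window closed
print(len(reg))                                                  # 0: "n-1" was evicted at t0 + 61
```

Memory is bounded by the nonces live inside one window, roughly throughput × TTL entries. With a hypothetical 60 s TTL at 10,000 TPS that is 600,000 entries, so the quoted 12 MB would correspond to about 20 bytes per entry, which implies a compact representation; a CPython set of `str` objects would need considerably more.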

The Agentic Execution Paradigm: Foundations for Secure Authorization

The agentic execution model, built on stateless agents and concurrent execution, presents unique challenges to traditional authorization systems. Because agents do not retain internal state, each interaction must be validated independently, which demands a shift from session-based permissions to granular, context-aware access control. Furthermore, the potential for many agents to operate simultaneously (concurrent execution) amplifies the need for a highly scalable and responsive authorization framework. This requires moving beyond simple allow/deny lists to a system that dynamically assesses permissions based on the specific tool being used, the merchant endpoint being accessed, and the overall context of the request.
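To make stateless, per-request evaluation concrete, here is a minimal Python sketch assuming a hypothetical per-agent `Policy` that lists permitted tools and merchant endpoints plus a per-request amount cap. Every input the decision needs travels with the hypothetical `ToolRequest`, so no session state is consulted and many workers can evaluate requests concurrently against the same read-only policies. The endpoint URLs use the reserved `example` domain and are placeholders.

```python
from dataclasses import dataclass
from typing import FrozenSet, Mapping


@dataclass(frozen=True)
class ToolRequest:
    """A self-contained request: the decision needs no per-agent session state."""
    agent_id: str
    tool: str               # hypothetical tool name, e.g. "checkout"
    merchant_endpoint: str  # hypothetical endpoint URL
    amount_cents: int


@dataclass(frozen=True)
class Policy:
    allowed_tools: FrozenSet[str]
    allowed_endpoints: FrozenSet[str]
    per_request_limit_cents: int


def decide(policies: Mapping[str, Policy], req: ToolRequest) -> str:
    """Evaluate one request in isolation, reading only immutable policy data."""
    policy = policies.get(req.agent_id)
    if policy is None:
        return "deny: unknown agent"
    if req.tool not in policy.allowed_tools:
        return f"deny: tool {req.tool!r} not permitted"
    if req.merchant_endpoint not in policy.allowed_endpoints:
        return f"deny: endpoint {req.merchant_endpoint!r} not permitted"
    if req.amount_cents > policy.per_request_limit_cents:
        return "deny: amount over per-request limit"
    return "allow"


policies = {
    "agent-A": Policy(
        allowed_tools=frozenset({"search", "checkout"}),
        allowed_endpoints=frozenset({"https://shop.example/api"}),
        per_request_limit_cents=10_000,
    )
}
print(decide(policies, ToolRequest("agent-A", "checkout", "https://shop.example/api", 2_500)))  # allow
print(decide(policies, ToolRequest("agent-A", "refund", "https://shop.example/api", 2_500)))    # deny: tool 'refund' not permitted
```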

The architecture envisions user agents as active participants in a dynamic system, interfacing directly with merchant endpoints to fulfill requests. This interaction is not a passive exchange of data; it is characterized by tool use, where agents invoke specific functionality exposed by merchants (booking a flight or processing a payment, for example) to achieve a desired outcome. Moreover, sophisticated agents may employ reasoning frameworks such as ReAct, which let them plan, reflect on intermediate results, and adapt their approach in real time. Complex tasks can thus be broken into manageable steps, improving the agent’s ability to navigate the merchant landscape and reliably deliver services, and shifting the paradigm from simple requests to goal-oriented execution.

A secure agentic system grants agents only the minimal permissions required for their designated tasks, achieved through a capability-based security model working in concert with Zero-Trust Runtime Verification. Instead of traditional access control lists, this approach issues agents unforgeable “capabilities” (essentially tokens) that each represent authorization for specific actions or resources. A capability acts as proof of permission, and an agent can operate only within the boundaries its capabilities define. Zero-Trust Runtime Verification then continuously monitors agent behavior, ensuring that even with a valid capability, actions stay within expected parameters and do not stray into unauthorized territory. This dual layer of security (precise permission granting coupled with ongoing behavioral analysis) significantly reduces the attack surface and limits the damage a compromised or malicious agent can cause.
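A capability can be sketched as a token whose scope is protected by a message authentication code. In the minimal Python example below, `mint_capability`, `authorize`, the `agent`/`actions` claim names, and `SERVER_KEY` are all hypothetical. These tokens are unforgeable only to parties without the issuer's key; a fuller design would likely add expiry and use asymmetric signatures so that verifiers cannot mint tokens themselves.

```python
import base64
import hashlib
import hmac
import json

SERVER_KEY = b"hypothetical-issuer-key"  # assumption: issuer and verifier share this key


def mint_capability(agent_id: str, actions: list, key: bytes = SERVER_KEY) -> str:
    """Encode the granted scope and append an HMAC tag over it."""
    body = json.dumps({"agent": agent_id, "actions": sorted(actions)}, separators=(",", ":")).encode()
    tag = hmac.new(key, body, hashlib.sha256).digest()
    return base64.urlsafe_b64encode(body).decode() + "." + base64.urlsafe_b64encode(tag).decode()


def authorize(token: str, agent_id: str, action: str, key: bytes = SERVER_KEY) -> bool:
    """Accept only if the tag is genuine and the requested action lies inside the scope."""
    try:
        body_b64, tag_b64 = token.split(".")
        body = base64.urlsafe_b64decode(body_b64)
        tag = base64.urlsafe_b64decode(tag_b64)
    except ValueError:  # wrong number of parts or bad base64 (binascii.Error is a ValueError)
        return False
    expected = hmac.new(key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, tag):
        return False  # forged or altered token
    claims = json.loads(body)  # safe to parse: the body is authenticated
    return claims["agent"] == agent_id and action in claims["actions"]


cap = mint_capability("agent-A", ["quote", "pay:shop-42"])
print(authorize(cap, "agent-A", "pay:shop-42"))  # True
print(authorize(cap, "agent-A", "pay:shop-99"))  # False: outside the granted scope
_, tag = cap.split(".")
forged = base64.urlsafe_b64encode(b'{"agent":"agent-A","actions":["pay:shop-99"]}').decode()
print(authorize(forged + "." + tag, "agent-A", "pay:shop-99"))  # False: tag does not match
```

Runtime verification then sits on top of this check: a genuine capability for `pay:shop-42` answers only whether the action is in scope, while the mandate, context, and nonce checks decide whether this particular execution should proceed.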

Future-Proofing Agentic Systems: LLMs and the Evolving Threat Landscape

The expanding use of large language models, while promising for automation and complex tasks, inherently creates novel security challenges. A primary concern is prompt injection, a technique where malicious actors craft specific inputs designed to manipulate the LLM’s behavior, overriding original instructions or extracting confidential information. Unlike traditional code injection, prompt injection exploits the LLM’s natural language processing capabilities, allowing attackers to subtly alter the model’s interpretation of requests. This can range from causing the model to generate harmful content or disclose sensitive data to commandeering the agentic system for unintended purposes, such as sending unauthorized messages or executing malicious commands. Because LLMs are trained on vast datasets and prioritize fluency, discerning malicious prompts from legitimate ones proves exceptionally difficult, demanding innovative security measures focused on input validation and behavioral monitoring.

The Open Web Application Security Project (OWASP) is currently at the forefront of defining and mitigating the unique security challenges posed by large language models. Recognizing the emergence of vulnerabilities like prompt injection – where malicious instructions are embedded within seemingly benign prompts – OWASP has initiated dedicated research and the development of standardized testing methodologies. This includes actively building resources, such as the “LLM Top 10” project, to highlight the most critical risks facing developers and organizations deploying these powerful AI systems. Through collaborative efforts involving security professionals and the broader AI community, OWASP aims to establish a robust framework for secure development practices, ensuring that agentic systems built upon LLMs are resilient against evolving threats and maintain user trust.

Maintaining robust agentic systems necessitates a shift from reactive security measures to continuous, adaptive defenses. Simply identifying vulnerabilities isn’t enough; systems must be constantly monitored for anomalous behavior and evolving attack patterns. This requires the implementation of adaptive security protocols capable of dynamically adjusting to new threats – essentially, learning and improving alongside potential adversaries. Crucially, a proactive approach to threat modeling is vital, anticipating potential weaknesses before they are exploited through rigorous simulations and red-teaming exercises. Such foresight allows developers to build resilience into the system’s core architecture, ensuring long-term security and minimizing the impact of future, unforeseen vulnerabilities that will inevitably emerge as the technology matures and attracts increasingly sophisticated attacks.

The pursuit of secure agentic systems, as detailed in this exploration of runtime verification for AP2, requires a fundamental distrust of all components, a stance that echoes the spirit of rigorous analysis. It mirrors a core tenet of systems understanding: to truly grasp a mechanism, one must try to dismantle it, intellectually probing its weaknesses. As a remark often attributed to John von Neumann puts it, “If you can’t break it, you don’t understand it.” The paper’s focus on mitigating replay and context-binding failures is not simply about building defenses; it is about stress-testing the system’s assumptions, identifying vulnerabilities before they are exploited, and gaining a deeper understanding of the underlying architecture. This proactive approach to security, a systematic attempt to ‘break’ the protocol, is how robust, reliable systems are forged.

Pushing the Boundaries

The demonstrated efficacy of zero-trust runtime verification against replay and context-binding attacks in agentic payment protocols isn’t a destination, but rather a sharpened lens. This work exposes the inherent fragility of assuming trust, even within seemingly robust, mandate-based systems. The focus now shifts from simply preventing known attacks to anticipating the unforeseen exploits that emerge when complex agentic economies collide with adversarial ingenuity. The current framework, while demonstrably effective, operates within a defined perimeter of authorization checks; exploring dynamic, self-modifying verification policies – systems that learn attack vectors – represents a significant, and likely chaotic, next step.

A critical limitation lies in the computational overhead of runtime verification. Scaling these systems to accommodate the throughput demands of universal commerce requires a ruthless optimization of verification processes, perhaps leveraging novel hardware architectures or exploring trade-offs between security and performance. Furthermore, the model of agentic autonomy itself demands deeper scrutiny. How does one verify the intent of an agent, especially when that intent is not explicitly programmed, but rather emerges from complex interactions with its environment?

Ultimately, this research suggests a provocative, if uncomfortable, truth: security isn’t about building impenetrable walls, but about building systems capable of graceful degradation. A truly resilient agentic economy won’t be defined by its ability to prevent all failures, but by its capacity to detect, isolate, and recover from them – a system designed to break, but not catastrophically.

Original article: https://arxiv.org/pdf/2602.06345.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-10 05:15