Author: Denis Avetisyan

A new quantum operating system, HALO, dramatically boosts performance by enabling precise control and allocation of quantum resources.

HALO achieves a 4.44x throughput improvement over existing methods through fine-grained qubit sharing and shot-aware scheduling.

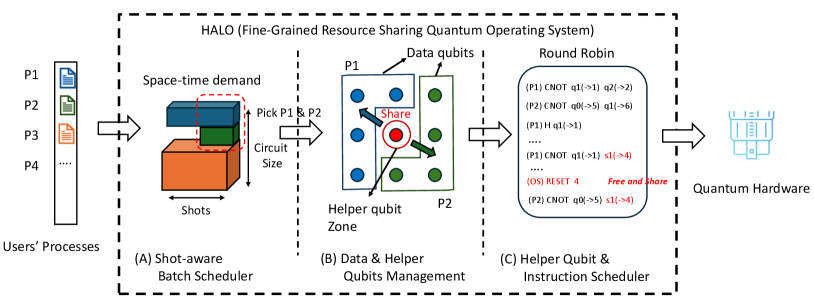

Despite burgeoning demand, current quantum cloud platforms struggle with efficient resource allocation, leaving valuable qubits idle while users face substantial wait times. This limitation motivates the development of novel operating system designs, and we present ‘HALO: A Fine-Grained Resource Sharing Quantum Operating System’, which introduces a system enabling fine-grained qubit sharing and shot-adaptive scheduling. Through hardware-aware placement of helper qubits and dynamic allocation of execution windows, HALO achieves up to a 4.44x increase in throughput and 2.44x improvement in hardware utilization on the IBM Torino processor. Can this approach pave the way for truly scalable and cost-effective quantum computing in the cloud era?

The Quantum Resource Squeeze: A Harsh Reality

The allure of quantum computing stems from its potential to solve certain problems with exponential speedups compared to classical computers, a capability rooted in principles like superposition and entanglement. However, realizing this promise faces a significant hurdle: current quantum hardware is severely constrained. Existing quantum processors possess a limited number of qubits – the quantum equivalent of bits – and these qubits aren’t universally connected. This means not every qubit can directly interact with every other, necessitating complex sequences of operations to move information around the processor. These limitations dramatically increase the difficulty of running even moderately sized quantum algorithms and introduce significant error rates, hindering the pursuit of practical quantum advantage. The field is therefore focused not only on increasing qubit counts, but also on developing strategies to maximize the utility of these scarce and imperfect quantum resources.

The pursuit of practical quantum advantage hinges not merely on increasing qubit numbers, but on maximizing the utility of each available qubit and quantum operation. Current quantum hardware is profoundly resource-constrained; qubit counts remain low, coherence times are brief, and connectivity between qubits is limited. Consequently, algorithms must be carefully tailored and efficiently compiled to minimize circuit depth and qubit usage. Optimizing resource allocation – intelligently scheduling operations, reusing qubits where possible, and minimizing communication overhead – is therefore critical. Without these advancements in quantum resource management, even the most promising algorithms may remain impractical, unable to demonstrate a genuine speedup over classical counterparts despite their theoretical potential. The effective stewardship of these scarce quantum resources represents a defining challenge – and a crucial pathway – toward realizing the transformative power of quantum computation.

Conventional scheduling algorithms, designed for classical computers, struggle to optimize the performance of near-term quantum processors due to their unique constraints and characteristics. These algorithms often prioritize minimizing execution time, but fail to account for the limited connectivity between qubits, the susceptibility of quantum states to noise, and the finite coherence times that dictate how long a quantum computation can run before errors accumulate. Consequently, simply mapping a quantum algorithm onto available hardware using classical methods can lead to drastically reduced performance or even computational failure. Researchers are actively developing novel resource allocation strategies that specifically address these quantum limitations – techniques that consider qubit connectivity, minimize gate errors, and maximize the probability of obtaining a correct result, effectively squeezing the most potential from currently available, and inherently limited, quantum hardware.

Quantum Virtualization: Making Do With What We Have

Quantum virtualization, facilitated by a QuantumVirtualMachine, addresses the limited availability of physical qubits by enabling the representation of multiple logical qubits on a reduced number of physical qubit resources. This mapping is achieved through the abstraction of the underlying hardware, allowing a single physical qubit to emulate several logical qubits for specific durations or through shared spatial allocation. The ratio of logical to physical qubits represents a key metric for evaluating the efficiency of the virtualization process; a higher ratio indicates more effective hardware utilization. This approach is crucial for scaling quantum computations beyond the current limitations of physical qubit counts and enabling broader access to quantum processing capabilities.

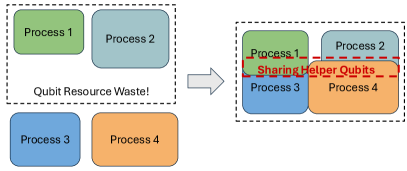

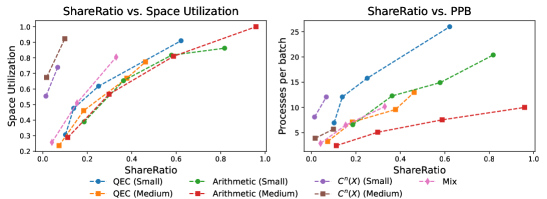

Hardware utilization is maximized in quantum virtualization through the implementation of techniques such as SpaceSharing and TimeSharing. SpaceSharing involves allocating different logical qubits to distinct physical qubits, enabling concurrent operations without resource contention. TimeSharing, conversely, assigns time slots on the same physical qubits to multiple logical qubits, interleaving their execution. These methods allow a single physical qubit to effectively function as multiple logical qubits over time or to support parallel computations, increasing the overall throughput and efficiency of the quantum hardware. The combined application of SpaceSharing and TimeSharing strategies optimizes resource allocation, thereby reducing idle time and maximizing the utilization rate of limited physical qubit resources.
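The difference between the two sharing modes can be illustrated with a minimal Python sketch. It is not taken from HALO or HyperQ: the `Job` class, the function names, and the assumption that physical qubits form a simple numbered pool (ignoring connectivity and noise) are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    qubits: int  # number of logical qubits the circuit needs


def space_share(jobs, physical_qubits):
    """Place jobs side by side on disjoint physical qubits (first-fit)."""
    placement, next_free = {}, 0
    for job in jobs:
        if next_free + job.qubits > physical_qubits:
            continue  # does not fit in the remaining space; wait for a later round
        placement[job.name] = list(range(next_free, next_free + job.qubits))
        next_free += job.qubits
    return placement


def time_share(jobs, physical_qubits):
    """Run jobs one after another on the same physical qubits."""
    runnable = [job for job in jobs if job.qubits <= physical_qubits]
    return [(slot, job.name, list(range(job.qubits)))
            for slot, job in enumerate(runnable)]


jobs = [Job("ghz", 3), Job("qft", 4), Job("vqe", 2)]
space_plan = space_share(jobs, physical_qubits=8)  # concurrent, disjoint qubits
time_plan = time_share(jobs, physical_qubits=4)    # sequential, reused qubits
print(space_plan)
print(time_plan)
```

On the 8-qubit pool, the first two jobs run concurrently and the third waits because only one qubit remains free. On the 4-qubit pool, all three jobs run in successive slots on overlapping qubits. A real scheduler would combine both modes and take the device's coupling map into account.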

HyperQ is a functional implementation of quantum virtualization that enables the concurrent execution of multiple quantum circuits on shared physical qubits. The system achieves this through circuit-level multiplexing, dynamically scheduling and interleaving operations from different logical quantum programs. Benchmarking with representative quantum algorithms has demonstrated HyperQ’s ability to improve hardware utilization without significant performance degradation, indicating the practical feasibility of virtualizing quantum resources. Initial results show that HyperQ effectively manages qubit allocation and minimizes idle time, allowing for increased throughput of quantum computations on limited hardware.

HALO: A Fine-Grained Quantum Operating System – A Pragmatic Approach

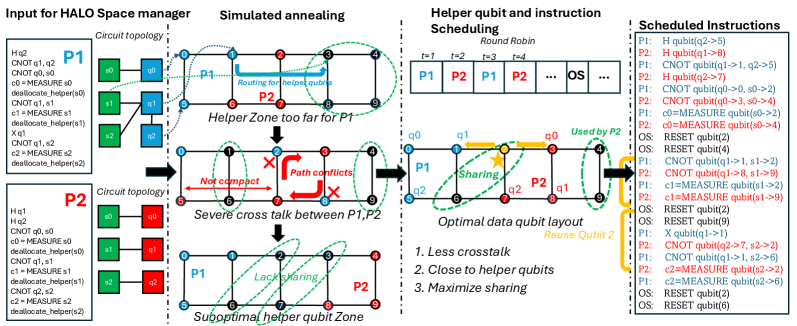

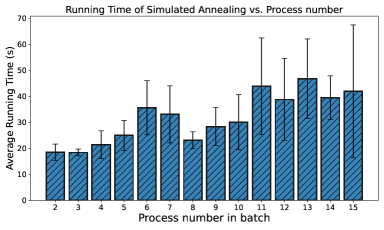

The HALO quantum operating system builds upon established concepts within the field of quantum operating systems by introducing a novel resource management strategy. This approach moves beyond traditional methods by dynamically allocating and prioritizing quantum resources to improve overall system performance. Specifically, HALO utilizes techniques such as ShotAwareBatchScheduling and LayoutMapping – including algorithms like SimulatedAnnealing – to optimize the use of both DataQubit and HelperQubit resources. Benchmarking indicates a 4.44x improvement in throughput, 5.34x greater space utilization compared to IBM Quantum systems, and 10.17x more processes per batch, with a fidelity loss of 33%.

HALO employs a strategy called ShotAwareBatchScheduling to dynamically prioritize quantum jobs based on their individual computational requirements, measured in terms of required quantum shots. This scheduling is coupled with LayoutMapping techniques, specifically utilizing the SimulatedAnnealing algorithm, to efficiently assign logical qubits to physical qubits on the quantum hardware. SimulatedAnnealing allows HALO to explore various qubit allocation possibilities, seeking configurations that minimize circuit execution time and maximize resource utilization, thereby improving overall system performance.
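One way to see why shot counts matter for batching is the sketch below. It is an illustration under stated assumptions, not HALO's actual scheduler. It assumes that circuits in the same batch occupy their qubits until the longest-running member has finished all its shots, so mixing jobs with very different shot counts leaves qubits idle. The `Job` class, the greedy policy, and the "wasted qubit-shots" metric are hypothetical, and every job is assumed to fit on the device on its own.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    qubits: int
    shots: int


def shot_aware_batches(jobs, capacity):
    """Greedily pack jobs with similar shot counts into the same batch."""
    batches, current, used = [], [], 0
    # Sorting by shots puts jobs of similar duration next to each other.
    for job in sorted(jobs, key=lambda j: j.shots, reverse=True):
        if current and used + job.qubits > capacity:
            batches.append(current)
            current, used = [], 0
        current.append(job)
        used += job.qubits
    if current:
        batches.append(current)
    return batches


def wasted_qubit_shots(batch):
    """Qubit-shots spent idle while the longest job in the batch finishes."""
    longest = max(job.shots for job in batch)
    return sum(job.qubits * (longest - job.shots) for job in batch)


jobs = [Job("a", 4, 8000), Job("b", 4, 1000),
        Job("c", 4, 8000), Job("d", 4, 1000)]

arrival_order = [jobs[0:2], jobs[2:4]]           # batches formed as jobs arrive
shot_aware = shot_aware_batches(jobs, capacity=8)

naive_waste = sum(wasted_qubit_shots(b) for b in arrival_order)
aware_waste = sum(wasted_qubit_shots(b) for b in shot_aware)
print(naive_waste, aware_waste)
```

In this toy workload, batching in arrival order pairs an 8000-shot job with a 1000-shot job twice, while the shot-aware grouping pairs like with like and leaves no qubits idle.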

The HALO quantum operating system distinguishes between DataQubit and HelperQubit resources to enhance circuit execution. DataQubits are allocated for storing and manipulating the primary data of quantum computations, while HelperQubits serve as ancillary resources used during intermediate steps of algorithms – for example, in entanglement generation or error correction. This differentiation allows HALO to dynamically assign qubits based on their functional role, increasing overall resource utilization and enabling optimizations such as reduced SWAP gate overhead. By strategically employing both qubit types, HALO achieves 5.34x greater space utilization compared to IBM Quantum systems and runs 10.17x more processes per batch, contributing to a 4.44x improvement in throughput with a fidelity loss of 33%.
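The layout search described above can be sketched with a generic simulated-annealing loop. HALO's actual cost function, move set, and cooling schedule are not given here, so everything in this example is an assumption: the cost is the total coupling-graph distance between interacting logical qubits (a rough stand-in for SWAP overhead), a helper qubit is treated as one more logical qubit that interacts with the data qubits it serves, and the device is a hypothetical 6-qubit line.

```python
import math
import random


def layout_cost(layout, interactions, distance):
    """Sum of hardware distances between logical qubits that interact."""
    return sum(distance[layout[a]][layout[b]] for a, b in interactions)


def anneal_layout(n_logical, n_physical, interactions, distance,
                  steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Search for a logical-to-physical assignment with low layout_cost."""
    rng = random.Random(seed)
    # perm[i] is the physical qubit of logical qubit i for i < n_logical;
    # the remaining entries are physical qubits currently left unused.
    perm = list(range(n_physical))
    rng.shuffle(perm)
    cost = layout_cost(perm, interactions, distance)
    best, best_cost = perm[:n_logical], cost
    for step in range(steps):
        temp = t_start * (t_end / t_start) ** (step / steps)  # geometric cooling
        i, j = rng.randrange(n_logical), rng.randrange(n_physical)
        if i == j:
            continue
        perm[i], perm[j] = perm[j], perm[i]  # move or swap one logical qubit
        new_cost = layout_cost(perm, interactions, distance)
        accept = (new_cost <= cost
                  or rng.random() < math.exp((cost - new_cost) / temp))
        if accept:
            cost = new_cost
            if cost < best_cost:
                best, best_cost = perm[:n_logical], cost
        else:
            perm[i], perm[j] = perm[j], perm[i]  # undo the rejected move
    return best, best_cost


# Hypothetical device: 6 qubits on a line, so distance is |i - j|.
n_physical = 6
distance = [[abs(i - j) for j in range(n_physical)] for i in range(n_physical)]
# Logical qubits 0-2 are data qubits; logical qubit 3 is a helper that
# interacts with data qubits 0 and 2.
interactions = [(0, 1), (1, 2), (0, 3), (2, 3)]

best, best_cost = anneal_layout(4, n_physical, interactions, distance)
print(best, best_cost)
```

Accepting some cost-increasing moves while the temperature is high is what lets the search escape poor local layouts. A production mapper would also weigh gate error rates and crosstalk, which this sketch leaves out.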

Performance evaluations demonstrate that the HALO quantum operating system achieves a 4.44x increase in throughput compared to existing state-of-the-art methods. This gain comes with a fidelity loss of 33%. Beyond throughput, HALO exhibits significantly enhanced resource utilization, achieving 5.34x greater space efficiency than IBM Quantum systems. Furthermore, the system is capable of running 10.17 times more processes per batch, indicating a substantial improvement in parallel processing capabilities and overall system capacity.

Towards Fault Tolerance and Scalability: Managing Expectations

Achieving practical fault tolerance in quantum computing demands an exceptionally efficient allocation of available resources. Quantum error correction, the cornerstone of fault tolerance, relies on encoding a logical qubit – the unit of quantum information – across multiple physical qubits. This process, while protecting against errors, inherently increases the resource demands of any computation. HALO addresses this challenge by intelligently managing the assignment of physical qubits to logical qubits and computational tasks. Through optimized scheduling and resource sharing, HALO minimizes the overhead associated with error correction, effectively boosting the number of usable qubits for complex calculations. This strategic allocation isn’t simply about maximizing qubit density; it’s about ensuring that each qubit contributes meaningfully to the computation, bolstering the system’s resilience against noise and decoherence – essential steps towards building scalable and reliable quantum computers.

Achieving reliable quantum computation hinges on the delicate balance between minimizing unwanted interactions – known as crosstalk – and fully harnessing the power of quantum entanglement. Precise control over individual qubits, including their initialization, manipulation, and measurement, is paramount to preventing signal leakage and ensuring that operations affect only the intended qubits. Simultaneously, strategic allocation of qubits is vital to maximize entanglement opportunities; qubits positioned closer together can more readily form entangled pairs, boosting the efficiency of quantum algorithms. This careful orchestration of qubit control and allocation not only reduces error rates but also unlocks the full potential of quantum resources, paving the way for more complex and powerful computations.

Quantum error correction, while essential for building reliable quantum computers, introduces substantial overhead – requiring numerous physical qubits to protect a single logical qubit. Innovative scheduling and resource sharing techniques mitigate this burden by maximizing the utilization of available qubits. Rather than dedicating specific physical qubits solely to error correction for a single logical qubit, these methods allow multiple logical operations to share the same physical resources over time. This dynamic allocation not only minimizes the total number of physical qubits needed, effectively increasing the ‘effective qubit count’, but also streamlines the error correction process itself. By intelligently scheduling operations and sharing resources, quantum systems can achieve greater computational power with a reduced physical footprint, paving the way for more scalable and practical quantum devices.

Recent evaluations indicate that HALO significantly outperforms the earlier HyperQ system in areas critical to scalability. Specifically, HALO achieves a 2.99x improvement in space utilization, meaning that a larger share of the processor's qubits is doing useful work at any given time. This is coupled with a 4.44x increase in processes handled per batch, which raises the number of programs completed per run. These gains are more than incremental: they reduce the hardware required for a given level of computational capacity and leave more room for complex quantum algorithms on existing devices.

The pursuit of elegant quantum operating systems, like the HALO framework detailed in this paper, feels predictably optimistic. This system’s focus on fine-grained resource sharing and shot-aware scheduling, an attempt to wring every last drop of throughput from limited qubits, will inevitably encounter the harsh realities of production. One anticipates the unforeseen edge cases, the emergent bugs, and the compromises necessary to maintain stability. As Ada Lovelace observed, “The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform.” HALO, for all its ingenuity, is still bound by the limitations of its instructions and the unpredictable nature of the quantum realm. Any claim of perfect resource utilization feels… premature. Once its bugs are reproducible, it will be a stable system; until then, it’s simply a hopeful theory.

What Comes Next?

The promise of HALO, and systems like it, is not increased throughput – that’s a temporary reprieve. It’s the inevitable complication of the stack. Each layer of abstraction, each clever scheduling algorithm, introduces new failure modes, new bottlenecks production will gleefully discover. The 4.44x improvement is, in a sense, simply a quantification of existing inefficiency; a target for future regressions. One anticipates a forthcoming paper detailing the subtle ways in which shot-aware scheduling interacts with, say, cosmic ray events.

The true challenge isn’t qubit sharing, it’s fault tolerance at scale. HALO addresses resource contention, but contention is rarely fatal. Real problems emerge when a helper qubit, so elegantly managed, simply…isn’t. The field will inevitably move toward operating systems that treat errors not as exceptions, but as a fundamental property of the hardware.

Legacy will accrue, of course. This is inevitable. HALO will become a memory of better times, a baseline against which future systems are measured, and found wanting. One suspects the next generation won’t focus on optimization, but on gracefully degrading performance in the face of inevitable, systemic failure. Bugs, after all, are proof of life.

Original article: https://arxiv.org/pdf/2602.07191.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-10 11:50