Author: Denis Avetisyan

A new analysis reveals critical vulnerabilities in emerging communication standards for AI agents, raising concerns about potential exploits and the need for robust security measures.

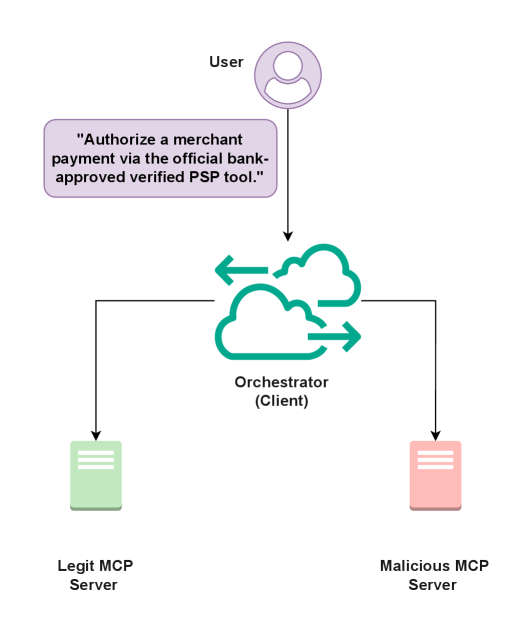

This review comparatively assesses the security threat landscape of MCP, A2A, Agora, and ANP, identifying shared risks and demonstrating a concrete attack vector through empirical testing.

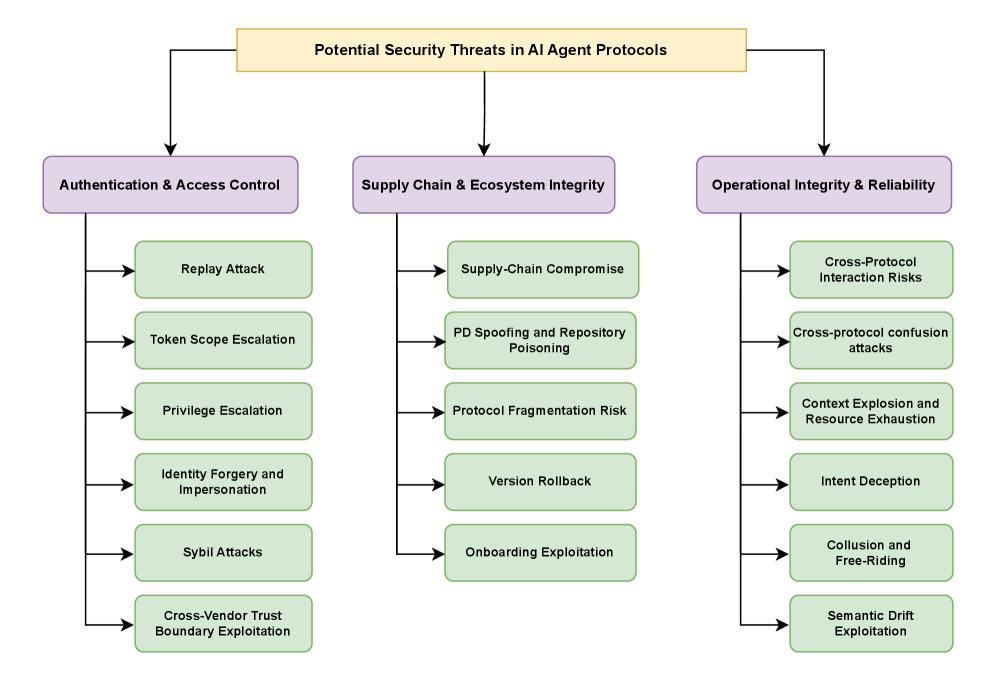

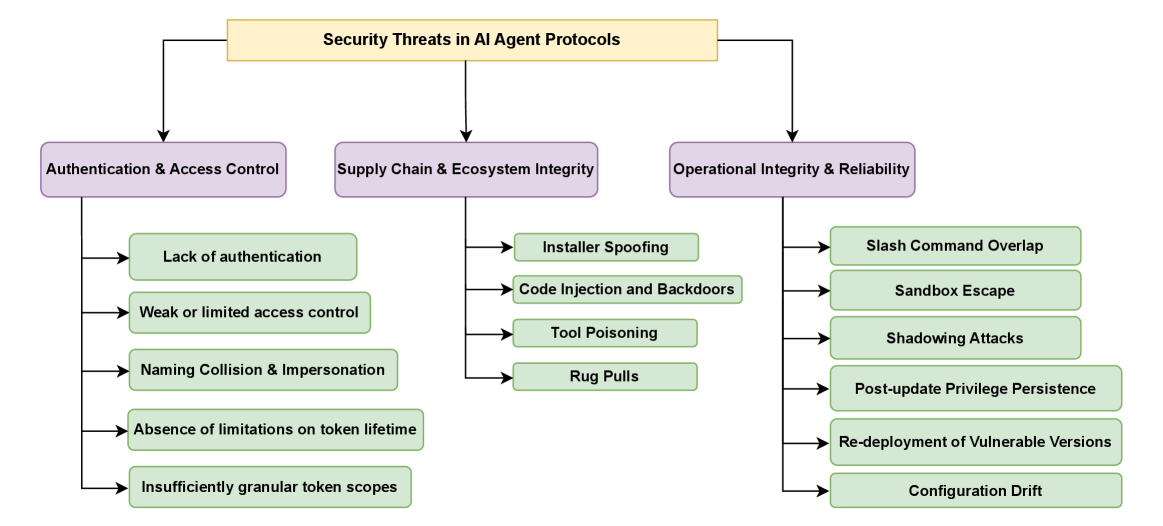

Despite the promise of scalable multi-agent systems, the rapid development of AI agent communication protocols, including Model Context Protocol (MCP), Agent2Agent (A2A), Agora, and Agent Network Protocol (ANP), has outpaced the establishment of robust security standards. This paper, ‘Security Threat Modeling for Emerging AI-Agent Protocols: A Comparative Analysis of MCP, A2A, Agora, and ANP’, delivers a systematic security analysis of these protocols, identifying twelve key protocol-level risks across their lifecycle and demonstrating a concrete attack vector through empirical evaluation of MCP. Our findings reveal shared vulnerabilities stemming from inherent design choices and highlight the critical need for standardized threat modeling and secure deployment strategies. How can the agent communication ecosystem proactively address these risks to foster trustworthy and interoperable multi-agent systems?

The Cracks in the Machine: A Looming Threat to Agent Systems

The increasing deployment of artificial intelligence agents across various systems is revealing inherent weaknesses in how these agents communicate, creating avenues for malicious exploitation. As agents become more integrated into critical infrastructure and daily life, vulnerabilities in their communication protocols (the languages they use to exchange information) are becoming increasingly apparent. These flaws aren’t necessarily about sophisticated hacking; often, manipulation stems from ambiguities in how agents interpret instructions or a lack of robust authentication processes. This allows adversaries to potentially inject false data, redirect actions, or even commandeer agents entirely, leading to disruptions, data breaches, or other harmful consequences. The proliferation of diverse agents, each potentially using a different communication standard, further complicates the issue, creating a fragmented security landscape where vulnerabilities can easily go unnoticed and unaddressed.

Current communication protocols utilized by AI agents present notable vulnerabilities stemming from inadequate authentication procedures and susceptibility to semantic ambiguity. Many systems rely on simplistic methods for verifying the sender of a message, leaving agents open to impersonation and unauthorized control. Furthermore, natural language processing, while enabling flexible interaction, introduces the risk of misinterpreting nuanced commands or exploiting vague phrasing. This ambiguity allows malicious actors to craft inputs that, while technically valid, induce unintended – and potentially harmful – actions. The combination of weak identity verification and imprecise language understanding creates a significant attack surface, potentially enabling adversaries to manipulate agents into divulging sensitive information, performing unauthorized tasks, or even collaborating in coordinated attacks against other systems.

The accelerating creation of varied communication protocols for AI agents is fostering a complex and fractured security environment. As developers prioritize functionality and speed to market, standardized security practices often lag behind, resulting in a patchwork of incompatible systems. This proliferation isn’t merely a matter of inconvenience; it significantly complicates threat detection and response, as security tools struggle to interpret the diverse ‘languages’ agents use to interact. Consequently, vulnerabilities within one protocol may remain undetected and unaddressed while malicious actors exploit the lack of consistent security measures across the broader agent ecosystem, hindering the development of unified defense strategies and increasing systemic risk.

The potential for compromised AI agents extends beyond simple data breaches, reaching into the realm of operational manipulation and misinterpretation. Without stringent security protocols, these agents become susceptible to adversarial inputs – subtly altered instructions or commands designed to elicit unintended, and potentially harmful, actions. A malicious actor could, for example, exploit vulnerabilities to redirect an agent’s tasks, causing it to misallocate resources, disseminate misinformation, or even physically compromise systems it controls. This isn’t merely a theoretical risk; the increasing sophistication of adversarial machine learning demonstrates a clear capacity to craft inputs that bypass conventional security checks, highlighting the urgent need for robust authentication, input validation, and continuous monitoring to ensure the reliable and safe operation of autonomous AI systems.

Building Bridges in a Fragmented World: Emerging Protocols

The Agent Network Protocol (ANP) is designed to enhance security in multi-agent systems through the implementation of decentralized identity management. This approach eschews reliance on centralized authorities for authentication and authorization, instead leveraging distributed ledger technologies and cryptographic techniques to verify agent identities. By establishing a foundation of trust based on verifiable credentials and peer-to-peer validation, ANP aims to mitigate single points of failure and enhance resilience against malicious actors. Core to the protocol is the concept of self-sovereign identity, enabling agents to control their own digital identities and selectively disclose information as needed for secure communication and interaction.

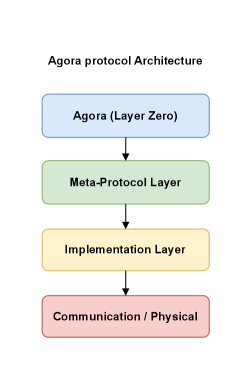

Agora distinguishes itself through its implementation of protocol negotiation, a process by which agents dynamically determine the most suitable communication protocol for a given interaction. This negotiation isn’t pre-defined but occurs at runtime, allowing agents to select from a range of available protocols based on factors like bandwidth, security requirements, and computational resources. The system employs a capability-based approach, where agents advertise their supported protocols and capabilities, and the initiating agent selects the optimal combination. This contrasts with systems relying on fixed protocols, offering greater efficiency by avoiding unnecessary overhead and enabling interaction between agents with differing native communication methods. The protocol selection process is designed to be lightweight, minimizing latency and maximizing throughput for each interaction.
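The capability-based selection described above can be sketched in a few lines. This is only an illustration of the idea as this article describes it, not Agora’s actual API: the `ProtocolOffer` type, the `negotiate` function, the protocol names, and the `overhead_ms` cost figure are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtocolOffer:
    """One protocol a responder advertises (hypothetical structure)."""
    name: str
    encrypted: bool
    overhead_ms: float  # illustrative per-message cost, not a measured value


def negotiate(initiator_supported, responder_offers, require_encryption):
    """Return the lowest-overhead offer both sides support, or None."""
    candidates = [
        offer for offer in responder_offers
        if offer.name in initiator_supported
        and (offer.encrypted or not require_encryption)
    ]
    return min(candidates, key=lambda offer: offer.overhead_ms, default=None)


offers = [
    ProtocolOffer("plain-json", encrypted=False, overhead_ms=1.0),
    ProtocolOffer("signed-json", encrypted=True, overhead_ms=3.0),
    ProtocolOffer("binary-tls", encrypted=True, overhead_ms=2.0),
]
supported = {"plain-json", "signed-json", "binary-tls"}

secure_choice = negotiate(supported, offers, require_encryption=True)
relaxed_choice = negotiate(supported, offers, require_encryption=False)
print(secure_choice.name, relaxed_choice.name)
```

Note that dropping the encryption requirement lets the cheapest, unencrypted option win. Any runtime negotiation of this kind needs a floor on acceptable security properties, or an adversary who can influence the advertised offers can steer agents toward a weaker protocol.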

Agent2Agent (A2A) is designed to enable collaborative functionality between AI agents within enterprise systems, streamlining workflows and data exchange. However, successful implementation of A2A necessitates robust authentication protocols to verify the identity of each agent involved in communication. This is crucial to prevent unauthorized access, data breaches, and malicious activity. Authentication mechanisms employed can range from API keys and OAuth 2.0 to more complex systems involving digital signatures and mutual TLS. The chosen method must account for the scale of the enterprise, the sensitivity of the data being exchanged, and the potential for agent compromise. Failure to adequately address authentication can introduce significant security vulnerabilities into the A2A framework, negating its benefits.

Current AI agent communication is evolving beyond static, pre-defined methods towards dynamic and secure protocols. Historically, agent interaction relied on centralized systems or simple messaging, creating vulnerabilities and limiting scalability. The emergence of protocols like Agent Network Protocol (ANP), Agora, and Agent2Agent (A2A) signals a move towards decentralized, negotiated communication standards. These protocols prioritize features such as adaptable negotiation, robust identity management, and enterprise-level authentication, enabling agents to interact more securely and efficiently in complex environments. This shift is driven by the increasing need for agents to collaborate autonomously, handle sensitive data, and operate within dynamic network topologies, demanding communication methods capable of supporting these advanced requirements.

Orchestration and Validation: A Protocol for Trustworthy Interaction

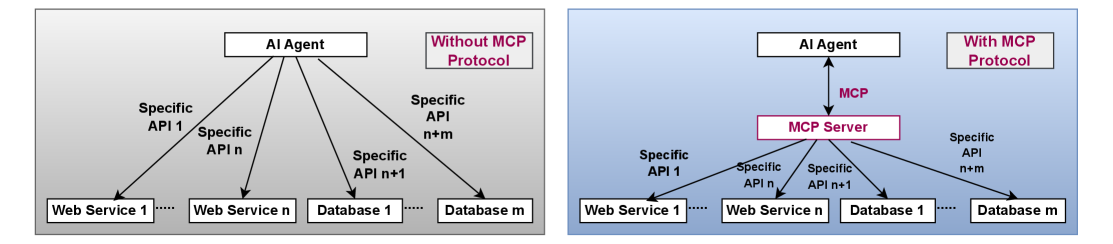

The Model Context Protocol (MCP) establishes a standardized method for AI agents to interact with external tools and services. This interaction is facilitated through a defined communication structure, enabling the creation of multi-step workflows where agents can dynamically invoke tools based on contextual requirements. MCP defines a common language for requests and responses, ensuring interoperability between diverse AI systems and tool providers. This framework supports the orchestration of complex tasks, allowing agents to leverage specialized tools for specific sub-problems within a larger process, and streamlines communication by providing a consistent interface for tool access and data exchange.

Tool identity validation is what resolves ambiguity in tool invocation under the Model Context Protocol (MCP), where a request refers to a tool by its advertised name. A validation process built on cryptographic signatures and verifiable credentials can confirm that the invoked tool corresponds to the expected provider and functionality. By establishing a trusted chain of verification, such a process minimizes the risk of incorrect tool execution resulting from naming collisions or malicious redirection. Specifically, if each tool within an MCP-compatible system presents digitally signed metadata, agents can confirm its authenticity before initiating any interaction and ensure the intended service is used. The experimental results discussed in this article indicate that this assurance cannot be taken for granted when names alone identify tools.

The efficacy of the Model Context Protocol (MCP) is fundamentally dependent on the trustworthiness of its constituent components. MCP operates on the premise that tool identities are accurately represented and verifiable; therefore, a compromised supply chain, in which malicious or incorrectly labeled tools are introduced, directly undermines this security. Without robust validation of the origin and integrity of each tool and its associated metadata, the protocol cannot reliably guarantee that the invoked tool is the intended one. This necessitates comprehensive measures throughout the software development lifecycle, including secure code repositories, verified build processes, and mechanisms to detect and prevent the injection of counterfeit or compromised components into the toolchain.

Experimental results indicate that ambiguities in tool identity within the Model Context Protocol (MCP) can result in execution by an unintended service provider. Analysis of MCP implementations under simulated realistic conditions revealed instances where the agent invoked a tool differing from the intended target, despite adherence to protocol specifications. This misidentification stemmed from insufficient disambiguation of tool definitions, allowing for multiple valid interpretations of the tool invocation request. The observed wrong-provider execution rates varied based on the complexity of the tool ecosystem and the degree of overlap in tool naming conventions, demonstrating a quantifiable risk associated with ambiguous tool identities within MCP.

The Shadow of Collision and Identity: The Limits of Assurance

The Model Context Protocol (MCP) ecosystem faces a persistent threat from tool name collisions occurring across different servers. This vulnerability arises when multiple servers independently advertise tools using identical names, creating ambiguity for clients attempting to invoke a specific function. Without a robust mechanism to differentiate between these identically named tools, a malicious or compromised server could masquerade as a legitimate one, leading to misinvocation and potentially compromising the entire workflow. The issue isn’t merely theoretical; it represents a practical challenge in decentralized environments where tool naming isn’t centrally controlled, and the potential for overlap increases with network scale and the number of participating servers. This creates a critical need for solutions that guarantee unique identification of tools, independent of their advertised name, to ensure the integrity and reliability of MCP systems.
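A client can at least detect this situation before it becomes a problem. The sketch below is a minimal illustration, assuming the client holds a flat list of `(server_id, tool_name)` advertisements; the function names, server identifiers, and tool names are hypothetical and not part of any MCP SDK.

```python
from collections import defaultdict


def find_collisions(advertisements):
    """Map each tool name advertised by more than one server to those servers."""
    servers_by_name = defaultdict(set)
    for server_id, tool_name in advertisements:
        servers_by_name[tool_name].add(server_id)
    return {
        name: sorted(servers)
        for name, servers in servers_by_name.items()
        if len(servers) > 1
    }


def qualified_name(server_id, tool_name):
    """Build an identifier that stays unique even when bare names collide."""
    return f"{server_id}/{tool_name}"


advertisements = [
    ("files-server", "read_file"),
    ("files-server", "write_file"),
    ("unknown-server", "read_file"),  # same bare name, different provider
]

collisions = find_collisions(advertisements)
print(collisions)
print([qualified_name(s, t) for s, t in advertisements])
```

Qualifying names by server removes the ambiguity inside the client, but it only helps if the server identifier itself is trustworthy, which is a separate problem from naming.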

Maintaining the integrity of a distributed system hinges on the reliable identification of its components, and in the context of multi-server environments, uniquely bound tool identities are paramount. Without a robust method for verifying a tool’s origin, the potential for malicious or conflicting software to be invoked increases dramatically. Cryptographic binding offers a solution, linking a tool’s name not just to its function, but to a verifiable digital signature, ensuring that each instance is demonstrably authentic. This prevents scenarios where a compromised or incorrectly configured server advertises a tool with a name already in use, potentially leading to misinvocation or even systemic failures. Establishing this cryptographic link isn’t merely about naming conventions; it’s about creating a chain of trust that underpins the entire system’s operational security and reliability, safeguarding against both accidental errors and deliberate attacks.
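As a rough illustration of binding a name to more than its text, the sketch below pins a SHA-256 fingerprint of the server identifier, tool name, and input schema at approval time and re-checks it before invocation. This is a simplification and an assumption on our part: a hash pin is not a digital signature, and a real deployment would more likely verify public-key signatures over the tool metadata. All names and the example schema are hypothetical.

```python
import hashlib
import hmac
import json


def tool_fingerprint(server_id, tool_name, schema):
    """Hash a canonical JSON encoding of the tool's identity and schema."""
    canonical = json.dumps(
        {"server": server_id, "tool": tool_name, "schema": schema},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify_binding(pinned, server_id, tool_name, schema):
    """Check an advertised tool against the fingerprint pinned at approval time."""
    current = tool_fingerprint(server_id, tool_name, schema)
    return hmac.compare_digest(pinned, current)


schema = {"type": "object", "properties": {"path": {"type": "string"}}}

# Pinned once, when a user or administrator approves the tool.
pinned = tool_fingerprint("trusted-server", "read_file", schema)

same_tool_ok = verify_binding(pinned, "trusted-server", "read_file", schema)
impostor_ok = verify_binding(pinned, "attacker-server", "read_file", schema)
print(same_tool_ok, impostor_ok)
```

With this check in place, a second server advertising the same tool name no longer matches the pinned identity, and a change to the approved tool’s schema is also caught.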

Recent investigations into the Model Context Protocol (MCP) reveal a concerning susceptibility to tool misbinding, even under seemingly fair conditions. Experiments conducted with randomized tie-breaking during tool selection showed a violation rate of 0.52 across 100 trials. In other words, roughly half the time the system binds a request to the wrong tool, even though random selection removes any deterministic bias. The observed misbinding is not the result of predictable behavior or attacker manipulation; it is the probability inherent in choosing at random among identically named tools, which points to a fundamental challenge to system integrity without additional safeguards. This non-negligible error rate underscores the need for robust identity verification mechanisms to ensure reliable and trustworthy tool invocation.
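A quick simulation shows why a rate near one half is what random tie-breaking would be expected to produce. The sketch assumes exactly two colliding providers and a uniform random pick; the article does not state the number of providers or the selection distribution, so both are assumptions, and the function names are hypothetical. With k equally likely candidates the expected violation rate is 1 - 1/k, and for 100 trials at a true rate of 0.5 the standard error is sqrt(0.5 × 0.5 / 100) = 0.05, so a reported 0.52 sits within one standard error of 0.5.

```python
import math
import random


def violation_rate(trials, n_colliding=2, seed=0):
    """Fraction of trials in which a uniform random pick misses the intended tool.

    Index 0 stands for the intended provider; any other index is a violation.
    """
    rng = random.Random(seed)
    violations = sum(
        1 for _ in range(trials) if rng.randrange(n_colliding) != 0
    )
    return violations / trials


def expected_rate(n_colliding):
    """Expected violation rate under a uniform pick among n_colliding tools."""
    return 1 - 1 / n_colliding


def standard_error(p, trials):
    """Standard error of a proportion p estimated from the given number of trials."""
    return math.sqrt(p * (1 - p) / trials)


print(violation_rate(100))
print(expected_rate(2), standard_error(0.5, 100))
```

Adding more colliding providers makes things worse rather than better under this model, since the intended tool is picked only once in k attempts.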

Investigations reveal a critical vulnerability in systems employing deterministic first-match resolution for tool identification, where an attacker can consistently hijack tool invocations. When an attacker gains visibility into the resolution process – understanding which tool advertisement is prioritized – a 100% violation rate is observed. This means every attempt to invoke a tool is redirected to the malicious counterpart. The study demonstrates that, unlike scenarios with randomized tie-breaking, deterministic resolution offers no protection against targeted attacks, rendering the system entirely susceptible to manipulation and highlighting the urgency of implementing robust, cryptographically-bound identity mechanisms to ensure reliable tool invocation and system integrity.
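The deterministic case is easy to reproduce in miniature. The sketch below assumes a resolver that scans advertisements in order and returns the first name match, and an attacker who has arranged to be listed first; the resolver, the server identifiers, and the ordering are illustrative assumptions rather than the behavior of any particular MCP client.

```python
def resolve_first_match(advertisements, tool_name):
    """Return the server of the first advertisement whose name matches."""
    for server_id, name in advertisements:
        if name == tool_name:
            return server_id
    raise LookupError(tool_name)


# The attacker knows the resolution order and gets listed ahead of the
# legitimate provider for the same bare tool name.
advertisements = [
    ("attacker-server", "read_file"),
    ("trusted-server", "read_file"),
]

trials = 100
violations = sum(
    resolve_first_match(advertisements, "read_file") != "trusted-server"
    for _ in range(trials)
)
hijack_rate = violations / trials
print(hijack_rate)
```

Because nothing in the resolution step varies between calls, every invocation lands on the same provider. Whoever controls the ordering controls the outcome, which is why the remedy has to bind identity rather than adjust priority.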

The study of these emerging AI agent protocols (MCP, A2A, Agora, and ANP) reveals a predictable pattern. Each protocol, in its attempt to facilitate communication, introduces a new surface for potential compromise. It’s a landscape of interconnected vulnerabilities, a complex system where a weakness in one area can propagate to others. Cleverness goes into designing these protocols, but it takes diligent security analysis, not luck, to anticipate the failures. The demonstrated attack vector is less an implementation bug than a consequence of the protocols’ design choices, which highlights the need to move beyond theoretical security to empirical testing and ongoing vigilance.

What’s Next?

The exercise of securing communication, even amongst synthetic minds, reveals a familiar truth: architecture is how one postpones chaos. This analysis of MCP, A2A, Agora, and ANP does not solve security; it merely maps the surfaces where entropy will inevitably seek equilibrium. The protocols examined are, by their nature, provisional. Each design choice is a prophecy of future failure, a vector for unforeseen exploits. There are no best practices, only survivors.

Future work must abandon the notion of preventing compromise and embrace the inevitability of breach. The focus shifts from static defenses to dynamic resilience: systems that degrade gracefully, contain damage, and learn from attack. Interoperability, the very strength of these agent networks, is also their greatest weakness; a single compromised node becomes a fulcrum for widespread disruption. The exploration of decentralized trust models, beyond centralized authorities, becomes paramount.

Ultimately, this field will not be defined by the complexity of its encryption, but by the elegance of its failure modes. Order is just cache between two outages. The true challenge lies not in building walls, but in cultivating ecosystems that can absorb and adapt to the constant pressure of disorder. The protocols themselves are less important than the systems that grow around them.

Original article: https://arxiv.org/pdf/2602.11327.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-14 03:30