Author: Denis Avetisyan

Researchers have developed a novel framework for combining the strengths of multiple AI models, overcoming common pitfalls of instability and repetitive outputs.

Sparse Complementary Fusion with Reverse Kullback-Leibler Divergence offers improved reasoning, safety, and generalization by selectively integrating complementary parameters.

Directly merging the weights of large language models offers a promising path to capability composition, yet existing methods often suffer from instability and performance degradation due to unconstrained parameter interference. This work, ‘Beyond Parameter Arithmetic: Sparse Complementary Fusion for Distribution-Aware Model Merging’, introduces Sparse Complementary Fusion with reverse KL (SCF-RKL), a novel framework that selectively integrates complementary parameters using functional divergence measurements and sparsity-inducing regularization. Through extensive evaluation across diverse benchmarks, SCF-RKL consistently outperforms existing methods in reasoning, safety, and generalization while mitigating issues like repetition amplification. Could this distribution-aware approach unlock more robust and scalable strategies for composing specialized AI capabilities?

The Erosion of Coherence: A Temporal Challenge

While large language models demonstrate impressive capabilities across a spectrum of natural language processing tasks, maintaining coherence over extended sequences remains a significant hurdle. These models, trained to predict the next token in a series, often exhibit a decline in quality as the generated text lengthens. The inherent probabilistic nature of this prediction process, while enabling creativity, can also lead to topic drift, logical inconsistencies, and a gradual loss of focus. This phenomenon isn’t merely a matter of stylistic polish; it fundamentally impacts the usefulness of LLMs in applications requiring sustained, meaningful narratives or complex reasoning, such as long-form content creation, detailed report generation, or interactive storytelling. The challenge lies not in initiating compelling text, but in sustaining that quality and relevance throughout a substantial output.

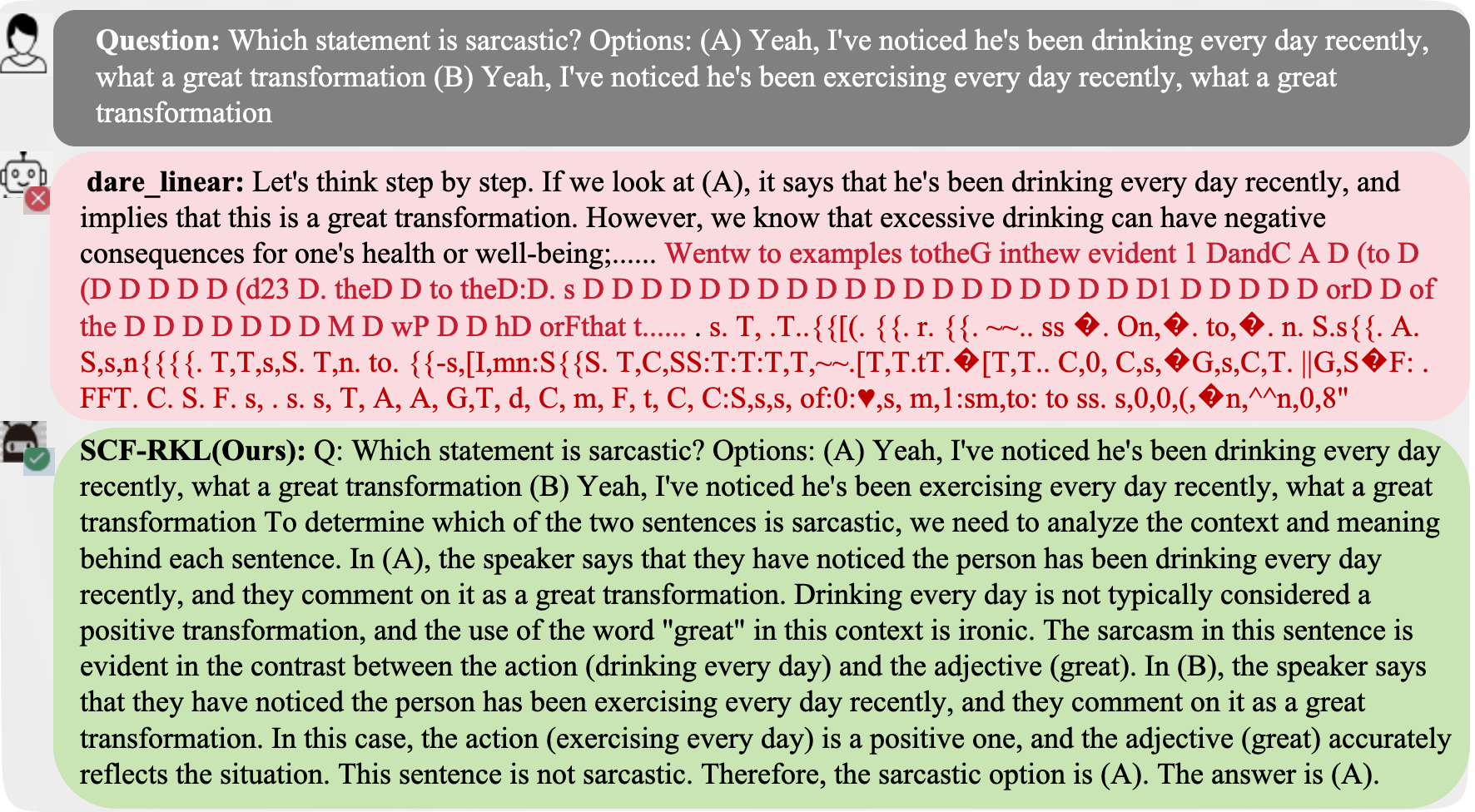

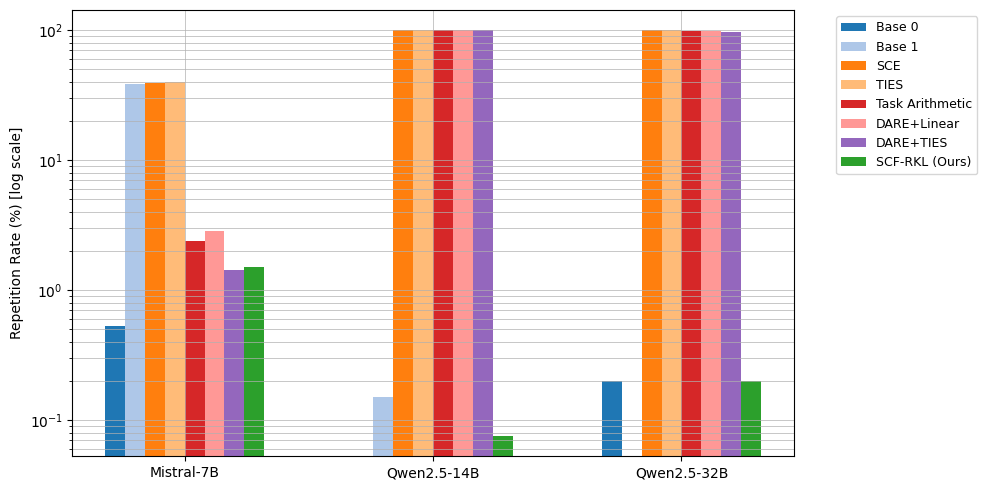

Extending the output of large language models carries an escalating risk of instability and repetitive patterns as sequence length increases, which degrades the quality and practical usefulness of the generated text. Techniques that merge the weights of multiple models aim to combine their strengths, but they can paradoxically amplify these issues. Studies demonstrate that baseline merging methods, when applied to complex tasks such as solving mathematical problems in the GSM8K dataset, can drive repetition rates to nearly 100%, rendering the generated output useless. Simply combining weights is therefore not a sufficient solution for long-form generation.
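The source does not say how the repetition rate is computed. As a minimal sketch, assuming it is measured per output as the share of n-grams that repeat an earlier n-gram, a metric of this kind could look like the following. The function name `repetition_rate` and the default `n=4` are hypothetical choices for illustration.

```python
def repetition_rate(tokens, n=4):
    """Fraction of n-grams that duplicate an earlier n-gram in the same sequence.

    Assumed definition for illustration; not taken from the paper.
    """
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not ngrams:
        return 0.0
    seen = set()
    repeats = 0
    for gram in ngrams:
        if gram in seen:
            repeats += 1  # this n-gram already appeared earlier in the output
        else:
            seen.add(gram)
    return repeats / len(ngrams)


# A looping output versus a varied one (whitespace tokenization for simplicity).
looping = "the answer is 4 the answer is 4 the answer is 4".split()
varied = "she has 3 apples and buys 5 more so she has 8".split()

looping_rate = repetition_rate(looping)
varied_rate = repetition_rate(varied)
```

A degenerate, looping generation scores high on this measure, while a normal solution trace scores at or near zero.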

Model Merging: An Attempt to Arrest Decay

Model merging is a technique for consolidating the capabilities of distinct Large Language Models (LLMs) into a unified system. Rather than training a single, monolithic model, this approach leverages pre-trained weights from multiple sources. The resulting merged model aims to inherit and combine the strengths of each constituent LLM, potentially exceeding the performance of any individual model in specific tasks or broadening overall functionality. This is achieved through various methods that mathematically combine the parameters of the pre-trained models, creating a new model of the same architecture and size as its sources but with a broader knowledge base.

Model merging techniques such as Task Arithmetic, DARE (Drop And REscale), and TIES (TrIm, Elect Sign & Merge) operate by combining the weights of fine-tuned Large Language Models (LLMs) that share a common base. Task Arithmetic computes a task vector for each fine-tuned model (its weights minus the base weights) and adds a scaled sum of these vectors back to the base. DARE randomly drops a large fraction of each task vector's entries and rescales the survivors so that the expected update is unchanged. TIES trims low-magnitude entries from each task vector, elects a sign for every parameter, and averages only the entries that agree with the elected sign, which reduces interference between models. These approaches offer potential improvements in both performance and computational efficiency by leveraging existing pre-trained models rather than training new ones from scratch.
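To make the three baselines concrete, here is a minimal sketch that treats each model as a flat list of floats. It follows the published procedures in spirit (task vectors for Task Arithmetic, random drop and rescale for DARE, trim, sign election, and agreeing-mean for TIES), but the function names, default values, and toy weights are illustrative assumptions, not code from the paper or any library.

```python
import random


def task_vector(finetuned, base):
    """Per-parameter difference between a fine-tuned model and its base."""
    return [f - b for f, b in zip(finetuned, base)]


def task_arithmetic(base, finetuned_models, scale=1.0):
    """Add the scaled sum of all task vectors to the base weights."""
    merged = list(base)
    for model in finetuned_models:
        for i, d in enumerate(task_vector(model, base)):
            merged[i] += scale * d
    return merged


def dare(delta, drop_prob, rng):
    """Randomly zero entries with probability drop_prob (< 1), rescale the rest."""
    keep = 1.0 - drop_prob
    return [d / keep if rng.random() >= drop_prob else 0.0 for d in delta]


def ties(base, finetuned_models, keep_fraction=0.5, scale=1.0):
    """Trim small deltas, elect a sign per parameter, average agreeing deltas."""
    trimmed = []
    for model in finetuned_models:
        delta = task_vector(model, base)
        k = max(1, round(keep_fraction * len(delta)))
        threshold = sorted((abs(d) for d in delta), reverse=True)[k - 1]
        trimmed.append([d if abs(d) >= threshold else 0.0 for d in delta])
    merged = list(base)
    for i in range(len(base)):
        column = [t[i] for t in trimmed]
        sign = 1.0 if sum(column) >= 0 else -1.0  # elected sign for parameter i
        agreeing = [c for c in column if c * sign > 0]
        if agreeing:
            merged[i] += scale * sum(agreeing) / len(agreeing)
    return merged


# Toy weights: the two fine-tuned models disagree in sign on parameter 0.
base = [0.0, 0.0, 0.0, 0.0]
model_a = [1.0, -0.5, 0.1, 0.0]
model_b = [-0.2, -0.5, 0.0, 0.4]

ta_merged = task_arithmetic(base, [model_a, model_b])
ties_merged = ties(base, [model_a, model_b], keep_fraction=0.5)
dare_delta = dare(task_vector(model_a, base), drop_prob=0.5, rng=random.Random(0))
```

In the toy example, Task Arithmetic lets the conflicting updates on parameter 0 partially cancel, whereas TIES keeps only the dominant direction. That cancellation is the kind of interference these methods try to manage.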

Model merging, while promising, presents challenges in parameter combination that can negatively impact output quality. Combining weights from pre-trained large language models (LLMs) isn’t simply additive; improper methods can result in destructive interference, where beneficial signals cancel each other out, leading to diminished performance. A frequently observed issue is a substantial increase in repetition within generated text; current techniques have demonstrated repetition rates approaching 100% in certain implementations, indicating a significant need for refined parameter averaging or alternative merging strategies to maintain coherent and diverse outputs.

SCF-RKL: A Framework for Stabilizing Generative Pathways

SCF-RKL introduces a model merging framework distinguished by its utilization of Reverse Kullback-Leibler (KL) Divergence as the primary optimization objective. This approach deviates from standard model merging techniques that often prioritize simply averaging parameters; instead, SCF-RKL explicitly focuses on minimizing the divergence between the merged model’s output distribution and that of the original, base model. By directly preserving the characteristics of the base model’s distribution, the framework aims to mitigate the risks of catastrophic forgetting or undesirable shifts in generation behavior during the merging process. This preservation is achieved through a loss function that penalizes deviations from the base model’s output probabilities, effectively guiding the merging process towards a solution that maintains the established generative characteristics of the original model.
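KL divergence is asymmetric, so the direction matters. The sketch below compares the two directions on a toy next-token distribution. It assumes the common convention that "reverse" KL takes the expectation under the model being shaped (here the merged model) and that the base model is the reference; the exact arguments used in SCF-RKL are not spelled out in this article, and the probabilities are made up for illustration.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0.0:
            continue  # terms with p_i = 0 contribute nothing
        if qi == 0.0:
            return math.inf  # p puts mass where q has none
        total += pi * math.log(pi / qi)
    return total


# Hypothetical next-token probabilities over a three-token vocabulary.
base_probs = [0.70, 0.29, 0.01]    # reference (base) model
merged_probs = [0.40, 0.20, 0.40]  # merged model puts mass on an unlikely token

forward_kl = kl_divergence(base_probs, merged_probs)  # KL(base || merged)
reverse_kl = kl_divergence(merged_probs, base_probs)  # KL(merged || base)
```

Here the reverse direction is the larger of the two, because it heavily penalizes the merged model for assigning probability to a token the base model considers very unlikely. This is one intuition for why a reverse-KL signal is sensitive to harmful shifts in generation behavior.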

Sparse Fusion within the SCF-RKL framework operates by selectively incorporating parameters from the adapting model into the base LLM, rather than averaging all parameters. This selective integration is achieved through a masking mechanism that identifies and prioritizes parameters exhibiting the most significant positive contribution to the target distribution, as measured by Reverse KL Divergence. By focusing on these key parameters, Sparse Fusion minimizes destructive interference (where the adaptation process degrades the base model’s established knowledge) and preserves the stability of the generation process. This targeted approach avoids diluting the base model’s capabilities with potentially detrimental modifications, leading to improved long-horizon generation quality and reduced repetition.
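The masking idea can be sketched as a top-k selection over per-parameter importance scores. In the sketch below the scores are simply given as inputs; in SCF-RKL they would be derived from reverse KL divergence, and that computation is not reproduced here. The function name `sparse_fuse`, the `keep_fraction` parameter, and the toy values are assumptions for illustration.

```python
def sparse_fuse(base, adapted, scores, keep_fraction=0.1):
    """Copy only the highest-scoring parameters from `adapted` into `base`.

    Returns the merged weights and the binary mask that was applied.
    """
    k = max(1, round(keep_fraction * len(base)))
    ranked = sorted(range(len(base)), key=lambda i: scores[i], reverse=True)
    mask = [0] * len(base)
    for i in ranked[:k]:
        mask[i] = 1  # parameter i is taken from the adapted model
    merged = [a if m else b for b, a, m in zip(base, adapted, mask)]
    return merged, mask


# Toy example: four parameters, keep the top half by score.
base = [0.0, 1.0, 2.0, 3.0]
adapted = [0.5, 1.0, 1.0, 3.2]
scores = [0.9, 0.0, 0.1, 0.4]  # hypothetical divergence-based importance

merged, mask = sparse_fuse(base, adapted, scores, keep_fraction=0.5)
```

Parameters outside the mask stay at their base values, which is how a sparse scheme limits interference with what the base model already does well.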

Evaluation of the SCF-RKL framework demonstrates substantial improvements in long-form text generation. On the GSM8K dataset, the merged model achieved a repetition rate as low as 0.2%, a large reduction in redundant output compared with the near-100% rates reported for baseline merging methods. Beyond minimizing repetition, SCF-RKL consistently enhances the overall coherence of generated text, resulting in more logically structured and understandable long-form content. These gains were observed across various benchmark tasks designed to assess extended sequence modeling capabilities.

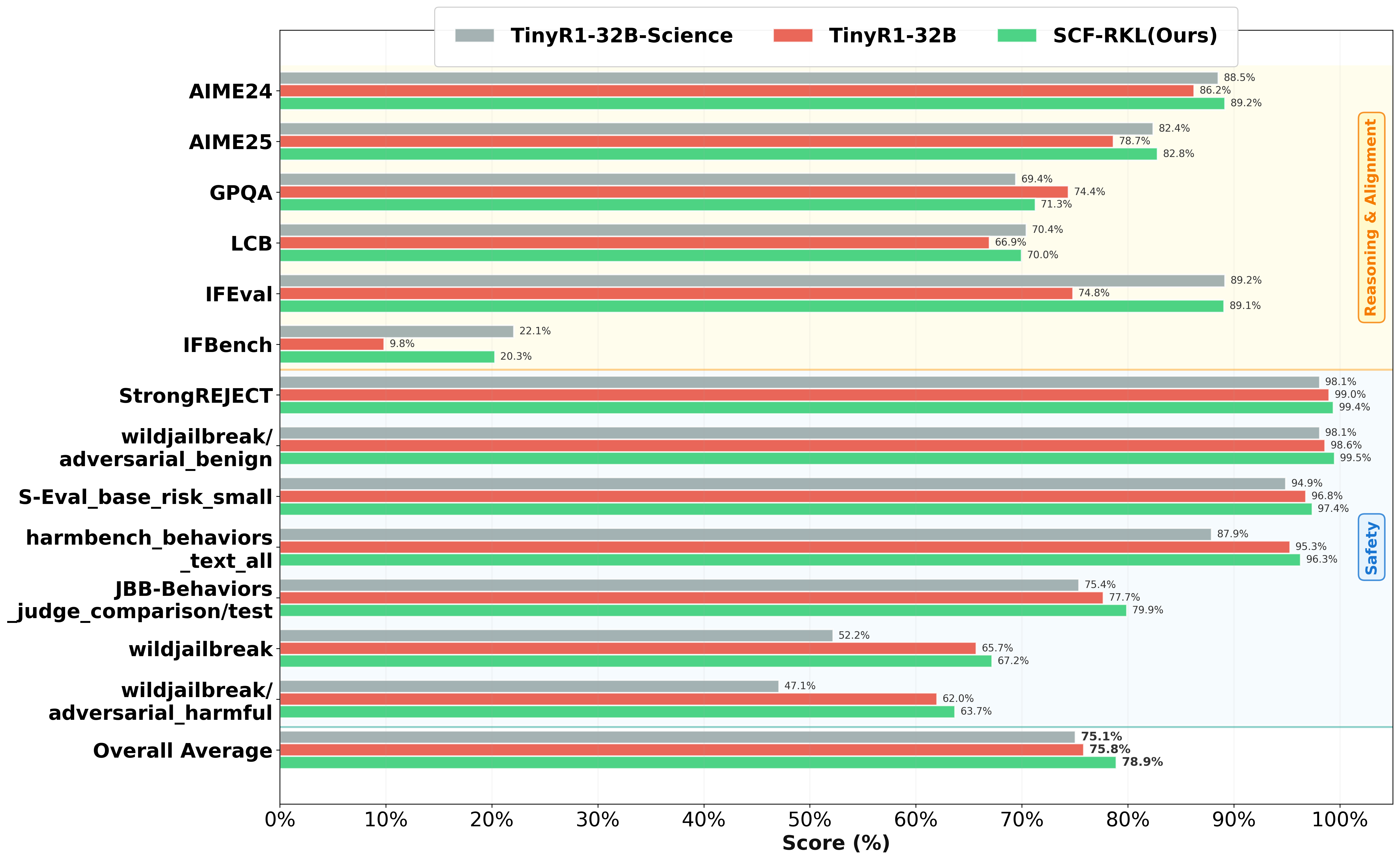

Evaluation of the SCF-RKL framework across multiple Large Language Models, including LLaMA3, Qwen2.5, Mistral, and DeepSeek-R1, demonstrates significant performance gains. Testing across a range of benchmarks revealed accuracy improvements of up to 3 points compared to the strongest performing base model for each architecture. On advanced reasoning tasks at the 32B parameter scale, SCF-RKL achieved an average accuracy increase of 0.86 points, indicating its effectiveness in enhancing complex reasoning across diverse LLM architectures.

The Geometry of Stability: A Deeper Resonance

The success of the SCF-RKL method isn’t simply a matter of algorithmic tweaks; it fundamentally relies on the geometric arrangement of a model’s parameters and how those parameters are distributed within a high-dimensional space. Rather than treating parameters as isolated values, this approach recognizes that their relationships – the shape and curvature of the parameter landscape – directly influence the model’s stability and ability to generalize. SCF-RKL effectively preserves the underlying geometry during model merging, preventing disruptive shifts in this landscape that often lead to degraded performance. By focusing on maintaining the intrinsic geometric structure, the method avoids introducing distortions that would compromise the model’s representational capacity, resulting in a more robust and reliable outcome compared to methods that disregard these underlying spatial relationships.

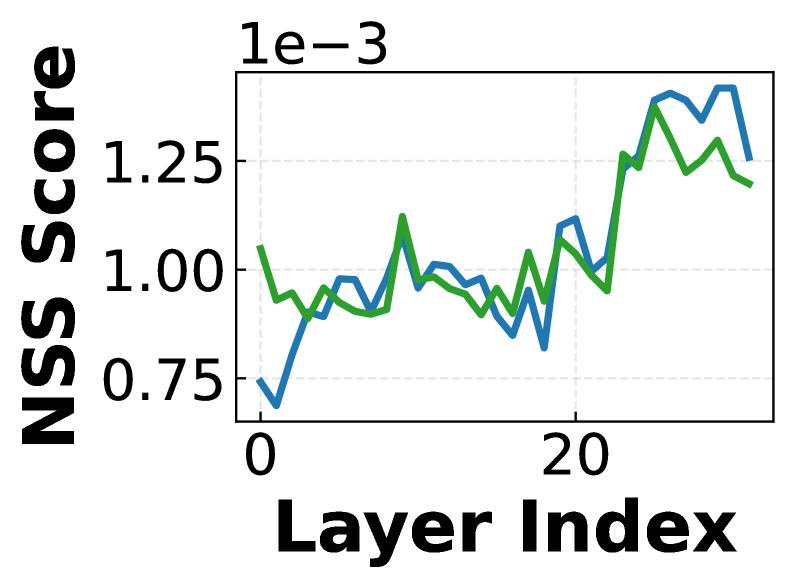

Investigating the distribution of a model’s parameters through the lens of spectral geometry reveals key connections to its stability and robustness. This approach treats the high-dimensional parameter space as a geometric object, analyzing its eigenvalues and eigenvectors – essentially, the ‘shape’ of the parameter distribution. By examining these spectral properties, researchers can quantify how changes to the model – such as those induced during merging – affect its inherent stability. A smoother, more consistent spectral signature generally indicates a more robust model, less prone to catastrophic failure or unpredictable outputs. This contrasts with fragmented or chaotic spectral patterns, which suggest instability and potential for divergence. Therefore, spectral geometry provides a powerful tool for not only diagnosing model fragility but also for guiding the development of techniques that preserve beneficial geometric structures during model modification and ensure consistent performance.

The stability of generative models during merging hinges on maintaining the underlying geometric relationships within their parameter space. Recent research indicates that techniques preserving this structure yield significantly improved generation quality; specifically, the SCF-RKL method demonstrates this principle by achieving an order of magnitude reduction in Normalized Spectral Shift (NSS) compared to conventional model merging approaches. This metric, NSS, effectively quantifies the distortion introduced into the model’s parameter space during merging – a lower NSS correlates directly with better preservation of the original geometric structure and, consequently, more stable and higher-quality outputs. The substantial decrease in NSS observed with SCF-RKL provides compelling evidence that focusing on geometric fidelity is paramount for robust model merging and opens exciting possibilities for developing future techniques grounded in these principles.
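The article does not give the formula for NSS. Purely to illustrate what a spectral distortion measure can look like, the sketch below computes the relative change in a weight matrix's largest singular value between a base and a merged model. This is an assumed stand-in, not the paper's definition, and the helper names `spectral_norm` and `relative_spectral_shift` are hypothetical.

```python
import math


def spectral_norm(matrix, iterations=200):
    """Largest singular value of a dense matrix via power iteration on M^T M.

    The uniform start vector is a simplification; it would fail if it were
    exactly orthogonal to the top right singular vector.
    """
    rows, cols = len(matrix), len(matrix[0])
    v = [1.0 / math.sqrt(cols)] * cols
    for _ in range(iterations):
        u = [sum(matrix[i][j] * v[j] for j in range(cols)) for i in range(rows)]  # u = M v
        w = [sum(matrix[i][j] * u[i] for i in range(rows)) for j in range(cols)]  # w = M^T u
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in w]
    u = [sum(matrix[i][j] * v[j] for j in range(cols)) for i in range(rows)]
    return math.sqrt(sum(x * x for x in u))  # ||M v|| for the converged unit v


def relative_spectral_shift(base_matrix, merged_matrix):
    """|sigma_max(merged) - sigma_max(base)| / sigma_max(base)."""
    base_sigma = spectral_norm(base_matrix)
    return abs(spectral_norm(merged_matrix) - base_sigma) / base_sigma


# Toy 2x2 weight matrices: merging inflates the dominant direction by 10%.
base_w = [[3.0, 0.0], [0.0, 1.0]]
merged_w = [[3.3, 0.0], [0.0, 1.0]]

shift = relative_spectral_shift(base_w, merged_w)
```

A measure like this is near zero when merging leaves the dominant spectral structure of a layer intact and grows as the merge distorts it, which matches the qualitative role the article assigns to NSS.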

The understanding that model stability is intrinsically linked to the geometry of its parameter space is catalyzing novel approaches to model merging. Researchers are now exploring techniques that explicitly preserve, or even optimize, this geometric structure during the merging process, moving beyond simple averaging or interpolation of weights. This includes developing algorithms that analyze the curvature and topology of the parameter landscape to identify regions of high stability and guide the merging process accordingly. Preliminary investigations suggest that by focusing on maintaining geometric consistency, it’s possible to create merged models that not only exhibit improved stability – resisting catastrophic forgetting or divergence – but also demonstrate enhanced generalization capabilities and robustness to adversarial perturbations, potentially unlocking significant advancements in the field of machine learning and artificial intelligence.

The pursuit of model merging, as detailed in this work, reveals a fundamental truth about complex systems: their inherent tendency toward decay. While SCF-RKL attempts to mitigate instability and repetition – symptoms of this decay – it acknowledges the constant negotiation between past performance and present adaptability. This resonates with Dijkstra’s assertion: “It’s not enough to just do the right thing; you must also understand why it’s the right thing.” The framework’s selective parameter integration, guided by reverse KL divergence, isn’t simply about achieving better reasoning or safety alignment; it’s a considered response to the inevitable erosion of generative stability, a proactive measure against the ‘mortgage’ of technical debt inherent in any evolving system. The study doesn’t halt decay, but instead aims for a graceful aging process.

What Lies Ahead?

The pursuit of model merging, as exemplified by SCF-RKL, reveals a fundamental tension: the desire to aggregate knowledge against the inevitable decay of coherence. Each parameter integrated is a point of potential fracture, a new avenue for the amplification of subtle instabilities. The framework offers a temporary reprieve, a method for sculpting a more robust composite, but it does not eliminate the underlying entropy. Uptime, in this context, is a rare phase of temporal harmony, quickly yielding to the relentless creep of distributional shift and the emergence of unforeseen failure modes.

Future work will likely focus on extending the principles of sparse fusion beyond parameter space, exploring methods to selectively merge entire sub-networks or algorithmic components. A critical, and often overlooked, challenge lies in quantifying the ‘safety alignment’ of merged models – not simply verifying performance on curated benchmarks, but assessing resilience to adversarial manipulation and unintended consequences. This requires moving beyond purely empirical evaluations and incorporating principles of spectral geometry to understand the intrinsic fragility of the resulting system.

Ultimately, the problem of model merging is not merely a technical one; it is a reflection of the inherent limitations of complex systems. Technical debt, in this light, is akin to erosion – a gradual accumulation of imperfections that compromises long-term stability. The goal, then, is not to achieve perfect fusion, but to engineer systems that age gracefully, adapting to change and minimizing the impact of inevitable decay.

Original article: https://arxiv.org/pdf/2602.11717.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-15 07:52