Author: Denis Avetisyan

A novel system leverages semantic communication and advanced signal processing to deliver high-quality images even in challenging wireless environments.

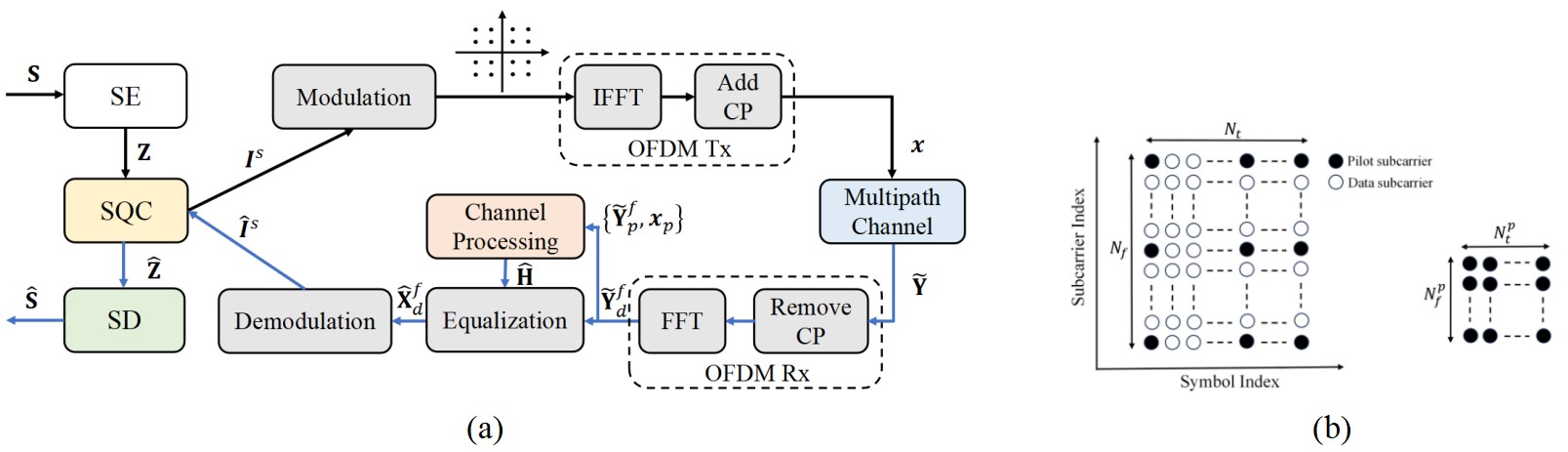

This work presents VQ-DSC-R, a robust digital semantic communication framework utilizing vector quantization, Swin Transformers, and conditional diffusion models with OFDM transmission for enhanced channel estimation and efficient image delivery.

While existing semantic communication research largely focuses on analog transmission with simplified channel models, achieving interoperability with practical digital infrastructure remains a challenge. This paper introduces ‘VQ-DSC-R: Robust Vector Quantized-Enabled Digital Semantic Communication With OFDM Transmission’, a novel system integrating a Swin Transformer-based semantic encoder, adaptive vector quantization, and a conditional diffusion model for robust channel estimation. The proposed VQ-DSC-R system demonstrably improves compression ratios and transmission reliability in multipath fading channels through end-to-end optimization. Could this approach pave the way for more efficient and dependable wireless communication of semantic information in real-world applications?

Breaking the Signal: The Fragility of Conventional Communication

Conventional communication systems prioritize the accurate delivery of symbols, treating information as a sequence of discrete units to be faithfully transmitted across a channel. However, this approach is inherently fragile; even minor disturbances – noise, interference, or distortion – can corrupt these symbols, leading to errors. More critically, focusing solely on symbol fidelity neglects the semantic content – the actual meaning – of the message. A perfectly received symbol is meaningless if it doesn’t contribute to understanding. This symbol-centric approach becomes particularly inefficient as data demands increase, requiring ever-greater bandwidth and power to maintain reliability. Consequently, subtle semantic errors, or a lack of overall comprehension, can occur even when the technical transmission appears flawless, highlighting a fundamental limitation in prioritizing precise symbol delivery over effective meaning conveyance.

The escalating volume of data transmission, coupled with the imperative for reliable communication in increasingly noisy and unpredictable environments, is driving a fundamental reassessment of communication strategies. Traditional methods, prioritizing faithful symbol delivery, often falter when faced with interference or bandwidth limitations. Consequently, research is pivoting towards semantic-centric communication, where the focus shifts from transmitting bits accurately to ensuring the meaning of the message is successfully conveyed. This approach accepts a degree of flexibility in symbol representation, prioritizing successful decoding of the intended information even if the exact transmitted signal is degraded. By emphasizing semantic integrity over precise replication, these emerging techniques promise more robust and efficient communication networks capable of meeting the demands of a data-saturated world and operating effectively in challenging conditions.

Current communication systems frequently prioritize squeezing as much data as possible through a channel, often at the expense of dependability. This trade-off between compression efficiency and reliable transmission becomes critically apparent in adverse conditions: think of a faint radio signal, a congested wireless network, or deep-sea data transfer. Highly compressed signals maximize bandwidth usage, but because they carry little redundancy they are far more susceptible to noise and interference, and a single corrupted bit can invalidate a large part of the decoded data. Consequently, even minor disruptions can cause significant data loss or require complex and time-consuming error correction. The challenge lies in developing strategies that balance these competing demands, ensuring both high throughput and robust performance even in the face of substantial environmental obstacles.

Decoding Intent: The VQ-DSC-R System Architecture

The Robust Vector Quantized-Enabled Digital Semantic Communication system with OFDM transmission (VQ-DSC-R) is designed to transmit the meaning of the data rather than the raw data itself. It first applies semantic encoding, which extracts features representing the core meaning of the input, and then compresses those features using vector quantization. The resulting compact, discrete representation allows efficient transmission. Orthogonal Frequency Division Multiplexing (OFDM) further improves reliability by dividing the signal across many narrowband subcarriers, mitigating the frequency-selective fading and interference common in wireless channels. Together, these components aim to deliver robust communication with lower bandwidth requirements than traditional methods that transmit all source bits directly.

Vector quantization (VQ) within the VQ-DSC-R system replaces each input feature vector with the index of its nearest entry in a codebook shared by the transmitter and receiver. This drastically reduces the data rate, because only the index, rather than the full vector, needs to be sent. The codebook is optimized to capture the most salient semantic information in the input data, so that critical features are preserved despite the compression. The compression rate depends on the codebook size K and the vector dimension D: each D-dimensional vector is represented by an index of about log2(K) bits, so smaller codebooks (or longer vectors per index) yield higher compression but potentially greater information loss. The codebook is therefore tuned for minimal semantic distortion, balancing compression efficiency against semantic accuracy.
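
The index-based encoding and the compression arithmetic above can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper’s implementation: the random codebook stands in for a learned one, and the assumption that raw features would otherwise be sent as 32-bit floats is ours.

```python
import numpy as np

def vq_encode(features: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Map each D-dim feature vector (N, D) to the index of its nearest codeword in (K, D)."""
    # Squared Euclidean distance between every feature and every codeword: (N, K).
    dists = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def vq_decode(indices: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Receiver side: look the transmitted indices up in the shared codebook."""
    return codebook[indices]

def compression_ratio(dim: int, codebook_size: int, bits_per_value: int = 32) -> float:
    """Bits of one raw vector (assumed 32-bit floats) divided by bits of one index."""
    bits_per_index = int(np.ceil(np.log2(codebook_size)))
    return dim * bits_per_value / bits_per_index

rng = np.random.default_rng(0)
codebook = rng.standard_normal((256, 8))   # K = 256 codewords, D = 8 (stand-in for a learned codebook)
features = codebook[[3, 17, 200]] + 0.01 * rng.standard_normal((3, 8))  # features near 3 codewords
idx = vq_encode(features, codebook)        # only these indices are transmitted
recon = vq_decode(idx, codebook)
print(idx, compression_ratio(8, 256), compression_ratio(8, 16))
```

With K = 256 each 8-dimensional vector costs 8 bits instead of 256, a 32x reduction; shrinking the codebook to K = 16 doubles that to 64x at the price of a coarser representation.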

Orthogonal Frequency Division Multiplexing (OFDM) mitigates the effects of multipath fading by dividing a high-bandwidth channel into many narrowband subcarriers. Because each subcarrier’s bandwidth is smaller than the coherence bandwidth of the channel, each one experiences approximately flat fading. A cyclic prefix (a copy of the end of each OFDM symbol placed in front of it, longer than the channel’s delay spread) removes intersymbol interference between consecutive symbols and turns the channel’s linear convolution into a circular one. As a result, each subcarrier sees a single complex gain, and equalization reduces to one complex division per subcarrier. Consequently, OFDM provides a robust and reliable link in environments characterized by reflections, scattering, and delay spread, such as indoor spaces and urban canyons.
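
The effect of the cyclic prefix can be checked numerically. The sketch below assumes 64 subcarriers, a 16-sample prefix, QPSK symbols, and a made-up 3-tap channel with noise omitted; none of these values come from the paper. It shows that after removing the prefix, every subcarrier is recovered by a one-tap equalizer.

```python
import numpy as np

rng = np.random.default_rng(1)
N_SC, CP_LEN = 64, 16                         # illustrative sizes (assumptions)
h = np.array([1.0, 0.5 - 0.3j, 0.2j])         # toy 3-tap multipath channel, shorter than the CP

# QPSK symbols, one per subcarrier.
bits = rng.integers(0, 2, size=(N_SC, 2))
X = ((2 * bits[:, 0] - 1) + 1j * (2 * bits[:, 1] - 1)) / np.sqrt(2)

# Transmitter: IFFT to the time domain, then prepend the cyclic prefix.
x = np.fft.ifft(X)
x_cp = np.concatenate([x[-CP_LEN:], x])

# Channel: linear convolution with the multipath impulse response (noise omitted).
y_cp = np.convolve(x_cp, h)[: len(x_cp)]

# Receiver: drop the CP and return to the subcarrier domain.
Y = np.fft.fft(y_cp[CP_LEN:])

# The CP made the convolution circular, so subcarrier k sees one complex gain H[k].
H = np.fft.fft(h, N_SC)
X_hat = Y / H                                 # one-tap zero-forcing equalizer per subcarrier
print(np.allclose(X_hat, X))
```

Dropping the prefix (sending `x` instead of `x_cp`) breaks the circular structure, and the simple division no longer recovers `X` exactly.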

Adaptive Refinement: The ANDVQ Scheme in Detail

The Adaptive Noise Variance Vector Quantization (ANDVQ) scheme improves upon traditional vector quantization by dynamically adjusting quantization step sizes based on the estimated noise variance within each input vector. This is achieved by modeling the noise distribution and incorporating its characteristics into the quantization process; specifically, vectors exhibiting higher noise levels are assigned larger quantization steps to minimize the impact of noise on the quantized representation. Conversely, vectors with lower noise variance benefit from finer quantization, preserving greater detail. This adaptive refinement allows for a more efficient allocation of quantization bits, resulting in reduced quantization error and improved signal representation compared to fixed-step quantization methods.
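
To make the idea of noise-dependent step sizes concrete, here is a toy sketch. It is hypothetical and deliberately simplified: it uses per-vector uniform scalar quantization with a step that grows with the estimated noise standard deviation, and the parameters `base_step` and `k` are invented for illustration. It is not the paper’s ANDVQ algorithm.

```python
import numpy as np

def noise_adaptive_quantize(vectors, noise_var, base_step=0.05, k=1.0):
    """Toy noise-adaptive uniform quantizer (hypothetical; not the paper's ANDVQ).

    vectors:   (N, D) feature vectors
    noise_var: (N,) estimated noise variance of each vector
    Step size for vector i: base_step + k * sqrt(noise_var[i]), so noisier
    vectors get coarser steps and cleaner vectors get finer ones.
    """
    steps = base_step + k * np.sqrt(noise_var)            # one step size per vector
    q = np.round(vectors / steps[:, None]) * steps[:, None]
    return q, steps

rng = np.random.default_rng(0)
v = rng.standard_normal((2, 4))
q, steps = noise_adaptive_quantize(v, noise_var=np.array([0.0, 0.25]))
err = np.abs(q - v)
print(steps, err.max(axis=1))
```

The per-element error is bounded by half the step: at most 0.025 for the clean vector and 0.275 for the noisy one, so precision is spent where the signal is reliable.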

The Straight-Through Estimator (STE) addresses the non-differentiability of the quantization operation, a critical barrier to training quantized neural networks via backpropagation: the gradient of a rounding or nearest-codeword operation is zero almost everywhere and undefined at the decision boundaries. In the forward pass, the network uses the actual quantized output. In the backward pass, the quantization function is treated as if it were the identity, so the gradient with respect to the quantized value is copied unchanged to the continuous input. This approximation lets gradients flow through the discrete quantization step, enabling end-to-end training of the entire network despite the inherent discontinuity. The STE does not modify the quantization process itself; it only provides a gradient estimate during training.
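
The forward/backward asymmetry can be written out by hand. This minimal NumPy sketch uses rounding as a stand-in quantizer and a squared-error loss, both our assumptions. In autodiff frameworks the same trick is usually expressed as `x + (q - x).detach()` in PyTorch or `x + jax.lax.stop_gradient(q - x)` in JAX.

```python
import numpy as np

def quantize(x):
    """Stand-in quantizer: rounding, whose true derivative is 0 almost everywhere."""
    return np.round(x)

def forward_backward_ste(x, target):
    """Loss L = sum((q(x) - target)**2) and its gradient w.r.t. x, with and without the STE."""
    q = quantize(x)                    # forward pass uses the real quantized value
    loss = np.sum((q - target) ** 2)
    grad_q = 2.0 * (q - target)        # dL/dq
    grad_x_ste = grad_q * 1.0          # straight-through: pretend dq/dx = 1
    grad_x_true = grad_q * 0.0         # exact derivative of round(): no learning signal
    return loss, grad_x_ste, grad_x_true

x = np.array([0.2, 1.7, -0.6])
target = np.array([1.0, 1.0, 0.0])
loss, g_ste, g_true = forward_backward_ste(x, target)
print(loss, g_ste, g_true)
```

Here `q = [0, 2, -1]`, so the loss is 3 and the STE gradient `[-2, 2, -2]` pushes each input toward its target, whereas the exact gradient is zero and would stall training.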

According to the paper, the ANDVQ scheme improves performance because it adjusts quantization levels to the noise characteristics of the input. This adaptive quantization yields a more precise representation of the signal and loses less information than static quantization. Reported benchmarks against baseline quantization schemes show a significant reduction in quantization error, which translates into higher signal-to-noise ratios and better overall system performance on the tested datasets. The gain comes from preserving crucial signal details while still compressing the data efficiently.

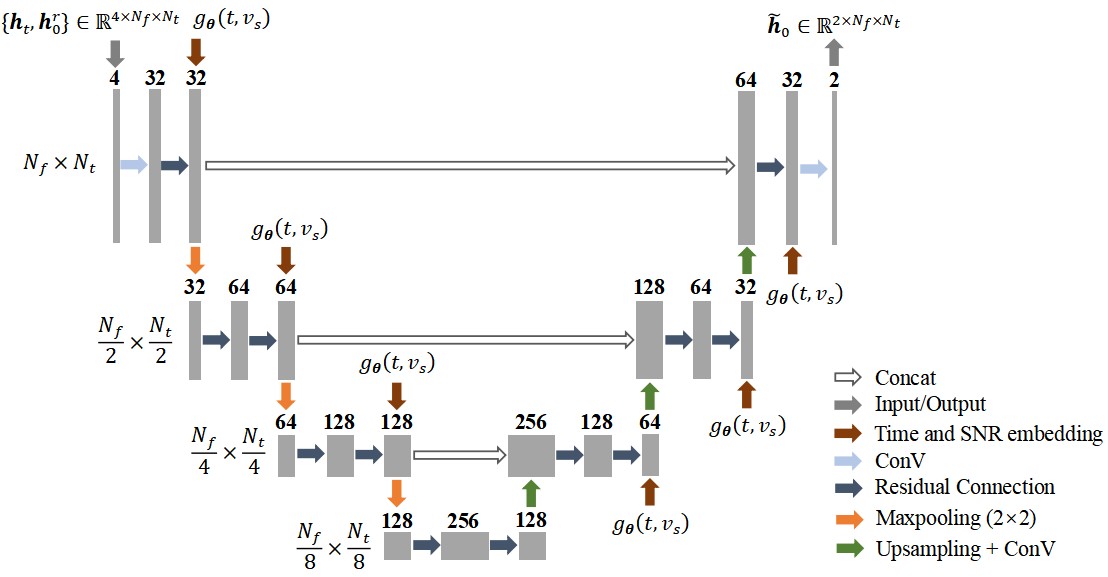

Predicting the Channel: Conditional Diffusion for Estimation

The channel estimation process utilizes a Conditional Diffusion Model (CDM) to iteratively refine initial estimates by learning to reverse a diffusion process that gradually adds noise to the true channel. This denoising approach, implemented through a series of learned reverse diffusion steps, allows the system to move from a noisy initial state toward a more accurate representation of the actual channel. The CDM is conditioned on available observations, such as pilot signals, to guide the denoising process and ensure convergence to a plausible channel estimate. This technique contrasts with direct estimation methods by framing channel estimation as a probabilistic inference problem, enabling improved robustness to noise and interference.
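
The diffusion mechanics described above follow the standard denoising diffusion (DDPM) recipe, sketched below under several assumptions: a linear noise schedule with 50 steps, the complex channel stored as a real array of real and imaginary parts, and `eps_model` as a placeholder for a trained, observation-conditioned noise predictor. The paper’s actual schedule, network, and conditioning are not reproduced here.

```python
import numpy as np

T = 50
betas = np.linspace(1e-4, 0.05, T)      # illustrative linear noise schedule (assumption)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)         # alpha_bar_t = product of alphas up to step t

def forward_noise(h0, t, rng):
    """Sample h_t ~ q(h_t | h_0) in closed form and return the noise that was added."""
    eps = rng.standard_normal(h0.shape)
    ht = np.sqrt(alpha_bars[t]) * h0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return ht, eps

def reverse_sample(eps_model, cond, shape, rng):
    """DDPM ancestral sampling; `cond` is passed to the noise predictor at every step.

    eps_model(h_t, t, cond) stands in for a hypothetical trained network that
    predicts the noise in h_t given the observation `cond` (e.g. a pilot-based estimate).
    """
    h = rng.standard_normal(shape)      # start from pure Gaussian noise
    for t in reversed(range(T)):
        eps_hat = eps_model(h, t, cond)
        # Model estimate of the mean of h_{t-1} given h_t and the predicted noise.
        mean = (h - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        noise = rng.standard_normal(shape) if t > 0 else 0.0
        h = mean + np.sqrt(betas[t]) * noise   # no noise is added on the final step
    return h

rng = np.random.default_rng(0)
h0 = rng.standard_normal((2, 16))       # toy channel: real and imaginary parts of 16 subcarriers
ht, eps = forward_noise(h0, t=30, rng=rng)
# With a perfect noise prediction, h_0 is recovered exactly from h_t.
h0_rec = (ht - np.sqrt(1.0 - alpha_bars[30]) * eps) / np.sqrt(alpha_bars[30])
```

Calling `reverse_sample` several times with the same `cond` but different random states draws several plausible channel estimates, which is what makes the probabilistic treatment of the channel possible.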

Traditional channel estimation techniques often treat the channel as a single, deterministic value, which is insufficient for accurately representing the inherent randomness of wireless environments. This system instead models the channel as a probability distribution, allowing it to quantify and address the uncertainty introduced by phenomena like multipath fading, Doppler shifts, and noise. By characterizing the channel statistically, the system can generate multiple plausible channel realizations, enabling a more robust estimation process and mitigating the impact of fading on signal reliability. This probabilistic approach provides a more complete representation of the channel’s state, improving the accuracy of subsequent signal processing operations and enhancing overall system performance.

System performance was evaluated using the Extended Pedestrian A (EPA) channel model, a 3GPP-standardized multipath profile with a relatively low delay spread that is widely used to emulate pedestrian-speed wireless links. Results demonstrate that the Conditional Diffusion Model (CDM)-based channel estimation scheme outperforms both Least Squares (LS) and ReEsNet methods. Specifically, the CDM achieved lower Normalized Mean Squared Error (NMSE) and correspondingly lower Bit Error Rate (BER) across a range of signal-to-noise ratios, indicating more accurate and reliable channel estimation.
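
For reference, the LS baseline and the NMSE metric can be reproduced on an EPA channel in a short simulation. This sketch uses the 3GPP EPA tap delays and powers and assumes block fading without Doppler, 72 subcarriers at 15 kHz spacing, unit-modulus pilots on every subcarrier, and 10 dB SNR. It is not the paper’s simulation setup, and no CDM or ReEsNet model is included.

```python
import numpy as np

# 3GPP EPA power-delay profile (TS 36.101 / 36.104, Annex B).
EPA_DELAYS_NS = np.array([0, 30, 70, 90, 110, 190, 410])
EPA_POWERS_DB = np.array([0.0, -1.0, -2.0, -3.0, -8.0, -17.2, -20.8])

def epa_frequency_response(n_sc, delta_f_hz, rng):
    """One block-fading EPA realization: Rayleigh taps -> response on n_sc subcarriers."""
    p = 10 ** (EPA_POWERS_DB / 10)
    p = p / p.sum()                                   # normalize so that E|H[k]|^2 = 1
    taps = np.sqrt(p / 2) * (rng.standard_normal(7) + 1j * rng.standard_normal(7))
    k = np.arange(n_sc)[:, None]
    tau = EPA_DELAYS_NS[None, :] * 1e-9
    return (taps[None, :] * np.exp(-2j * np.pi * k * delta_f_hz * tau)).sum(axis=1)

def ls_estimate(y_pilot, x_pilot):
    """Least-squares estimate at pilot positions: H_ls = Y / X."""
    return y_pilot / x_pilot

def nmse_db(h_est, h_true):
    """Normalized mean squared error in dB, pooled over all samples."""
    return 10 * np.log10(np.sum(np.abs(h_est - h_true) ** 2) / np.sum(np.abs(h_true) ** 2))

rng = np.random.default_rng(0)
snr_db, n_sc, n_trials = 10.0, 72, 2000               # illustrative settings (assumptions)
noise_var = 10 ** (-snr_db / 10)
H_all, H_ls_all = [], []
for _ in range(n_trials):
    H = epa_frequency_response(n_sc, 15e3, rng)
    X = np.exp(1j * np.pi / 2 * rng.integers(0, 4, n_sc))  # unit-modulus QPSK-style pilots
    N = np.sqrt(noise_var / 2) * (rng.standard_normal(n_sc) + 1j * rng.standard_normal(n_sc))
    Y = H * X + N
    H_all.append(H)
    H_ls_all.append(ls_estimate(Y, X))
ls_nmse = nmse_db(np.array(H_ls_all), np.array(H_all))
print(ls_nmse)
```

With unit-power pilots the LS error is just the noise, so its NMSE sits near -SNR (about -10 dB here). A learned estimator has to beat this level, for example by exploiting the correlation between subcarriers.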

Beyond Transmission: System Optimization and Robustness

The system is trained with a three-stage strategy. First, the Swin Transformer-based semantic encoder is trained for feature extraction, enabling the model to capture fine-grained details in the data. Second, a dedicated channel estimation stage teaches the model to characterize the communication medium accurately and mitigate signal distortion. Finally, a system optimization phase refines the model’s parameters so that the extracted features and the estimated channel information work together seamlessly. This sequential approach (feature learning, then channel awareness, then holistic optimization) lets the system process and reconstruct information accurately and efficiently.
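
A Swin Transformer computes self-attention inside non-overlapping local windows. In alternating blocks, it shifts the window grid by half a window, implemented as a cyclic shift, so that information can flow between neighboring windows. The NumPy sketch below shows only this window partitioning on a toy 8x8 feature map. It omits the attention itself, relative position bias, patch merging, and the mask Swin applies to shifted windows, and the sizes are illustrative.

```python
import numpy as np

def window_partition(x, ws):
    """Split an (H, W, C) feature map into non-overlapping ws x ws windows -> (num_windows, ws*ws, C)."""
    H, W, C = x.shape
    assert H % ws == 0 and W % ws == 0, "H and W must be multiples of the window size"
    x = x.reshape(H // ws, ws, W // ws, ws, C)
    x = x.transpose(0, 2, 1, 3, 4)              # (nH, nW, ws, ws, C)
    return x.reshape(-1, ws * ws, C)            # tokens grouped per window

def shifted_window_partition(x, ws):
    """Shifted windows: cyclically roll the map by ws // 2 along H and W, then partition."""
    shifted = np.roll(x, shift=(-(ws // 2), -(ws // 2)), axis=(0, 1))
    return window_partition(shifted, ws)

feat = np.arange(8 * 8, dtype=float).reshape(8, 8, 1)   # toy 8x8 map with one channel
w = window_partition(feat, ws=4)                # self-attention would run within each window
sw = shifted_window_partition(feat, ws=4)       # next block: windows straddle the old borders
print(w.shape, sw.shape)
```

Restricting attention to windows keeps its cost linear in image size, which suits high-resolution images; the shifted windows restore the cross-window connections that plain partitioning would cut.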

Evaluation of the system centers on established image quality metrics: Peak Signal-to-Noise Ratio (PSNR) and Multi-Scale Structural Similarity Index Measure (MS-SSIM). These metrics objectively quantify the fidelity of reconstructed images relative to the original data. PSNR measures pixel-level error, while MS-SSIM compares local structure across several image scales. The reported results show consistently higher PSNR and MS-SSIM than existing baseline methods, indicating better detail preservation and structural similarity. This supports the effectiveness of the proposed architecture and training strategy for robust, high-fidelity recovery, even under challenging channel conditions.
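
PSNR is simple enough to compute directly: PSNR = 10 * log10(MAX^2 / MSE), where MAX is the largest possible pixel value (255 for 8-bit images). The sketch below assumes 8-bit images. MS-SSIM is considerably more involved and is left out.

```python
import numpy as np

def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = reference.astype(np.float64)
    rec = reconstructed.astype(np.float64)
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        return float("inf")                 # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

img = np.full((32, 32, 3), 100, dtype=np.uint8)   # toy 8-bit RGB image
rec = img.copy()
rec[:, :, 0] += 3                           # perturb one colour channel by 3 levels -> MSE = 3
print(psnr(img, rec))
```

Because PSNR depends only on the mean squared error, two reconstructions with very different visual artifacts can score the same. This is why structure-aware metrics such as MS-SSIM are reported alongside it.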

The system’s ability to adapt to varying signal-to-noise ratios (SNR) is key to maintaining both compression efficiency and reliable data transmission. By tracking channel conditions, the system adjusts communication parameters, such as modulation schemes and coding rates, to maximize throughput while keeping transmission errors low. This keeps performance robust in the presence of noise or interference and also permits high compression rates, because resources are allocated where they are needed. The result is a better balance between bandwidth utilization and data fidelity, enabling high-quality reconstruction even under poor channel conditions.
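
As a generic illustration of SNR-driven parameter selection, the sketch below picks the densest modulation that fits under a gap-adjusted Shannon bound, log2(1 + SNR / Gamma). The modulation set and the 6 dB gap are assumptions chosen for illustration. This is textbook link adaptation, not the mechanism used in VQ-DSC-R.

```python
import math

# Hypothetical modulation options and their bits per symbol.
MODULATIONS = {"BPSK": 1, "QPSK": 2, "16-QAM": 4, "64-QAM": 6}

def select_modulation(snr_db, gap_db=6.0):
    """Pick the densest modulation whose bits/symbol fit under log2(1 + SNR / Gamma).

    gap_db (Gamma) is an illustrative margin, not a value from the paper.
    """
    snr = 10 ** (snr_db / 10)
    gamma = 10 ** (gap_db / 10)
    budget = math.log2(1 + snr / gamma)          # achievable bits per symbol
    feasible = [(bits, name) for name, bits in MODULATIONS.items() if bits <= budget]
    if not feasible:
        return "BPSK"                            # fall back to the most robust option
    return max(feasible)[1]

for snr_db in (0, 15, 20, 30):
    print(snr_db, select_modulation(snr_db))
```

Lower SNR leads to sparser constellations that tolerate more noise, while higher SNR unlocks more bits per symbol. A real system would also adjust the channel code rate and use measured BER targets rather than a fixed gap.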

The pursuit of efficient image transmission, as demonstrated in this VQ-DSC-R system, inherently necessitates challenging established boundaries. The integration of a Swin Transformer-based semantic encoder and conditional diffusion models isn’t about adhering to conventional methods; it’s about dissecting them to reveal vulnerabilities and opportunities for improvement. As Vinton Cerf aptly stated, “The Internet is a tool for connection, but it’s also a tool for disruption.” This system embodies that disruption, questioning the limits of bandwidth and reliability through innovative channel estimation and adaptive vector quantization. The entire framework operates on the principle of controlled deconstruction: breaking down the communication process to rebuild it stronger and more resilient.

Beyond the Signal: Future Directions

The presented system, while demonstrating a functional integration of semantic encoding and robust transmission, merely scratches the surface of a fundamental question: how much of the ‘image’ truly needs to be transmitted? The reliance on vector quantization, however adaptive, implies an acceptance of discrete representation – a concession to the limitations of current hardware. Future iterations should aggressively explore alternatives – perhaps lossy compression schemes informed by perceptual models, pushing the boundaries of acceptable distortion to maximize bandwidth efficiency. The true test isn’t achieving perfect reconstruction, but delivering an experience indistinguishable from it.

Moreover, the conditional diffusion model employed for channel estimation, while effective, represents a rather pragmatic solution. The system treats channel imperfections as a nuisance to be corrected. A more radical approach would be to incorporate channel distortion into the semantic encoding itself – effectively transmitting information through the noise. This requires a re-evaluation of signal integrity – is a ‘clean’ signal actually more informative than a deliberately corrupted one? The answer, predictably, will lie in the receiver’s capacity to decipher the intended message from the chaos.

Ultimately, this work highlights a subtle but critical point: communication isn’t about faithfully replicating data; it’s about reliably transferring meaning. The pursuit of ever-more-complex transmission schemes is a distraction if the underlying semantic representation remains impoverished. The next frontier isn’t better signals, but a deeper understanding of what constitutes ‘information’ in the first place.

Original article: https://arxiv.org/pdf/2602.15045.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 11:39