Author: Denis Avetisyan

A new study reveals that Mamba-based medical image analysis, despite its impressive performance, is surprisingly susceptible to both malicious attacks and real-world hardware failures.

Researchers demonstrate vulnerabilities to adversarial perturbations and bit-flip faults, raising concerns about the reliability of State Space Models in critical healthcare applications.

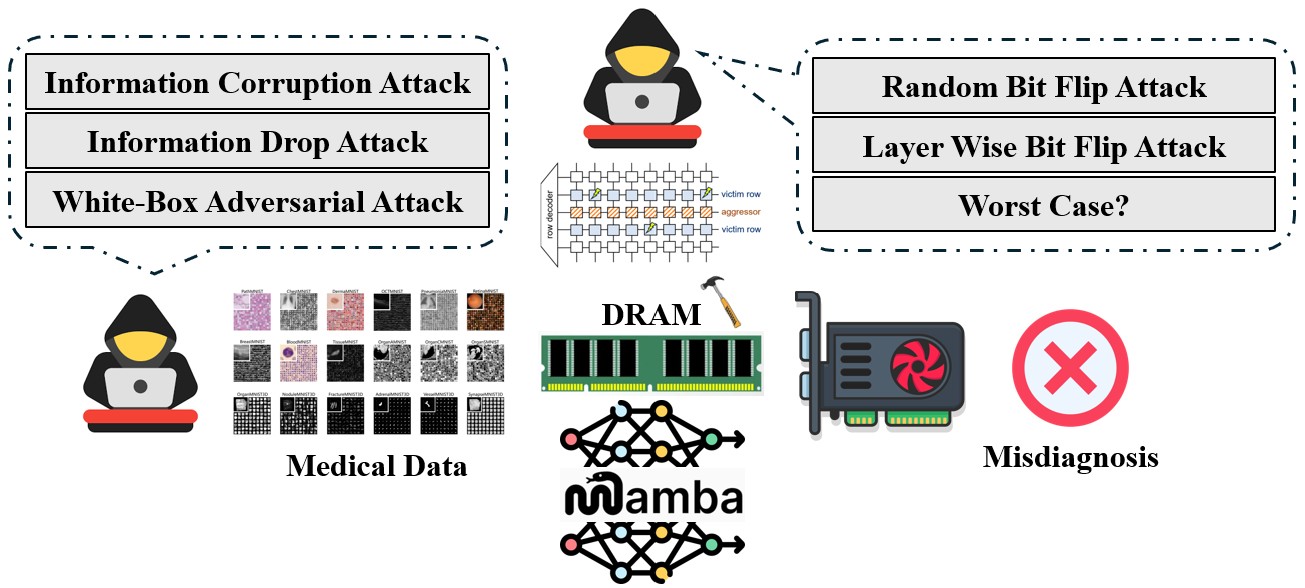

Despite recent advances in efficient deep learning architectures, the reliability of state-space models, and of Mamba in particular, remains largely unexplored under realistic threat conditions. This work, ‘Is Mamba Reliable for Medical Imaging?’, systematically evaluates Mamba’s vulnerability to both input-level attacks (adversarial perturbations and common corruptions) and hardware-inspired bit-flip faults emulated in software. Our findings demonstrate that while Mamba achieves high accuracy on clean medical imaging data, its performance degrades under these attacks, raising concerns about its deployment in safety-critical applications. Will novel defense strategies be required to ensure the robustness and trustworthiness of Mamba-based medical AI systems?

The Illusion of Precision: Beyond Current Limits in Medical Imaging

Despite demonstrated success in medical image analysis, both convolutional neural networks (CNNs) and transformers face inherent limitations when dealing with the intricacies of biomedical data. CNNs, while adept at identifying local patterns, struggle to capture the long-range dependencies needed to understand contextual relationships within a scan, a significant drawback when analyzing complex anatomical structures or subtle disease indicators. Transformers can model these long-range interactions, but the cost of self-attention scales quadratically with input sequence length, a considerable impediment for high-resolution 3D medical images and real-time clinical applications. This computational burden limits their deployment and slows the processing of the large datasets characteristic of modern medical imaging, creating a need for more scalable architectures.
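
To make the scaling argument concrete, the sketch below counts operations for two hypothetical inputs: a 224×224 2D image split into 16×16 patches and a 128×128×128 volume split into 8×8×8 patches. Both shapes and patch sizes are illustrative assumptions, not settings from the study, and the counts ignore constant factors such as hidden width.

```python
from math import prod

def token_count(shape, patch):
    """Number of non-overlapping patches (tokens) for an image or volume."""
    return prod(dim // p for dim, p in zip(shape, patch))

def attention_pairs(n_tokens):
    """Self-attention scores every token against every other token: O(N^2)."""
    return n_tokens * n_tokens

def scan_steps(n_tokens):
    """A recurrent state-space scan visits each token once: O(N)."""
    return n_tokens

# Illustrative inputs (assumed, not taken from the study).
examples = {
    "2D image 224x224, 16x16 patches": ((224, 224), (16, 16)),
    "3D volume 128^3, 8x8x8 patches": ((128, 128, 128), (8, 8, 8)),
}
for name, (shape, patch) in examples.items():
    n = token_count(shape, patch)
    print(f"{name}: {n} tokens, {attention_pairs(n):,} attention scores, {scan_steps(n):,} scan steps")
```

Going from 196 to 4,096 tokens (about 21× more) multiplies the number of attention scores by roughly 437×, while the scan grows only about 21×.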

The successful integration of artificial intelligence into clinical practice hinges on architectures that handle medical data with both precision and speed. Medical images, such as MRI or CT scans, are high-dimensional and often contain subtle, long-range correlations that matter for accurate diagnosis. Current deep learning models achieve impressive results, but capturing these correlations typically requires substantial computation, which limits their real-world applicability. A practical AI solution for healthcare therefore needs models that are both accurate and efficient enough to enable timely interventions and broader accessibility, a challenge that is driving research toward more streamlined network designs.

State Space Models (SSMs) represent a departure from conventional deep learning architectures for medical imaging, offering a way around limitations of both convolutional neural networks and transformers. Where these methods grapple with computational demands on long sequences, such as lengthy time-series data from cardiac monitoring or full 3D scans, SSMs model sequential data by compressing the history into a fixed-size hidden state that is updated step by step. This gives linear computational complexity: processing time grows proportionally with input length, rather than quadratically as with self-attention. The property is particularly valuable for high-resolution medical images and long temporal recordings, and it makes more practical, scalable diagnostic tools possible. By capturing long-range dependencies at lower cost, SSMs have the potential to reduce computational burdens substantially while maintaining, or even improving, diagnostic accuracy in complex medical imaging applications.
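
The hidden-state recurrence can be sketched in a few lines of NumPy. This is a minimal sketch assuming a single input channel, a diagonal state matrix, and zero-order-hold (ZOH) discretization, as used in S4- and Mamba-style models; the state size, step size `delta`, and random parameters are illustrative choices, not the study's configuration.

```python
import numpy as np

def discretize_zoh(A_diag, B, delta):
    """Zero-order-hold discretization of a diagonal continuous-time SSM."""
    A_bar = np.exp(delta * A_diag)          # state decay per step
    B_bar = (A_bar - 1.0) / A_diag * B      # exact ZOH input matrix for diagonal A
    return A_bar, B_bar

def ssm_scan(x, A_diag, B, C, delta):
    """h_t = A_bar * h_{t-1} + B_bar * x_t and y_t = C . h_t over a 1-D sequence."""
    A_bar, B_bar = discretize_zoh(A_diag, B, delta)
    h = np.zeros_like(A_diag)               # fixed-size hidden state
    y = np.empty(len(x))
    for t, x_t in enumerate(x):             # one pass: cost grows linearly with len(x)
        h = A_bar * h + B_bar * x_t
        y[t] = C @ h
    return y

rng = np.random.default_rng(0)
N = 4                                       # hidden state size (illustrative)
A_diag = -np.arange(1.0, N + 1)             # negative entries keep the recurrence stable
B = np.ones(N)
C = rng.standard_normal(N)
x = rng.standard_normal(1000)               # e.g. one flattened row of pixel features
y = ssm_scan(x, A_diag, B, C, delta=0.1)
print(y.shape)  # (1000,)
```

Because the loop touches each element once and carries only an `N`-dimensional state, doubling the sequence length doubles the work.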

Mamba: A Shift in the System’s Logic

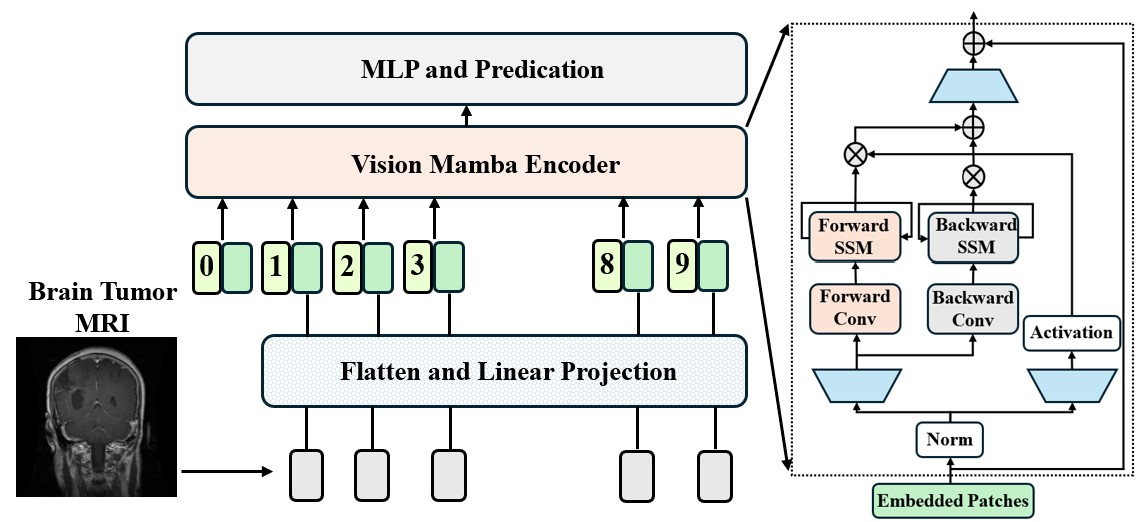

The Mamba architecture departs from traditional transformer models by building on State Space Models (SSMs), which improves computational efficiency. Transformers, while powerful, exhibit quadratic complexity in input sequence length, hindering their application to large, high-resolution medical images. Mamba keeps the linear-time recurrent scan of SSMs and adds a selection mechanism: the SSM parameters (the step size and the input and output projections) are computed from each input token, so the model can decide what to retain in its hidden state and what to discard. Consequently, Mamba can process much longer sequences, such as large images or volumes flattened into tokens, with lower memory requirements and faster processing than comparable transformer-based approaches.
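
A minimal sketch of the selection mechanism, assuming a simplified form of the original Mamba formulation: the step size Δ_t and the matrices B_t and C_t are computed from each token by linear projections (`W_delta`, `W_B`, `W_C` are hypothetical random weights here), A is discretized with ZOH and B with a simple Euler step. The convolution, gating branch, and skip connection of a full Mamba block are omitted, and this is not the code used in the study.

```python
import numpy as np

def softplus(z):
    return np.logaddexp(0.0, z)             # log(1 + e^z), numerically stable

def selective_scan(x, A, W_delta, b_delta, W_B, W_C):
    """Simplified selective SSM scan.

    x: (L, D) token sequence; A: (D, N) negative state matrix (diagonal per channel);
    W_delta: (D, D), b_delta: (D,), W_B and W_C: (D, N) hypothetical projection weights.
    """
    L, D = x.shape
    h = np.zeros_like(A)                     # (D, N) hidden state
    y = np.empty((L, D))
    for t in range(L):
        x_t = x[t]
        delta = softplus(x_t @ W_delta + b_delta)   # (D,) step size chosen by the input
        B_t = x_t @ W_B                             # (N,) input projection chosen by the input
        C_t = x_t @ W_C                             # (N,) output projection chosen by the input
        A_bar = np.exp(delta[:, None] * A)          # ZOH for A
        B_bar = delta[:, None] * B_t[None, :]       # Euler step for B
        h = A_bar * h + B_bar * x_t[:, None]
        y[t] = h @ C_t
    return y

rng = np.random.default_rng(0)
L, D, N = 64, 3, 8                           # sequence length, channels, state size (illustrative)
x = rng.standard_normal((L, D))
A = -np.tile(np.arange(1.0, N + 1), (D, 1))
W_delta = 0.1 * rng.standard_normal((D, D))
b_delta = np.zeros(D)
W_B = rng.standard_normal((D, N))
W_C = rng.standard_normal((D, N))
y = selective_scan(x, A, W_delta, b_delta, W_B, W_C)
print(y.shape)  # (64, 3)
```

The selection effect is visible in the update: when `delta` is near zero for a token, `A_bar` is close to 1 and `B_bar` close to 0, so the state passes through almost unchanged and the token is effectively ignored; a large `delta` decays the old state and lets the current token dominate it.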

Recent implementations demonstrate the adaptability of the Mamba architecture to various medical imaging tasks. MedMamba applies Vision Mamba to medical image classification, FDVM-Net uses a frequency-domain Vision Mamba design for endoscopic exposure correction, H-Vmunet builds a high-order Vision Mamba U-Net for medical image segmentation, and SegMamba targets 3D medical image segmentation. Collectively, these models illustrate Mamba’s potential across classification, image restoration, and 2D and 3D anatomical delineation, signifying broad applicability within the field.

Evaluations of Mamba-based architectures on the BloodMNIST dataset report a clean accuracy of 97.63%. This figure, however, masks a weakness: the same models degrade when exposed to even minor alterations of their inputs. Their robustness and generalization therefore need further study, particularly for real-world medical data, which frequently contains noise and artifacts.

Stress Testing the System: Uncovering Hidden Weaknesses

Evaluation with the Med-Mamba-Adv framework showed that Mamba is susceptible to standard adversarial perturbations. Adversarial examples were generated with the Fast Gradient Sign Method (FGSM), which takes a single step along the sign of the loss gradient with respect to the input, and with Projected Gradient Descent (PGD), which repeats such steps while projecting back into a small perturbation budget. Both attacks manipulate inputs to induce incorrect predictions, indicating a lack of inherent robustness against intentionally crafted noise. The framework provides a standardized methodology for assessing and quantifying these vulnerabilities, enabling comparative analysis and the development of mitigation strategies.
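
The two attacks can be illustrated on a toy linear softmax classifier, whose input gradient has a closed form, so no deep learning framework is needed. This is a sketch, not the Med-Mamba-Adv implementation; against a real Mamba model the gradient would come from automatic differentiation. The classifier, the `eps` budget, the step size `alpha`, and the [0, 1] pixel range are illustrative assumptions.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def input_grad(x, y, W, b):
    """Gradient of the cross-entropy loss w.r.t. the inputs of a linear softmax model."""
    p = softmax(x @ W + b)
    p[np.arange(len(y)), y] -= 1.0           # dL/dlogits = softmax - one_hot(y)
    return p @ W.T

def fgsm(x, y, W, b, eps):
    """One step of size eps along the sign of the input gradient (L-infinity budget)."""
    return np.clip(x + eps * np.sign(input_grad(x, y, W, b)), 0.0, 1.0)

def pgd(x, y, W, b, eps, alpha, steps):
    """Repeated signed-gradient steps, projected back onto the eps-ball around x.

    No random start is used here, a simplification of common PGD setups.
    """
    x_adv = x.copy()
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(input_grad(x_adv, y, W, b))
        x_adv = np.clip(x_adv, x - eps, x + eps)   # projection onto the L-inf ball
        x_adv = np.clip(x_adv, 0.0, 1.0)           # keep a valid pixel range
    return x_adv

rng = np.random.default_rng(0)
n, d, k = 200, 64, 8                          # samples, input features, classes (toy sizes)
W = rng.standard_normal((d, k))
b = np.zeros(k)
x = rng.uniform(0.0, 1.0, size=(n, d))
y = (x @ W + b).argmax(axis=1)                # labels = clean predictions, so clean accuracy is 100%

def accuracy(inputs):
    return np.mean((inputs @ W + b).argmax(axis=1) == y)

print("clean:", accuracy(x))
print("FGSM: ", accuracy(fgsm(x, y, W, b, eps=0.05)))
print("PGD:  ", accuracy(pgd(x, y, W, b, eps=0.05, alpha=0.01, steps=10)))
```

FGSM spends the whole budget in one step; PGD spends it in smaller steps and re-evaluates the gradient each time, which usually makes it the stronger of the two.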

Evaluations with simulated real-world acquisition artifacts also revealed performance degradation in Mamba models. Gaussian noise, defocus blur, and PatchDrop corruptions each decreased accuracy, indicating sensitivity to common data imperfections. So although Mamba performs strongly on clean data, it needs further development to withstand the corruptions frequently encountered in practical imaging. The observed drops suggest that data augmentation or adversarial training should be part of any mitigation strategy.
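
Hedged NumPy versions of the three corruptions are sketched below for an image stored as a float array of shape (H, W, C) in [0, 1]. The severity parameters are assumptions, and PatchDrop is interpreted here as zeroing a random subset of non-overlapping square patches; the study's exact corruption definitions may differ.

```python
import numpy as np

def gaussian_noise(img, sigma, rng):
    """Additive zero-mean Gaussian noise, clipped back to [0, 1]."""
    return np.clip(img + rng.normal(0.0, sigma, img.shape), 0.0, 1.0)

def disk_kernel(radius):
    """Normalized disk (pillbox) kernel, a common model of defocus blur."""
    r = int(np.ceil(radius))
    yy, xx = np.mgrid[-r:r + 1, -r:r + 1]
    k = (xx ** 2 + yy ** 2 <= radius ** 2).astype(float)
    return k / k.sum()

def defocus_blur(img, radius):
    """Convolve every channel with a disk kernel, using edge padding at the borders."""
    k = disk_kernel(radius)
    r = k.shape[0] // 2
    padded = np.pad(img, ((r, r), (r, r), (0, 0)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    H, W = img.shape[:2]
    for dy in range(k.shape[0]):
        for dx in range(k.shape[1]):
            if k[dy, dx] > 0:
                out += k[dy, dx] * padded[dy:dy + H, dx:dx + W]
    return out

def patch_drop(img, patch, drop_frac, rng):
    """Zero a random fraction of non-overlapping patch x patch tiles (assumed PatchDrop variant)."""
    out = img.copy()
    H, W = img.shape[:2]
    tiles = [(i, j) for i in range(0, H, patch) for j in range(0, W, patch)]
    n_drop = int(round(drop_frac * len(tiles)))
    for t in rng.choice(len(tiles), size=n_drop, replace=False):
        i, j = tiles[t]
        out[i:i + patch, j:j + patch] = 0.0
    return out

rng = np.random.default_rng(0)
img = rng.uniform(0.0, 1.0, size=(28, 28, 3))       # stand-in for a small RGB image
noisy = gaussian_noise(img, sigma=0.1, rng=rng)      # severity values are illustrative
blurred = defocus_blur(img, radius=1.5)
dropped = patch_drop(img, patch=7, drop_frac=0.25, rng=rng)
print(noisy.shape, blurred.shape, dropped.shape)
```

The same functions can double as augmentations during training, which is one way to act on the mitigation suggested above.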

Evaluation with the Med-Mamba-Hammer framework revealed a significant vulnerability to flips in the exponent bits of the model’s floating-point representation. From a baseline accuracy of 97.63% on BloodMNIST, flipping a single exponent bit (K=1) reduced accuracy to 9.09%. Flipping K=8 bits reduced it further to 4.65%, and with K=16 flips accuracy fell to only 0.67%. These results demonstrate substantial sensitivity to hardware-level perturbations and highlight a critical security concern for deployment in resource-constrained or adversarial environments.
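
The fragility of exponent bits follows from the IEEE 754 float32 layout (1 sign bit, 8 exponent bits at positions 23 to 30, 23 mantissa bits). Flipping the most significant exponent bit of a normal value with magnitude below 2 scales it by 2^128, or produces infinity or NaN when the magnitude lies between 1 and 2. The sketch emulates such faults in software by reinterpreting float32 arrays as unsigned integers; it assumes the faults land in float32 weights and is not the Med-Mamba-Hammer tool.

```python
import numpy as np

def flip_bit(value, bit):
    """Return `value` as float32 with one bit of its IEEE 754 encoding inverted."""
    raw = np.array([value], dtype=np.float32).view(np.uint32)
    raw ^= np.uint32(1 << bit)
    return raw.view(np.float32)[0]

def flip_random_exponent_bits(weights, k, rng):
    """Copy of a float32 tensor with k exponent-bit flips at distinct random positions.

    Assumption for illustration: faults hit float32 weights, uniformly over exponent bits.
    """
    flat = np.array(weights, dtype=np.float32).ravel()    # np.array copies by default
    raw = flat.view(np.uint32)                             # same memory, integer view
    idx = rng.choice(flat.size, size=k, replace=False)     # which weights are hit
    bits = rng.integers(23, 31, size=k).astype(np.uint32)  # exponent bits 23..30
    raw[idx] ^= np.uint32(1) << bits
    return flat.reshape(np.shape(weights))

print(flip_bit(0.5, 30))   # 0.5 scaled by 2**128, i.e. 2**127
print(flip_bit(1.0, 30))   # exponent field becomes all ones: inf

rng = np.random.default_rng(0)
w = rng.standard_normal((16, 16)).astype(np.float32)
w_faulty = flip_random_exponent_bits(w, k=8, rng=rng)
print(np.count_nonzero(w_faulty != w), np.abs(w_faulty).max())
```

A single weight of order 10^38 saturates every activation it feeds, which makes it plausible that one fault can collapse accuracy; the sketch illustrates the mechanism only and does not reproduce the reported experiment.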

Taken together, these results show that Med-Mamba’s 97.63% clean accuracy on BloodMNIST is fragile. Adversarial attacks and simulated data corruptions each cause substantial drops from this baseline, so the model needs improved robustness before it can be relied on in practical applications where data quality varies.

The Inevitable Cascade: Implications for a Resilient Future

The development of artificial intelligence for medical diagnosis demands a fundamental shift in evaluation practices. Current pipelines often prioritize accuracy on pristine datasets, overlooking the critical need to assess performance under realistic, compromised conditions. Recent studies demonstrate that even state-of-the-art models are surprisingly susceptible to subtle perturbations, including minor data corruptions and hardware-level attacks. Therefore, incorporating rigorous robustness evaluations – testing against noisy, adversarial, and intentionally altered inputs – is no longer optional but essential. Such evaluations should become a standard component of medical AI development, mirroring the stringent validation processes required for any clinical tool, to ensure patient safety and build trust in these increasingly powerful diagnostic technologies.
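
One lightweight way to make robustness a standard part of evaluation is to report accuracy on clean inputs next to accuracy under each perturbation. The helper below is a hypothetical sketch: `predict` can be any function mapping a batch to labels, and the toy threshold classifier and perturbations in the usage are placeholders for a real model and a real corruption or attack suite.

```python
import numpy as np

def robustness_report(predict, x, y, perturbations):
    """Clean accuracy plus (accuracy, drop) under each named input perturbation."""
    clean = float(np.mean(predict(x) == y))
    report = {"clean": (clean, 0.0)}
    for name, perturb in perturbations.items():
        acc = float(np.mean(predict(perturb(x)) == y))
        report[name] = (acc, clean - acc)
    return report

# Placeholder model and data (illustrative only).
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(500, 16))

def predict(batch):
    return (batch.mean(axis=1) > 0.5).astype(int)

y = predict(x)                                    # clean accuracy is 100% by construction
perturbations = {
    "gaussian_noise": lambda b: np.clip(b + rng.normal(0.0, 0.3, b.shape), 0.0, 1.0),
    "inversion": lambda b: 1.0 - b,
}
report = robustness_report(predict, x, y, perturbations)
for name, (acc, drop) in report.items():
    print(f"{name:15s} accuracy={acc:.2%} drop={drop:.2%}")
```

Reporting the drop alongside the raw accuracy keeps a high clean score from hiding a fragile model.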

Building dependable artificial intelligence for medical diagnostics demands proactive countermeasures against these vulnerabilities. Targeted mitigation strategies such as adversarial training, in which a model is trained on deliberately perturbed inputs so that it learns to resist them, are likely to be essential. Equally vital is hardware-aware design, which acknowledges that even a single flipped bit can compromise model accuracy. Such faults exploit the physical implementation of a model rather than its algorithm, highlighting the need to consider the entire system. Integrating these defenses is not merely about high accuracy on standard benchmarks but about consistent, trustworthy performance in real clinical settings, where reliability is paramount and errors carry serious consequences.
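
Adversarial training can be sketched on a toy linear softmax model with a closed-form gradient instead of a deep learning framework. At each epoch the current model crafts FGSM examples, and the weights are updated on clean and adversarial inputs together. The model, data, `eps`, learning rate, and epoch count are illustrative assumptions, not a recipe validated for Mamba.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def grads(x, y, W, b):
    """Cross-entropy gradients w.r.t. inputs, weights and bias of a linear softmax model."""
    p = softmax(x @ W + b)
    p[np.arange(len(y)), y] -= 1.0            # dL/dlogits for each sample
    return p @ W.T, x.T @ p / len(y), p.mean(axis=0)

def fgsm(x, y, W, b, eps):
    gx, _, _ = grads(x, y, W, b)
    return np.clip(x + eps * np.sign(gx), 0.0, 1.0)

def adversarial_train(x, y, n_classes, eps, lr=0.5, epochs=200, seed=0):
    rng = np.random.default_rng(seed)
    W = 0.01 * rng.standard_normal((x.shape[1], n_classes))
    b = np.zeros(n_classes)
    for _ in range(epochs):
        x_adv = fgsm(x, y, W, b, eps)          # attack the current model
        x_mix = np.concatenate([x, x_adv])     # train on clean + adversarial inputs
        y_mix = np.concatenate([y, y])
        _, gW, gb = grads(x_mix, y_mix, W, b)
        W -= lr * gW
        b -= lr * gb
    return W, b

# Toy two-class data in [0, 1]^4 (illustrative only).
rng = np.random.default_rng(1)
n = 200
y = rng.integers(0, 2, size=n)
centers = np.where(y[:, None] == 1, [0.75, 0.75, 0.25, 0.25], [0.25, 0.25, 0.75, 0.75])
x = np.clip(centers + 0.05 * rng.standard_normal((n, 4)), 0.0, 1.0)

W, b = adversarial_train(x, y, n_classes=2, eps=0.1)

def accuracy(inputs):
    return np.mean((inputs @ W + b).argmax(axis=1) == y)

print("clean:", accuracy(x), "FGSM eps=0.1:", accuracy(fgsm(x, y, W, b, 0.1)))
```

Generating the attacks against the current weights at every epoch is the key design choice: attacks crafted once against a fixed early model quickly stop being informative.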

Continued innovation in medical artificial intelligence necessitates a proactive approach to system resilience, with future studies concentrating on the development of Mamba-based architectures designed for inherent robustness. Current models, while showing promise in complex image analysis, remain susceptible to both manipulated data – adversarial attacks – and physical disruptions, such as single bit-flip errors in hardware. Consequently, research efforts should extend beyond simply mitigating existing vulnerabilities to exploring fundamentally new defense mechanisms. This includes investigating novel architectural designs that minimize sensitivity to input perturbations and hardware imperfections, as well as creating adaptive systems capable of detecting and correcting errors in real-time. Such advancements are critical for ensuring the reliable and safe deployment of AI-powered diagnostic tools in clinical settings, ultimately building trust and maximizing patient benefit.
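
One simple form of real-time detection and correction for parameter faults is sketched below: store a CRC32 checksum and a trusted copy per weight tensor, periodically recompute the checksum, and restore any tensor that changed. A cheaper magnitude check complements it, since exponent flips in small weights tend to produce huge values. `GuardedWeights` and `out_of_range` are hypothetical helpers for illustration, not part of the study or of any library.

```python
import zlib
import numpy as np

class GuardedWeights:
    """Hypothetical helper: per-tensor CRC32 checks plus a trusted copy for repair."""

    def __init__(self, tensors):
        self.live = {name: np.array(t, dtype=np.float32) for name, t in tensors.items()}
        self._golden = {name: t.copy() for name, t in self.live.items()}
        self._crc = {name: zlib.crc32(t.tobytes()) for name, t in self.live.items()}

    def check_and_repair(self):
        """Return names of tensors whose checksum changed, after restoring them."""
        repaired = []
        for name, t in self.live.items():
            if zlib.crc32(t.tobytes()) != self._crc[name]:
                t[...] = self._golden[name]      # in-place restore from the trusted copy
                repaired.append(name)
        return repaired

def out_of_range(t, bound):
    """Flat indices of values that are NaN or exceed `bound` in magnitude."""
    return np.flatnonzero(~(np.abs(t) <= bound))

rng = np.random.default_rng(0)
proj = rng.standard_normal((8, 8))
proj[3, 5] = 0.5                                  # known value so the fault effect is predictable
guard = GuardedWeights({"proj": proj})

w = guard.live["proj"]
w.view(np.uint32)[3, 5] ^= np.uint32(1 << 30)     # emulate an exponent bit flip: 0.5 -> 2**127
flagged = out_of_range(w, bound=10.0)             # cheap magnitude check
repaired = guard.check_and_repair()               # checksum check + restore
print(flagged, repaired)
```

A trusted copy held in the same memory is itself exposed to faults, so in practice it would live in protected storage such as ECC memory or a signed checkpoint.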

Recent advances in medical image analysis showcase the promise of models such as MambaMorph, MambaMIR, nnMamba, and Mamba-Fusion for intricate diagnostic challenges. Despite their capabilities, these architectures still require rigorous evaluation of their resilience. This study shows that Mamba models can be compromised both by subtle input manipulations and by single bit-flip faults in hardware, which underscores the need for continued research into defense mechanisms and inherently robust designs, so that these tools remain reliable in clinical applications where the integrity of data and hardware cannot be guaranteed.

The pursuit of architectural perfection in medical imaging, as illustrated by the exploration of Mamba models, often feels like building sandcastles against the tide. While Mamba shows promise on clean data, the study reveals vulnerabilities to adversarial attacks and hardware faults, a predictable erosion of initial gains. This echoes a timeless truth: every new architecture promises freedom until it demands DevOps sacrifices. As a remark attributed to John von Neumann puts it, “In the past, the problem was to figure out what to do with the computers we had. Now the problem is to figure out what we can do without computers.” Reliance on complex systems, even initially robust ones, introduces new failure modes, demanding constant vigilance and a pragmatic acceptance of inevitable entropy. The focus should not rest solely on immaculate performance but on cultivating resilience within the system itself.

What Lies Ahead?

The pursuit of accuracy, as demonstrated by these Mamba-based systems in medical imaging, often obscures a more fundamental truth: systems aren’t judged by their performance on curated datasets, but by their failures in the wild. The observed vulnerabilities to adversarial perturbations and, crucially, to the inevitable decay of hardware, aren’t bugs to be patched; they are prophecies of eventual compromise. Each layer added in the name of resilience merely shifts the point of failure, creating new, unforeseen dependencies.

The focus will likely turn towards ‘robustness certifications’, attempts to quantify invulnerability. But such metrics are, at best, snapshots in time. The landscape of attacks, and the modes of hardware failure, are constantly evolving. It would be more fruitful to acknowledge that complete security is an illusion and instead concentrate on graceful degradation: on systems that anticipate failure and minimize harm when it occurs.

Technologies change, dependencies remain. The architecture isn’t structure; it’s a compromise frozen in time. The true challenge isn’t building ever more complex models, but cultivating ecosystems that can adapt, evolve, and, ultimately, accept their own impermanence.

Original article: https://arxiv.org/pdf/2602.16723.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-22 09:53