Author: Denis Avetisyan

A new framework assesses the real-world resilience of post-quantum cryptography on constrained devices, moving beyond simple performance tests.

This paper introduces the Quantum Encryption Resilience Score (QERS), a multi-metric evaluation of post-quantum algorithms for computers, IoT, and IIoT systems, utilizing the ESP32 as a case study.

Post-quantum cryptography (PQC) is increasingly vital for securing future communications, yet evaluating it beyond isolated performance metrics remains a significant challenge, particularly on resource-constrained devices. This paper introduces QERS, the Quantum Encryption Resilience Score, a framework for assessing PQC readiness in computer, IoT, and IIoT environments by integrating cryptographic performance, system constraints, and multi-criteria decision analysis. QERS delivers interpretable resilience scores through normalized metrics, weighted aggregation, and machine learning, revealing trade-offs that traditional benchmarking misses. Will this holistic approach enable truly informed security design and facilitate a smooth migration to quantum-resistant systems?

Unlocking the Quantum Threat: A Systemic Vulnerability

Public-key cryptosystems such as RSA and Elliptic Curve Cryptography (ECC), the bedrock of modern digital security, face a critical and well-defined threat from quantum computing. These systems rely on the computational difficulty of certain mathematical problems (integer factorization for RSA and the elliptic-curve discrete logarithm problem for ECC) to safeguard data. However, Shor’s algorithm, a quantum algorithm published in 1994, solves both problems dramatically faster. The best known classical attacks take super-polynomial time as key lengths grow, whereas Shor’s algorithm can, in theory, solve these problems in polynomial time on a sufficiently powerful quantum computer. Communications and stored data protected by RSA and ECC would therefore become decryptable if large-scale, fault-tolerant quantum computers become a reality, creating an urgent need for cryptographic alternatives.

The accelerating development of quantum computing demands a proactive shift towards Post-Quantum Cryptography (PQC). The public-key methods relied upon for secure online transactions, data storage, and communications are secure only because the underlying problems are hard for classical computers. The vulnerability also extends beyond immediate threats: data encrypted and intercepted today could be decrypted years later, once quantum computers mature, so information with a long confidentiality lifetime is already at risk. PQC aims to develop cryptographic systems resistant to both classical and quantum attacks, ensuring continued confidentiality and integrity in a post-quantum world. The transition to these new standards isn’t merely a technological upgrade, but a critical imperative for safeguarding sensitive information and maintaining trust in digital systems.

Recognizing the impending threat to current encryption standards, the National Institute of Standards and Technology (NIST) initiated a multi-year, public evaluation process to identify and standardize a new generation of cryptographic algorithms resilient to quantum attacks. This effort, which led to the selection of CRYSTALS-Kyber, CRYSTALS-Dilithium, Falcon, and SPHINCS+ for standardization, isn’t merely an academic exercise; it represents a critical infrastructure upgrade. Standardization supports interoperability and widespread adoption of these new algorithms, giving organizations and individuals time to migrate away from vulnerable systems like RSA and ECC. NIST’s commitment signals that the transition to quantum-resistant cryptography is not a future concern, but an active and urgent undertaking essential for safeguarding digital information in the years to come.

Quantifying Resilience: The QERS Framework – A System’s Stress Test

The Quantum Encryption Resilience Score (QERS) functions as a quantitative assessment tool for communication systems employing Post-Quantum Cryptography (PQC). It moves beyond single-metric evaluations by integrating multiple performance indicators to provide a holistic view of system resilience. Crucially, QERS is designed to expose the trade-offs inherent in deploying PQC algorithms: a gain in one area, such as stronger security through larger key sizes, shows up in the score alongside its cost in other areas, such as increased latency or energy consumption. The framework is exercised under realistic deployment conditions, which allows comparative analysis of different PQC implementations and configurations and supports informed decisions about system optimization and security posture.

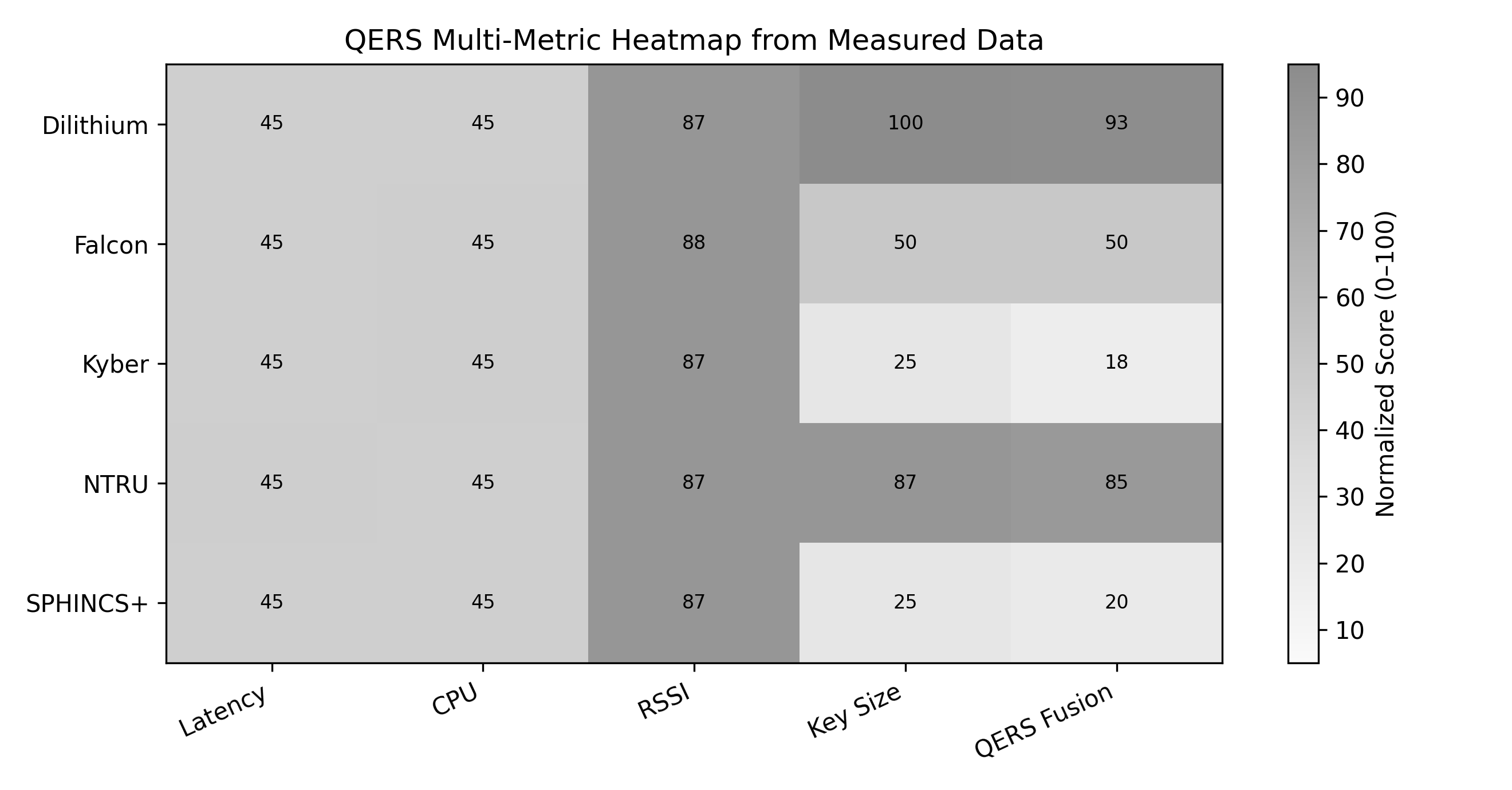

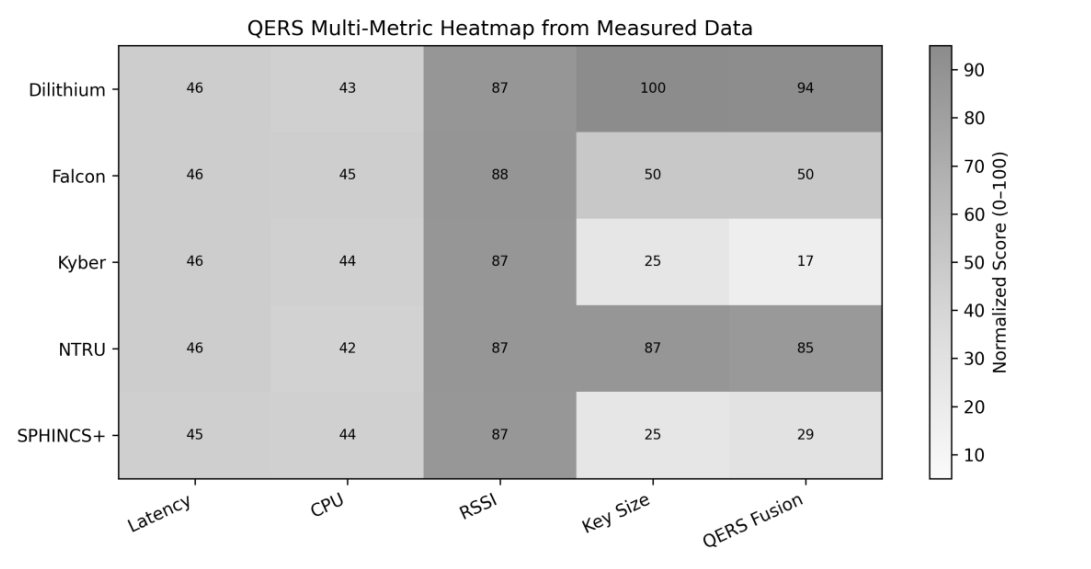

The Quantum Encryption Resilience Score (QERS) employs Multi-Criteria Decision Analysis (MCDA) to consolidate six key performance indicators – Latency, Packet Loss, CPU Load, Signal Strength, Energy Consumption, and Key Size – into a single, actionable metric. MCDA allows for the weighted aggregation of these diverse, often conflicting, parameters, reflecting the relative importance of each to overall system resilience. The resulting QERS value is then normalized to a 0-100 scale, facilitating direct comparison of resilience across different Post-Quantum Cryptography (PQC) algorithm implementations and varying deployment scenarios. This standardized score provides a quantifiable basis for evaluating and optimizing communication system performance under PQC workloads.
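The weighted aggregation described above can be sketched in a few lines of Python. This is an illustrative sketch only: the weight values, the metric key names, and the convention that every input is already normalized to the range 0 to 1 with 1 meaning "best" are assumptions, since the article does not publish the weights or the exact formula.

```python
# Hypothetical weights for the six indicators named in the text.
# The real values used by QERS are not given in the article.
EXAMPLE_WEIGHTS = {
    "latency": 0.25,
    "packet_loss": 0.15,
    "cpu_load": 0.20,
    "signal_strength": 0.10,
    "energy": 0.20,
    "key_size": 0.10,
}


def aggregate_score(normalized, weights):
    """Combine normalized metrics into a single 0-100 score.

    normalized: dict mapping metric name -> value in [0, 1],
                where 1 is assumed to be the most favourable value.
    weights:    dict mapping metric name -> non-negative weight.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    for name in weights:
        value = normalized[name]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} is not normalized: {value}")
    weighted_sum = sum(weights[name] * normalized[name] for name in weights)
    # Dividing by the total weight keeps the result in [0, 1]
    # even if the weights do not sum exactly to 1.
    return 100.0 * weighted_sum / total_weight


if __name__ == "__main__":
    # Made-up normalized readings, for illustration only.
    sample = {
        "latency": 0.8,
        "packet_loss": 0.9,
        "cpu_load": 0.6,
        "signal_strength": 0.7,
        "energy": 0.5,
        "key_size": 0.4,
    }
    print(round(aggregate_score(sample, EXAMPLE_WEIGHTS), 1))
```

Raising the weight of one indicator makes the score more sensitive to it, which is how a deployment that cares most about, say, energy consumption could express that priority.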

Min-Max Normalization is employed within the Quantum Encryption Resilience Score (QERS) framework to standardize disparate performance metrics prior to aggregation. This technique rescales each metric (Latency, Packet Loss, CPU Load, Signal Strength, Energy Consumption, and Key Size) to a common range of 0 to 1. The calculation subtracts the minimum observed value for a given metric from each data point, then divides by that metric's range (maximum observed value minus minimum observed value). This puts metrics measured in different units, or on vastly different scales, on a comparable footing before weighting, so that system performance can be compared meaningfully across Post-Quantum Cryptography (PQC) algorithms and deployment conditions. Without normalization, metrics with larger numerical values would disproportionately influence the final score, obscuring the relative performance of other important indicators.
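The calculation described above can be sketched as follows. The function name, the `higher_is_better` flag, and the handling of a zero range are assumptions made for illustration; the article does not say how QERS orients "lower is better" metrics such as latency or what it does when all observations are equal.

```python
def min_max_normalize(values, higher_is_better=True):
    """Rescale a list of observations to the range [0, 1].

    Each value v becomes (v - min) / (max - min). When
    higher_is_better is False the result is flipped so that the
    smallest raw value maps to 1 (an assumed convention).
    """
    lowest, highest = min(values), max(values)
    value_range = highest - lowest
    if value_range == 0:
        # All observations are identical, so the metric carries no
        # information; returning zeros is an arbitrary assumed choice.
        return [0.0 for _ in values]
    scaled = [(v - lowest) / value_range for v in values]
    if higher_is_better:
        return scaled
    return [1.0 - s for s in scaled]


if __name__ == "__main__":
    latencies_ms = [10.0, 20.0, 30.0]  # made-up sample readings
    print(min_max_normalize(latencies_ms))         # [0.0, 0.5, 1.0]
    print(min_max_normalize(latencies_ms, False))  # [1.0, 0.5, 0.0]
```

Because the minimum and maximum are taken from the observed data, the normalized values depend on which measurements are included in the comparison set.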

Practical Validation: Stress-Testing PQC in the IoT Landscape

The Quantum Encryption Resilience Score (QERS) framework utilizes ESP32 microcontrollers and Raspberry Pi single-board computers to evaluate Post-Quantum Cryptography (PQC) algorithm performance within representative Internet of Things (IoT) deployments. This setup allows for testing in constrained environments, mirroring the limited processing power, memory, and energy resources common in many IoT devices. Data gathered from these platforms provides performance metrics under realistic operating conditions, accounting for factors such as network connectivity and device load, which are often absent from isolated benchmarking exercises. The use of readily available, low-cost hardware makes the QERS testing framework more accessible and easier to reproduce.

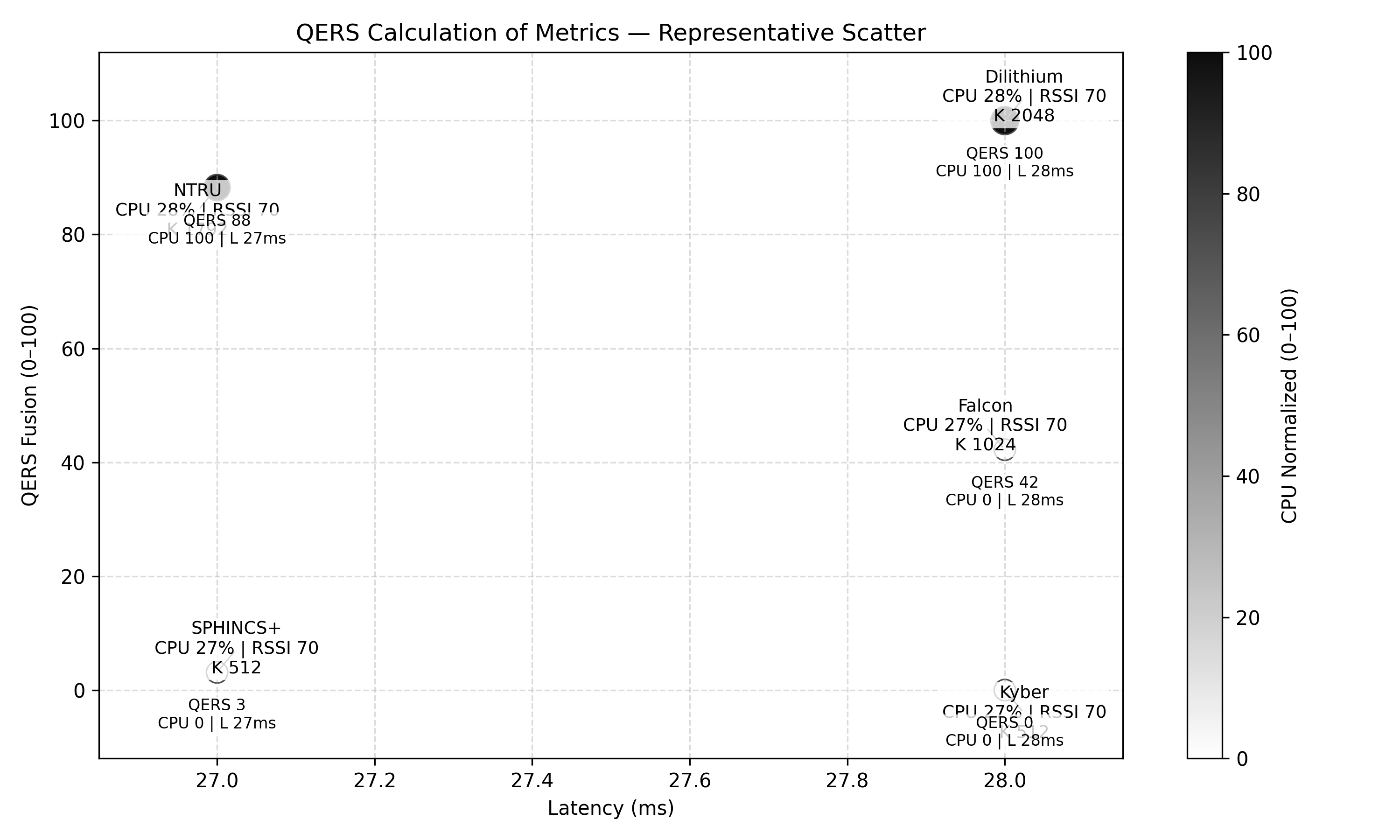

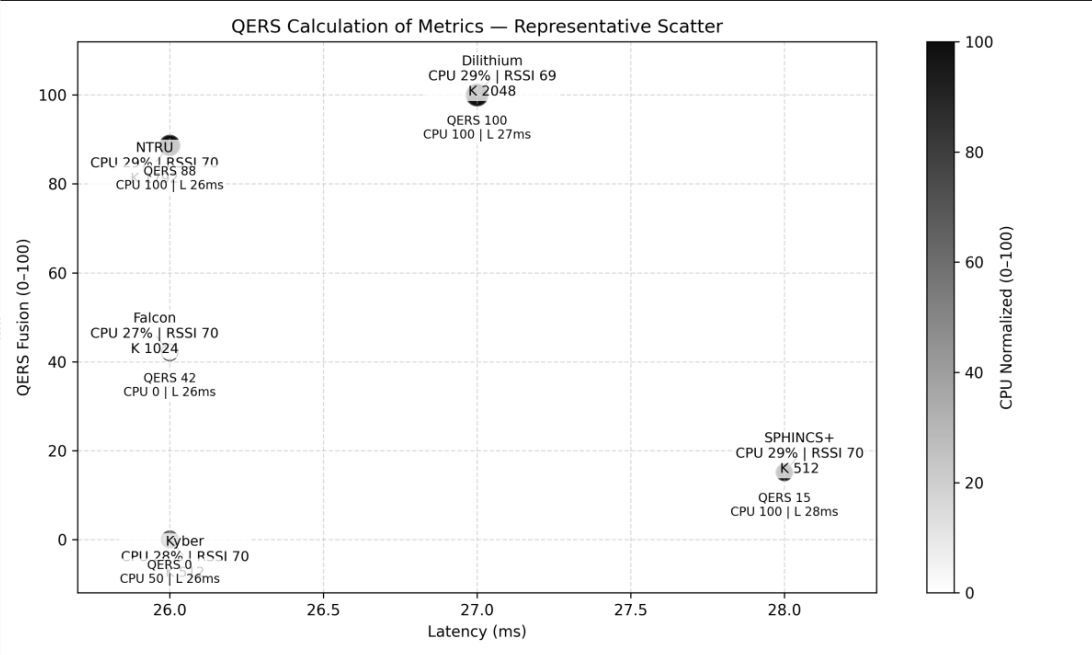

Benchmarking of post-quantum cryptography (PQC) algorithms, specifically Kyber, Dilithium, Falcon, SPHINCS+, and NTRU, demonstrates inherent trade-offs between security levels, computational performance, and resource utilization in constrained environments. Evaluations reveal that Dilithium and NTRU consistently achieve the highest QERS values, indicating a better balance across these metrics than Kyber, Falcon, and SPHINCS+. Performance differences are attributable to algorithm design; for example, key and signature sizes vary significantly, impacting bandwidth requirements, while differing computational complexities affect CPU load and latency. Resource consumption is measured in terms of the flash memory used to store cryptographic keys and the random access memory (RAM) needed during cryptographic operations such as signature generation and verification.

The QERS evaluation framework utilizes three distinct scoring modes (Basic, Tuned, and Fusion) to facilitate comprehensive performance analysis of Post-Quantum Cryptography (PQC) algorithms in IoT deployments. The Basic mode provides a simple, unweighted aggregate score. The Tuned mode allows customizable weighting of individual metrics (Latency, CPU Load, Received Signal Strength Indicator (RSSI), and Key Size) to prioritize the performance characteristics relevant to a specific environment. The Fusion mode dynamically adjusts metric weights based on observed environmental conditions, capturing interactions between these parameters. In this way QERS can reveal performance trade-offs, such as lower latency at the cost of increased CPU load, or a reduced key size impacting RSSI, that would remain obscured when metrics are evaluated in isolation through traditional benchmarking.
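To make the difference between the Basic and Tuned modes concrete, here is a small Python sketch. Everything in it is hypothetical: the function names, the priority values, and the two candidate profiles are invented for illustration and are not measurements or formulas from the paper. Inputs are assumed to be already normalized to 0 to 1 with 1 meaning "best". The Fusion mode is not sketched because the article does not describe the rule it uses to adjust weights.

```python
METRICS = ("latency", "cpu_load", "rssi", "key_size")


def basic_weights():
    """Basic mode (assumed): every metric gets the same weight."""
    return {m: 1.0 / len(METRICS) for m in METRICS}


def tuned_weights(priorities):
    """Tuned mode (assumed): user priorities rescaled to sum to 1."""
    total = sum(priorities[m] for m in METRICS)
    if total <= 0:
        raise ValueError("priorities must sum to a positive number")
    return {m: priorities[m] / total for m in METRICS}


def score(normalized, weights):
    """Weighted sum of normalized metrics on a 0-100 scale."""
    return 100.0 * sum(weights[m] * normalized[m] for m in METRICS)


# Two made-up candidates: A is fast but CPU-heavy, B is the reverse.
candidate_a = {"latency": 0.9, "cpu_load": 0.3, "rssi": 0.5, "key_size": 0.5}
candidate_b = {"latency": 0.4, "cpu_load": 0.9, "rssi": 0.5, "key_size": 0.6}

if __name__ == "__main__":
    basic = basic_weights()
    # Hypothetical latency-critical deployment: latency counts 5x.
    tuned = tuned_weights(
        {"latency": 5, "cpu_load": 1, "rssi": 1, "key_size": 1}
    )
    for name, cand in (("A", candidate_a), ("B", candidate_b)):
        print(name, round(score(cand, basic), 1), round(score(cand, tuned), 1))
```

In this toy example candidate B scores higher in Basic mode while candidate A scores higher once latency is prioritized, which is the kind of ranking change that weighting is meant to surface.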

Beyond Performance: Building a Comprehensive Quantum Risk Framework

The Quantum Assessment, Response, and Security (QARS) framework extends the foundational work of the Quantum Encryption Resilience Score (QERS) to deliver a holistic evaluation of an organization’s vulnerability to quantum computing threats. While QERS focuses on quantifying the resilience of PQC deployments at the device and network level, QARS broadens this scope to encompass an organization’s overall readiness for migrating to post-quantum cryptography (PQC). This expanded assessment considers not only the systems at risk, but also the resources, timelines, and strategic considerations necessary for a successful transition. By systematically evaluating both exposure and readiness, QARS gives organizations a tool for proactively addressing the challenges posed by quantum computers and for building a resilient cryptographic infrastructure.

The escalating connectivity of Industrial IoT (IIoT) networks introduces unique vulnerabilities that render a robust quantum risk assessment particularly crucial for these systems. Unlike traditional IT environments, IIoT devices often operate with limited processing power, memory, and energy resources, creating significant challenges for implementing computationally intensive post-quantum cryptographic (PQC) solutions. Moreover, the inherent need for high reliability and extended operational lifecycles in critical infrastructure, such as power grids, manufacturing plants, and transportation networks, demands proactive security measures against future quantum-based attacks. The potential disruption caused by compromised IIoT devices extends beyond data breaches, potentially impacting physical safety, environmental stability, and economic productivity, making a tailored quantum risk framework essential for safeguarding these increasingly interconnected systems.

QARS goes further than simple vulnerability identification by directly linking technical metrics, derived from QERS, to an organization’s broader risk appetite and operational context. This integration supports a nuanced evaluation of post-quantum cryptographic (PQC) options that weighs practical feasibility alongside theoretical security. Organizations can use QARS to prioritize PQC algorithm implementation based on their specific threat model and asset criticality, informing decisions about phased deployments and resource allocation. Ultimately, this framework supports proactive, long-term security planning, allowing businesses to anticipate evolving quantum threats and maintain data confidentiality, integrity, and availability throughout the transition to a post-quantum world.

The pursuit of robust security, as demonstrated by the QERS framework, inherently involves challenging established norms. This research doesn’t simply accept existing cryptographic benchmarks; it actively probes their limitations when applied to the realities of constrained devices. It’s a systematic dismantling of assumptions to reveal hidden performance trade-offs. As Linus Torvalds has observed, most good programmers program not because they expect to get paid, but because it is fun to program. That sentiment captures the spirit of this work: a dedication to understanding the fundamental mechanics of security, driven by intellectual curiosity rather than by adherence to predefined standards. The QERS approach suggests that true resilience isn’t found in blindly following rules, but in meticulously testing their boundaries.

Beyond the Score

The introduction of QERS does not, of course, solve the problem of securing constrained devices in a post-quantum world. It merely reframes the question. A score, however meticulously derived, is still an abstraction: a reduction of complex system behavior into a single number. The true value lies not in the score itself, but in the vulnerabilities it reveals – the points where the system, under pressure, confesses its inherent limitations. The framework highlights that performance isn’t a monolithic entity, but a constellation of trade-offs, forcing a reckoning with what functionality is truly essential, and what can be sacrificed at the altar of security.

Future iterations must address the dynamic nature of threats. QERS, as presented, assesses resilience against a current understanding of quantum attacks. But the adversarial landscape is not static. A truly robust system will require continuous, automated re-evaluation – a self-testing mechanism that probes for weaknesses as they emerge. This demands a shift from static benchmarking to adaptive, real-time analysis, effectively turning the device itself into its own penetration tester.

Ultimately, the most interesting question isn’t “How secure is this algorithm?” but “How does the system fail?” Every successful defense is merely a temporary reprieve. The inevitable breach is not a bug; it’s a feature – a confession of the system’s design, revealing the fault lines that will define the next generation of attacks and, consequently, the next iteration of resilience.

Original article: https://arxiv.org/pdf/2601.13399.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-21 23:35