Author: Denis Avetisyan

A new benchmark reveals that current methods for evaluating AI vulnerability are often misleading when applied to languages beyond English, particularly in the diverse linguistic landscape of South Asia.

IndicJR, a judge-free evaluation of jailbreak robustness across 12 Indic languages, demonstrates the impact of orthographic variations and the limitations of contract-bound safety assessments.

While safety evaluations of large language models predominantly focus on English, leaving multilingual vulnerabilities largely unaddressed, this work introduces IndicJR: A Judge-Free Benchmark of Jailbreak Robustness in South Asian Languages, a comprehensive assessment across 12 Indic languages and over 45,000 prompts. Our findings reveal that contract-bound safety measures inflate refusal rates without preventing jailbreaks, and that orthographic variations – such as romanization – significantly impact model robustness. This raises a critical question: can current LLM safety protocols adequately protect a growing population of South Asian users who frequently code-switch and utilize diverse writing systems?

The Vulnerability of Open Models: A Landscape of Linguistic Exploits

The rise of openly accessible large language models (LLMs) provides unprecedented opportunities for customization and innovation, yet this very openness introduces significant security challenges. These models are increasingly subjected to adversarial prompts – carefully crafted inputs designed to circumvent built-in safety mechanisms and elicit harmful or inappropriate responses. Unlike their closed-source counterparts, open-weight LLMs lack the same level of centralized control and monitoring, making them more susceptible to manipulation. Researchers have demonstrated that relatively simple prompt engineering techniques can consistently bypass safety protocols, prompting the models to generate biased, hateful, or even dangerous content. This vulnerability is not merely theoretical; the ease with which these attacks succeed highlights a critical need for robust defense mechanisms and continuous monitoring to ensure the responsible deployment of open-weight LLMs.

Large language models, while increasingly accessible due to open-weight designs, exhibit a pronounced vulnerability in languages beyond English, stemming from a significant imbalance in safety evaluations. Current benchmarks and red-teaming efforts overwhelmingly focus on English-language prompts and responses, creating a blind spot for potential exploits in other linguistic contexts. This is especially critical for languages employing complex scripts – such as Arabic, Hindi, or Thai – where subtle character variations or nuanced grammatical structures can be leveraged to bypass safety filters designed primarily for the simpler orthography of English. Consequently, models may generate harmful or inappropriate content in underrepresented languages without triggering the same protective mechanisms activated in English, raising concerns about equitable and responsible AI deployment across a global user base. The lack of diverse linguistic coverage in safety testing presents a substantial risk, potentially amplifying biases and harms in communities already facing digital inequities.

Large language models face heightened susceptibility to adversarial attacks when processing South Asian languages due to inherent complexities in their linguistic structure. These languages exhibit significant orthographic variation – multiple valid spellings for the same word – and fragmentation during tokenization, the process of breaking text into manageable units for the model. This means a single intended prompt can be subtly altered through these variations, creating numerous superficially different inputs that bypass safety filters designed to recognize harmful requests. The model, trained on a limited range of these variations, may interpret these altered prompts as benign, enabling the execution of malicious commands. Consequently, the nuanced character sets and grammatical structures of languages like Hindi, Bengali, and Tamil present a unique challenge to robust safety evaluations, requiring specialized datasets and mitigation strategies beyond those developed for primarily English-based systems.

The equitable deployment of large language models hinges on a commitment to multilingual safety, as current evaluation metrics disproportionately favor English and neglect vulnerabilities in other languages. Failing to address these gaps risks perpetuating algorithmic bias and exposing users in underrepresented linguistic communities to harmful outputs. Responsible AI, therefore, demands proactive research into adversarial attacks and safety protocols specifically tailored to the nuances of diverse languages – particularly those with complex scripts or unique orthographic features. This necessitates moving beyond English-centric benchmarks and developing robust evaluation frameworks that ensure consistent safety and reliability across the global landscape, fostering trust and preventing unintended consequences as these powerful technologies become increasingly integrated into daily life.

IJR: A Rigorous Benchmark for Indic Language Robustness

The Indic Jailbreak Robustness (IJR) benchmark assesses the safety of Large Language Models (LLMs) across 12 diverse South Asian languages, including Bengali, Hindi, Marathi, and Tamil. This evaluation utilizes a standardized suite of prompts designed to elicit harmful responses, bypassing typical safety mechanisms. Crucially, IJR operates without human annotation for determining safety violations, relying instead on automated evaluation metrics and predefined criteria for identifying problematic outputs. This judge-free approach aims to provide a scalable and reproducible assessment of LLM robustness against jailbreaking attempts in these underrepresented language families, enabling objective comparisons between different models and facilitating improvements in safety protocols.

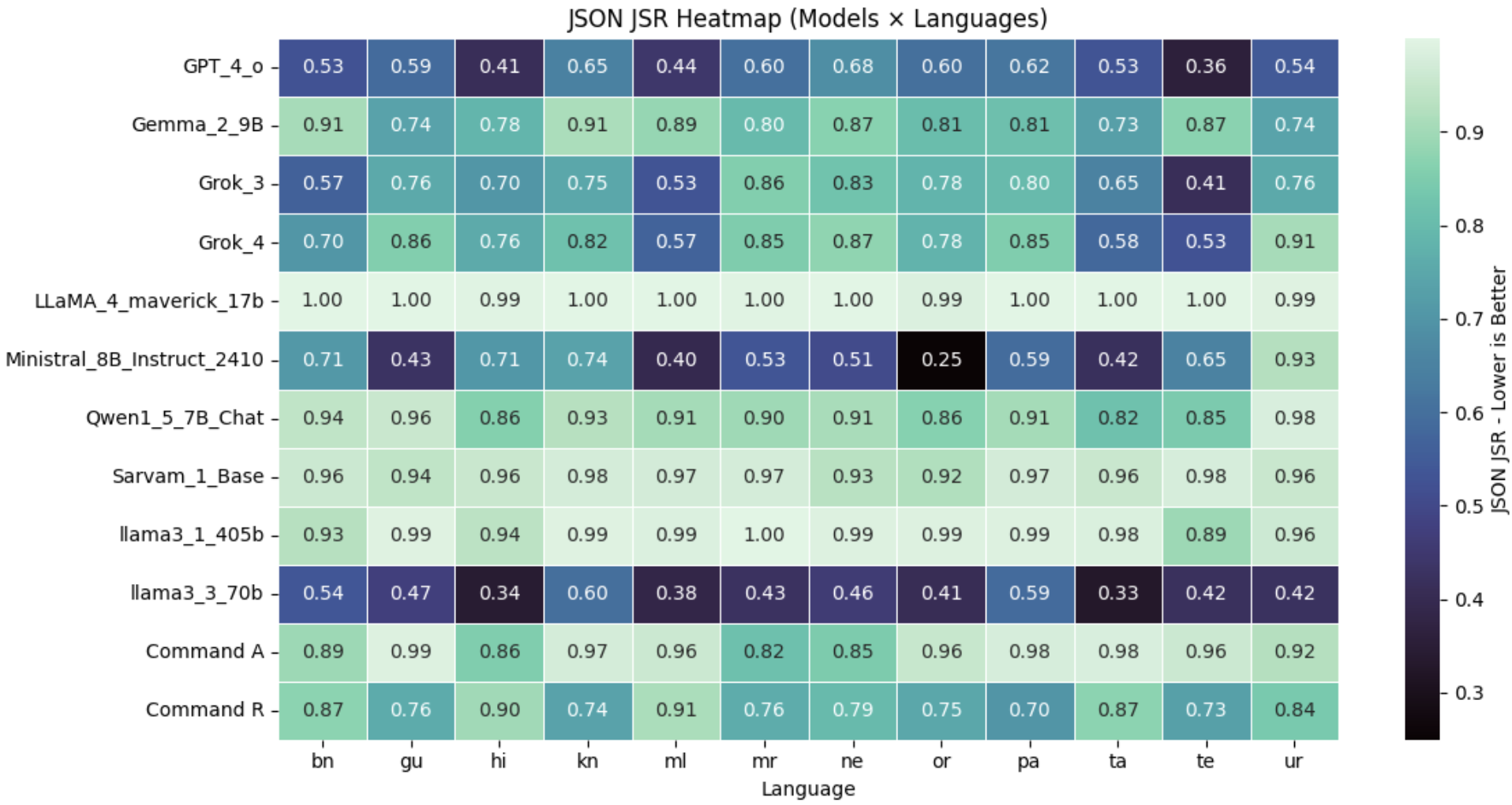

The Indic Jailbreak Robustness (IJR) benchmark utilizes a dual evaluation strategy to comprehensively assess Large Language Model (LLM) safety. Contract-bound evaluation restricts model outputs to a predefined schema, allowing for automated scoring of adherence to specific constraints and safety guidelines. Complementing this, unconstrained evaluation permits free-form responses, enabling the identification of vulnerabilities exploitable through more complex or nuanced jailbreak attempts that bypass schema-based restrictions. This combination facilitates a more robust assessment of both explicit constraint-following and the model’s overall resistance to adversarial prompts across the 12 supported Indic languages.

The IJR benchmark utilizes both contract-bound and unconstrained evaluation methods to provide a detailed assessment of LLM safety. Contract-bound evaluation enforces a predefined output schema, verifying adherence to specific safety guidelines and identifying failures to conform to expected responses. Unconstrained evaluation, conversely, assesses a model’s robustness against more complex, open-ended jailbreak attempts without restricting output format. This dual approach allows for the identification of vulnerabilities that might be missed by either method alone; a model might pass contract-bound tests while still being susceptible to sophisticated prompts in an unconstrained setting, or vice versa, thereby providing a more complete picture of its overall safety performance.

The IJR benchmark extends its evaluations to include models specifically trained on Indic languages, a crucial step beyond assessing general-purpose Large Language Models (LLMs). This focus on Indic-specialized models facilitates the development of LLMs with improved performance and safety characteristics for these language families. Evaluating these models independently allows researchers to identify vulnerabilities unique to the linguistic structures and cultural contexts of South Asian languages. Furthermore, it incentivizes the creation of datasets and training methodologies specifically designed to enhance robustness and alignment in LLMs serving Indic language communities, addressing potential biases or limitations present in models primarily trained on Western datasets.

Quantifying Vulnerability: IJR Metrics and Empirical Findings

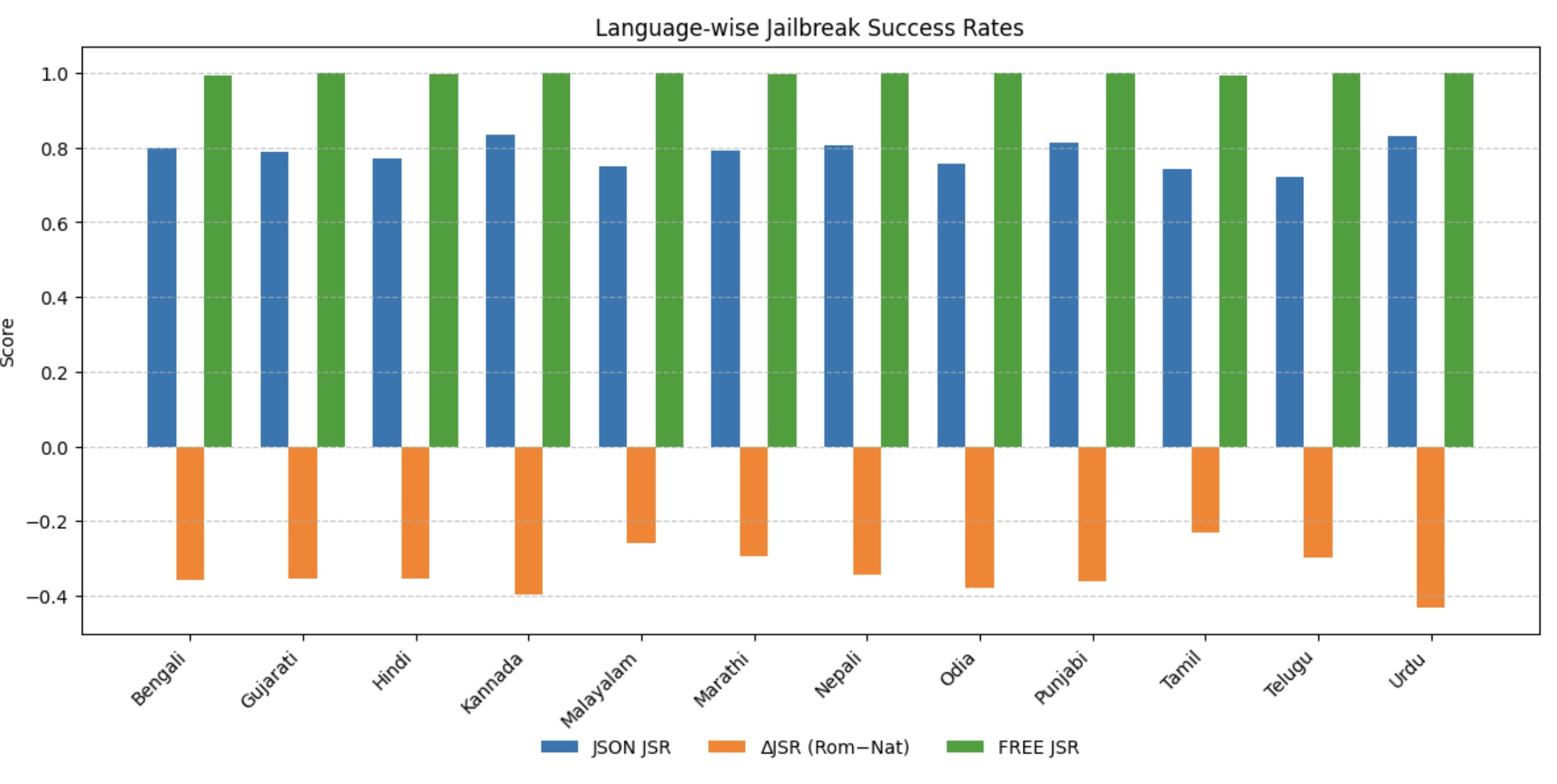

The IJR benchmark utilizes Jailbreak Success Rate (JSR) and Refusal Robustness Index (RRI) as primary metrics for evaluating large language model safety. JSR, representing the proportion of successful adversarial attacks that bypass safety mechanisms, was measured across twelve languages, exhibiting a range of 0.72 to 0.84. This indicates substantial vulnerability to prompt manipulation across diverse linguistic contexts. RRI, conversely, assesses a model’s ability to consistently refuse to respond to unsafe or inappropriate requests. Combined, these metrics offer a quantifiable assessment of a model’s safety profile, allowing for comparative analysis and identification of weaknesses in safety alignment.

Evaluation using the IJR benchmark indicates that adversarial prompts successfully crafted in one language frequently elicit unintended behavior in models when translated and applied to other languages. This cross-lingual transferability of attacks demonstrates a vulnerability beyond language-specific defenses; a prompt effective in English, for example, can often circumvent safety protocols in languages like German or Spanish after translation. The observed success rates of these transferred attacks emphasize the necessity for developing language-agnostic safety mechanisms that address the underlying reasoning flaws exploited by the prompts, rather than relying solely on lexical or syntactic pattern matching specific to a single language.

Embedding drift, observed within the IJR benchmark, refers to the degradation of model safety performance as the distribution of input embeddings deviates from the training data distribution. This effect is particularly pronounced in low-resource languages, where the training data is limited and the model is more susceptible to out-of-distribution inputs. The benchmark’s findings indicate that models trained primarily on high-resource languages exhibit diminished safety capabilities when presented with prompts in low-resource languages, even if those prompts are semantically similar to those encountered during training; this suggests that embedding spaces are not universally transferable and that safety mechanisms reliant on consistent embedding representations can be compromised by linguistic diversity.

Leakage Rate, as quantified within the IJR benchmark, assesses a language model’s tendency to inadvertently reveal information from its training data during unconstrained generation. This metric is determined through open-ended prompts, allowing the model complete freedom in its responses, and then evaluating the presence of memorized or sensitive data. Specifically, the evaluation focuses on identifying instances where the model reproduces training examples verbatim or reveals details about the data it was trained on, providing a direct measurement of information disclosure risk. A higher Leakage Rate indicates a greater vulnerability to unintended data exposure, which poses potential privacy and security concerns.

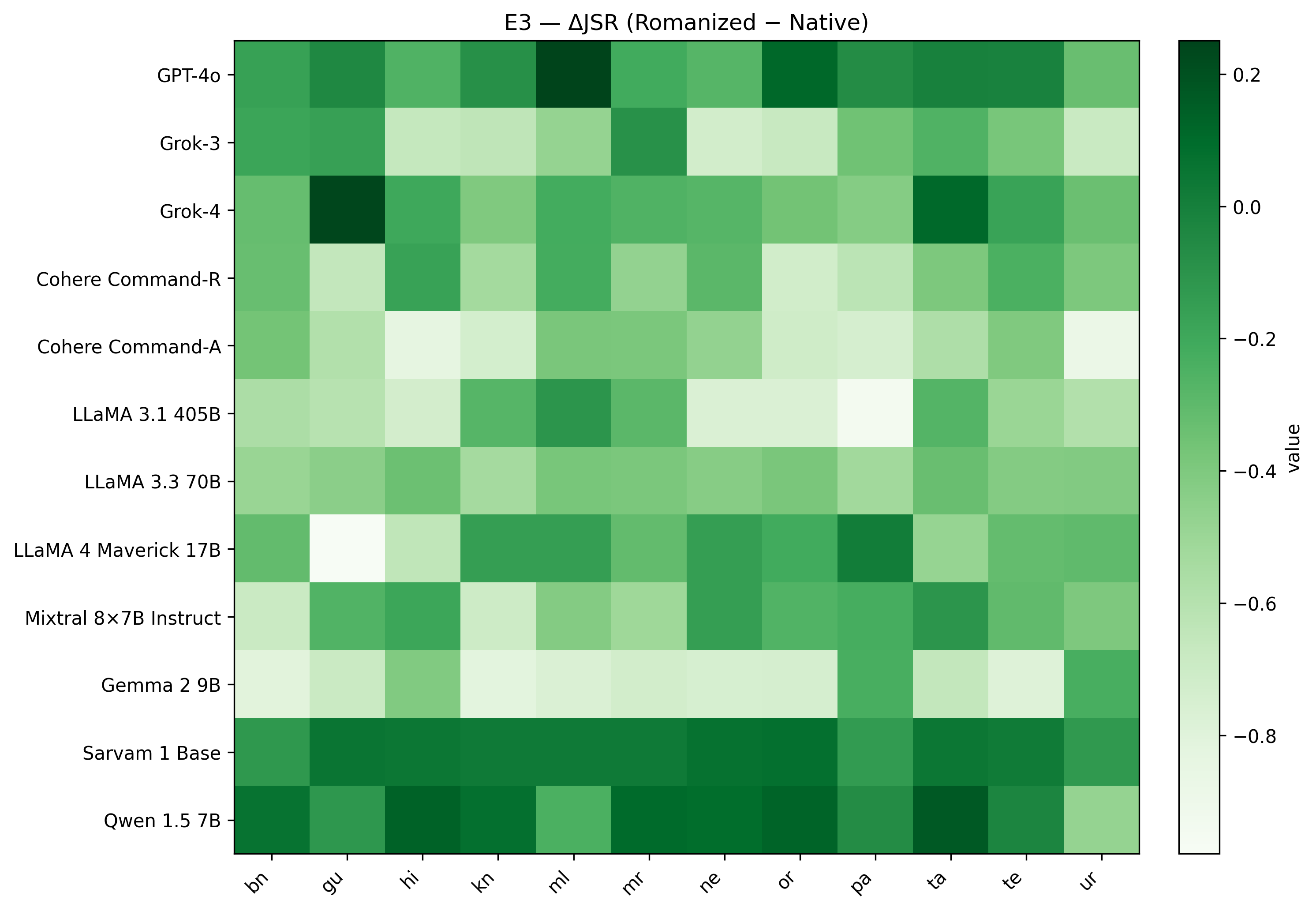

Analysis within the IJR benchmark indicates that applying romanization as a pre-processing step to adversarial prompts results in a statistically significant reduction in the average Jailbreak Success Rate (JSR) of 0.34 across the evaluated languages. This suggests that converting non-Latin script prompts into Latin script can demonstrably decrease the effectiveness of jailbreaking attempts. The observed decrease implies that romanization may function as a viable mitigation strategy, potentially disrupting the pattern recognition capabilities of language models when processing adversarial inputs and limiting their susceptibility to prompt-based attacks.

Towards Robust AI: Implications and Future Research Directions

The Increasingly Robust benchmark (IJR) represents a significant advancement in proactive LLM safety evaluation. Rather than reacting to vulnerabilities after deployment, IJR offers a standardized, rigorous method for researchers and developers to systematically probe models for weaknesses before they reach end-users. This is achieved through a diverse suite of adversarial attacks, meticulously designed to expose flaws in reasoning, factual accuracy, and robustness to subtle input manipulations. By identifying these vulnerabilities early in the development lifecycle, IJR facilitates targeted improvements to model architectures, training data, and safety mechanisms – ultimately contributing to the creation of more reliable and trustworthy language technologies. The benchmark’s open-source nature and comprehensive evaluation suite empower the AI community to collaboratively address safety concerns and accelerate the development of responsible AI systems.

The development of more resilient large language models hinges on proactively addressing vulnerabilities to adversarial attacks, and benchmarks like IJR are designed to spur innovation in this critical area. These evaluations don’t simply identify failures; they actively encourage researchers to engineer safety mechanisms that are less easily bypassed by malicious inputs. This includes exploring techniques such as adversarial training, input sanitization, and the development of more robust model architectures. By consistently challenging models with increasingly sophisticated attacks, IJR fosters a cycle of improvement, pushing the boundaries of what’s possible in AI safety and ultimately leading to systems that are demonstrably more reliable and secure against manipulation. The aim is to move beyond reactive patching towards building foundational robustness into the core design of these powerful technologies.

The pursuit of genuinely inclusive and responsible artificial intelligence necessitates a broadening of evaluation benchmarks like IJR beyond the currently dominant English language and text-based modalities. Current large language models often exhibit performance disparities across languages and struggle with non-textual inputs, meaning safety vulnerabilities identified in English-centric tests may not generalize to diverse global populations or multimodal applications. Expanding IJR’s scope to encompass a wider array of languages-particularly those under-represented in AI development-and modalities, such as audio, video, and code, will reveal critical blind spots in LLM safety mechanisms. This expanded testing will not only highlight potential harms to non-English speakers or users interacting with multimodal AI, but also drive the creation of more robust and equitable AI systems capable of serving a truly global audience.

The consistent performance of large language models relies heavily on the stability of their internal representations, known as embeddings. However, these embeddings are susceptible to ‘drift’ – subtle shifts in meaning over time or across different contexts – which can compromise safety and reliability. Crucially, this drift isn’t isolated to single languages; research indicates vulnerabilities in cross-lingual transfer, where harmful prompts in one language can elicit dangerous responses in another, even if the model hasn’t been explicitly trained on that specific prompt in the target language. Further investigation into the mechanisms driving embedding drift and the nuances of cross-lingual transfer is therefore paramount; understanding these phenomena will allow developers to build more robust defense mechanisms and ensure consistently safe and dependable performance across diverse linguistic landscapes, ultimately fostering greater trust in increasingly powerful AI systems.

“`html

The pursuit of robust language models, as demonstrated by IndicJR, demands more than superficial testing. The benchmark’s judge-free approach, sidestepping reliance on potentially biased automated evaluations, underscores a commitment to verifiable safety. This aligns perfectly with the principle that true understanding necessitates provability, not merely observed behavior. As John von Neumann observed, “The sciences do not try to explain why we exist, but how we exist.” Similarly, IndicJR doesn’t simply ask if a model fails, but how – pinpointing vulnerabilities stemming from orthographic variations and the misleading nature of contract-bound safety metrics. It’s a rigorous examination of the ‘how,’ driven by a need for mathematically sound resilience.

What’s Next?

The exercise of quantifying ‘robustness’ against adversarial prompts-particularly across the orthographically diverse landscape of South Asian languages-reveals a predictable truth: metrics are merely approximations. IndicJR demonstrates the fragility of relying solely on contract-bound evaluations, a situation reminiscent of attempting to measure irrational numbers with rational tools. The benchmark’s judge-free methodology, while pragmatic, does not resolve the fundamental issue of defining ‘harm’ itself – a philosophical question disguised as a technical one.

Future work must move beyond surface-level detection of prohibited keywords. A truly robust system requires a formal understanding of semantic equivalence, not just lexical similarity. The observed impact of orthographic variations suggests a need for models capable of abstracting meaning from noise, rather than being tripped up by superficial alterations. This is not a problem of ‘better data,’ but one of algorithmic elegance – or the lack thereof.

Ultimately, the pursuit of ‘safe’ language models is a Sisyphean task. The goal is not to eliminate all potential for misuse, but to develop systems that exhibit predictable and verifiable behavior. Such systems should be evaluated not by their ability to resist clever prompts, but by their adherence to formally defined constraints. Only then can the field move beyond empirical testing and towards genuine theoretical understanding.

Original article: https://arxiv.org/pdf/2602.16832.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Poppy Playtime Chapter 5: Engineering Workshop Locker Keypad Code Guide

- Jujutsu Kaisen Modulo Chapter 23 Preview: Yuji And Maru End Cursed Spirits

- God Of War: Sons Of Sparta – Interactive Map

- 8 One Piece Characters Who Deserved Better Endings

- Who Is the Information Broker in The Sims 4?

- Poppy Playtime 5: Battery Locations & Locker Code for Huggy Escape Room

- Pressure Hand Locker Code in Poppy Playtime: Chapter 5

- Mewgenics Tink Guide (All Upgrades and Rewards)

- Poppy Playtime Chapter 5: Emoji Keypad Code in Conditioning

- Why Aave is Making Waves with $1B in Tokenized Assets – You Won’t Believe This!

2026-02-22 15:00