Author: Denis Avetisyan

A new approach identifies when a question is impossible to answer with a database, preemptively halting query generation for safer and more reliable text-to-SQL systems.

LatentRefusal leverages hidden states within a frozen language model to detect refusal signals, offering a fast and robust method for handling unanswerable text-to-SQL queries.

Despite advances in large language models for text-to-SQL, systems still struggle with unanswerable queries, potentially generating misleading or unsafe SQL code. This paper introduces LatentRefusal (Latent-Signal Refusal for Unanswerable Text-to-SQL Queries), an approach that identifies refusal signals within a frozen LLM’s hidden activations before any SQL is generated. By employing a lightweight probing architecture to amplify sparse cues of question-schema mismatch, LatentRefusal offers a fast and reliable safety layer, raising the average F1 score to 88.5% with minimal overhead. Could this latent-signal approach unlock more robust and trustworthy LLM-based data interactions beyond text-to-SQL?

The Perilous Path of Translation: Confronting Uncertainty in Text-to-SQL

Text-to-SQL systems, while demonstrating remarkable capabilities in translating natural language into database queries, are inherently vulnerable to generating flawed or even harmful results when confronted with problematic inputs. Ambiguous questions, lacking sufficient clarity for precise interpretation, can lead to queries that retrieve unintended data or produce inaccurate answers. More critically, systems often struggle with unanswerable questions – those for which the database simply contains no relevant information – and may attempt to construct a query anyway, potentially leading to system errors or the fabrication of plausible-sounding but entirely false responses. This susceptibility highlights a fundamental challenge in deploying these powerful tools: ensuring that the system not only understands what is asked, but also recognizes when a question cannot be reliably answered with the available data, demanding robust mechanisms to prevent the execution of unsafe or incorrect queries.

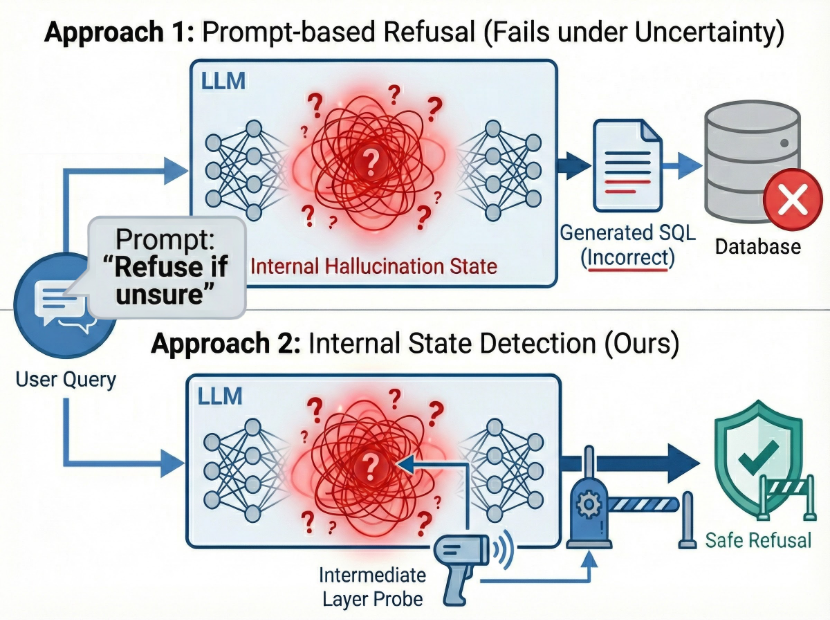

Current methods for determining if a question can be accurately answered by a text-to-SQL system frequently falter when confronted with the intricacies of natural language and the complexity of database queries. These traditional ‘answerability gating’ techniques often rely on superficial keyword matching or simplified semantic analysis, proving inadequate for discerning genuinely unanswerable questions from those merely phrased in a challenging manner. Consequently, systems may attempt to construct queries for ambiguous or unsupported requests, leading to inaccurate results or even system failures. This unreliability is particularly pronounced with questions involving complex relationships between database tables, negations, or subtle contextual cues, highlighting a significant gap in the field and necessitating more sophisticated approaches to pre-query validation.

The development of reliable text-to-SQL systems hinges on their ability to discern unanswerable questions and gracefully abstain from generating queries – a capability demanding robust refusal mechanisms. Current systems often proceed with flawed interpretations of ambiguous requests or attempt to answer questions exceeding the database’s knowledge, leading to inaccurate or even harmful results. A pre-execution refusal strategy, however, promises to intercept such problematic inputs, preventing the creation of potentially erroneous SQL and ensuring user trust. Such mechanisms require sophisticated understanding of both natural language nuance and database schema limitations, effectively acting as a critical safety net before any database interaction occurs. This proactive approach represents a significant advancement toward deployable, trustworthy text-to-SQL applications, safeguarding against incorrect information and bolstering system reliability.

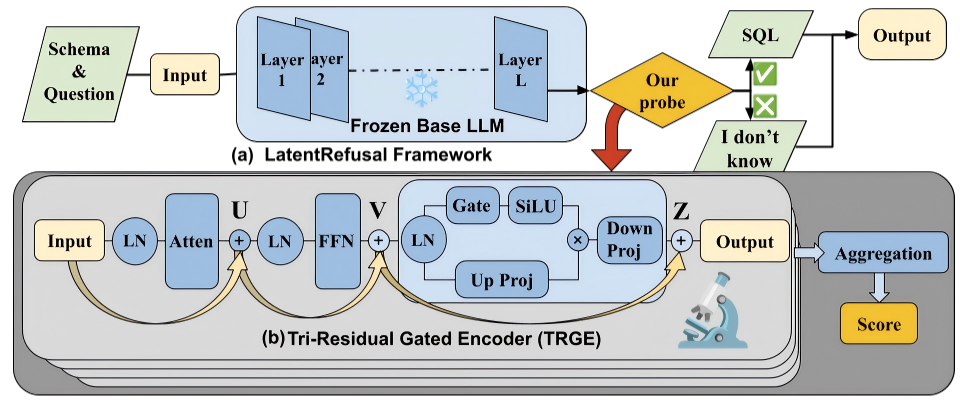

LatentRefusal: Probing for Unanswerability with Minimal Intrusion

LatentRefusal determines whether an input is answerable without requiring full query generation. The method analyzes the hidden states produced by a frozen Large Language Model (LLM), meaning the LLM’s weights are not updated during the process. By directly assessing these internal representations, LatentRefusal predicts whether the question can be answered against the given database schema before any query is constructed. This pre-query assessment is computationally efficient, because it avoids generating and processing queries for questions that turn out to be unanswerable. The technique aims to identify inputs that the schema cannot support, enabling proactive refusal and reducing unnecessary processing.

LatentRefusal employs a Tri-Residual Gated Encoder to analyze the hidden states of the frozen LLM and identify potential unanswerability. This encoder is designed for efficient probing of these states, with a focus on amplifying signals that indicate a mismatch between the question and the database schema. The tri-residual structure facilitates signal propagation and gradient flow during training, while the gating mechanism selectively emphasizes features relevant to that mismatch. This targeted amplification allows for more accurate refusal prediction without full query generation, reducing the computational cost of identifying inputs that cannot be answered.

The Tri-Residual Gated Encoder addresses the issue of Schema Noise – irrelevant or misleading signals within the frozen language model’s hidden states – which reduces the accuracy of refusal detection. The encoder uses a tri-residual connection structure designed to selectively amplify signals indicative of question-schema mismatch while suppressing noise. It also uses the SwiGLU activation function, a gated linear unit, to control information flow and improve the probe’s capacity to distinguish between valid and invalid question-schema pairings. This architecture allows for more reliable prediction of unanswerability by focusing on relevant features within the latent representation.
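To make the gating idea concrete, here is a minimal sketch of a single SwiGLU block with a residual connection, written in plain Python. It illustrates the general SwiGLU formulation only. The exact layout of the Tri-Residual Gated Encoder (how the three residual paths are arranged, layer sizes, normalization) is not described in this article, so the function names, dimensions, and random weights below are all assumptions made for illustration.

```python
import math
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def swish(z):
    """Swish (SiLU) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))

def swiglu_residual_block(x, W_gate, W_value, W_out):
    """Compute x + W_out @ (swish(W_gate @ x) * (W_value @ x)).

    The gate path decides, feature by feature, how much of the value path
    passes through; the residual connection keeps the original signal.
    """
    gate = [swish(g) for g in matvec(W_gate, x)]
    value = matvec(W_value, x)
    gated = [g * v for g, v in zip(gate, value)]
    update = matvec(W_out, gated)
    return [xi + ui for xi, ui in zip(x, update)]

def random_matrix(rows, cols, rng, scale=0.1):
    """Small random weights standing in for trained parameters (hypothetical)."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, d_hidden = 4, 8  # toy sizes, not the paper's
W_gate = random_matrix(d_hidden, d_model, rng)
W_value = random_matrix(d_hidden, d_model, rng)
W_out = random_matrix(d_model, d_hidden, rng)

h = [0.5, -1.0, 0.25, 2.0]  # stand-in for one hidden-state vector
out = swiglu_residual_block(h, W_gate, W_value, W_out)
print(out)
```

Because of the residual connection, a block whose output projection is all zeros returns its input unchanged, which is one reason such blocks tend to be easy to train on top of frozen features.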

LatentRefusal reduces computational cost by predicting unanswerability from the frozen Large Language Model’s hidden states before query generation. Many existing methods must generate a full query, or several, for each input before they can assess answerability, which is resource intensive. By operating on latent representations, LatentRefusal bypasses complete query decoding, reducing both processing time and compute, especially for large volumes of input or in resource-constrained environments. Unanswerable questions can therefore be filtered out before incurring the expense of query generation and subsequent response processing.
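The control flow described above can be sketched as a gate in front of the SQL generator. This is a simplified illustration, not the paper’s implementation: a plain logistic probe stands in for the trained encoder, and `fake_encode`, `fake_generate_sql`, `FAKE_HIDDEN`, the weights, and the 0.5 threshold are all hypothetical stand-ins.

```python
import math

def refusal_probability(hidden, weights, bias):
    """Logistic probe: sigmoid(w . h + b). A stand-in for the trained probe."""
    logit = sum(w * h for w, h in zip(weights, hidden)) + bias
    return 1.0 / (1.0 + math.exp(-logit))

def gated_text_to_sql(question, schema, encode, generate_sql,
                      weights, bias, threshold=0.5):
    """Return SQL, or None when the probe says the question is unanswerable.

    `encode` represents one forward pass of the frozen LLM; `generate_sql`
    represents full decoding and is only called when the gate lets it through.
    """
    hidden = encode(question, schema)
    if refusal_probability(hidden, weights, bias) >= threshold:
        return None  # refuse before any SQL is generated
    return generate_sql(question, schema)

# Toy stand-ins so the sketch runs end to end (all values are made up).
FAKE_HIDDEN = {
    "How many orders were placed in 2024?": [0.2, -1.3],
    "What is the CEO's favorite color?": [1.8, 0.9],
}
generation_calls = []

def fake_encode(question, schema):
    return FAKE_HIDDEN[question]

def fake_generate_sql(question, schema):
    generation_calls.append(question)
    return "SELECT COUNT(*) FROM orders WHERE year = 2024"

SCHEMA = "orders(id, customer_id, year, total)"
WEIGHTS, BIAS = [1.5, 1.0], -0.5

answered = gated_text_to_sql("How many orders were placed in 2024?", SCHEMA,
                             fake_encode, fake_generate_sql, WEIGHTS, BIAS)
refused = gated_text_to_sql("What is the CEO's favorite color?", SCHEMA,
                            fake_encode, fake_generate_sql, WEIGHTS, BIAS)
print(answered, refused, generation_calls)
```

The point of the design is visible in `generation_calls`: the expensive generator runs only for the question that passes the gate.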

Validating Refusal: Empirical Evidence Across Diverse Datasets

LatentRefusal’s efficacy was assessed across four datasets: TriageSQL, a cross-domain benchmark for classifying question intent in text-to-SQL, including several kinds of unanswerable questions; AMBROSIA, a benchmark for parsing ambiguous questions into database queries; SQuAD 2.0, a reading comprehension benchmark that includes unanswerable questions; and MD-Enterprise, a medical domain question answering dataset. This selection was chosen to evaluate how well the method generalizes across question types, reasoning complexity, and knowledge domains. Performance was measured on each dataset separately so that the results do not hinge on a single task or on the biases of one dataset.

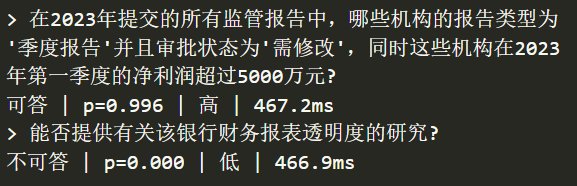

Experimental results show that LatentRefusal, when integrated with large language models (LLMs) such as Qwen-3-8B and Llama-3.1-8B, consistently outperforms baseline approaches at identifying questions that cannot be answered from the given schema or context. Across TriageSQL, AMBROSIA, SQuAD 2.0, and MD-Enterprise, LatentRefusal achieves an average F1 score of 88.5% on this task. With unanswerable questions treated as the positive class, this metric balances two abilities: flagging only questions that are genuinely unanswerable (precision) and catching as many of the unanswerable questions as possible (recall).

Evaluations suggest that LatentRefusal’s effectiveness in identifying unanswerable questions does not depend heavily on the underlying Large Language Model (LLM). Testing covered LLMs including Qwen-3-8B and Llama-3.1-8B and yielded consistently strong performance, without significant variance attributable to the specific model. This consistency across backbones indicates that LatentRefusal can be integrated as a refusal detection component with a range of LLMs, simplifying deployment across diverse natural language processing systems.

Analysis of LatentRefusal’s output demonstrates well-calibrated prediction probabilities, signifying a reliable assessment of uncertainty in question answering. This calibration was quantitatively assessed, and results indicate LatentRefusal consistently outperforms baseline methods in this metric, achieving improvements of up to 14 percentage points across evaluated datasets. This improved calibration suggests the model’s confidence scores accurately reflect the likelihood of a correct refusal decision, offering greater trustworthiness in scenarios requiring robust identification of unanswerable questions.
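The article does not name the calibration metric used. One common choice is expected calibration error (ECE), which bins predictions by confidence and compares the average predicted probability in each bin with the observed frequency of the positive class. The sketch below assumes that metric and uses made-up probabilities and labels; it is an illustration of what "well calibrated" means, not a reproduction of the paper’s evaluation.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """ECE for binary predictions.

    probs  : predicted probability that each question is unanswerable
    labels : 1 if the question really is unanswerable, else 0
    """
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))

    total = len(probs)
    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(p for p, _ in bucket) / len(bucket)
        frac_pos = sum(y for _, y in bucket) / len(bucket)
        # Weight each bin's confidence/frequency gap by its share of the data.
        ece += (len(bucket) / total) * abs(avg_conf - frac_pos)
    return ece

# Ten predictions of 0.9, of which nine are truly unanswerable: well calibrated.
calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])

# Ten predictions of 0.9, of which only five are unanswerable: overconfident.
overconfident = expected_calibration_error([0.9] * 10, [1] * 5 + [0] * 5)

print(round(calibrated, 3), round(overconfident, 3))
```

A lower value is better: the first case scores close to zero, while the overconfident case scores about 0.4, a gap of 40 percentage points between stated confidence and observed frequency.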

On the AMBROSIA dataset, LatentRefusal achieves an F1 score of 80.2% in identifying unanswerable questions, an 18.1-point improvement over the Semantic Entropy baseline on the same dataset. The F1 score, the harmonic mean of precision and recall, was used as the primary metric to quantify how well the method distinguishes answerable from unanswerable questions within the AMBROSIA benchmark.
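As a worked example of the metric, the following sketch computes precision, recall, and F1 with "unanswerable" as the positive class. The labels and predictions are invented for illustration and have nothing to do with the reported results.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1

# 1 = unanswerable, 0 = answerable (toy data).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)
```

Here three of the five refusals are warranted (precision 0.60) and three of the four unanswerable questions are caught (recall 0.75), giving an F1 of about 0.67. The harmonic mean sits closer to the weaker of the two numbers, so a detector cannot score well by refusing everything or by refusing nothing.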

Beyond Text-to-SQL: Implications for Robust and Trustworthy AI

Text-to-SQL applications, while powerful, risk executing incorrect queries when presented with unanswerable questions or ambiguous requests. LatentRefusal addresses this vulnerability with a mechanism that proactively identifies and rejects such inputs, bolstering the safety and reliability of these systems. The approach does not depend on the model explicitly stating that a question is unanswerable; instead, it learns to recognize patterns in the model’s hidden states that indicate the question cannot be confidently translated into accurate SQL. By refusing to generate SQL when that signal is strong, LatentRefusal minimizes the potential for erroneous data retrieval or manipulation, offering a practical route to more trustworthy language-based database interfaces. This proactive refusal capability matters most in applications where data integrity and accurate results are paramount.

The utility of LatentRefusal transcends the specific domain of Text-to-SQL, representing a broadly applicable methodology for enhancing the safety and reliability of any language-driven reasoning system. By identifying and refusing to process requests that exceed a model’s capabilities or fall outside its knowledge boundaries, this framework establishes a proactive defense against erroneous outputs. The core principle – discerning unanswerable queries through latent space analysis – isn’t limited to database interactions; it can be adapted to applications ranging from complex question answering and code generation to scientific hypothesis evaluation and medical diagnosis. This generalized approach allows developers to build more robust and trustworthy AI systems, mitigating the risks associated with overconfident or inaccurate responses in diverse, critical applications.

Continued development anticipates pairing LatentRefusal with self-evaluation methodologies, aiming to enhance the robustness and reliability of language-based reasoning systems. This integration seeks to enable models not only to refuse unanswerable queries but also to assess confidence in the responses they do give, providing a more nuanced picture of their limitations. Researchers also intend to investigate more sophisticated techniques for quantifying uncertainty, notably Semantic Entropy – a measure of uncertainty computed over the meanings of multiple sampled outputs rather than over their surface wording. By combining proactive refusal mechanisms with refined uncertainty estimation, the goal is to build systems that are both safer and more transparent in their reasoning, ultimately fostering greater trust in their outputs.

Compared with alternative techniques such as Spectra Analysis and Semantic Entropy, LatentRefusal is reported to be both more effective and faster at detecting unanswerable questions. The probe itself adds only about 2 milliseconds of inference latency. The reported speedup over Semantic Entropy, which requires 740 milliseconds for the same task, is 13.7 times; that ratio corresponds to roughly 54 milliseconds (740 / 13.7), so it presumably reflects end-to-end latency including the frozen model’s forward pass rather than the 2-millisecond probe alone. This efficiency positions LatentRefusal as a practical and scalable solution for enhancing the safety and reliability of language-driven database interactions and potentially other reasoning systems.
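For context on why the Semantic Entropy baseline is slower, here is a minimal sketch of its frequency-based form: sample several outputs for the same question, group them by meaning, and take the entropy of the group frequencies. Each sample costs a full generation, which is consistent with the latency gap quoted above. The original method groups outputs using bidirectional entailment; `normalize_sql` below is a crude hypothetical stand-in for that step, and the sample queries are invented.

```python
import math
from collections import Counter

def semantic_entropy(samples, meaning_key):
    """Entropy over meaning clusters of sampled outputs (frequency-based).

    samples     : several generations for the same question
    meaning_key : maps a generation to a cluster label; outputs with the
                  same label are treated as meaning the same thing
    """
    clusters = Counter(meaning_key(s) for s in samples)
    n = len(samples)
    return sum(-(c / n) * math.log(c / n) for c in clusters.values())

def normalize_sql(sql):
    """Hypothetical meaning key: ignore case, whitespace and semicolons."""
    return " ".join(sql.lower().replace(";", "").split())

# The model keeps producing the same query: low uncertainty.
consistent = [
    "SELECT COUNT(*) FROM orders;",
    "select count(*) from orders",
    "SELECT  COUNT(*)  FROM orders",
    "SELECT COUNT(*) FROM orders",
]

# The model produces a different query every time: high uncertainty.
divergent = [
    "SELECT color FROM employees",
    "SELECT name FROM products",
    "SELECT COUNT(*) FROM orders",
    "SELECT total FROM invoices",
]

low = semantic_entropy(consistent, normalize_sql)
high = semantic_entropy(divergent, normalize_sql)
print(low, high)
```

The consistent samples collapse into one cluster and score zero, while four mutually distinct samples score log 4, the maximum for four samples. A probe over hidden states avoids the repeated sampling altogether.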

The pursuit of efficient refusal detection, as demonstrated by LatentRefusal, echoes a fundamental principle of elegant design. The method’s focus on extracting refusal signals from the frozen LLM’s latent space – specifically, its hidden states – prior to SQL generation, embodies a commitment to minimizing unnecessary computation. As Brian Kernighan observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” LatentRefusal, in a similar vein, prioritizes a direct and readily available signal – the latent refusal – over complex post-generation analysis, offering both speed and reliability. This mirrors a preference for simplicity and clarity in addressing the challenge of unanswerable queries.

Beyond Refusal

The presented work isolates a signal. A useful simplification. Yet, the question of ‘unanswerability’ remains stubbornly ill-defined. Current evaluation relies on curated datasets – artificial constraints on a universe of possible questions. Future effort must address queries genuinely outside the model’s knowledge, rather than merely those poorly mapped onto a schema. This necessitates a move beyond binary refusal – a spectrum of uncertainty is more honest.

The focus on frozen LLMs is pragmatic, acknowledging resource constraints. However, the true limit is not computation, but understanding. Detecting refusal in a static model is a solved problem, relatively. The challenge lies in building models which know what they do not know, and can articulate that ignorance without prompting. This requires internal self-assessment, a concept currently absent from large language model designs.

Schema noise, deliberately introduced, offers a valuable diagnostic. But real-world databases are rarely clean. Future research should investigate robustness to genuinely corrupted or incomplete schemas – a test of practical utility. Clarity is the minimum viable kindness; a refusal mechanism, however elegant, is merely a palliative if the underlying system remains brittle.

Original article: https://arxiv.org/pdf/2601.10398.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-17 10:26