Author: Denis Avetisyan

New research reveals that standard theoretical guarantees are insufficient for efficient offline RL when dealing with incomplete data, demanding a deeper understanding of decision complexity.

This paper demonstrates that Q⋆-realizability and Bellman completeness do not ensure sample efficiency in offline reinforcement learning with partial coverage, necessitating consideration of coverage-adaptive performance and decision complexity.

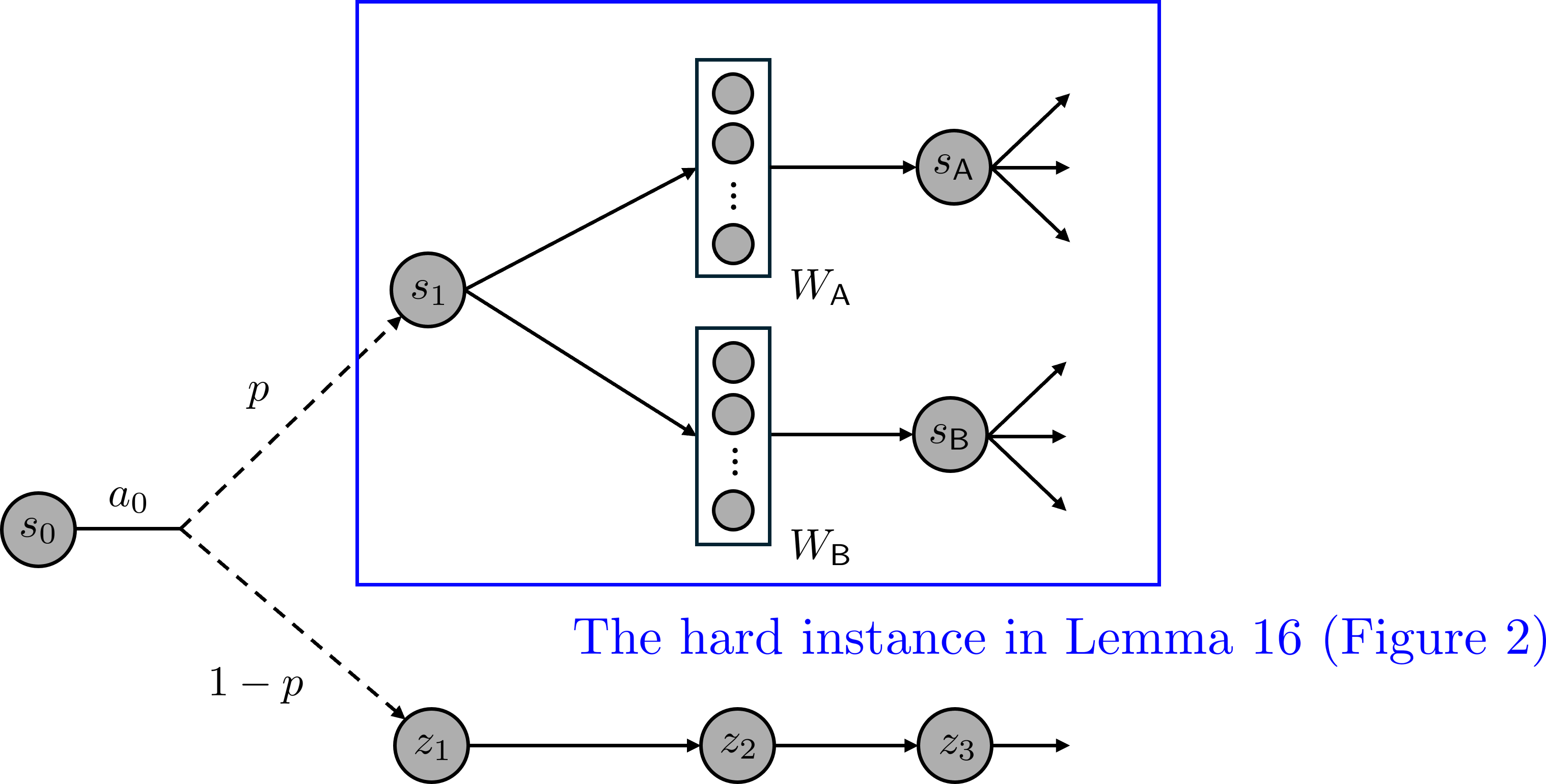

Despite the prevalence of optimism regarding Q^\star-realizability and Bellman completeness, these conditions are insufficient for efficient offline reinforcement learning under partial coverage. This work, ‘On the Complexity of Offline Reinforcement Learning with $Q^\star$-Approximation and Partial Coverage’, introduces a decision-estimation framework to characterize the intrinsic complexity of offline RL, revealing that decision complexity and coverage-adaptive performance are critical for sample efficiency. Notably, this framework recovers and improves existing bounds, yielding the first \epsilon^{-2} sample complexity for soft Q-learning under partial coverage and extending learnability guarantees to broader settings. Can these insights unlock more robust and scalable offline RL algorithms capable of tackling real-world decision-making problems?

The Promise and Peril of Learning from Static Data

Offline reinforcement learning presents a compelling alternative to traditional methods by enabling agents to learn effective policies solely from pre-collected datasets, circumventing the need for potentially expensive and time-consuming direct interaction with an environment. This approach unlocks the potential to leverage existing data – such as logs from robotic systems, historical gameplay recordings, or medical records – to train intelligent agents without further environmental engagement. The promise lies in accelerating the learning process and expanding the applicability of RL to domains where real-time interaction is impractical, dangerous, or resource-intensive, offering a pathway toward deploying intelligent systems in previously inaccessible settings.

A fundamental challenge in offline reinforcement learning arises from the reality that datasets rarely offer complete coverage of all possible states and actions an agent might encounter. This ‘partial coverage’ presents a significant risk, as learned policies are prone to extrapolate beyond the training data, potentially leading to unpredictable and suboptimal behavior in previously unseen situations. Essentially, the agent is asked to make decisions in contexts it hasn’t explicitly learned from, hindering its ability to generalize effectively and necessitating robust methods to mitigate this distributional shift. This limitation underscores the need for algorithms designed to account for data scarcity and prioritize safe exploration within the confines of a static dataset.

A core challenge in offline reinforcement learning stems from the risk of deploying policies into states and actions not adequately represented in the training dataset, leading to unpredictable and often suboptimal performance. Recent research clarifies that simply satisfying established criteria – such as Q^\star-realizability and Bellman completeness – isn’t enough to guarantee effective learning from limited data. This work provides a foundational understanding of the limitations of these conditions, demonstrating that sample efficiency in offline RL hinges on more nuanced factors related to the distribution of data and the ability of the learned policy to generalize beyond the observed states and actions – highlighting the need for new approaches that explicitly address the risks of extrapolation and distributional shift.

Mitigating Risk: The Power of Pessimism

Pessimism in offline reinforcement learning addresses the challenge of distributional shift by conservatively estimating the value of actions not represented in the training dataset. Traditional reinforcement learning assumes the agent will continue to interact with an environment consistent with the data used for training; however, offline RL algorithms must generalize to potentially unseen states and actions. Pessimistic approaches mitigate risk by assuming the worst possible outcome for these unseen actions, effectively widening the confidence interval around the estimated Q-value. This ensures the agent avoids potentially catastrophic outcomes stemming from extrapolating value functions to out-of-distribution scenarios and encourages exploration of well-understood, in-distribution actions during policy execution. The degree of pessimism is often controlled by a parameter influencing the magnitude of the conservative adjustment to the Q-value estimate.

Value-Centric and Policy-Centric Pessimism represent distinct approaches to mitigating risk in Reinforcement Learning by conservatively estimating action values. Value-Centric methods, such as the original formulation of Pessimistic Value Iteration, directly constrain the estimated value function, assuming the worst possible outcome for any unseen state-action pair. Conversely, Policy-Centric Pessimism focuses on constraining the policy itself, prioritizing actions with lower uncertainty and effectively broadening the range of actions considered safe. This difference in focus leads to varying sensitivities to distributional shift; Value-Centric methods can be overly conservative if the value distribution is poorly understood, while Policy-Centric approaches may exhibit more exploration but potentially higher immediate risk.

Conservative QQ-Learning builds upon principles of pessimistic value estimation by integrating them directly into a value-based reinforcement learning framework. Unlike prior approaches such as value-centric pessimism which often rely on distributional shift penalties, Conservative QQ-Learning utilizes a clipped double Q-learning update to constrain action values and inherently promote pessimism. This method demonstrably achieves tighter performance bounds – theoretically and empirically – compared to existing value-centric pessimism algorithms. Specifically, the clipping mechanism in the Q-learning update effectively reduces the variance of value estimates, leading to more robust policies and improved performance in offline RL settings where distributional shift is a concern.

Acknowledging Limitations: Beyond Ideal Assumptions

Pessimistic offline reinforcement learning algorithms rely heavily on assumptions regarding the quality of the learned Q-function and the underlying Markov Decision Process (MDP). Specifically, Q-Realizability posits that a near-optimal policy exists within the space of policies inducible by the learned Q-function, while Bellman Completeness ensures the existence of a stable optimal Q-value. Violations of these assumptions-such as function approximation error or extrapolation to out-of-distribution states-can lead to overly conservative policy evaluation and suboptimal performance. The efficacy of pessimism-based methods is thus directly linked to the validity of these assumptions within the given offline dataset and MDP structure; algorithms failing to meet these criteria may exhibit poor sample efficiency and fail to generalize effectively.

Decision Complexity and Estimation Error are primary factors contributing to suboptimal performance in reinforcement learning. Decision Complexity, quantified by the branching factor and horizon of the Markov Decision Process (MDP), directly impacts the number of possible action sequences and thus the difficulty of identifying the optimal policy. Simultaneously, Estimation Error arises from the limited data available to approximate the true value function or policy; this error manifests as inaccuracies in state-action value estimates Q(s,a) or policy parameters. The combined effect of these factors necessitates a substantial amount of data to achieve near-optimal performance, even under ideal conditions, and defines the inherent difficulty of the learning task irrespective of algorithmic improvements.

Current reinforcement learning frameworks often prioritize assumptions like Q-Realizability and Bellman Completeness as primary indicators of offline algorithm performance and sample efficiency. However, this work posits that these conditions are insufficient for accurately predicting success, particularly in complex Markov Decision Processes (MDPs). A more robust approach requires frameworks that explicitly model and account for inherent MDP complexities, including factors like Decision Complexity – the difficulty in selecting optimal actions – and Estimation Error, which arises from inaccurate value function approximations. By moving beyond reliance on these simplifying assumptions, research can focus on developing algorithms better equipped to handle the realities of complex, real-world learning tasks and achieve improved generalization and robustness.

E2D.OR: Forging Robust Policies Through Adversarial Training

E2D.OR presents a distinctive approach to offline reinforcement learning, drawing inspiration from the online Distributed Entropy Control (DEC) framework and implementing an adversarial policy optimization strategy. Unlike traditional offline RL methods that often struggle with distribution shift and limited data, E2D.OR trains a policy while simultaneously learning an adversarial model designed to challenge it with difficult state-action pairs. This adversarial process forces the policy to become more robust and generalize beyond the confines of the static dataset, effectively simulating a more dynamic and diverse learning environment. By optimizing the policy against this learned adversary, E2D.OR aims to improve performance and stability in scenarios where data collection is expensive or impractical, offering a pathway toward more reliable and adaptable offline RL agents.

E2D.OR enhances the resilience and adaptability of offline reinforcement learning through a carefully constructed adversarial process. The algorithm doesn’t simply learn from existing data; it actively challenges its own developing policy with a competing ‘adversary’ model designed to identify weaknesses and potential failure points. This rigorous self-assessment is particularly beneficial when dealing with datasets that lack comprehensive coverage of all possible states and actions – a common limitation in real-world applications. By forcing the policy to defend against intelligently crafted challenges, E2D.OR encourages the development of strategies that are less susceptible to unexpected situations and more capable of generalizing beyond the observed data, ultimately leading to more reliable performance in unseen environments.

Initial evaluations indicate that the E2D.OR algorithm consistently achieves superior performance when contrasted with established offline reinforcement learning methods, notably the Generative Distribution Estimation (GDE) technique. Across a range of benchmark datasets, E2D.OR exhibits improved sample efficiency and greater stability during the learning process, suggesting its capacity to effectively navigate complex environments with limited data. These findings highlight the potential of adversarial approaches to bolster offline RL, and position E2D.OR as a compelling avenue for continued investigation into robust and generalizable policies, particularly in scenarios where acquiring extensive training data is impractical or costly.

Looking Ahead: Characterizing and Conquering Dataset Complexity

The inherent difficulty of applying reinforcement learning to previously collected datasets – known as offline RL – is not uniform; some datasets present far greater challenges than others. Recent research introduces Coverage-Adaptive Complexity as a means of quantifying this difficulty, moving beyond simple dataset size or reward magnitude. This metric assesses how well a learning algorithm can cover the state-action space given the available data, effectively measuring the ‘representativeness’ of the dataset. A low coverage score indicates the algorithm may struggle to generalize to unseen states, while a high score suggests the dataset is sufficiently informative. Crucially, Coverage-Adaptive Complexity isn’t merely a diagnostic tool; it provides a valuable guide for algorithm design, allowing researchers to tailor approaches to the specific challenges posed by a given offline dataset and prioritize development of techniques robust to data scarcity or distributional shift.

Researchers are increasingly exploring the theoretical underpinnings of offline reinforcement learning to better understand its limitations and guide algorithmic improvements. Specifically, investigations into Low Bellman Rank Markov Decision Processes (MDPs) suggest that restricting the complexity of the optimal policy-by limiting the number of actions significantly impacted by each state-can lead to tighter lower bounds on achievable performance. Complementing this, the technique of Double Policy Samples aims to mitigate overestimation bias-a common challenge in offline RL-by evaluating policies using a different, independently-trained policy for action selection. These concepts, while still under development, offer promising pathways for constructing more accurate performance guarantees and designing algorithms that can effectively learn from static datasets, ultimately pushing the boundaries of what’s possible with offline reinforcement learning.

Further refinement of the E2D.OR framework necessitates exploration beyond current limitations, specifically addressing more intricate and realistic environments. Researchers are poised to integrate regularization techniques, mirroring those employed in Regularized Markov Decision Processes, to improve the algorithm’s capacity for generalization and stability. These techniques, which introduce penalties for overly complex policies or value functions, could mitigate overfitting and enhance performance in scenarios with sparse rewards or high-dimensional state spaces. By leveraging the strengths of regularization, future iterations of E2D.OR aim to provide more robust and reliable lower bounds on optimal policy performance, ultimately enabling the development of more effective offline reinforcement learning algorithms capable of tackling challenging real-world problems.

The pursuit of sample efficiency in offline reinforcement learning, as detailed in the study, reveals a fundamental truth about complex systems: a focus solely on realizability and completeness-while necessary-is demonstrably insufficient. The paper rightly emphasizes that decision complexity and coverage-adaptive performance become critical under partial coverage. This echoes Alan Turing’s observation that, “Sometimes people who are unskillful, leaders or in positions of power, can affect a great change.” The study demonstrates that simply possessing a ‘correct’ model-realizability-or a complete understanding of the environment-completeness-doesn’t guarantee success if the system cannot navigate the inherent complexities of real-world decision-making. The true cost lies not in the absence of knowledge, but in the inability to effectively apply it, a principle elegantly captured by the need to account for decision complexity.

Beyond Realizability

The pursuit of offline reinforcement learning often fixates on the elegance of realizability – the notion that a perfect policy exists within the data. This work demonstrates, however, that such guarantees are, at best, incomplete. Q⋆-realizability and Bellman completeness offer necessary, but demonstrably insufficient, conditions for practical success when coverage of the state-action space is limited. The field has long sought a simple metric for evaluating data quality; instead, it appears the structure of the decision itself-its inherent complexity-dictates performance, and documentation of coverage alone cannot capture this emergent behavior.

Future research must move beyond seeking guarantees and focus on quantifying the interplay between data distribution, decision complexity, and the robustness of learned policies. Coverage-adaptive algorithms represent a promising, though nascent, direction, but these must be rigorously analyzed not merely for convergence, but for their ability to gracefully degrade when faced with genuine out-of-distribution actions. The emphasis should shift from proving what can be learned, to understanding the limits of what can be reliably generalized.

Ultimately, the challenge lies in recognizing that offline RL is not simply about extracting a policy from data, but about constructing a system capable of operating competently within an incomplete model of the world. A truly elegant solution will likely prioritize simplicity-not in the algorithm itself, but in the representation of the decision problem-and embrace the inherent uncertainty that defines real-world interactions.

Original article: https://arxiv.org/pdf/2602.12107.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- How to Build Muscle in Half Sword

- One Piece Chapter 1174 Preview: Luffy And Loki Vs Imu

- Epic Pokemon Creations in Spore That Will Blow Your Mind!

- Top 8 UFC 5 Perks Every Fighter Should Use

- All Pistols in Battlefield 6

- How to Play REANIMAL Co-Op With Friend’s Pass (Local & Online Crossplay)

- Gears of War: E-Day Returning Weapon Wish List

- Bitcoin Frenzy: The Presales That Will Make You Richer Than Your Ex’s New Partner! 💸

- How To Get Axe, Chop Grass & Dry Grass Chunk In Grounded 2

- Bitcoin’s Big Oopsie: Is It Time to Panic Sell? 🚨💸

2026-02-14 06:58