Author: Denis Avetisyan

New research quantifies the limitations of common approximations used to understand the behavior of matter created in high-energy heavy-ion collisions.

This review derives and assesses corrections to the smoothness and on-shell approximations within femtoscopic studies of relativistic heavy-ion collisions, improving the precision of source function extraction.

Standard analyses of femtoscopic correlations in relativistic heavy-ion collisions rely on two approximations, smoothness and on-shell, that simplify the particle emission function, yet their validity remains largely unquantified. This work, ‘Corrections to the Smoothness and On-Shell Approximations in Femtoscopy and Coalescence’, presents a model-independent derivation of corrections to these approximations, enabling a rigorous assessment of their impact on extracted source parameters. The authors demonstrate that these corrections can be computed at a numerical cost comparable to standard methods and are typically at or below the percent level at LHC energies, with first-order contributions vanishing for angle-averaged correlations. How might incorporating these higher-order corrections refine our understanding of the quark-gluon plasma’s dynamics and the origin of hadronic correlations?

The Echo of Creation: Probing Extreme Matter

The collision of heavy ions at near light speed generates temperatures far exceeding those found in the core of the sun, briefly creating the Quark-Gluon Plasma (QGP), a state of matter where quarks and gluons are no longer confined within hadrons. This extreme environment exists only for a fleeting moment, on the order of 10^{-23} seconds, and demands entirely new approaches to experimental investigation. Because no detector can resolve such a small and short-lived system directly, scientists probe the QGP indirectly through sophisticated calorimetry, high-resolution tracking of many particles, and the study of collective flow phenomena, aiming to unravel the fundamental secrets of strong interaction physics.

Determining the precise characteristics of the Quark-Gluon Plasma – specifically its size and lifespan – represents a pivotal test of quantum chromodynamics (QCD), the prevailing theory describing the strong nuclear force. Theoretical predictions from QCD regarding the behavior of matter under extreme conditions become experimentally accessible through measurements of this plasma. A successful correlation between predicted properties, such as the expected collective flow and thermalization timescales, and observed plasma characteristics would not only validate the existing QCD framework but also illuminate the fundamental nature of strong interactions. Discrepancies, however, could signal the need for refinements to QCD or the discovery of novel physics beyond the standard model, making these measurements exceptionally valuable to the field of particle physics.

The investigation of relativistic heavy-ion collisions presents a formidable challenge to conventional measurement techniques. The QGP created in these collisions exists for a mere 10^{-23} seconds within a region only a few femtometers across, comparable in size to an atomic nucleus. Particle detectors, designed to track the trajectories of long-lived particles, cannot resolve such scales directly; the space-time structure of the source must instead be inferred from the momenta of the particles that reach the detector. Consequently, researchers continue to develop advanced calorimetry, high-granularity tracking systems, and sophisticated data analysis algorithms to overcome these limitations and extract meaningful insights into the QGP’s properties.

Reconstructing the Source: A Femtoscopic View

Femtoscopic measurements reconstruct the geometry of particle-emitting sources by analyzing correlations between pairs of identical particles, most commonly identical bosons such as pions. Because identical bosons are indistinguishable, their two-particle wavefunction must be symmetrized, which enhances the pair yield at small relative momentum. The shape of this enhancement is directly related to the size and shape of the emitting region: smaller sources produce wider correlation peaks in relative momentum, while larger sources and longer emission durations narrow them. By measuring these correlations as a function of relative momentum, pair momentum, and particle species, researchers can infer the spatial dimensions and emission duration of the source created in high-energy collisions and constrain its collective flow, providing insights into the dynamics of the strong force.

The two-particle correlation function in femtoscopy is the ratio of the two-particle momentum distribution to the product of the single-particle distributions, expressed as a function of the pair’s relative momentum. Experimentally, it is typically constructed as the ratio of pairs taken from the same event to pairs built from different (mixed) events. For identical bosons emitted from the same source region, it shows an enhancement at small relative momentum. The width of this correlation peak is inversely related to the size and lifetime of the emitting source; larger sources and longer lifetimes result in narrower peaks. A common Gaussian parametrization relates the correlation function to the HBT radii (named after Hanbury Brown and Twiss), which characterize the extent of the emitting region along three spatial directions and absorb the emission duration as well. Analyzing the shape and parameters of the correlation function, therefore, provides information about the geometry, homogeneity, and dynamics of the particle-producing region.
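
The inverse relation between peak width and source size can be illustrated with a one-dimensional Gaussian form, C(q) = 1 + λ exp(-(qR/ħc)^2). This is a minimal sketch: the function names, the single radius R, and the parameter λ are illustrative assumptions, not the parametrization used in the paper.

```python
import math

HBARC_GEV_FM = 0.1973269804  # hbar*c in GeV*fm; makes q*R dimensionless


def gaussian_correlation(q_gev, radius_fm, lam=1.0):
    """Toy 1D Gaussian form C(q) = 1 + lam * exp(-(q R / hbar c)^2)."""
    x = q_gev * radius_fm / HBARC_GEV_FM
    return 1.0 + lam * math.exp(-x * x)


def half_width(radius_fm):
    """Relative momentum (GeV) where C(q) - 1 falls to half its q = 0 value."""
    return HBARC_GEV_FM * math.sqrt(math.log(2.0)) / radius_fm


if __name__ == "__main__":
    # Doubling the (hypothetical) radius halves the width of the peak.
    for radius in (2.0, 4.0, 8.0):
        width_mev = 1000.0 * half_width(radius)
        print(f"R = {radius:.0f} fm -> half-width = {width_mev:.1f} MeV")
```

The half-width scales as 1/R, which is why a measured peak width can be translated into a length scale of the source.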

Femtoscopic calculations are significantly simplified through the application of two key approximations. Both concern the average pair momentum K = (p_1 + p_2)/2 at which the emission function is evaluated. The on-shell approximation replaces the energy component K^0 = (E_1 + E_2)/2, which in general does not satisfy the mass-shell condition, with the on-shell energy of a particle carrying the average three-momentum. The smoothness approximation assumes that the emission function varies slowly with momentum over the range of relative momenta where the correlation is appreciable, so that its value at each particle’s momentum can be replaced by its value at K. Both approximations reduce computational demands and enable extraction of source parameters, though their validity depends on the specific energy regime and particle species under investigation.

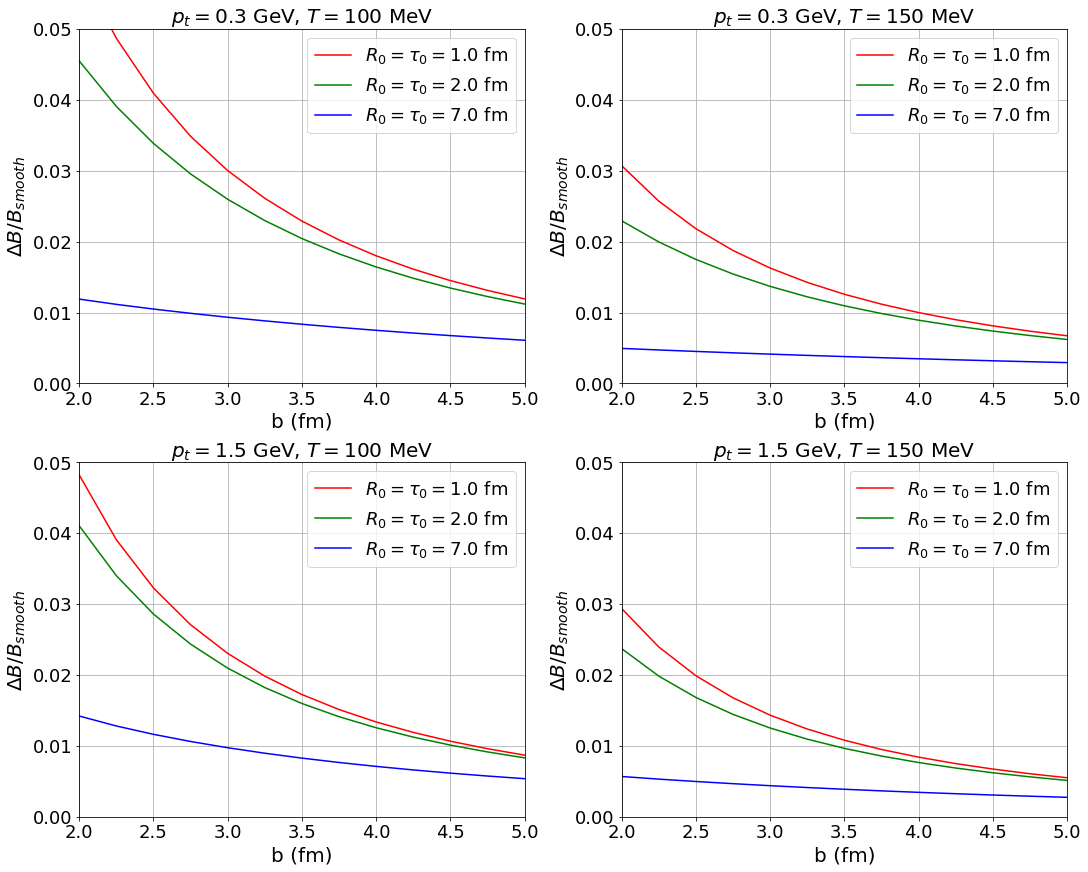

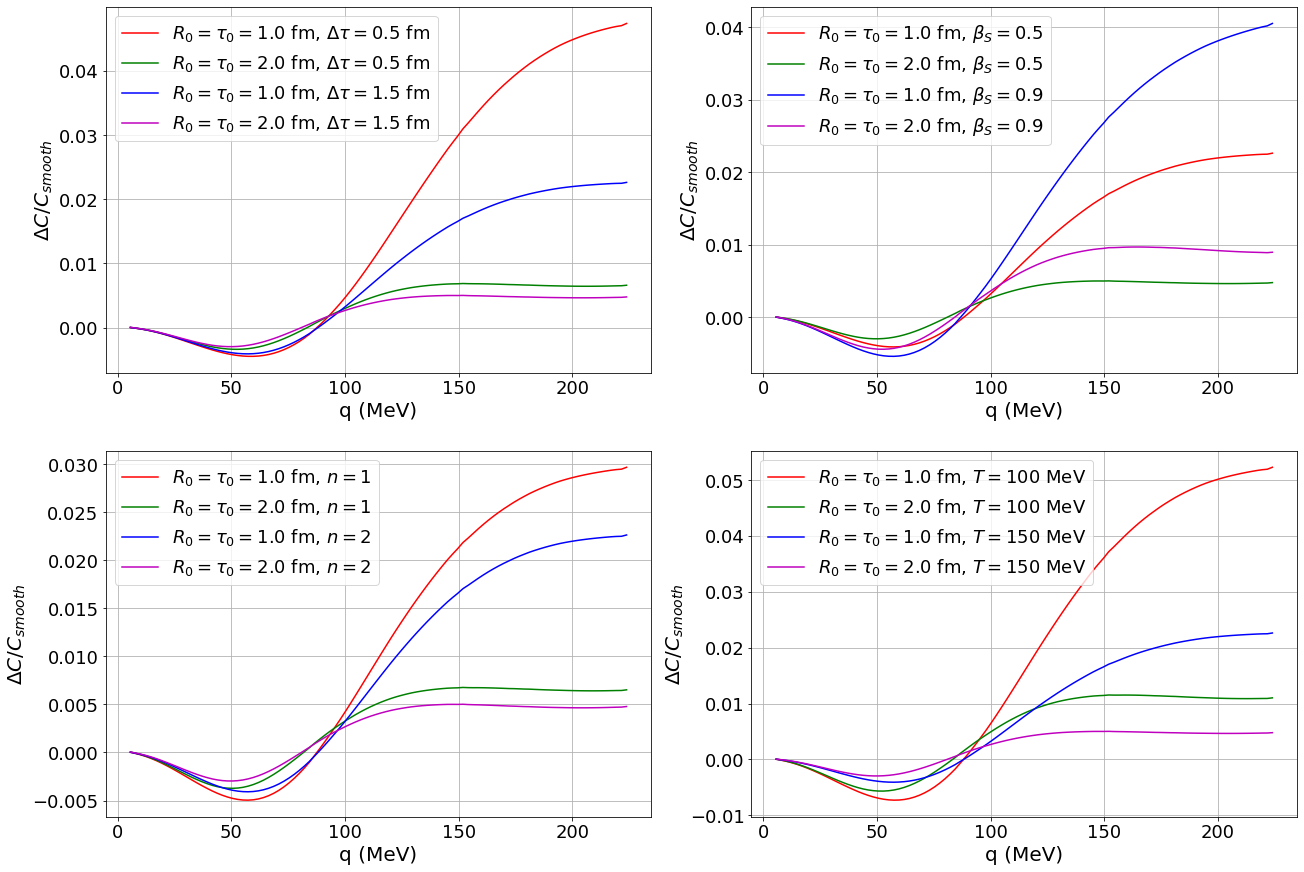

![The difference between the full and smoothed correlation functions <span class="katex-eq" data-katex-display="false">C(q)</span> and <span class="katex-eq" data-katex-display="false">C_{smooth}(q)</span>, shown on the right, depends on source size and transverse momentum, with curves in the central row fitted to data from reference [4] using fixed parameters of <span class="katex-eq" data-katex-display="false">\Delta\tau=1.5</span> fm, <span class="katex-eq" data-katex-display="false">\beta_S=0.5</span>, <span class="katex-eq" data-katex-display="false">n=2</span>, and <span class="katex-eq" data-katex-display="false">T=150</span> MeV.](https://arxiv.org/html/2602.02810v1/corr-size.png)

The Foundation of Approximations: Necessary Simplifications

The on-shell approximation is a computational simplification used when evaluating the emission function. A freely propagating particle of mass m is on-shell, meaning its energy and momentum satisfy E^2 = p^2c^2 + m^2c^4, where E is energy, p is momentum, and c is the speed of light. The momenta of the two detected particles satisfy this relation, but their average K = (p_1 + p_2)/2 generally does not: its energy component K^0 = (E_1 + E_2)/2 is slightly larger than the on-shell energy E_K associated with the average three-momentum. The on-shell approximation sets K^0 equal to E_K, so that the emission function only needs to be evaluated at on-shell momenta. The resulting error is small when the relative momentum of the pair is small compared to the particle energies, which is precisely the region where femtoscopic correlations are significant.
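
In the femtoscopic setting, the on-shell approximation is usually stated as K^0 ≈ E_K for the average pair momentum. The sketch below, in natural units (c = 1), estimates how far off-shell the average momentum is for a single pion pair. The function names and the example momenta are hypothetical and chosen only for illustration.

```python
import math

M_PION = 0.13957  # charged pion mass in GeV (natural units, c = 1)


def energy(p, mass):
    """On-shell energy sqrt(m^2 + |p|^2) for a 3-momentum p in GeV."""
    return math.sqrt(mass ** 2 + sum(c * c for c in p))


def pair_offshellness(p1, p2, mass=M_PION):
    """Return (K0, E_K, relative difference) for two 3-momenta in GeV.

    K0  = (E1 + E2) / 2 is the energy component of the average 4-momentum.
    E_K = on-shell energy evaluated at the average 3-momentum.
    """
    k0 = 0.5 * (energy(p1, mass) + energy(p2, mass))
    k_vec = [0.5 * (a + b) for a, b in zip(p1, p2)]
    e_k = energy(k_vec, mass)
    return k0, e_k, (k0 - e_k) / e_k


if __name__ == "__main__":
    # Hypothetical pair: 300 MeV average momentum, 40 MeV relative momentum.
    k0, e_k, rel = pair_offshellness((0.32, 0.0, 0.0), (0.28, 0.0, 0.0))
    print(f"K0 = {k0:.6f} GeV, E_K = {e_k:.6f} GeV, relative shift = {rel:.2e}")
```

Because the energy is a convex function of the momentum, K^0 is never smaller than E_K, and the two coincide when the particles have identical momenta.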

For pairs of equal-mass particles, the mass-shell constraints further reduce the number of independent variables. When both particles have the same mass m, the four-vectors K and q = p_1 - p_2 are orthogonal, K·q = 0, which fixes the energy component of the relative momentum in terms of its spatial components: q^0 equals the scalar product of the three-vectors q and K divided by K^0. The correlation function therefore depends on only three components of q rather than four, which lowers the dimensionality of the problem and the number of numerical evaluations required. The savings matter most when the correlation function must be evaluated on a fine grid in relative and average momentum.
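
The equal-mass simplification follows from a short piece of four-vector algebra, sketched here in natural units under the usual conventions K = (p_1 + p_2)/2 and q = p_1 - p_2 (these definitions are assumed, since the text above does not spell them out):

$$
p_1 = K + \tfrac{q}{2}, \qquad p_2 = K - \tfrac{q}{2}
\;\;\Longrightarrow\;\;
p_1^2 - p_2^2 = 2\,K \cdot q = m_1^2 - m_2^2 .
$$

For equal masses the right-hand side vanishes, so

$$
K \cdot q = K^0 q^0 - \mathbf{K} \cdot \mathbf{q} = 0
\;\;\Longrightarrow\;\;
q^0 = \frac{\mathbf{q} \cdot \mathbf{K}}{K^0} .
$$

The energy component of the relative momentum is thus not an independent variable.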

The smoothness approximation posits that the emission function varies gradually with momentum, so that evaluating it at the average pair momentum K rather than at the individual momenta p_1 and p_2 changes little. This assumption simplifies the integrals and reduces computational demands; however, it introduces inaccuracies when the emission function changes appreciably over the momentum range spanned by the pair, as can happen for small sources, whose correlations extend to large relative momentum, or for steeply falling momentum spectra. The error grows with the rate of change of the emission function in momentum: faster variations lead to larger discrepancies between the approximate and the full correlation function. Consequently, the validity of results obtained using the smoothness approximation is contingent upon the emission function’s characteristics, and the potential error should be estimated whenever the source is small or the spectrum is steep.
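
A toy calculation gives a feel for the size of the smoothness correction. The sketch assumes a one-dimensional source that factorizes into a Gaussian spatial profile and a non-relativistic Boltzmann-like spectrum P(p) ∝ exp(-p^2/(2mT)). In that case the full correlator differs from the smoothed one only by the ratio P(K)^2 / (P(p_1) P(p_2)). The factorized source, the spectrum, and all function names are illustrative assumptions and do not reproduce the blast-wave calculation of the paper.

```python
import math

HBARC = 0.1973269804  # hbar*c in GeV*fm
M_PION = 0.13957      # charged pion mass in GeV
T = 0.150             # toy temperature in GeV (assumed value)


def spectrum(p):
    """Toy single-particle spectrum P(p) ~ exp(-p^2 / (2 m T))."""
    return math.exp(-p * p / (2.0 * M_PION * T))


def numerator(q, radius_fm):
    """Squared Fourier transform of a Gaussian profile, equal to 1 at q = 0."""
    return math.exp(-((q * radius_fm / HBARC) ** 2))


def c_smooth(q, radius_fm):
    """Smoothness approximation: denominator P(p1) P(p2) replaced by P(K)^2."""
    return 1.0 + numerator(q, radius_fm)


def c_full(q, k, radius_fm):
    """Toy 'full' correlator keeping P(p1) P(p2) in the denominator."""
    ratio = spectrum(k) ** 2 / (spectrum(k + 0.5 * q) * spectrum(k - 0.5 * q))
    return 1.0 + numerator(q, radius_fm) * ratio


if __name__ == "__main__":
    k = 0.3  # average pair momentum in GeV (hypothetical)
    for radius in (2.0, 5.0):
        for q in (0.02, 0.05, 0.10):
            diff = c_full(q, k, radius) - c_smooth(q, radius)
            print(f"R = {radius} fm, q = {1000 * q:.0f} MeV: C - C_smooth = {diff:.2e}")
```

In this toy model the ratio reduces to exp(q^2/(4mT)), so the correction is independent of K and is largest for small sources, where the correlation survives out to relative momenta at which the spectrum has changed noticeably.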

The Evolving Picture: Corrections and Hydrodynamic Flow

Precise determination of the Quark-Gluon Plasma’s characteristics hinges on accurate measurements of the collision’s source size and lifetime. Initial models relied on approximations – notably, assumptions of smoothness and on-shell behavior – to simplify complex calculations. However, recent refinements address limitations within these approximations, incorporating corrections that demonstrably improve the fidelity of the resulting measurements. These corrections, while typically small – generally less than 0.5% – are not negligible; they represent a crucial step toward reducing systematic uncertainties and achieving a more nuanced understanding of the ultra-relativistic heavy-ion collisions that create this exotic state of matter. By accounting for deviations from ideal conditions, researchers can more confidently interpret femtoscopic data and extract meaningful insights into the fundamental properties of the Quark-Gluon Plasma itself.

The intensely hot and dense matter created in heavy-ion collisions undergoes a rapid, collective expansion – a phenomenon described by the Blast Wave Model. This model posits that the collision generates a “fireball” which expands hydrodynamically, much like an explosion, governed by equations of fluid dynamics. Importantly, the Blast Wave Model doesn’t just describe the expansion rate, but also how this expansion shapes the source function – a mathematical representation of the emitting region’s geometry and velocity. By accurately modeling this hydrodynamic flow, physicists can better understand the collective behavior of the Quark-Gluon Plasma, and ultimately, extract crucial information about its temperature, viscosity, and equation of state from femtoscopic measurements – the analysis of identical particle correlations.

The interpretation of femtoscopic measurements, which probe the spatial and temporal characteristics of particle emission, hinges on a precise understanding of the source function. This function mathematically describes the geometry and evolution of the particle-emitting region created in heavy-ion collisions, often modeled as a rapidly expanding fireball. By carefully reconstructing the source function, physicists can effectively ‘reverse engineer’ the conditions prevailing during the collision, gaining crucial insights into the properties of the Quark-Gluon Plasma (QGP). Variations in the source function directly impact the observed correlations between identical particles, allowing researchers to determine the QGP’s size, lifetime, and even its flow characteristics, essentially mapping out the landscape of this exotic state of matter created in the laboratory.

Unveiling the Quark-Gluon Plasma: A Future Perspective

Femtoscopy, a technique leveraging the quantum statistical correlations of identical particles, continues to be indispensable in the investigation of the Quark-Gluon Plasma (QGP). By meticulously analyzing the extremely narrow correlations – on the scale of femtometers – between pairs of particles emitted from the QGP, physicists can reconstruct the geometry and dynamics of this fleeting, ultra-hot state of matter. Continued advancements in the underlying approximations and theoretical models used in femtoscopic analysis are crucial, as they directly influence the precision with which the QGP’s properties – such as its size, lifetime, and temperature – can be determined. The technique effectively functions as a microscopic probe, offering unique insights into the strong force interactions governing matter under extreme conditions, complementing data from other experimental observables and providing a powerful means to constrain theoretical predictions about the QGP’s behavior.

Determining the spatial extent and temporal duration of the Quark-Gluon Plasma (QGP) provides a unique window into its fundamental properties. By meticulously measuring these characteristics, physicists can effectively constrain the equation of state, which describes the relationship between pressure, temperature, and energy density within the QGP. Furthermore, understanding the QGP’s lifetime is critical for extracting its transport coefficients – parameters that quantify how efficiently momentum and energy are dissipated within this extremely hot and dense medium. These coefficients, such as shear and bulk viscosity, reveal how the QGP evolves and ultimately thermalizes, transitioning from an initial highly energetic state to a more equilibrated one. Precise measurements of source size and lifetime, therefore, are not merely descriptive, but directly inform theoretical models striving to understand the behavior of matter under the most extreme conditions achievable in the laboratory, offering insights into the strong force that governs the interactions of quarks and gluons.

Recent analyses demonstrate an exceptional level of precision in characterizing the Quark-Gluon Plasma through femtoscopic measurements. Investigations into the validity of key approximations – namely, the assumptions of smoothness and on-shell behavior within the plasma – reveal corrections consistently below 0.5%. This finding is particularly significant as it places the systematic uncertainties stemming from these approximations on a comparable scale to the inherent statistical uncertainties of the experimental data itself. Such a close alignment signifies that the observed features of the Quark-Gluon Plasma are not substantially altered by the analytical methods employed, bolstering confidence in the derived properties like size, lifetime, and ultimately, the equation of state governing this extreme form of matter.

The continued evolution of femtoscopic methodologies promises to unlock increasingly detailed understandings of the strong force, the fundamental interaction binding quarks and gluons. Refinements in both experimental data acquisition and computational analysis are expected to yield more precise measurements of the Quark-Gluon Plasma’s properties, including its temperature, density, and viscosity. These advancements aren’t merely about improving existing precision; they pave the way for probing previously inaccessible aspects of the plasma’s dynamic behavior, such as the collective flow and the detailed mechanisms governing particle production. Consequently, future studies using these techniques may reveal subtle but crucial deviations from current theoretical models, potentially leading to a paradigm shift in the comprehension of strong interactions and the very fabric of matter at extreme energies.

The pursuit of approximation, as detailed in this study of femtoscopic corrections, echoes a fundamental truth about all systems. The authors meticulously quantify deviations from idealized conditions (the smoothness and on-shell assumptions), revealing the inherent limitations of modeling complex phenomena. This work doesn’t seek perfect representation, but rather a pragmatic understanding of error. It acknowledges that, as John Dewey observed, “There is no such thing as complete certainty, only degrees of probability.” The analysis of these corrections isn’t about eliminating approximation, but about knowing where and how the system will predictably fail, allowing for a more robust interpretation of relativistic heavy-ion collisions. Order, in this context, is merely a carefully constructed cache against inevitable outage.

The Horizon of Correlation

The presented corrections to the smoothness and on-shell approximations are not, ultimately, about achieving a more ‘accurate’ number. The pursuit of numerical precision in heavy-ion collisions feels increasingly like polishing the brass on a sinking ship. Rather, these calculations delineate the boundaries of validity – the territory where a simplified model ceases to be a useful fiction. The field frequently treats approximations as conveniences, neglecting that each simplification is a prophecy of future failure, a pre-written obsolescence. The true value lies in knowing where the model breaks down, not in extending its reach.

Future work will inevitably focus on incorporating these corrections into existing analysis frameworks. However, a more fruitful path might be to acknowledge the inherent limitations of any attempt to reconstruct a spacetime source from a handful of observed particles. Stability, after all, is merely an illusion that caches well. The correlations observed in femtoscopy are not echoes of a pristine, well-defined source, but signatures of a chaotic, evolving system.

Chaos isn’t failure – it’s nature’s syntax. The challenge, then, isn’t to eliminate the noise, but to learn to read the signal within it. A guarantee of accuracy is just a contract with probability; a more honest approach is to embrace the uncertainty and map the landscape of possible sources, not to pretend a single solution exists.

Original article: https://arxiv.org/pdf/2602.02810.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-04 18:09