Fragmented Data: Coding for Resilience in DNA Storage

As DNA emerges as a promising medium for long-term data archiving, new coding schemes are vital to overcome the challenges of fragment reassembly and data corruption.

Researchers are exploring the power of differential geometry and algebraic topology to build cryptographic systems resilient to attacks from quantum computers.

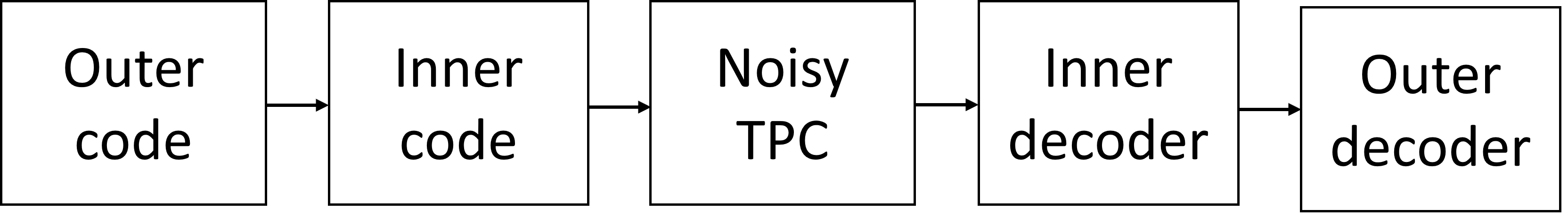

A new architectural approach integrates post-quantum cryptography to secure the software supply chain against future quantum-based attacks.

A new theory proposes that cancer isn’t driven by inherent aggression, but by a desperate struggle for stability in the face of cellular crisis.

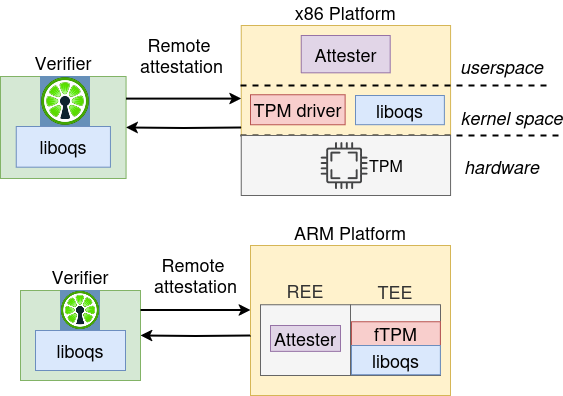

A new caching scheme dramatically improves transmission rates by intelligently organizing and delivering data to multiple users simultaneously.

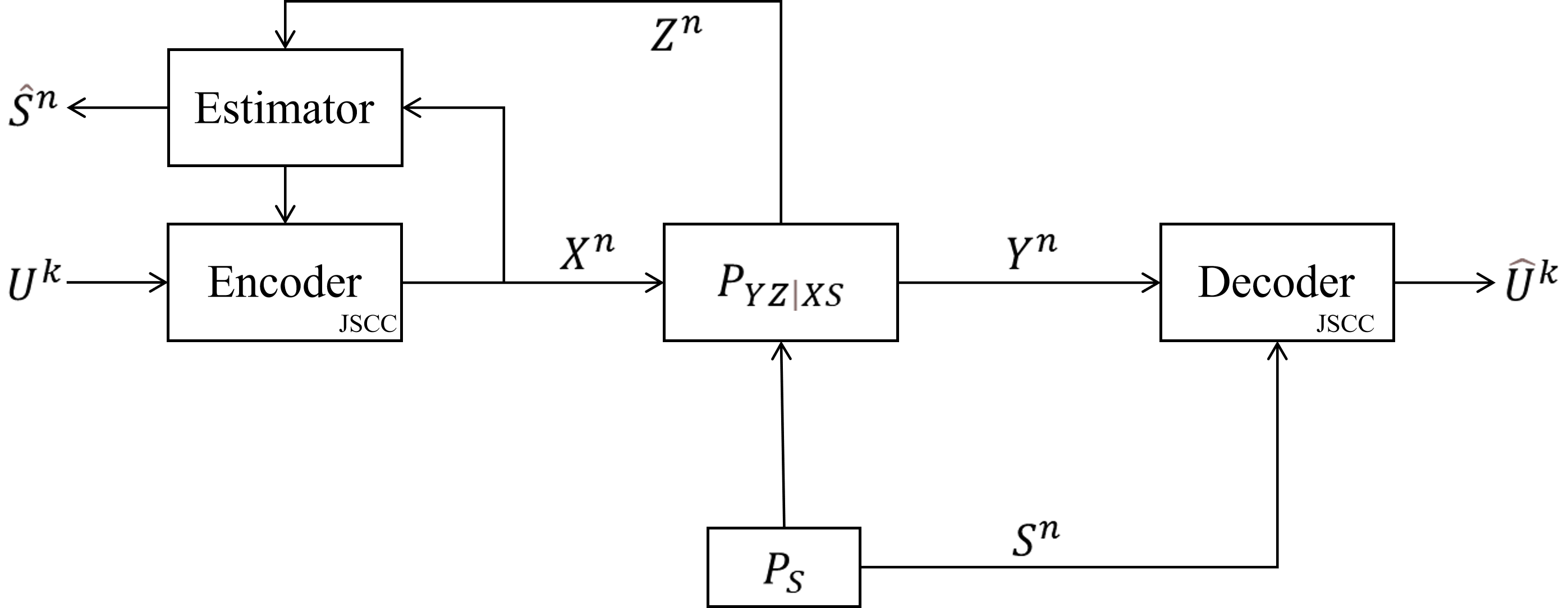

A new analysis reveals the fundamental limits of simultaneously transmitting data and sensing the environment, paving the way for more efficient wireless systems.

A new computational study reveals that the r²SCAN functional offers a significant improvement in accurately predicting charge density wave instabilities and lattice dynamics in the material CuTe.

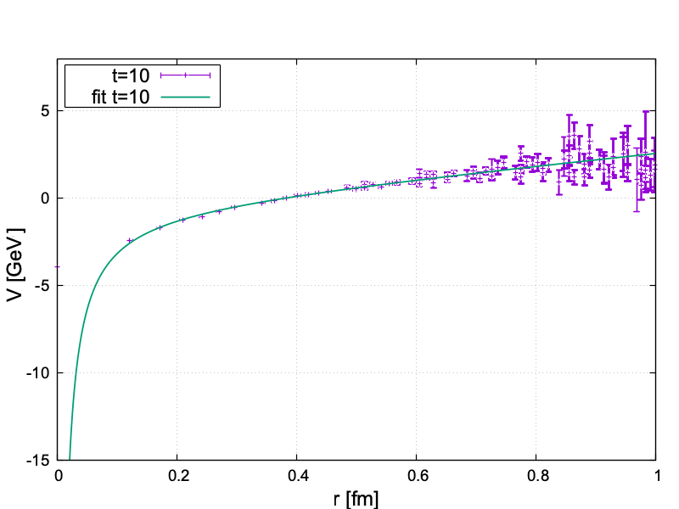

A lattice QCD study provides crucial insights into the fundamental building blocks of matter by precisely determining the mass of scalar diquarks and characterizing their interactions.

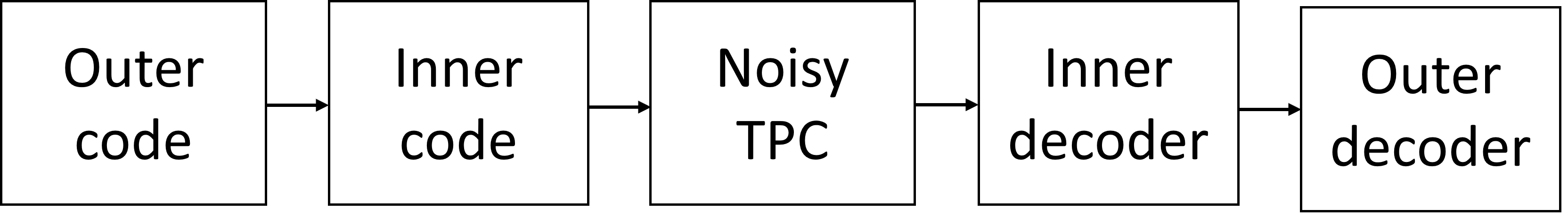

New research reveals how strategically diversifying data codebooks improves the efficiency of broadcasting information across noisy channels.

![Compressed sensing, via the exploitation of orthogonality conditions detailed in Theorem 1 and minimized in Theorem 2, achieves feasibility with fewer responses (specifically [latex]N \leq d(K-1)[/latex]) than baseline individual decoding schemes, which require at least [latex]N \geq d(K-1) + 1[/latex] responses to ensure a viable solution, thereby demonstrating a fundamental efficiency gain in data acquisition.](https://arxiv.org/html/2601.10028v1/x1.png)

New research defines the limits of efficient data aggregation, revealing how to dramatically reduce the number of responses needed for weighted polynomial computations.