Author: Denis Avetisyan

A new analysis reveals that smart contracts generated by artificial intelligence models often harbor significant security flaws, demanding greater scrutiny before deployment.

This research assesses the vulnerability landscape of smart contracts produced by large language models, highlighting the need for robust auditing and verification techniques.

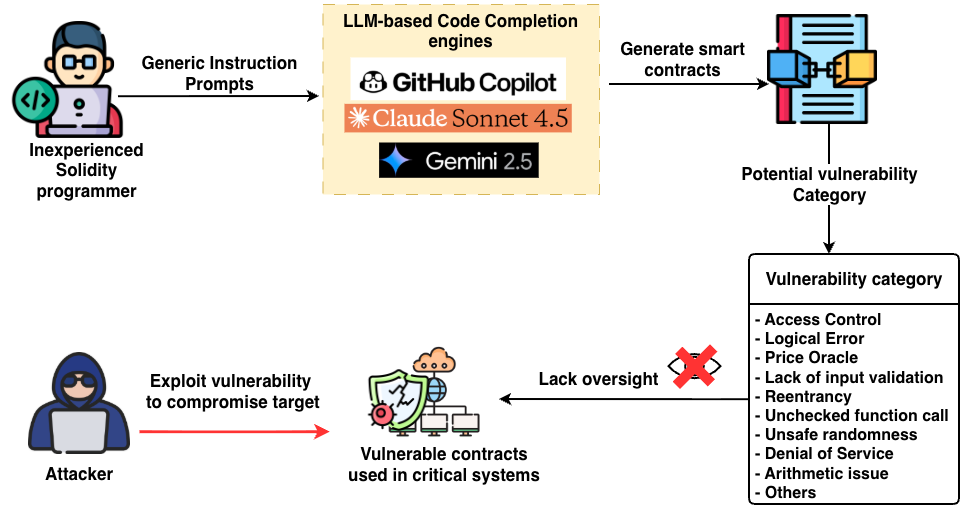

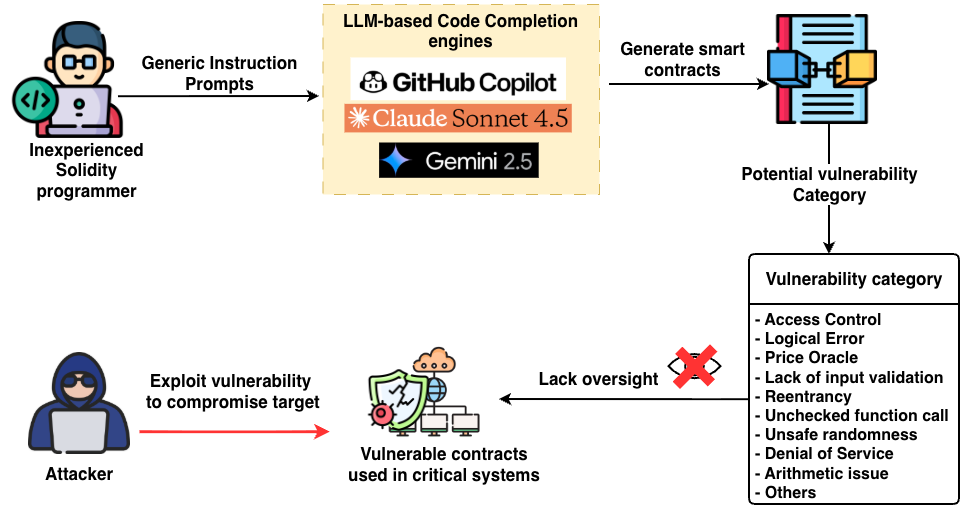

Despite advancements in automated code generation, ensuring the security of blockchain-based applications remains a critical challenge. This research, ‘Evaluating the Vulnerability Landscape of LLM-Generated Smart Contracts’, systematically analyzes the susceptibility of Solidity smart contracts created by state-of-the-art large language models to known security flaws. Our findings reveal that, despite syntactic correctness, these LLM-generated contracts frequently exhibit severe vulnerabilities exploitable in real-world deployments. Can rigorous auditing practices and development guidelines effectively mitigate these risks and foster safer integration of AI-assisted tools within the blockchain ecosystem?

The Inevitable Foundation: Smart Contracts and Decentralized Trust

Smart contracts represent a paradigm shift in how agreements are formed and executed: they are self-executing programs stored on a blockchain. They automatically enforce the terms of an agreement when predetermined conditions are met, removing the need for intermediaries and enabling trustless interactions between parties. Because the rules of the agreement are encoded directly in code, the code itself dictates the outcome, which reduces the risk of manipulation or censorship. This capability underpins decentralized applications (dApps), which use smart contracts to deliver services ranging from decentralized finance (DeFi) and supply chain management to digital identity and voting systems, all without reliance on a central authority.

Although smart contracts are conceptually platform-agnostic, in practice they depend on a blockchain to run. Solidity is currently the predominant language for writing them, and Ethereum is its primary platform. Ethereum provides the Ethereum Virtual Machine (EVM), a runtime that executes the bytecode compiled from Solidity source. Once deployed to Ethereum, a smart contract is a program residing on the EVM, triggered by transactions and executing according to its programmed logic. Other blockchains either offer EVM compatibility or supply their own virtual machines for smart contracts, but Ethereum remains the leading environment thanks to its established network effects, developer tooling, and widespread adoption, supplying both the infrastructure and the computation these contracts need to function as intended.

The property that underpins trust in smart contracts, their immutability, is also a significant weakness. Once deployed to a blockchain, a contract's code cannot be altered, so any flaw or security loophole becomes a permanent part of its operational logic. This contrasts sharply with traditional software, where bugs can be patched through updates. Malicious actors can therefore exploit even minor coding errors to drain funds, manipulate data, or disrupt the contract's intended function, and the code cannot simply be fixed in place afterwards. Rigorous auditing, formal verification, and extensive testing are thus essential during development: a compromised smart contract remains vulnerable indefinitely, potentially causing substantial and irreversible financial losses for everyone who interacts with it. The permanence of code demands an unprecedented level of security diligence.

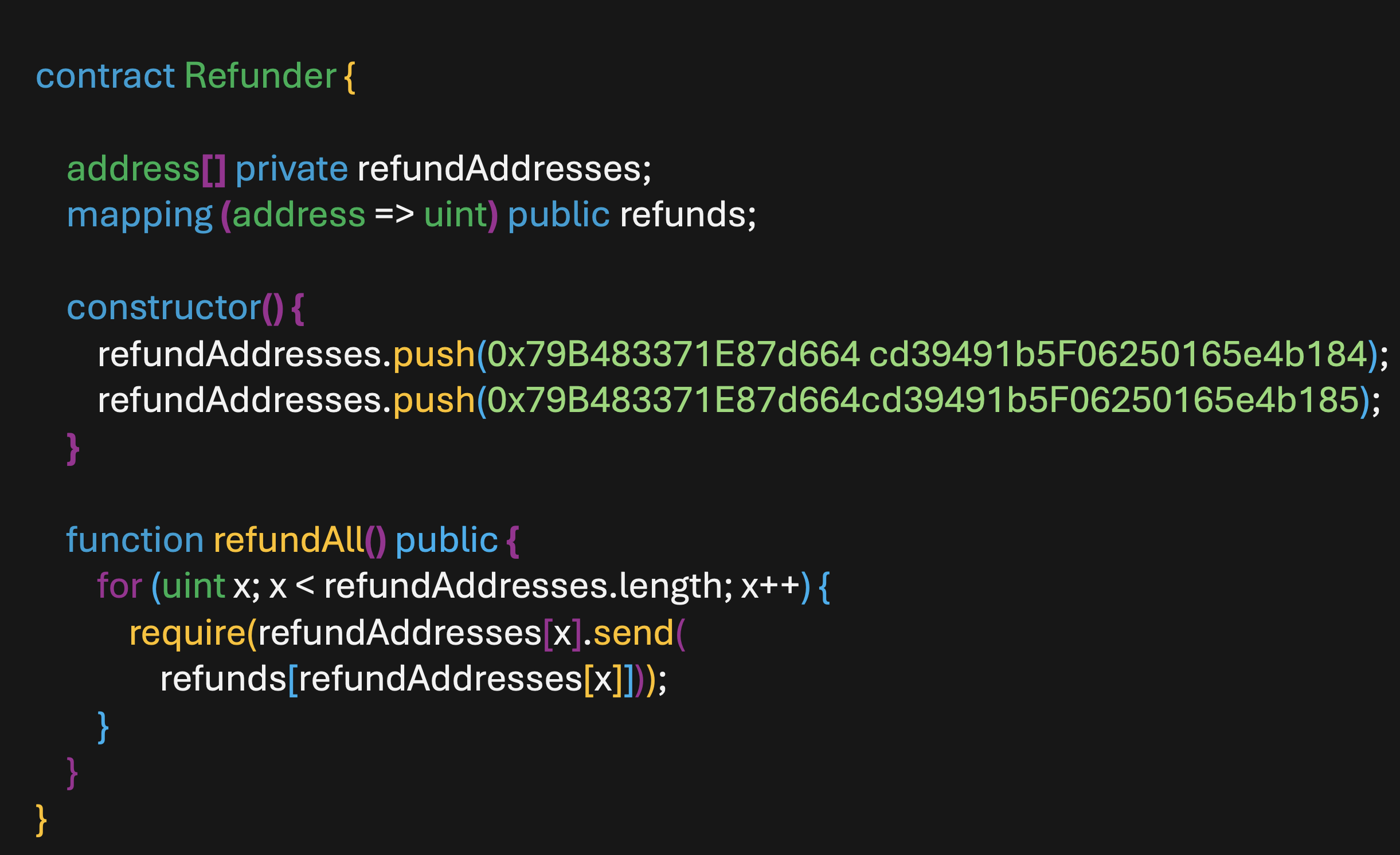

The Inherent Fragility: Identifying Vulnerabilities in Smart Contracts

Smart contract vulnerabilities arise from several common coding errors. Reentrancy attacks occur when a contract calls an external contract before updating its own state, and the external contract calls back into the original one while that first execution is still in progress, for example to withdraw the same balance repeatedly until the contract's funds are drained. Overflow and underflow errors occur when an arithmetic operation exceeds the maximum or minimum value a data type can hold and the result silently wraps around, leading to unexpected and exploitable behavior; since Solidity 0.8.0, arithmetic reverts on overflow by default, but contracts compiled with older versions or using `unchecked` blocks can still wrap. Access control violations result from improperly restricted functions, allowing unauthorized users to execute privileged operations. These vulnerabilities are particularly dangerous because blockchain code is immutable, which makes remediation after deployment difficult.
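To make the reentrancy pattern concrete, here is a minimal Python simulation rather than Solidity; the class and method names (`VulnerableBank`, `SafeBank`, `Attacker.receive`) are hypothetical stand-ins for a contract, its withdrawal function, and an attacker's fallback function. The vulnerable version pays out before zeroing the caller's recorded balance, so the attacker's callback can withdraw again; the fixed version applies the usual checks-effects-interactions ordering by updating state before the external call.

```python
class VulnerableBank:
    """Toy model of a contract that pays out before updating its records."""

    def __init__(self):
        self.balances = {}  # depositor -> recorded balance
        self.funds = 0      # value actually held by the contract

    def deposit(self, who, amount):
        self.balances[who] = self.balances.get(who, 0) + amount
        self.funds += amount

    def withdraw(self, who):
        amount = self.balances.get(who, 0)
        if amount == 0 or self.funds < amount:
            return  # nothing to withdraw, or the contract is empty
        self.funds -= amount
        who.receive(self, amount)  # external call hands control to `who`...
        self.balances[who] = 0     # ...so this state update comes too late


class SafeBank(VulnerableBank):
    """Same contract with checks-effects-interactions ordering."""

    def withdraw(self, who):
        amount = self.balances.get(who, 0)
        if amount == 0 or self.funds < amount:
            return
        self.balances[who] = 0     # effect first
        self.funds -= amount
        who.receive(self, amount)  # interaction last


class Attacker:
    """Re-enters withdraw() from its payment callback while funds remain."""

    def __init__(self):
        self.stolen = 0

    def receive(self, bank, amount):
        self.stolen += amount
        if bank.funds >= amount:
            bank.withdraw(self)


def run(bank_cls):
    """Two honest deposits of 10, one attacker deposit of 1, then the attack."""
    bank = bank_cls()
    bank.deposit("alice", 10)
    bank.deposit("bob", 10)
    attacker = Attacker()
    bank.deposit(attacker, 1)
    bank.withdraw(attacker)
    return attacker.stolen, bank.funds


print(run(VulnerableBank))  # (21, 0)
print(run(SafeBank))        # (1, 20)
```

With the vulnerable ordering, a single 1-unit deposit drains all 21 units; with state updated first, the re-entrant call sees a zero balance and the attacker recovers only their own deposit.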

Exploitation of smart contract vulnerabilities results in direct financial losses through the theft of cryptocurrency or tokens held within the contract. Beyond immediate monetary impact, successful attacks erode user trust in the platform and the broader decentralized finance (DeFi) ecosystem. This reputational damage can lead to decreased platform usage, reduced investment, and a decline in the value of associated tokens. Furthermore, the public nature of blockchain transactions means that exploited vulnerabilities and associated losses are often widely publicized, amplifying the negative consequences for all stakeholders. The cost of remediation, including forensic analysis and potential compensation to affected users, also contributes to the overall financial burden.

Because deployed smart contracts are immutable, any vulnerability that reaches production stays exploitable. The study's data suggest the problem is acute for contracts generated using large language models (LLMs): up to 75% of them contain at least one exploitable weakness. Such a high incidence underscores the need for rigorous auditing and formal verification before deployment, since deployed code cannot be patched in place; fixing it instead requires complex and potentially disruptive workarounds, such as deploying a new contract and migrating funds.

Mitigating Decay: Automated Vulnerability Detection Techniques

Static analysis tools such as Slither examine source code for patterns that indicate vulnerabilities, without executing it. They use techniques such as control-flow analysis, data-flow analysis, and pattern matching to identify issues like reentrancy, integer overflows and underflows, timestamp dependence, and unprotected function calls. The tool first builds an abstract representation of the code and then applies predefined rules or heuristics to detect potentially exploitable conditions. Unlike dynamic analysis, which requires a running contract and test cases, static analysis can run early in the development lifecycle, so developers can address vulnerabilities before deployment. Static analysis is not exhaustive and is prone to false positives, but it catches many common flaws cheaply and complements other security measures.
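The pattern-matching idea can be illustrated with a deliberately crude Python sketch. It is not how Slither works (Slither's detectors run on its own intermediate representation with real data-flow analysis); the regular expressions and the `find_call_before_write` function are hypothetical and only flag, line by line, a low-level `.call` that is followed by a write to an indexed state variable later in the same function, which is the textual signature of the reentrancy bug described earlier.

```python
import re

# A low-level external call such as `msg.sender.call{value: amount}("")`.
CALL = re.compile(r"\.call\s*(\{[^}]*\})?\s*\(")
# A write (=, +=, -=) to an indexed state variable such as `balances[msg.sender] = 0`.
STATE_WRITE = re.compile(r"\b\w+\s*\[[^\]]*\]\s*[-+]?=(?!=)")


def find_call_before_write(source: str) -> list[int]:
    """Return 1-based line numbers of external calls that precede a
    state write within the same function (a crude reentrancy heuristic)."""
    findings = []
    pending_call = None
    for lineno, line in enumerate(source.splitlines(), start=1):
        if line.strip().startswith("function"):
            pending_call = None  # only compare statements inside one function
        if CALL.search(line):
            pending_call = lineno
        elif pending_call is not None and STATE_WRITE.search(line):
            findings.append(pending_call)
            pending_call = None
    return findings


VULNERABLE = """contract Bank {
    mapping(address => uint) balances;
    function withdraw() external {
        uint amount = balances[msg.sender];
        (bool ok, ) = msg.sender.call{value: amount}("");
        require(ok);
        balances[msg.sender] = 0;
    }
}"""

SAFE = """contract Bank {
    mapping(address => uint) balances;
    function withdraw() external {
        uint amount = balances[msg.sender];
        balances[msg.sender] = 0;
        (bool ok, ) = msg.sender.call{value: amount}("");
        require(ok);
    }
}"""

print(find_call_before_write(VULNERABLE))  # [5]
print(find_call_before_write(SAFE))        # []
```

A heuristic this shallow misses calls split across lines and flags harmless orderings, which illustrates the coverage and false-positive trade-off that real static analyzers manage with far more precise program models.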

Formal verification uses mathematical techniques to establish the correctness of smart contract code; testing, by contrast, only demonstrates behavior for specific inputs. The process typically involves writing a formal specification of the contract's intended behavior and then using automated tools, such as theorem provers or model checkers, to prove that the code satisfies that specification. Formal verification provides a high degree of assurance, but it is computationally expensive because it must account for all possible execution paths and states. That cost grows rapidly with code size and complexity, often requiring significant hardware resources and specialized expertise to finish within a reasonable timeframe. Moreover, accurately formalizing a contract's intent, especially when it encodes complex business logic, is itself a challenging and error-prone task.
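As a rough illustration of the model-checking side, the Python sketch below performs explicit-state bounded model checking on a hypothetical two-account token: it enumerates every sequence of transfers up to a fixed depth and checks the invariant that total supply is conserved. The `buggy_transfer` function reads both balances before writing them, a known class of bug that lets a self-transfer mint tokens. Production tools reason symbolically over unbounded inputs, so a clean result from this toy only holds within the explored bound.

```python
from collections import deque
from itertools import product

ACCOUNTS = (0, 1)
AMOUNTS = (1, 2)


def buggy_transfer(balances, src, dst, amount):
    """Reads both balances up front, so src == dst silently mints tokens."""
    if balances[src] < amount:
        return None  # models a revert: the transaction is rejected
    from_bal, to_bal = balances[src], balances[dst]
    new = list(balances)
    new[src] = from_bal - amount
    new[dst] = to_bal + amount  # overwrites new[src] when src == dst
    return tuple(new)


def fixed_transfer(balances, src, dst, amount):
    """Updates the working copy step by step, so a self-transfer is a no-op."""
    if balances[src] < amount:
        return None
    new = list(balances)
    new[src] -= amount
    new[dst] += amount
    return tuple(new)


def check_supply(transfer, initial=(2, 0), depth=3):
    """Breadth-first search over all transfer sequences up to `depth`.
    Returns the shortest trace that breaks supply conservation, or None."""
    supply = sum(initial)
    queue = deque([(initial, [])])
    seen = {initial}
    while queue:
        state, trace = queue.popleft()
        if sum(state) != supply:
            return trace
        if len(trace) == depth:
            continue
        for src, dst, amount in product(ACCOUNTS, ACCOUNTS, AMOUNTS):
            nxt = transfer(state, src, dst, amount)
            if nxt is not None and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, trace + [(src, dst, amount)]))
    return None


print(check_supply(buggy_transfer))  # [(0, 0, 1)]
print(check_supply(fixed_transfer))  # None
```

The buggy version yields a one-step counterexample in which account 0 transfers 1 token to itself; the fixed version exhausts its reachable states without violating the invariant.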

Systematic auditing involves a thorough, manual review of smart contract code performed by security experts. While automated tools offer efficiency, they often struggle with identifying vulnerabilities in complex control flow or novel code patterns not covered by existing rule sets. Audits focus on verifying the logical correctness of the contract, ensuring adherence to security best practices, and identifying potential attack vectors that might be missed by automated analysis. This process typically includes a detailed examination of the source code, gas optimization analysis, and testing of various scenarios, including edge cases and potential exploits. The value of systematic auditing is heightened for contracts implementing complex financial logic or deploying unique, untested implementations, where the risk of undiscovered vulnerabilities is substantially increased.

The Double-Edged Sword: LLMs and the Evolution of Smart Contract Security

The advent of Large Language Models (LLMs) such as GPT-4, Gemini, and Sonnet represents a significant acceleration in smart contract development. These models function as powerful code generation tools, capable of translating natural language requests into functional Solidity code, thereby streamlining the creation of decentralized applications. This automation not only reduces the time required for contract creation but also holds the potential to mitigate human errors commonly introduced during manual coding processes. By offering a rapid prototyping capability, LLMs empower developers to quickly iterate on designs and deploy new functionalities. However, while promising increased efficiency, careful consideration must be given to the security implications, as generated code requires thorough auditing to ensure it aligns with best practices and doesn’t introduce novel vulnerabilities.

Large language models are a double-edged sword for smart contract security. They can analyze existing code to pinpoint vulnerabilities and suggest improvements, yet this study finds that a substantial proportion of the contracts generated by these same models contain security flaws. The paradox is that tools intended to enhance security can simultaneously introduce risk. Because even leading LLMs produce vulnerable code, every smart contract needs a thorough, independent security audit regardless of its origin, and any use of LLMs for code generation or analysis should be paired with rigorous testing and verification to protect the integrity of decentralized applications.

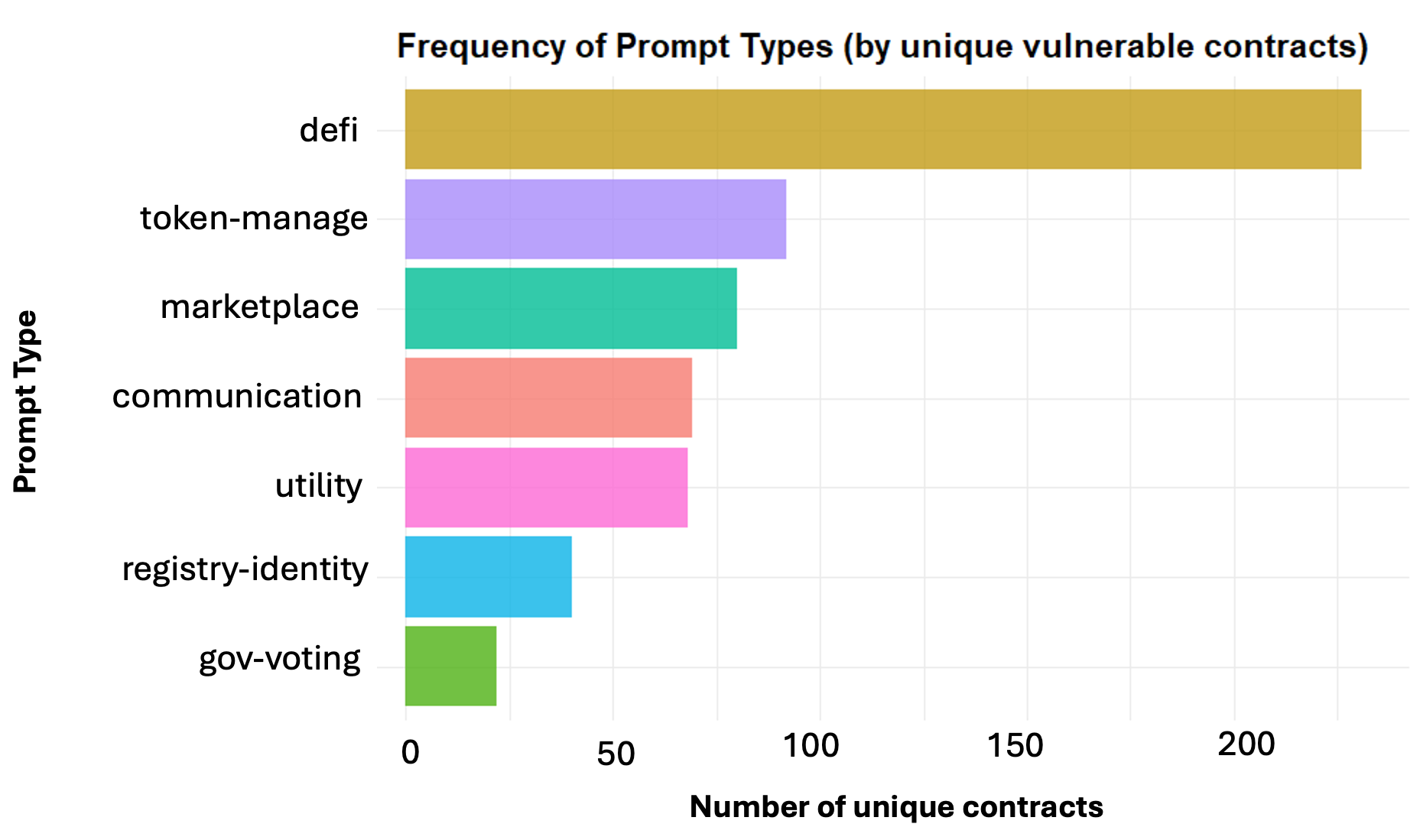

The utility of large language models extends well beyond the initial writing of smart contract code. Applications in token management, decentralized finance (DeFi) protocols, and on-chain governance systems are all being explored, with the potential to strengthen security at several levels. However, the study finds considerable variance in the vulnerability profiles of contracts generated by different LLMs: Sonnet 4.5 showed a 75% vulnerability rate, substantially higher than the 47.4% observed for GPT-4.1. This disparity highlights the need for thorough security audits even when using these tools, to keep increasingly complex systems resilient and maintain trust across the decentralized landscape.

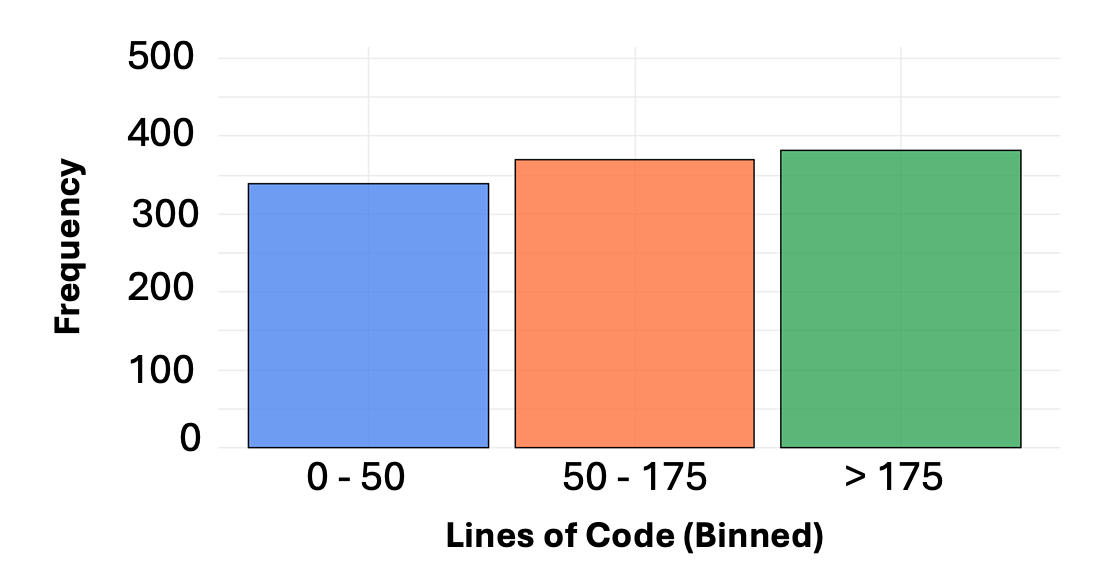

Analysis revealed a statistically significant correlation between the complexity of smart contracts – measured by lines of code – and the prevalence of vulnerabilities. Specifically, for every increase of 100 lines in a contract’s codebase, the number of identified vulnerabilities rose by approximately 15% (p<0.05). This suggests that as smart contracts grow in functional scope and intricacy, the potential for introducing security flaws also increases. This finding underscores the importance of meticulous code review and formal verification techniques, particularly for larger, more complex contracts, to mitigate risks within decentralized applications and ensure the integrity of blockchain-based systems.
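The reported effect can be turned into a back-of-the-envelope estimate. The source does not say whether the roughly 15% increase compounds, so the hypothetical helper below supports two readings: a multiplicative (log-linear) model, V = V0 × 1.15^(ΔL/100), and a linear one, V = V0 × (1 + 0.15 × ΔL/100), where V0 is the vulnerability count of a baseline contract and ΔL the number of added lines. Neither model is claimed to be the study's own.

```python
def scaled_vulnerabilities(base: float, extra_lines: float,
                           rate: float = 0.15, compound: bool = True) -> float:
    """Estimate the vulnerability count after adding `extra_lines` lines,
    given `rate` growth per 100 lines (0.15 matches the reported ~15%)."""
    steps = extra_lines / 100
    if compound:
        return base * (1 + rate) ** steps  # multiplicative reading
    return base * (1 + rate * steps)      # linear reading


# Relative growth for a contract 300 lines longer than the baseline.
print(round(scaled_vulnerabilities(1.0, 300), 4))                  # 1.5209
print(round(scaled_vulnerabilities(1.0, 300, compound=False), 4))  # 1.45
```

Under either reading, a contract 300 lines longer than a baseline would be expected to carry roughly 45% (linear) to 52% (compounding) more vulnerabilities.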

Toward a Resilient Future: LLMs, Layered Defenses, and Proactive Security

The growing complexity of smart contracts demands a parallel evolution in security analysis, and large language models (LLMs) are increasingly at the center of that evolution. General-purpose LLMs show promise, but their effectiveness could improve substantially with specialized training on smart contract code, covering languages like Solidity and Vyper as well as the intricacies of Ethereum Virtual Machine (EVM) bytecode. Such tailored models might go beyond simple vulnerability detection: anticipating attack vectors, suggesting secure coding practices, and even drafting security audits. The aim is not merely higher accuracy but models that grasp the intent of a contract and can flag subtle flaws that traditional static analysis might miss, fostering a more resilient decentralized ecosystem. Further progress in this area could shift the field from reactive vulnerability patching toward proactive security design.

A robust smart contract security strategy cannot rely on any single analytical technique. A layered approach that combines large language models (LLMs) with established methods like static analysis and formal verification offers a much more comprehensive defense. Static analysis excels at finding known vulnerability patterns in code, while formal verification mathematically proves that a contract's logic satisfies its specification. LLMs, which can reason about code semantics and spot nuanced vulnerabilities, can complement both by flagging anomalies and potential exploits that traditional methods might miss. Combining the precision of formal methods, the pattern recognition of static analysis, and the semantic understanding of LLMs yields a more resilient security posture, reducing the risk of successful attacks and strengthening trust in decentralized applications.
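One simple way to combine layers is to pool their findings and rank each issue by how many independent layers report it. The Python sketch below assumes each analyzer's output has already been normalized into a common, hypothetical `Finding` record (layer, location, category); real tools emit different report formats, and the layer names, contract names, and categories used here are placeholders.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    layer: str     # which defense produced it, e.g. "static", "formal", "llm"
    location: str  # contract and function, e.g. "Bank.withdraw"
    category: str  # vulnerability class, e.g. "reentrancy"


def merge_findings(findings):
    """Pool findings from all layers, grouping by (location, category).
    Issues reported by more layers sort first; ties sort by key."""
    merged = defaultdict(set)
    for f in findings:
        merged[(f.location, f.category)].add(f.layer)
    return sorted(merged.items(), key=lambda item: (-len(item[1]), item[0]))


report = merge_findings([
    Finding("static", "Bank.withdraw", "reentrancy"),
    Finding("llm", "Bank.withdraw", "reentrancy"),
    Finding("formal", "Token.transfer", "supply-invariant"),
    Finding("llm", "Bank.setOwner", "access-control"),
])
for (location, category), layers in report:
    print(f"{location}: {category} (reported by {len(layers)} layer(s))")
```

Taking the union keeps everything any single layer catches, while the agreement count gives reviewers a rough triage order; it does not by itself say whether a single-layer finding is a false positive.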

Maintaining a robust decentralized ecosystem requires a shift toward proactive security: continuous monitoring and automated checks built directly into the smart contract development pipeline. The study's results show why. Contracts generated by Gemini-2.5 proved especially prone to high-severity flaws, with 41 high-severity vulnerabilities identified in testing. Rather than patching vulnerabilities after the fact, teams should build security into every stage of development, from initial coding through deployment and ongoing operation. Integrating security checks continuously allows emerging threats to be detected and mitigated early, strengthening the resilience of decentralized applications and fostering greater trust within the Web3 space.
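In a continuous-integration pipeline, the automated check typically ends in a gate that fails the build when serious findings appear. The Python sketch below shows such a gate; the severity scale and the finding dictionaries (with `severity`, `check`, and `location` keys) are hypothetical, and a real pipeline would first parse them from its analyzers' reports.

```python
import sys

# Hypothetical ordered severity scale; higher means more serious.
SEVERITY_RANK = {"informational": 0, "low": 1, "medium": 2, "high": 3}


def security_gate(findings, fail_at="high"):
    """Return a process exit code: 1 if any finding is at or above
    `fail_at`, otherwise 0. Blocking findings are listed on stderr."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f["severity"]] >= threshold]
    for f in blocking:
        print(f"BLOCKING [{f['severity']}] {f['check']} at {f['location']}",
              file=sys.stderr)
    return 1 if blocking else 0


findings = [
    {"severity": "medium", "check": "timestamp-dependence", "location": "Auction.end"},
    {"severity": "high", "check": "reentrancy", "location": "Bank.withdraw"},
]
print(security_gate(findings))                # 1: a high-severity issue blocks the build
print(security_gate(findings[:1]))            # 0: medium alone passes the default gate
print(security_gate(findings[:1], "medium"))  # 1: a stricter threshold blocks it too
```

A CI step would pass the returned code to `sys.exit`, so that a nonzero result stops the pipeline before deployment.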

The evaluation of LLM-generated smart contracts reveals a landscape riddled with potential failures, echoing the decay inherent in all complex systems. Code produced by advanced models is not immune to vulnerabilities; like accrued technical debt, each flaw is a present-day consequence of shortcuts taken earlier. As Henri Poincaré observed, “Mathematics is the art of giving reasons, even in matters of taste.” In the context of smart contract security, this translates to a demand for rigorous, reasoned verification: a formal articulation of why a contract should function as intended. The study demonstrates that the apparent fluency of AI-generated code is not sufficient evidence of its safety; the implementation choices embedded in a contract inevitably surface as vulnerabilities, which demand careful auditing before deployment.

What Lies Ahead?

The observation that large language models readily produce vulnerable smart contracts is not surprising; any improvement ages faster than expected. The allure of automated code generation pushes systems into deployment before they have been adequately scrutinized, creating a landscape where vulnerabilities are not isolated bugs but emergent properties of haste. Formal verification, while a necessary corrective, amounts to a rollback: a journey back along the arrow of time to establish guarantees that should have been built in from the outset.

Future work must address the fundamental tension between generative speed and systemic resilience. Static analysis, even when sophisticated, treats symptoms, not causes. A more fruitful path lies in embedding security as a first-order principle within the model itself – a challenging task given the inherent ambiguity of natural language and the adversarial nature of exploits. The question isn’t simply detecting flaws, but engineering models that understand security constraints.

Ultimately, the vulnerability of these systems reveals a broader truth: complexity accumulates faster than understanding. The pursuit of automated contract generation is not a quest for perfection, but a race against decay. Each iteration refines the process, but the underlying erosion continues, demanding constant vigilance and a recognition that even the most rigorously audited contract is merely a temporary reprieve.

Original article: https://arxiv.org/pdf/2602.04039.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 14:01