Author: Denis Avetisyan

Researchers are shifting focus from confirming correct answers to proactively identifying errors in large language models tackling complex mathematical problems.

This paper introduces pessimistic verification – a scalable method prioritizing error detection and a high true negative rate – for evaluating mathematical proof generation.

Despite advances in large language models, reliably detecting errors in complex mathematical reasoning remains a significant challenge. This limitation motivates the work presented in ‘Pessimistic Verification for Open Ended Math Questions’, which introduces a suite of simple yet effective verification methods prioritizing error identification over achieving consensus. By constructing multiple, parallel verification paths, this “pessimistic” approach substantially improves performance on mathematical benchmarks – even surpassing established techniques in token efficiency – while revealing that annotation errors often contribute to reported false negatives. Could this focus on robust error detection unlock more dependable and scalable language models capable of tackling increasingly complex mathematical problems?

Decoding the Illusion of Intelligence

Despite their remarkable aptitude for generating human-quality text and performing various language-based tasks, Large Language Models (LLMs) are demonstrably prone to errors when confronted with complex reasoning challenges. These models, trained on vast datasets, often excel at identifying patterns and correlations, but struggle with tasks requiring genuine understanding, logical deduction, or the application of common sense. This susceptibility stems from their foundational architecture – predicting the next token in a sequence – which doesn’t inherently guarantee truthfulness or consistency. While LLMs can convincingly present arguments, their internal processes may lack the robust grounding in real-world knowledge or formal logic necessary to ensure accurate conclusions, leading to subtle yet significant errors that can undermine their reliability in critical applications. The capacity to generate fluent and seemingly coherent text, therefore, should not be mistaken for genuine reasoning ability.

Conventional methods of verifying complex systems, which typically rely on achieving consensus among multiple sources or validators, prove inadequate when applied to the outputs of Large Language Models. These models generate responses based on probabilities and patterns learned from vast datasets, leading to outputs that, while often coherent, may contain subtle errors or illogical leaps. The sheer scale of LLM outputs – the volume and variety of potential responses – overwhelms traditional verification pipelines. Moreover, LLMs frequently operate in nuanced semantic spaces where simple agreement isn’t sufficient to guarantee correctness; a seemingly plausible answer can still be factually incorrect or logically flawed. Consequently, relying on consensus-based approaches offers a false sense of security, failing to detect the unique and often subtle errors characteristic of these powerful, yet imperfect, systems.

Current approaches to validating the outputs of Large Language Models (LLMs) often rely on achieving consensus – the idea that if multiple models agree on an answer, it is likely correct. However, this method proves inadequate given the potential for widespread, yet subtle, errors. A more robust strategy centers on proactively detecting failures in reasoning. This shifts the focus from confirming correctness to pinpointing instances where an LLM’s logic breaks down, regardless of whether other models make the same mistake. By prioritizing error detection, researchers can develop techniques to identify flawed reasoning patterns, enhance model safety, and ultimately build more trustworthy artificial intelligence systems, even in the absence of perfect agreement. This approach acknowledges that identifying what an LLM gets wrong is often more valuable than simply confirming that it gets something right.

Inverting the Assumption: A New Paradigm for Verification

Pessimistic Verification represents a departure from traditional Large Language Model (LLM) evaluation methodologies which often focus on achieving high inter-rater agreement. Instead, this approach prioritizes the identification of any potential error, even if a majority of evaluators concur with a response. This is achieved by adopting a “failure-first” mindset; a single dissenting reviewer indicating an issue is sufficient to flag a response as potentially incorrect. This contrasts with consensus-based methods where discrepancies are often resolved through further discussion or majority rule, potentially masking critical flaws in the LLM’s reasoning process. The core principle is that identifying even isolated errors is more valuable than establishing a high degree of agreement on potentially flawed outputs.

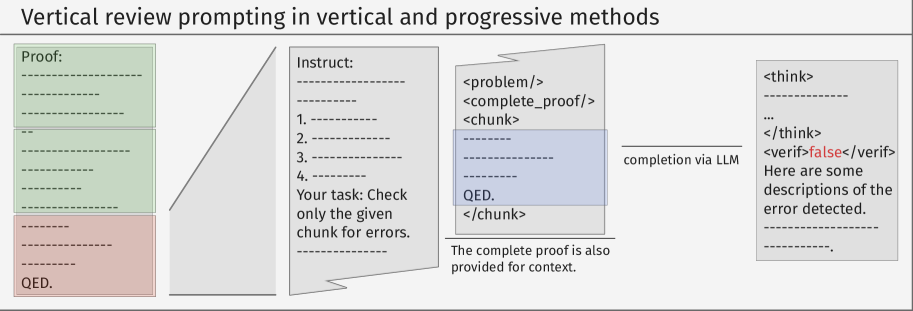

Pessimistic Verification incorporates specific techniques to maximize error detection. Simple Verification operates on a disjunctive principle: an LLM response is flagged as incorrect if any reviewer identifies an error, regardless of consensus among other reviewers. Complementing this, Vertical Verification improves scalability by distributing review tasks in parallel; multiple reviewers independently assess the LLM’s output on the same problem, enabling faster identification of potential failures, particularly as problem complexity increases and the number of reviewers grows. This parallel approach does not require reviewers to reach a unified decision, streamlining the verification process.

The integration of Simple Verification and Vertical Verification techniques aims to create a scalable and reliable framework for LLM failure identification as problem complexity increases. Simple Verification’s requirement for unanimous agreement among reviewers provides a high-confidence error signal, while Vertical Verification distributes review tasks in parallel, enabling evaluation of larger problem sets without a proportional increase in review time. This combined approach addresses limitations inherent in consensus-based evaluation metrics, which may overlook critical errors present in a minority of reviewer assessments, and provides a mechanism to systematically identify weaknesses in LLM reasoning capabilities even as input complexity and data volume grow.

Refining the Search: Progressive Verification and Scalability

Progressive Verification represents an advancement over Simple and Vertical Verification methods for Large Language Model (LLM) evaluation by combining aspects of both to enhance efficiency and scalability. Simple Verification processes each LLM output independently, while Vertical Verification evaluates outputs step-by-step, allowing for early termination upon error detection. Progressive Verification integrates these approaches, initially employing Simple Verification for a broad initial assessment, then applying Vertical Verification to outputs flagged as potentially incorrect. This tiered approach reduces the computational load associated with exhaustive step-by-step evaluation, enabling more rapid and scalable assessment of LLM performance, particularly when dealing with large datasets or complex reasoning tasks.

Progressive Verification optimizes LLM evaluation by integrating Simple and Vertical Verification techniques to enhance error detection rates while maintaining high throughput. This is achieved through a staged approach where initial evaluations leverage parallel processing for broad coverage – characteristic of Simple Verification – followed by focused, in-depth analysis of potentially erroneous outputs, similar to Vertical Verification. The combination allows for rapid identification of common errors and efficient pinpointing of complex reasoning failures, resulting in improved accuracy without diminishing the scalability benefits of parallel evaluation pipelines.

The evaluation of the Progressive Verification framework was conducted using the QiuZhen-Bench Dataset, a benchmark comprised of 1,054 mathematically-focused problems. This dataset is specifically designed to assess and challenge the reasoning capabilities of Large Language Models (LLMs) by presenting complex problems requiring multi-step solutions. The dataset’s size and difficulty are intended to provide a robust test of LLM performance, exceeding the capabilities of many existing benchmarks and identifying limitations in mathematical reasoning abilities. Rigorous testing with QiuZhen-Bench allows for quantitative assessment of the framework’s effectiveness in identifying errors and improving overall LLM reliability.

Beyond the Benchmark: Demonstrating Robustness and Scale

Evaluations using both GPT-5-mini and Qwen3-30B-A3B have confirmed the broad applicability of the Pessimistic Verification framework to a range of large language models. This testing demonstrates the framework isn’t limited by specific model architectures or training data; it consistently delivers reliable performance across diverse LLMs. By successfully evaluating models with differing structures and scales, researchers establish that the framework’s core principles – focusing on identifying potential errors through rigorous questioning – are universally effective in assessing the trustworthiness of generated outputs. This adaptability is a key strength, suggesting the framework can be applied to future LLMs as they continue to grow in complexity and capability, providing a consistent method for verification regardless of the underlying technology.

Evaluations conducted on a demanding dataset of 1,054 mathematics problems reveal the consistent high performance of this verification method. The approach demonstrates a substantial capacity for error detection, as measured by improvements in the true Negative Rate, indicating a reduced incidence of falsely accepting incorrect solutions. Furthermore, the method achieves balanced F1 scores, signifying an effective equilibrium between precision and recall – a crucial characteristic for reliable assessment. This balance suggests the framework isn’t simply identifying obvious errors, but also accurately pinpointing subtle mistakes, establishing its efficacy in rigorously evaluating complex reasoning capabilities within large language models.

The Pessimistic Verification framework is engineered for scalability, a critical feature as Large Language Models (LLMs) continue to grow in size and complexity. Unlike many verification methods that struggle with computational demands when applied to more powerful models, this framework maintains consistent accuracy even with increasingly large LLMs such as GPT-5-mini and Qwen3-30B-A3B. This is achieved through an inherent design that optimizes computational efficiency without sacrificing the rigor of error detection; the framework’s architecture allows it to effectively evaluate models with billions of parameters while sustaining high performance, as demonstrated by its consistent true Negative Rate and balanced F1 scores on a challenging mathematics problem set. This scalability ensures that the framework remains a viable tool for assessing the reliability of future generations of LLMs, regardless of their size or intricacy.

The pursuit of error, as demonstrated in this work on pessimistic verification, echoes a fundamental principle: every exploit starts with a question, not with intent. This research doesn’t seek to confirm correct solutions, but to aggressively disprove them, prioritizing the identification of flaws in large language models tackling mathematical proofs. The method’s scalability, detailed in the study, highlights the importance of probing system boundaries-a calculated dismantling to understand its limits. It’s a process akin to reverse-engineering, mirroring the belief that true knowledge comes from challenging assumptions and meticulously testing the resilience of any system, even one built on complex algorithms. As Tim Berners-Lee aptly stated, “The web is more a social creation than a technical one.” This social creation, like any system, benefits from constant, critical examination to ensure its integrity and expose potential vulnerabilities.

What’s Next?

The pursuit of ‘true negative’ detection in large language models, as this work demonstrates, isn’t about building better solvers-it’s about efficiently mapping the boundaries of their failure. Pessimistic verification doesn’t celebrate success; it meticulously catalogs incorrectness. This is, of course, a far more honest endeavor. The scalability improvements are welcome, but simply finding more errors faster doesn’t fundamentally address the question of why these models stumble on mathematical reasoning. The QiuZhen-Bench benchmark is a useful proving ground, but any sufficiently complex system will eventually reveal the limitations of its evaluation metrics.

Future work should resist the urge to patch the symptoms. Instead, investigation needs to pivot toward understanding the architectural biases that predispose these models to specific classes of error. Is the problem a deficit in symbolic manipulation? A flawed understanding of logical implication? Or simply a statistical inevitability arising from training on imperfect data?

Perhaps the most interesting direction lies in intentionally introducing errors into the training data-a form of adversarial training designed not to improve accuracy, but to calibrate the model’s confidence in its fallibility. A system that knows what it doesn’t know is, ironically, a more valuable system than one that confidently produces nonsense.

Original article: https://arxiv.org/pdf/2511.21522.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Poppy Playtime Chapter 5: Engineering Workshop Locker Keypad Code Guide

- Jujutsu Kaisen Modulo Chapter 23 Preview: Yuji And Maru End Cursed Spirits

- God Of War: Sons Of Sparta – Interactive Map

- Who Is the Information Broker in The Sims 4?

- 8 One Piece Characters Who Deserved Better Endings

- Poppy Playtime 5: Battery Locations & Locker Code for Huggy Escape Room

- Pressure Hand Locker Code in Poppy Playtime: Chapter 5

- Poppy Playtime Chapter 5: Emoji Keypad Code in Conditioning

- Why Aave is Making Waves with $1B in Tokenized Assets – You Won’t Believe This!

- Mewgenics Tink Guide (All Upgrades and Rewards)

2025-11-28 08:36