Author: Denis Avetisyan

A new architectural approach integrates post-quantum cryptography to secure the software supply chain against future quantum-based attacks.

This review details layered architectures for quantum-resistant integrity verification in secure boot, measured boot, and remote attestation systems.

Establishing trust in computing systems relies on asymmetric cryptography, a foundation increasingly threatened by the advent of quantum computing. This paper, ‘Towards Quantum-Resistant Trusted Computing: Architectures for Post-Quantum Integrity Verification Techniques’, analyzes existing trust techniques and proposes a layered architecture integrating Post-Quantum Cryptography into secure boot and remote attestation processes. The resulting framework aims to establish end-to-end, quantum-resistant trust chains for enhanced firmware and software protection. Will this proactive approach prove sufficient to safeguard critical infrastructure against future quantum-based attacks?

The Looming Quantum Threat: A Crisis of Confidence

Modern digital security relies heavily on the difficulty of certain mathematical problems, specifically the factorization of large integers and the discrete logarithm problem. These underpin widely used public-key algorithms such as RSA and elliptic-curve cryptography (ECC). Quantum computing threatens this foundation: Shor's Algorithm solves both problems in polynomial time on a sufficiently large quantum computer. The best known classical algorithms need super-polynomial time, which is why increasing key sizes keeps classical attackers at bay; against Shor's Algorithm, larger keys buy comparatively little. This isn't merely a theoretical concern, because the threat extends to data captured today: an adversary can record encrypted traffic now and decrypt it once quantum computers reach sufficient scale, so past communications and stored sensitive information are also at risk. The speedup does not come from simply trying every possibility at once. Shor's Algorithm reduces factoring and discrete logarithms to finding the period of a modular function, a task the quantum Fourier transform performs efficiently.
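To make that reduction concrete, here is a minimal Python sketch of the classical half of Shor's Algorithm: once the period (multiplicative order) r of a modulo N is known, the factors follow from two gcd computations. The brute-force `multiplicative_order` helper is a hypothetical stand-in for the quantum period-finding step; classically it takes exponential time, and that is exactly the step a quantum computer would accelerate.

```python
from math import gcd
from typing import Optional, Tuple


def multiplicative_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Assumes gcd(a, n) == 1. This loop stands in for quantum period finding.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_period(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Recover a factor pair of n from the period of a**x mod n, if possible."""
    g = gcd(a, n)
    if g != 1:
        # Lucky guess: a already shares a factor with n.
        return tuple(sorted((g, n // g)))
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None  # odd period: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a**(r/2) == -1 mod n gives only trivial factors
    # y*y == 1 (mod n) with y != +-1, so y - 1 and y + 1 each share a factor with n.
    return tuple(sorted((gcd(y - 1, n), gcd(y + 1, n))))


print(factor_from_period(15, 7))  # (3, 5)
```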

The erosion of digital trust extends far beyond the exposure of encrypted data. Public-key cryptography also underpins digital signatures, which establish who sent a message and whether data has been tampered with, and this function faces the same systemic threat from advancing quantum computing. This isn't merely about confidentiality breaches, but a potential collapse of assurance about authorship and integrity. Mosca's Inequality frames the urgency: if x is the number of years data or signatures must remain trustworthy, y is the time needed to migrate to quantum-resistant cryptography, and z is the time until a cryptographically relevant quantum computer exists, then x + y > z means the migration is already too late for that data. Once signatures can be forged, systems relying on them, including financial transactions, secure communications, and critical infrastructure, become susceptible to manipulation and forgery, potentially leading to widespread disruption. The implications reach beyond data theft to a loss of faith in the very foundations of digital trust, demanding a proactive transition to quantum-resistant cryptographic methods.
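Mosca's Inequality reduces to a one-line check. The minimal Python sketch below encodes it; the function name and the example numbers are illustrative assumptions, not estimates taken from the paper.

```python
def mosca_at_risk(shelf_life_years: float, migration_years: float,
                  years_to_crqc: float) -> bool:
    """Mosca's inequality: data is at risk when x + y > z.

    x = how long the data or signatures must stay trustworthy
    y = how long migrating to quantum-resistant cryptography takes
    z = how long until a cryptographically relevant quantum computer exists
    """
    return shelf_life_years + migration_years > years_to_crqc


# Hypothetical inputs: firmware signatures that must stay trustworthy for
# 10 years, a 5-year migration, and a quantum computer arriving in 12 years.
print(mosca_at_risk(10, 5, 12))  # True, since 10 + 5 > 12
```

The useful property of the inequality is that the two terms under a defender's control (shelf life and migration time) sit on the same side, so shortening the migration is the main lever when z is uncertain.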

The anticipated arrival of cryptographically relevant quantum computers is compressing the timeline for securing digital infrastructure, demanding immediate and concerted action. Some current estimates place code-breaking quantum machines within the next decade, though such timelines remain uncertain; either way, the long lead time for migration calls for a proactive, rather than reactive, stance. This isn't simply about upgrading software; it requires a fundamental overhaul of cryptographic standards and the deployment of quantum-resistant algorithms, which are based on mathematical problems believed to be intractable even for quantum computers. The transition involves significant logistical challenges, including algorithm standardization, key distribution, and widespread system updates across every sector that relies on secure communication. Failure to adapt swiftly risks a future where sensitive data is readily compromised, undermining trust in digital systems and potentially leading to widespread disruption and economic loss.

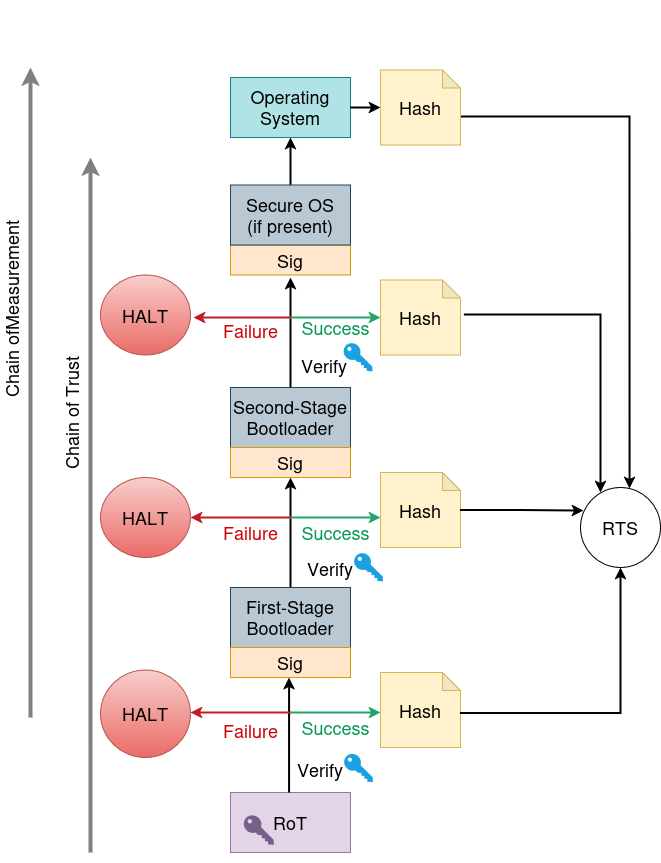

Establishing a Foundation of Trust: Verifiable System Integrity

Secure Boot establishes a verifiable boot process through a Chain of Trust, a hierarchy in which each boot component validates the next before handing over execution. The chain begins with a root of trust, typically immutable boot code and key material anchored in the platform's hardware or firmware, which verifies the bootloader. The bootloader, in turn, verifies the operating system kernel before transferring control. At each step, the running component checks the next component's digital signature against a trusted key, so only software authorized by the manufacturer or administrator can execute during the boot sequence. This blocks malicious software and unauthorized operating systems from loading, establishing a foundation of trust for the entire system.
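The verify-before-execute structure of a Chain of Trust can be sketched in a few lines of Python. This is a simplified model under a loud assumption: instead of checking digital signatures, each stage pins the SHA-384 digest of the next stage, and a root digest stands in for the key material held by the root of trust. Real Secure Boot verifies signatures precisely so that vendors can ship updates without changing the root. The helper names `build_chain` and `verify_chain` are hypothetical.

```python
import hashlib
import hmac
from typing import List, Optional, Tuple

DIGEST_LEN = 48  # SHA-384 output size in bytes


def build_chain(payloads: List[bytes]) -> Tuple[bytes, List[bytes]]:
    """Build stage images back to front so each embeds the next stage's digest.

    Returns (root_digest, images); the root digest plays the role of the
    trust anchor. Digest pinning replaces signatures in this sketch.
    """
    images: List[bytes] = []
    next_digest = b""  # the last stage has nothing to verify
    for payload in reversed(payloads):
        image = payload + next_digest
        images.append(image)
        next_digest = hashlib.sha384(image).digest()
    images.reverse()
    return next_digest, images


def verify_chain(root_digest: bytes, images: List[bytes]) -> Optional[int]:
    """Verify each stage before 'executing' it.

    Returns None if every stage verifies, else the index of the first failure.
    """
    expected = root_digest
    for i, image in enumerate(images):
        actual = hashlib.sha384(image).digest()
        if not hmac.compare_digest(actual, expected):
            return i  # refuse to hand over control to this stage
        if i < len(images) - 1:
            # The verified stage now vouches for its successor.
            expected = image[-DIGEST_LEN:]
    return None


root, images = build_chain([b"bootloader", b"kernel", b"init"])
print(verify_chain(root, images))  # None: every stage verified
```

Tampering with any stage, including the digest it pins for its successor, changes that stage's own hash, so verification stops at the first modified image.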

Measured Boot complements Secure Boot by establishing a Chain of Measurement, which cryptographically records each component loaded during the boot sequence. It doesn't prevent untrusted code from executing; instead, it creates a tamper-evident record of what code ran. Each component is hashed before it executes, and the hash is folded into a Platform Configuration Register (PCR) inside the Trusted Platform Module (TPM), a dedicated security chip, through an extend operation: the new PCR value is the hash of the old value concatenated with the new measurement. Because a PCR can only be extended, never written directly outside a platform reset, its final value commits to the entire ordered sequence from the initial bootloader to the operating system kernel. An accompanying event log lists the individual measurements, and Remote Attestation protocols later use both to verify the system's initial state and detect any unauthorized modifications to the boot process.
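The extend operation can be modeled with Python's `hashlib`. This sketch assumes a SHA-384 PCR bank that starts at all zeros and measures each component with SHA-384; it models the arithmetic only, not a real TPM interface, and `measure_boot` is a hypothetical helper.

```python
import hashlib
from typing import List, Tuple

PCR_SIZE = 48  # bytes in a SHA-384 PCR bank


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM-style extend: new PCR = H(old PCR || measurement)."""
    return hashlib.sha384(pcr + measurement).digest()


def measure_boot(components: List[Tuple[str, bytes]]) -> Tuple[bytes, List[Tuple[str, bytes]]]:
    """Hash each component before it 'runs', log it, and extend the PCR."""
    pcr = bytes(PCR_SIZE)  # assumed reset value: all zeros
    event_log: List[Tuple[str, bytes]] = []
    for name, image in components:
        digest = hashlib.sha384(image).digest()
        event_log.append((name, digest))
        pcr = extend(pcr, digest)
    return pcr, event_log


pcr, log = measure_boot([("bootloader", b"bl"), ("kernel", b"k")])
print(pcr.hex()[:16], [name for name, _ in log])
```

Swapping the order of the two components yields a different final PCR value, which is how the register captures sequence as well as content.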

Remote Attestation lets a remote party verify the measurements recorded during Measured Boot. A requesting party, typically a network access control server or attestation verifier, sends the device a fresh challenge (a nonce). The device responds with a quote: a report of its PCR values and the nonce, signed by an attestation key held in the TPM, accompanied by the event log. The verifier checks the signature, confirms the nonce, replays the event log to confirm it reproduces the quoted PCR values, and compares the individual measurements against a known-good baseline. On Linux, the Integrity Measurement Architecture (IMA) extends the same approach beyond boot by measuring files as they are loaded at runtime. Successful attestation confirms that the system's firmware and bootloaders haven't been tampered with, enabling conditional access to network resources and mitigating the risk of compromised endpoints establishing connections. Failure to attest results in denied access, enforcing a zero-trust security model.
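The verifier's side of this exchange can be sketched as three checks: freshness, log consistency, and policy. This minimal Python sketch assumes the quote's signature has already been verified against the device's attestation key (omitted, since Python's standard library has no suitable signature primitive), a SHA-384 PCR bank starting at zeros, and a hypothetical `golden_digests` baseline mapping component names to expected digests.

```python
import hashlib
import hmac
from typing import Dict, List, Tuple

EventLog = List[Tuple[str, bytes]]  # (component name, SHA-384 digest)


def replay(event_log: EventLog) -> bytes:
    """Recompute the PCR value implied by the event log."""
    pcr = bytes(48)  # assumed reset value
    for _name, digest in event_log:
        pcr = hashlib.sha384(pcr + digest).digest()
    return pcr


def appraise(event_log: EventLog, quoted_pcr: bytes, quoted_nonce: bytes,
             expected_nonce: bytes, golden_digests: Dict[str, bytes]) -> bool:
    """Appraise a quote whose signature is assumed to be valid already."""
    # 1. Freshness: the quote must cover this verifier's challenge nonce.
    if not hmac.compare_digest(quoted_nonce, expected_nonce):
        return False
    # 2. Consistency: replaying the log must reproduce the quoted PCR.
    if not hmac.compare_digest(replay(event_log), quoted_pcr):
        return False
    # 3. Policy: every measured component must match its known-good digest.
    return all(golden_digests.get(name) == digest for name, digest in event_log)
```

Step 2 is what makes the event log trustworthy: the log itself is unsigned, but it cannot be edited without breaking agreement with the signed PCR value.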

Post-Quantum Cryptography: Algorithms for a Quantum Future

Post-Quantum Cryptography (PQC) addresses the threat that quantum computers pose to currently used public-key cryptographic algorithms. PQC schemes such as the Module-Lattice-Based Digital Signature Algorithm (ML-DSA, FIPS 204), the Stateless Hash-Based Digital Signature Algorithm (SLH-DSA, FIPS 205), and the Module-Lattice-Based Key-Encapsulation Mechanism (ML-KEM, FIPS 203) are designed to resist attacks from both classical and quantum computers. They rely on mathematical problems believed to be hard for both kinds of computer. Module-lattice-based schemes rest on the presumed hardness of lattice problems, while stateless hash-based signatures rely only on the security of cryptographic hash functions and, unlike stateful hash-based schemes, need no record of which one-time keys have already been used. ML-KEM provides a way to establish shared cryptographic keys securely, also based on lattice problems.
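Hash-based signatures can feel abstract, so here is the simplest member of the family: a Lamport one-time signature in Python. To be clear, this is not SLH-DSA; SLH-DSA combines one-time and few-time signatures into a hypertree so that one key pair can sign many messages without tracking state. The sketch does show why security rests only on the hash function: a signature reveals secret preimages, and verification simply rehashes them. Each key pair here must sign only one message.

```python
import hashlib
import secrets
from typing import List, Tuple

Key = List[List[bytes]]  # 256 positions x 2 candidate values (bit 0, bit 1)


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen() -> Tuple[Key, Key]:
    """Secret key: 512 random values; public key: their hashes."""
    sk = [[secrets.token_bytes(32) for _ in range(2)] for _ in range(256)]
    pk = [[H(s) for s in pair] for pair in sk]
    return sk, pk


def message_bits(msg: bytes) -> List[int]:
    """The 256 bits of SHA-256(msg), most significant bit first."""
    d = H(msg)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk: Key, msg: bytes) -> List[bytes]:
    """Reveal one secret value per digest bit. Never reuse sk."""
    return [sk[i][b] for i, b in enumerate(message_bits(msg))]


def verify(pk: Key, msg: bytes, sig: List[bytes]) -> bool:
    """Each revealed value must hash to the public value for that bit."""
    if len(sig) != 256:
        return False
    bits = message_bits(msg)
    return all(H(s) == pk[i][b] for i, (s, b) in enumerate(zip(sig, bits)))


sk, pk = keygen()
sig = sign(sk, b"firmware v1")
print(verify(pk, b"firmware v1", sig))  # True
```

The signature here is 256 x 32 = 8,192 bytes, which hints at why hash-based schemes trade size for their minimal security assumptions.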

The Commercial National Security Algorithm Suite 2.0 (CNSA 2.0), published by the US National Security Agency (NSA), marks a move from post-quantum standardization to mandated deployment in national security systems. Firmware signing is singled out as an early priority: for software and firmware signing, CNSA 2.0 specifies the stateful hash-based signature schemes LMS and XMSS (NIST SP 800-208), a critical measure for device integrity. This requirement demonstrates a proactive commitment to mitigating the risks posed by future quantum computing capabilities and provides a transition path for systems requiring long-term security assurances. The algorithms selected in CNSA 2.0 are intended to be immediately deployable defenses against both classical and quantum attacks, safeguarding critical infrastructure and sensitive data.

Proposed post-quantum digital signature schemes differ widely in signature size, which affects where they can be used. The FFT over NTRU-Lattice-Based Digital Signature Algorithm (FN-DSA, derived from Falcon) produces signatures of roughly 0.6 to 1.3 kilobytes (kB), making it particularly suitable for resource-constrained environments such as Internet of Things (IoT) devices. The Module-Lattice-Based Digital Signature Algorithm (ML-DSA) produces larger signatures, between 2.4 and 4.6 kB, which suit a wide range of applications where storage and bandwidth are less constrained. The Stateless Hash-Based Digital Signature Algorithm (SLH-DSA) generates substantially larger signatures, from 7.8 to 49.8 kB; this largely restricts it to specialized, high-assurance domains where its conservative security assumptions, resting only on hash functions, outweigh the cost of signature size.
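The practical impact of these sizes shows up when signatures accumulate along a boot chain. The Python sketch below tabulates commonly cited signature sizes in bytes for individual parameter sets (the Falcon figures are the Falcon submission's values, which FN-DSA is expected to track, so treat them as approximate) and computes the overhead of a hypothetical chain in which every stage carries exactly one signature. The function names are illustrative.

```python
from typing import Dict, List

# Commonly cited signature sizes in bytes, per parameter set.
SIG_BYTES: Dict[str, int] = {
    "Falcon-512": 666,
    "Falcon-1024": 1280,
    "ML-DSA-44": 2420,
    "ML-DSA-65": 3309,
    "ML-DSA-87": 4627,
    "SLH-DSA-SHA2-128s": 7856,
    "SLH-DSA-SHA2-256f": 49856,
}


def chain_signature_overhead(alg: str, stages: int) -> int:
    """Total signature bytes for a chain with one signature per stage."""
    return SIG_BYTES[alg] * stages


def algorithms_within(budget_bytes: int) -> List[str]:
    """Parameter sets whose single signature fits a per-image byte budget."""
    return [alg for alg, size in SIG_BYTES.items() if size <= budget_bytes]


# A hypothetical four-stage chain: ROM -> bootloader -> kernel -> initramfs.
for alg in ("Falcon-512", "ML-DSA-65", "SLH-DSA-SHA2-256f"):
    print(alg, chain_signature_overhead(alg, 4))
```

For a device with a 1 KiB signature budget per image, only Falcon-512 fits, which matches the article's point about FN-DSA and constrained IoT devices.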

Cryptographic hash functions, specifically SHA-384, SHA-512, and SHA3-512, are foundational to the security of post-quantum cryptographic schemes. They are not new quantum-resistant algorithms, but known quantum attacks weaken them only modestly: Grover's algorithm gives at most a quadratic speedup for finding preimages, so functions with 384- or 512-bit outputs keep large security margins, and their collision resistance (the difficulty of finding two different inputs that produce the same hash output) likewise remains strong. Post-quantum schemes build on these functions to ensure the integrity and authenticity of the data they process, relying on them to make undetected manipulation of inputs infeasible. Continuing to use these well-vetted hash functions also limits the new vulnerabilities introduced alongside novel quantum-resistant algorithms.
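A back-of-the-envelope calculation shows why these output sizes suffice. Taking n as the hash output length in bits and counting hash evaluations or quantum queries (ignoring constant factors and the large practical overheads of quantum computation):

```latex
\begin{aligned}
\text{preimage, classical:}\quad & \approx 2^{n} \\
\text{preimage, Grover:}\quad & \approx 2^{n/2} \;\Rightarrow\; 2^{192} \text{ for SHA-384},\; 2^{256} \text{ for SHA-512 and SHA3-512} \\
\text{collision, classical (birthday bound):}\quad & \approx 2^{n/2} \;\Rightarrow\; 2^{192} \text{ for SHA-384} \\
\text{collision, quantum (Brassard--H\o yer--Tapp):}\quad & \approx 2^{n/3} \;\Rightarrow\; 2^{128} \text{ for SHA-384}
\end{aligned}
```

The Brassard–Høyer–Tapp figure also assumes quantum memory of comparable size, so it is an optimistic estimate for the attacker rather than a practical one.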

Strengthening the Root of Trust: Hardware Security and Remote Attestation

ARM TrustZone is a widely deployed hardware technology that establishes an isolated secure environment within a system's processor. This isolation creates a 'secure world' separate from the normal operating system, which can host a compact Trusted Computing Base (TCB): the set of hardware and software components critical to a system's security. By confining sensitive operations, such as cryptographic key storage and secure boot processing, to this isolated environment, TrustZone reduces the attack surface available to malicious actors. This hardware-based isolation strengthens the Root of Trust, helping ensure that the system hasn't been compromised from its initial state and that critical security functions remain protected even if the main operating system is breached.

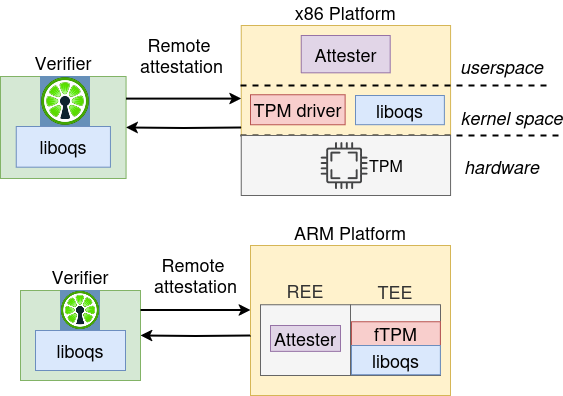

Remote Attestation, as implemented in frameworks like Keylime, verifies system integrity from outside the machine. It enables a remote party to confirm that a system's software hasn't been maliciously altered and that the system operates in a known, trusted state. In Keylime, an agent on the monitored machine supplies TPM quotes together with the measured boot event log and the Linux IMA runtime measurement log; a verifier checks the quotes and compares the recorded measurements of boot components, kernel, and files against pre-defined security policies. Should any measurement deviate from the expected configuration, attestation fails, signaling a potential compromise and allowing the system to be cut off from further trusted operation. Remote Attestation is therefore not merely a diagnostic tool but a foundational component in establishing trust for sensitive operations, such as cloud computing, secure data processing, and critical infrastructure management.
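The policy comparison at the heart of runtime attestation amounts to an allowlist lookup. The following Python sketch assumes a simplified, hypothetical log format of (path, hex digest) pairs and a policy mapping each path to its permitted digests. Keylime's actual runtime policy format, and the quote verification that must precede this step, are richer and are not reproduced here.

```python
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical formats: log entries are (path, hex digest) pairs;
# the policy maps each path to the set of digests allowed for it.
LogEntry = Tuple[str, str]
Policy = Dict[str, Set[str]]


def check_runtime_log(entries: Iterable[LogEntry], policy: Policy) -> List[str]:
    """Return the paths whose measurement is unknown or not allowlisted."""
    violations: List[str] = []
    for path, digest in entries:
        allowed = policy.get(path)
        if allowed is None or digest not in allowed:
            violations.append(path)
    return violations


policy = {"/usr/bin/sshd": {"aa11"}, "/usr/lib/libc.so.6": {"bb22", "bb23"}}
log = [("/usr/bin/sshd", "aa11"), ("/tmp/implant", "cc33")]
print(check_runtime_log(log, policy))  # ['/tmp/implant']
```

Allowing several digests per path, as for the library above, is one way to tolerate a rolling package update without failing attestation mid-upgrade.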

A robust security posture isn't achieved through a single safeguard, but through layered defenses. Combining hardware-based isolation like ARM TrustZone, which establishes a secure enclave, with frameworks enabling remote attestation yields a significantly more trustworthy system. This combination doesn't merely detect compromise; it verifies system integrity at multiple levels, so if one layer is breached, the remaining layers still contain the damage and expose the intrusion. The result is a resilient architecture that pairs prevention with ongoing, verifiable assurance, vital for protecting sensitive data and critical infrastructure.

The pursuit of quantum-resistant trusted computing necessitates a fundamental reassessment of established security protocols. This work posits a layered architectural approach, acknowledging that robust integrity verification demands more than algorithmic substitution. It requires a holistic system, meticulously constructed from secure boot through remote attestation. Ada Lovelace observed, “The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform.” This echoes the core principle demonstrated: the architecture doesn’t create security, it faithfully executes the known requirements of a post-quantum world, layering defenses to establish verifiable trust chains. The focus remains on precision and demonstrable correctness, eliminating superfluous complexity.

Future Directions

The presented architecture addresses the immediate threat of quantum-enabled attacks on trusted computing foundations, but it shifts the locus of vulnerability rather than eliminating it. The assumed hardness of Post-Quantum Cryptographic (PQC) algorithms remains, as yet, an assumption. Continued cryptanalysis, and the inevitable discovery of implementation weaknesses, will necessitate adaptable designs: systems that can incorporate algorithm agility without wholesale architectural revisions. The cost of such agility, measured in both performance and complexity, must be minimized; unnecessary complexity is violence against attention.
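One way to get algorithm agility without architectural churn is to route verification through a registry keyed by an algorithm identifier, so that adding or retiring an algorithm becomes a policy change rather than a redesign. The Python sketch below is a hypothetical design, not the paper's. Because the standard library has no post-quantum signature scheme, it registers an HMAC-SHA-384 check purely as a placeholder; HMAC is a symmetric MAC, not a digital signature, and must not be used this way in a real boot chain.

```python
import hashlib
import hmac
from typing import Callable, Dict, Set

# A verifier takes (key, message, signature) and returns True if valid.
Verifier = Callable[[bytes, bytes, bytes], bool]


class VerifierRegistry:
    """Dispatch verification by algorithm ID; retire IDs without code changes."""

    def __init__(self) -> None:
        self._verifiers: Dict[str, Verifier] = {}
        self._allowed: Set[str] = set()

    def register(self, alg_id: str, fn: Verifier) -> None:
        self._verifiers[alg_id] = fn
        self._allowed.add(alg_id)

    def revoke(self, alg_id: str) -> None:
        """Stop accepting an algorithm, e.g. after a cryptanalytic break."""
        self._allowed.discard(alg_id)

    def verify(self, alg_id: str, key: bytes, msg: bytes, sig: bytes) -> bool:
        if alg_id not in self._allowed:
            return False  # unknown or revoked algorithms always fail closed
        return self._verifiers[alg_id](key, msg, sig)


def placeholder_hmac_sha384(key: bytes, msg: bytes, sig: bytes) -> bool:
    """PLACEHOLDER ONLY: stands in for a real signature verifier."""
    expected = hmac.new(key, msg, hashlib.sha384).digest()
    return hmac.compare_digest(expected, sig)
```

A real deployment would register, for example, an ML-DSA or LMS verifier under its own identifier; the boot image header would then carry that identifier alongside the signature.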

A critical, largely unexplored area concerns the interplay between firmware resilience and the physical security of devices. Integrity verification, even with quantum-resistant primitives, can be rendered moot by a compromised supply chain or physical manipulation. Future work must consider hybrid approaches, integrating hardware-based roots of trust with robust, adaptable cryptographic attestation. Density of meaning is the new minimalism; the focus should not be on adding layers, but on refining existing ones.

Ultimately, absolute trust is unattainable. The architecture proposes a demonstrably more resilient system, not an invulnerable one. The field must embrace the inevitability of compromise and prioritize minimizing the blast radius of successful attacks. The true metric of success will not be preventing all breaches, but reducing their frequency, severity, and cost.

Original article: https://arxiv.org/pdf/2601.11095.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 07:41