Author: Denis Avetisyan

A new framework, QSentry, offers a robust defense against subtle backdoor attacks targeting the rapidly evolving field of quantum machine learning.

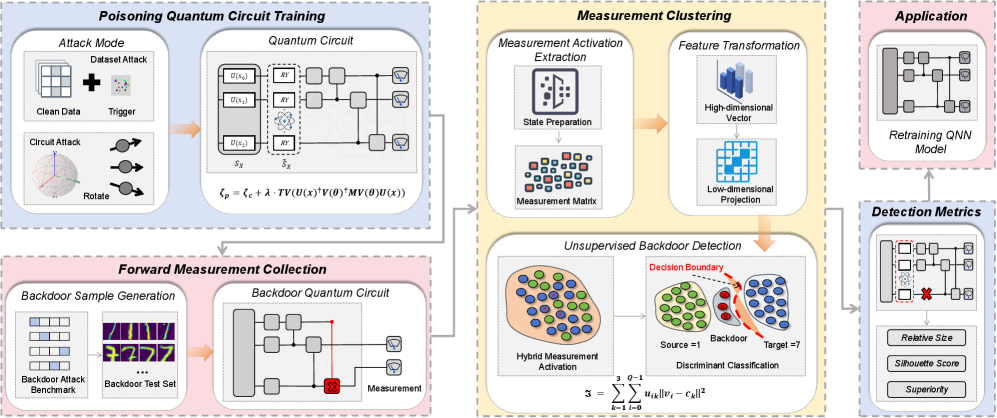

QSentry leverages measurement clustering to identify anomalous behavior and effectively detect backdoors implanted in quantum neural networks.

Despite the promise of enhanced computational power, quantum neural networks remain vulnerable to adversarial manipulation via backdoor attacks, mirroring challenges in classical machine learning. To address this critical security concern, we present ‘QSentry: Backdoor Detection for Quantum Neural Networks via Measurement Clustering’, a novel framework that leverages statistical anomalies in quantum measurement outputs to identify poisoned samples. Through extensive experimentation, QSentry demonstrates superior robustness and accuracy, achieving F1 scores up to 93.2% even at low poisoning rates and outperforming existing detection methods. Could this measurement-based approach pave the way for more resilient and trustworthy quantum machine learning systems?

The Illusion of Quantum Supremacy: A Fragile Foundation

Quantum Neural Networks (QNNs) represent a potentially revolutionary advancement in machine learning, promising exponential speedups for complex computational tasks. However, this power comes with inherent vulnerabilities, as the very principles enabling these speedups also open doors to novel attack vectors. Unlike classical neural networks, QNNs leverage quantum phenomena like superposition and entanglement, making them susceptible to manipulations that exploit these unique properties. Researchers have demonstrated that carefully crafted inputs can disrupt the quantum states within a QNN, leading to misclassifications or even complete control over the network’s output. This isn’t simply a matter of increased computational power for attackers; it’s the emergence of attacks that are fundamentally impossible in the classical realm, demanding entirely new security paradigms to safeguard the integrity and reliability of quantum machine learning systems. The promise of QNNs is substantial, but realizing that potential requires proactive development of defenses against these emerging quantum threats.

Quantum computation’s inherent properties render conventional security protocols inadequate for protecting quantum machine learning models. Unlike classical systems reliant on bits representing 0 or 1, quantum computers utilize qubits, which can exist in a superposition of both states. This superposition, alongside quantum entanglement, allows for exponentially larger computational spaces, but also introduces vulnerabilities that classical cryptography cannot address. Traditional security focuses on preventing unauthorized access to data; however, a malicious actor could subtly manipulate the quantum state during computation, a change that classical monitoring may not detect. Furthermore, the very act of measuring a quantum system disturbs its state (measurement back-action), meaning attempts to verify integrity by inspecting intermediate states can destroy the quantum information being processed. Consequently, new security paradigms that account for quantum principles are essential to safeguard the burgeoning field of quantum machine learning and ensure the reliability of these potentially transformative technologies.

Quantum neural networks, while promising exponential speedups in machine learning, are vulnerable to backdoor attacks that compromise their integrity. These attacks embed malicious functionality into the network during its training phase, allowing an adversary to control the model’s behavior under specific, attacker-defined conditions. The quantum nature of these networks, which rely on superposition and entanglement, makes such hidden triggers exceptionally difficult to detect. An attacker can cause the network to misclassify data or reveal sensitive information when it is presented with a backdoor trigger, a carefully crafted input pattern that activates the embedded malicious behavior. The success of such an attack is measured by the attack success rate: the proportion of triggered inputs for which the malicious behavior is activated, potentially causing significant harm without raising immediate alarms. This presents a critical challenge, as even a low success rate could be devastating in applications like financial modeling or medical diagnosis, demanding robust defenses tailored to the peculiarities of quantum computation.

The effectiveness of backdoor attacks on quantum neural networks is quantified by the Attack Success Rate, a metric indicating how reliably a malicious input triggers the embedded, unintended functionality. Crucially, these attacks depend on a Backdoor Trigger – a specific pattern or feature subtly introduced during the network’s training phase. This trigger, imperceptible in normal operation, acts as a key, activating the malicious behavior when present in an input. Researchers evaluate attack performance by carefully crafting these triggers and assessing how consistently they can manipulate the network’s output, demonstrating the potential for significant compromise even with minimal alterations to the model. A high Attack Success Rate, therefore, signals a substantial vulnerability, highlighting the need for robust defenses against such subtle, yet powerful, threats to quantum machine learning systems.
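As a rough illustration of how these two numbers are usually computed, here is a minimal sketch. The `predict` and `add_trigger` callables, the toy model, and the target label are hypothetical stand-ins for illustration, not anything taken from the QSentry paper.

```python
def clean_accuracy(predict, inputs, labels):
    """Fraction of untouched inputs that the model labels correctly."""
    correct = sum(1 for x, y in zip(inputs, labels) if predict(x) == y)
    return correct / len(inputs)


def attack_success_rate(predict, add_trigger, inputs, labels, target_label):
    """Fraction of triggered inputs that the model maps to the attacker's target.

    Inputs whose true label already equals the target are skipped, because
    predicting the target for them says nothing about the backdoor.
    """
    victims = [x for x, y in zip(inputs, labels) if y != target_label]
    if not victims:
        return 0.0
    hits = sum(1 for x in victims if predict(add_trigger(x)) == target_label)
    return hits / len(victims)


# Hypothetical toy model: the last feature acts as the trigger flag.
def toy_predict(x):
    return 7 if x[-1] == 1.0 else int(x[0])


def toy_trigger(x):
    return x[:-1] + [1.0]


toy_inputs = [[0, 0.0], [1, 0.0], [7, 0.0]]
toy_labels = [0, 1, 7]
acc = clean_accuracy(toy_predict, toy_inputs, toy_labels)
asr = attack_success_rate(toy_predict, toy_trigger, toy_inputs, toy_labels, 7)
```

A stealthy backdoor shows up as exactly this combination: high clean accuracy alongside a high attack success rate.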

The Quantum Circuit: A Stage for Subtle Sabotage

Quantum Neural Networks (QNNs) utilize quantum circuits as their core computational engine, frequently employing Variational Quantum Circuits (VQCs). These circuits consist of parameterized quantum gates, allowing for adjustable parameters that are optimized during the training process. A VQC typically begins with an input encoding step, transforming classical data into a quantum state, followed by a series of parameterized gates – often layers of single- and two-qubit rotations – and concludes with a measurement. The measurement outcome provides the basis for the network’s output, and the parameters are adjusted via a classical optimization loop to minimize a defined loss function. This structure enables QNNs to perform complex computations by leveraging the principles of quantum mechanics, such as superposition and entanglement, within the constraints of near-term quantum hardware.
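The encode, parameterize, measure structure can be sketched with a tiny state-vector simulation in plain Python. Everything about the layout here is an assumption chosen for brevity (two qubits, RY angle encoding, layers of RY rotations followed by a CNOT); it is not the circuit used in the paper, and a real project would normally build this with a framework such as PennyLane.

```python
import math


def ry(theta):
    """2x2 matrix of a single-qubit RY rotation (real-valued)."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]


def apply_1q(state, gate, qubit, n):
    """Apply a single-qubit gate; qubit 0 is the most significant bit."""
    new = [0.0] * len(state)
    shift = n - 1 - qubit
    for i, amp in enumerate(state):
        bit = (i >> shift) & 1
        for b in (0, 1):
            j = (i & ~(1 << shift)) | (b << shift)
            new[j] += gate[b][bit] * amp
    return new


def apply_cnot(state, control, target, n):
    """Flip the target bit of every basis state whose control bit is 1."""
    new = [0.0] * len(state)
    cs, ts = n - 1 - control, n - 1 - target
    for i, amp in enumerate(state):
        j = i ^ (1 << ts) if (i >> cs) & 1 else i
        new[j] = amp
    return new


def vqc_probs(x, weights):
    """Encode x, apply the trainable layers, return measurement probabilities."""
    n = 2
    state = [1.0, 0.0, 0.0, 0.0]  # start in |00>
    for q in range(n):  # input encoding: one RY per feature
        state = apply_1q(state, ry(x[q]), q, n)
    for layer in weights:  # parameterized layers
        for q in range(n):
            state = apply_1q(state, ry(layer[q]), q, n)
        state = apply_cnot(state, 0, 1, n)  # entangling gate
    return [a * a for a in state]  # amplitudes are real here


probs = vqc_probs([0.3, 1.1], [[0.5, -0.2], [0.7, 0.4]])
```

The returned list holds the probabilities of measuring 00, 01, 10 and 11. A classical optimizer would adjust `weights` to minimize a loss computed from these probabilities.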

Attack vectors against Quantum Neural Networks (QNNs) primarily manifest as either data poisoning or direct circuit manipulation. Data poisoning involves injecting maliciously crafted data points into the training dataset, subtly altering the learned parameters of the quantum circuit without immediately causing detectable performance degradation on benchmark datasets. Direct circuit manipulation entails modifying the quantum circuit’s structure or gate parameters after training, potentially introducing backdoors or altering the model’s functionality. Both approaches aim to compromise the model’s integrity by embedding unintended behavior, which can be triggered by specific, attacker-defined inputs, while maintaining acceptable performance on standard evaluation metrics to evade detection. Successful exploitation allows attackers to influence model predictions without raising immediate alarms.
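The data-poisoning route can be sketched as follows. The trigger (a bright square in the bottom-right corner), the function names, and the relabeling rule are illustrative assumptions, not the attack evaluated in the paper; direct circuit manipulation is not covered by this sketch.

```python
import random


def add_trigger(image, patch_value=1.0, patch_size=2):
    """Return a copy of the image with a bright square in the bottom-right corner."""
    poisoned = [row[:] for row in image]
    for r in range(-patch_size, 0):
        for c in range(-patch_size, 0):
            poisoned[r][c] = patch_value
    return poisoned


def poison_dataset(images, labels, rate, target_label, seed=0):
    """Stamp the trigger on a random fraction of samples and relabel them.

    Returns new lists plus the sorted indices of the poisoned samples;
    the input lists are left untouched.
    """
    rng = random.Random(seed)
    n_poison = int(round(rate * len(images)))
    chosen = set(rng.sample(range(len(images)), n_poison))
    new_images, new_labels = [], []
    for i, (img, lab) in enumerate(zip(images, labels)):
        if i in chosen:
            new_images.append(add_trigger(img))
            new_labels.append(target_label)
        else:
            new_images.append([row[:] for row in img])
            new_labels.append(lab)
    return new_images, new_labels, sorted(chosen)


# Hypothetical toy data: twenty blank 4x4 "images" with labels 0-9.
images = [[[0.0] * 4 for _ in range(4)] for _ in range(20)]
labels = [i % 10 for i in range(20)]
p_images, p_labels, p_idx = poison_dataset(images, labels, rate=0.1, target_label=7)
```

Because only a small fraction of samples is altered, a model trained on the result can still score well on clean benchmark data.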

Clean Accuracy is the model’s performance on unperturbed, correctly labeled data. A crude attack degrades it visibly, and any unexplained drop in Clean Accuracy signals a failure in the model’s robustness and reliability. A well-crafted backdoor, by contrast, keeps Clean Accuracy close to that of an uncompromised model and alters predictions only for specific, targeted inputs, so the compromise does not show up on the benchmark datasets used during training and evaluation. The degree of any accuracy loss depends on the attack vector employed and the magnitude of the perturbation. The consequences depend on the QNN’s application, ranging from subtle performance degradation to complete system failure.

Adversarial attacks on Quantum Neural Networks (QNNs) employing hidden functionality aim to subtly alter the quantum circuit’s behavior to introduce a backdoor or trigger specific outputs under predefined, attacker-controlled conditions. Crucially, these attacks are designed to maintain high accuracy on benchmark datasets, preventing immediate detection through standard performance evaluations. This is achieved by minimizing the impact on the model’s learned representations for common inputs, effectively masking the embedded malicious functionality. The attacker’s goal is not to degrade overall performance, but to create a specific, controllable failure mode activated by a unique input pattern, enabling data exfiltration or model manipulation without raising suspicion during routine testing.

QSentry: A Quantum Sentinel Against Deception

QSentry is a framework for detecting backdoor attacks that specifically target Quantum Neural Networks (QNNs). It uses measurement clustering to analyze the behavior of a QNN without requiring prior knowledge of the attack vector or backdoor implementation. Unlike traditional methods that rely on known attack signatures, QSentry examines anomalies within the QNN’s measurement layers to identify potentially malicious modifications. The framework operates on the measurement outputs produced during computation, providing a means of identifying subtle deviations indicative of a compromised network. By clustering these outputs, QSentry aims to isolate and flag samples affected by backdoor injections, offering a proactive defense against adversarial attacks on quantum machine learning systems.

Measurement Clustering, as employed by QSentry, operates on the output probabilities generated by the measurement layers of a Quantum Neural Network (QNN). This technique groups data points – representing QNN outputs – based on their similarity in Hilbert space. Anomalies indicative of backdoor attacks manifest as distinct clusters separate from the normal data distribution. The underlying principle is that a poisoned QNN will produce atypical measurement results when presented with maliciously crafted inputs, leading to the formation of these outlier clusters. By analyzing the characteristics and density of these clusters, QSentry identifies potential attacks without requiring prior knowledge of the specific backdoor trigger or implementation details.
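To make the idea concrete, here is a minimal sketch that clusters measurement probability vectors and flags the smaller group. The article does not specify the paper’s actual clustering algorithm, features, or decision rule, so the choice of two-cluster k-means, the Euclidean distance, and the “minority cluster is suspicious” rule are all assumptions made for illustration.

```python
import math


def dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mean(points):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def two_means(points, iters=20):
    """Plain k-means with k=2 and a deterministic farthest-point start."""
    c0 = points[0]
    c1 = max(points, key=lambda p: dist(p, c0))
    centroids = [list(c0), list(c1)]
    assign = [0] * len(points)
    for _ in range(iters):
        assign = [
            0 if dist(p, centroids[0]) <= dist(p, centroids[1]) else 1
            for p in points
        ]
        for k in (0, 1):
            members = [p for p, a in zip(points, assign) if a == k]
            if members:
                centroids[k] = mean(members)
    return assign


def flag_minority_cluster(points):
    """Return indices of the samples that fall in the smaller cluster."""
    assign = two_means(points)
    sizes = [assign.count(0), assign.count(1)]
    minority = 0 if sizes[0] < sizes[1] else 1
    return [i for i, a in enumerate(assign) if a == minority]


# Hypothetical measurement probabilities [P(0), P(1)] for ten samples.
outputs = [
    [0.90, 0.10], [0.88, 0.12], [0.92, 0.08], [0.91, 0.09],
    [0.87, 0.13], [0.89, 0.11], [0.93, 0.07], [0.90, 0.10],
    [0.20, 0.80], [0.25, 0.75],  # atypical outputs
]
suspects = flag_minority_cluster(outputs)
```

In this toy data the last two samples sit far from the rest, so they end up alone in the smaller cluster and are the ones flagged.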

The Silhouette Coefficient, ranging from -1 to 1, quantitatively assesses the quality of clusters generated by QSentry, indicating how well each sample fits within its assigned cluster compared to neighboring clusters. In experiments involving a 1% poisoning rate (where 1% of the training data is maliciously modified to introduce a backdoor), samples compromised by the backdoor consistently exhibit Silhouette Coefficient values between 0.36 and 0.45. This range differentiates backdoor samples from benign data, which typically show higher coefficients, enabling QSentry to identify potential attacks based on cluster quality. Lower values indicate that a sample fits its cluster poorly, suggesting anomalous behavior consistent with a backdoor insertion.
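For reference, the standard per-sample definition is s = (b - a) / max(a, b), where a is the mean distance from the sample to the other members of its own cluster and b is the smallest mean distance to the members of any other cluster. The sketch below implements that textbook formula with Euclidean distance; how QSentry computes or thresholds it is not stated here, and the toy points are made up.

```python
import math


def dist(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def silhouette_samples(points, labels):
    """Per-sample silhouette scores; needs at least two distinct labels."""
    scores = []
    for i, p in enumerate(points):
        own = [
            dist(p, q)
            for j, q in enumerate(points)
            if labels[j] == labels[i] and j != i
        ]
        if not own:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        a = sum(own) / len(own)
        b = min(
            sum(dist(p, q) for q, lab in zip(points, labels) if lab == other)
            / labels.count(other)
            for other in set(labels)
            if other != labels[i]
        )
        denom = max(a, b)
        scores.append(0.0 if denom == 0 else (b - a) / denom)
    return scores


# Two well-separated toy clusters on a line.
good = silhouette_samples([[0.0], [1.0], [10.0], [11.0]], [0, 0, 1, 1])

# Same idea, but the last point is assigned to the wrong cluster.
bad = silhouette_samples([[0.0], [1.0], [10.0], [0.5]], [0, 0, 1, 1])
```

In the first case every score is close to 1; in the second, the misassigned point gets a negative score, which is the kind of poor fit the paragraph above describes.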

QSentry distinguishes itself from existing backdoor detection techniques by operating without requiring prior knowledge of the specific backdoor implementation or trigger. This capability comes from clustering measurement-layer outputs and analyzing the resulting anomalies, enabling the detection of diverse attack vectors without needing predefined signatures. Benchmarking demonstrates QSentry consistently surpasses the F1 scores and detection accuracy of state-of-the-art methods, indicating improved generalization and robustness against unknown attack strategies. This approach offers a significant advantage in practical scenarios where the characteristics of potential backdoors are not fully known or readily available for analysis.
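Since the comparison rests on F1 scores, it helps to recall how a detector’s F1 is computed from the samples it flags. The sketch below uses the standard definitions of precision, recall and F1; the index lists are made-up examples, not results from the paper.

```python
def detection_f1(flagged, poisoned):
    """Precision, recall and F1 of a detector, given sample indices.

    flagged:  indices the detector marked as poisoned
    poisoned: indices that really are poisoned (ground truth)
    """
    flagged, poisoned = set(flagged), set(poisoned)
    tp = len(flagged & poisoned)  # correctly flagged
    fp = len(flagged - poisoned)  # clean samples flagged by mistake
    fn = len(poisoned - flagged)  # poisoned samples that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical example: 4 samples flagged, 5 truly poisoned, 3 in common.
precision, recall, f1 = detection_f1([1, 2, 3, 4], [2, 3, 4, 5, 6])
```

F1 is the harmonic mean of precision and recall, so a detector cannot score well by flagging everything or by flagging almost nothing.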

Beyond Single Sentinels: A Multi-Layered Defense

Beyond traditional methods, a suite of innovative techniques is emerging to counter the insidious threat of backdoor attacks in machine learning systems. Approaches such as Distill to Detect, MSPC (Minimal Sufficient Partitioning for Clean Data), and Q-Detection each offer distinct strategies for identifying compromised models. Distill to Detect focuses on analyzing the knowledge transferred during model training to pinpoint anomalies indicative of a backdoor, while MSPC aims to isolate and validate clean data subsets to rebuild a trustworthy model. Q-Detection, leveraging quantum-inspired principles, seeks patterns in model behavior that deviate from normal operation, signaling the presence of malicious modifications. These alternative detection methods are not meant to replace existing defenses, but rather to provide complementary layers of security, enhancing the overall resilience of machine learning applications against increasingly sophisticated attacks.

A robust security posture for quantum machine learning necessitates more than a single defense; instead, a layered approach, integrating techniques like Distill to Detect, MSPC, Q-Detection, and QSentry, offers significantly improved protection. Each method addresses vulnerabilities from a unique perspective – some focus on identifying anomalous weight distributions, while others analyze activation patterns or clustering behavior. By deploying these complementary strategies in concert, systems can withstand a wider range of backdoor attacks and maintain reliable performance even when compromised samples are present. This multi-faceted defense increases the difficulty for attackers, demanding they overcome multiple layers of scrutiny, and enhances the overall resilience of quantum machine learning applications against adversarial threats.

The handwritten digit dataset, MNIST, serves as a foundational benchmark for evaluating the performance of backdoor detection algorithms in quantum machine learning. Its relatively simple structure – comprising 70,000 grayscale images of digits from 0 to 9 – allows researchers to rapidly prototype and assess the efficacy of novel defenses like Distill to Detect and QSentry. By introducing controlled “backdoor” triggers – subtle perturbations designed to mislead the model – into the MNIST training data, scientists can rigorously test whether these detection methods accurately identify poisoned samples. The widespread availability and ease of use of MNIST facilitates reproducible research and allows for direct comparison between different algorithmic approaches, accelerating the development of robust security measures for quantum machine learning systems.

Securing quantum machine learning applications necessitates the implementation of robust defense mechanisms, and frameworks like PennyLane are increasingly utilized to build these safeguards. Research highlights the critical role of techniques such as QSentry, which demonstrates a strong correlation between cluster size analysis and the presence of backdoor attacks – effectively quantifying the extent of data poisoning. Specifically, QSentry consistently predicts the proportion of malicious samples with notable accuracy; for example, when 10% of the training data is intentionally poisoned, the algorithm reliably identifies a cluster size ranging from 52 to 63, indicating a consistent and stable detection rate. This precise relationship between predicted cluster size and actual poisoning levels offers a powerful tool for proactively identifying and mitigating threats to quantum machine learning systems, bolstering their overall security and reliability.

The presented framework, QSentry, analyzes quantum neural network behavior via measurement clustering, revealing anomalies indicative of adversarial manipulation. This approach echoes a remark commonly attributed to Niels Bohr, that the opposite of a profound truth may well be another profound truth. QSentry doesn’t seek a singular ‘correct’ state but instead identifies deviations from established measurement patterns within a spectrum of permissible quantum states. The success of this method relies on discerning subtle variations within the probabilistic landscape of quantum computation, thereby detecting even sophisticated backdoor attacks that might otherwise remain concealed within the system’s inherent uncertainty. The detection of these anomalies, much like Bohr’s remark, highlights that alternate interpretations, including adversarial inputs, can equally describe the observed data.

Where Do We Go From Here?

The introduction of QSentry, a framework for detecting adversarial incursions into quantum neural networks via measurement clustering, feels less like a solution and more like a carefully constructed observation post. It reveals the vulnerabilities inherent in these systems, but also highlights how readily those vulnerabilities can be obscured by the very principles that promise quantum advantage. The cosmos generously shows its secrets to those willing to accept that not everything is explainable; QSentry, while effective, is still bound by the limitations of classical analysis imposed upon a quantum substrate.

Future work will undoubtedly focus on refining measurement strategies and clustering algorithms, seeking ever-greater fidelity in anomaly detection. However, a more profound challenge lies in addressing the fundamental question of trust. Can any defense truly anticipate all possible attack vectors, or are these systems destined to be perpetually reactive, forever chasing shadows cast by increasingly sophisticated adversaries? Black holes are nature’s commentary on our hubris; the pursuit of perfect security in a quantum realm may be a similar exercise in futility.

Perhaps the most fruitful avenue for exploration isn’t stronger defenses, but rather architectures inherently resistant to manipulation. Systems that embrace inherent uncertainty, that leverage quantum randomness not as a weakness to be overcome, but as a feature to be exploited. To build not a fortress, but a mirage – something that appears solid to the attacker, yet dissolves upon closer inspection.

Original article: https://arxiv.org/pdf/2511.15376.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-20 22:40