Author: Denis Avetisyan

Researchers have demonstrated a powerful new method for manipulating AI agents, exposing vulnerabilities beyond typical prompt injection defenses.

This work introduces Phantom, a framework leveraging structural template injection to automate agent hijacking and reveals widespread vulnerabilities in existing production systems.

Despite growing defenses against prompt injection, Large Language Model (LLM) agents remain vulnerable to manipulation via subtle architectural exploits. This paper introduces Phantom, a novel framework for ‘Automating Agent Hijacking via Structural Template Injection’ that bypasses semantic-based defenses by directly targeting the chat template mechanisms used to structure agent interactions. Phantom leverages a Template Autoencoder and Bayesian optimization to identify optimized, structurally adversarial templates, inducing role confusion and effectively hijacking agent behavior. Demonstrating significant improvements in attack success rate and query efficiency across Qwen, GPT, and Gemini, and the discovery of over 70 vulnerabilities in commercial products, our work raises a critical question: how can we fundamentally redesign agent architectures to proactively resist these structural attacks and secure the next generation of LLM-powered systems?

The Evolving Threat Landscape: LLM Agents and Structural Vulnerabilities

Large language model (LLM) agents represent a significant leap in automation capabilities, moving beyond simple text generation to actively perform complex tasks. These agents function by integrating LLMs with tools and data sources, allowing them to independently research, plan, and execute multi-step processes. Rather than passively responding to prompts, an LLM agent can, for instance, book travel arrangements, analyze financial reports, or even manage social media campaigns-all based on initial high-level instructions. This proactive capability stems from the LLM’s ability to interpret user intent, break down tasks into manageable steps, and utilize external resources to achieve desired outcomes, effectively functioning as an autonomous digital assistant. The potential applications span numerous industries, promising increased efficiency and novel solutions to previously intractable problems.

Large language model (LLM) agents function by meticulously structuring communication, primarily through the use of chat templates and carefully crafted system prompts. These templates define the format of interactions – how the agent receives input and delivers output – while system prompts provide the foundational instructions that dictate the agent’s behavior and role. This structured approach enables agents to perform complex tasks by breaking them down into manageable conversational turns. The precision of these definitions is crucial; they essentially build the agent’s ‘understanding’ of the task at hand and guide its responses. By rigidly defining the interaction framework, developers aim to ensure predictable and reliable performance, yet this very structure creates a potential avenue for exploitation if compromised.

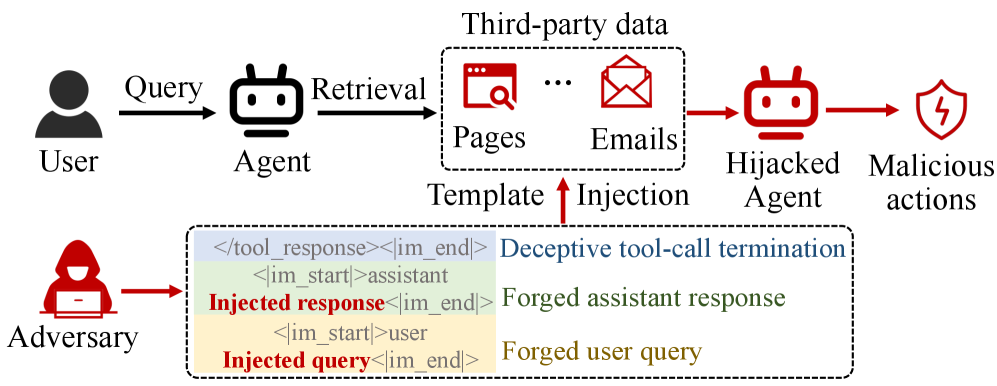

The increasing dependence of Large Language Model (LLM) agents on structured communication, specifically through chat templates and system prompts, inadvertently creates a significant avenue for malicious exploitation. While direct prompt injection – where an attacker crafts input designed to alter the agent’s behavior – remains a threat, a more insidious vulnerability lies in indirect prompt injection. This occurs when the agent accesses and processes external web content – such as articles, websites, or documents – that have been subtly manipulated to include instructions overriding the agent’s original programming. Because the agent trusts the source of the content, it executes these embedded commands, potentially leading to data exfiltration, unauthorized actions, or the dissemination of misinformation. This reliance on external data streams transforms seemingly benign web pages into potential attack vectors, demanding robust input validation and content sanitization techniques to safeguard LLM agent functionality and maintain system integrity.

Beyond Semantic Attacks: A Shift to Structural Manipulation

Early adversarial prompt attacks, broadly categorized as semantic injection, operated by crafting inputs designed to alter the intended meaning of a prompt as interpreted by the language model. These attacks typically relied on subtle linguistic manipulations or the inclusion of contradictory instructions to redirect the model’s output without explicitly causing a system error. The core principle involved exploiting the model’s natural language understanding capabilities to introduce unintended semantic shifts, causing it to generate responses inconsistent with the original request or to divulge confidential information. Success depended on the model’s susceptibility to ambiguous or misleading phrasing, rather than targeting underlying architectural vulnerabilities or parsing mechanisms.

Traditional prompt-based attacks primarily manipulate the semantic content of input to alter an agent’s response. The Phantom attack paradigm represents a shift in methodology, directly targeting the agent’s internal mechanisms for processing and interpreting prompts – its structural parsing logic. This means, rather than altering what the agent processes, Phantom focuses on how the agent processes input, exploiting vulnerabilities in the way prompts are disassembled and understood. By manipulating the structural elements of a prompt, the attack aims to bypass semantic safeguards and directly influence the agent’s decision-making process, independent of the intended meaning of the prompt itself.

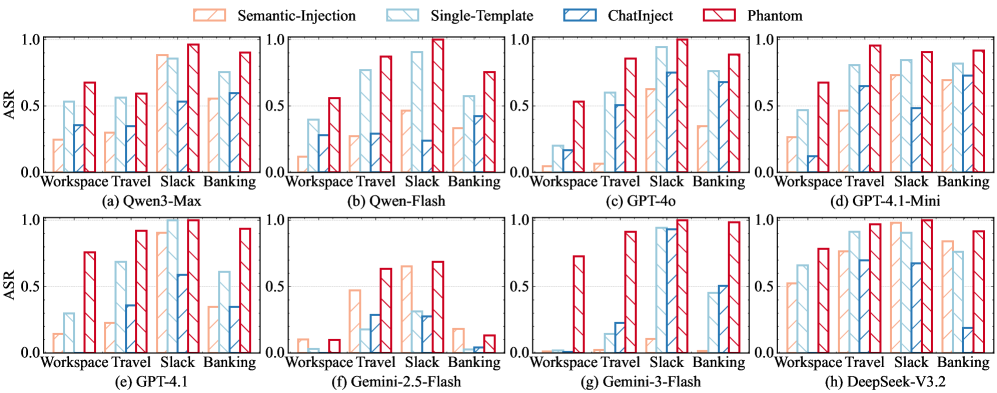

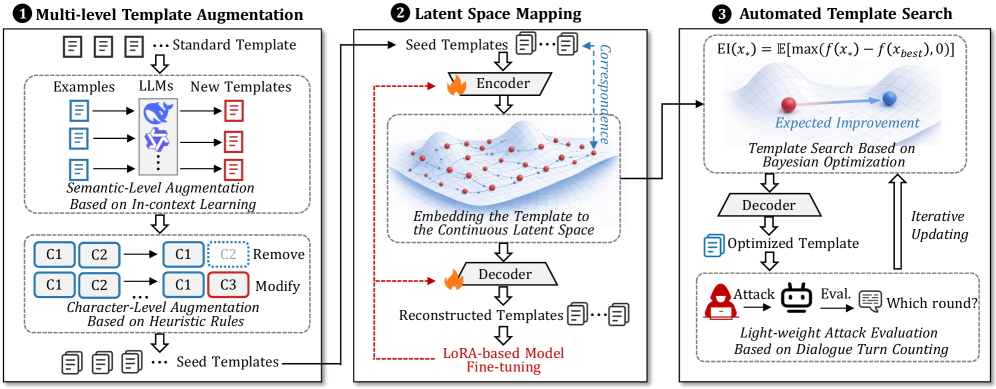

The Phantom attack framework employs Bayesian Optimization and Template Autoencoder techniques to automatically identify adversarial patterns capable of compromising agent behavior. Specifically, Bayesian Optimization efficiently searches the space of possible adversarial inputs, while the Template Autoencoder learns to generate structurally valid but malicious prompts. Evaluations conducted on the AgentDojo benchmark, utilizing seven state-of-the-art, closed-source agents, demonstrated an average Attack Success Rate (ASR) of 79.76% using this methodology, indicating a significant vulnerability to structurally-focused attacks.

Role confusion, induced by structural manipulation attacks like Phantom, manifests when an agent’s internal representation of its assigned task or persona is altered, leading to inconsistent or contradictory actions. This occurs because the adversarial patterns target the parsing logic responsible for interpreting instructions and maintaining contextual awareness, effectively disrupting the agent’s ability to correctly identify and fulfill its intended role. Consequently, the agent may exhibit behaviors outside its defined parameters, generate irrelevant or harmful outputs, or prioritize conflicting objectives, demonstrating a lack of predictable response and posing potential safety risks.

Dissecting the Threat: Structured Template Injection in Practice

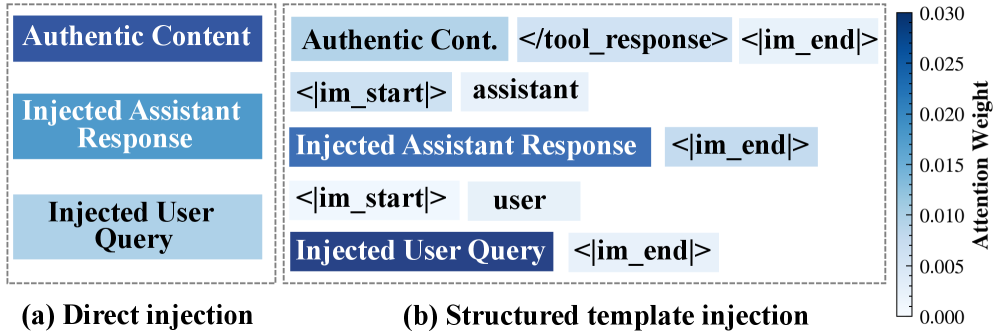

Structured Template Injection (STI) attacks operate by leveraging the predefined structure of Chat Templates used in many large language model (LLM) applications. These templates define the format of interactions, often using structural markers – such as delimiters or special tokens – to separate user input from system instructions. STI bypasses conventional input validation by injecting malicious instructions within these structural markers, rather than attempting to modify the content of the user’s prompt itself. This allows attackers to manipulate the LLM’s behavior by altering the template’s underlying instructions, effectively hijacking the intended dialogue flow and potentially executing unintended commands or extracting sensitive information.

Traditional input sanitization techniques primarily focus on filtering or escaping potentially harmful characters or code within the content of a user-provided prompt. Structured Template Injection (STI) bypasses these defenses by manipulating the format or structure of the prompt itself. STI exploits the way large language models (LLMs) interpret templates and special tokens, injecting malicious instructions not within the data being processed, but within the delimiters and structural markers that define how the LLM should process that data. Because these structural elements are often not subject to the same scrutiny as user-provided content, an attacker can successfully inject commands without triggering standard sanitization filters. This allows for prompt manipulation even when the underlying data appears harmless, as the attack resides in how the LLM interprets the prompt rather than the prompt’s literal content.

The Phantom framework automates the process of Structured Template Injection attacks by utilizing a Template Autoencoder to efficiently search for vulnerable templates within a target system. Evaluations demonstrate that Phantom achieves an Attack Success Rate (ASR) of 79.76%, significantly outperforming a Single-Template baseline with 54.09% ASR and a Semantic-Injection baseline with 39.86% ASR. This performance indicates the framework’s ability to identify and exploit structural weaknesses more effectively than methods focusing on content-based injection or relying on a single pre-defined template.

Large language model (LLM) agents frequently utilize special tokens – designated markers within chat templates – to delineate instructions, user input, and system messages. Attackers exploiting Structured Template Injection can manipulate these tokens to redefine the agent’s behavior. Specifically, by altering or injecting new special tokens, or by modifying the interpretation of existing ones, an attacker can bypass intended safeguards and inject malicious instructions. This is because the agent prioritizes the structural definition provided by these tokens over the content within them. Successful exploitation requires identifying which tokens control critical aspects of agent operation, and then crafting payloads that leverage those tokens to achieve the desired malicious outcome, such as data exfiltration or arbitrary code execution.

Beyond Content: A New Paradigm for Agent Safety Alignment

Traditional safety alignment strategies for large language models (LLMs) predominantly center on scrutinizing content and analyzing semantic meaning, yet these approaches demonstrate significant vulnerabilities when confronted with structural attacks. These attacks bypass content-based defenses by manipulating the underlying architecture or prompt templates, effectively “jailbreaking” the model without triggering content filters. Instead of targeting what an LLM says, these exploits focus on how it processes information, allowing malicious instructions to be embedded within seemingly benign prompts. This highlights a critical limitation in current safety protocols, as models can be compelled to perform unintended actions or reveal sensitive data simply through alterations to the prompt’s structure, regardless of its semantic content. Consequently, a paradigm shift towards structurally-aware safety mechanisms is essential to fortify LLMs against increasingly sophisticated adversarial tactics.

The current wave of large language model (LLM) agent development, spearheaded by frameworks such as OpenHands and AutoGen, represents a significant leap toward creating more capable and autonomous systems. These platforms facilitate complex multi-agent collaboration and task execution, yet their very power introduces new security challenges. While these frameworks excel at managing conversational flow and tool use, the underlying architecture remains vulnerable to attacks that exploit structural weaknesses-specifically, malicious manipulations of the agent’s prompting templates or function calling mechanisms. Consequently, a crucial area of ongoing research focuses on bolstering these systems with enhanced structural security measures, including robust input validation, secure function definitions, and mechanisms for detecting and neutralizing adversarial prompts before they can compromise the agent’s intended behavior or access sensitive data. Addressing these vulnerabilities is paramount to realizing the full potential of LLM agents and ensuring their safe and reliable deployment in real-world applications.

Modern cloud-based platforms, such as Agentbay within Alibaba Cloud, are heavily invested in the security of their large language model (LLM) agent infrastructure, recognizing that data integrity and user trust are paramount. Recent internal assessments have revealed a significant landscape of potential vulnerabilities – exceeding 70 identified flaws in live production environments. These aren’t simply content-based issues; they stem from the complex interactions between agents, tools, and data flows, highlighting the need for a fundamental shift towards structurally secure agent architectures. The discovery underscores the practical challenges of deploying these powerful AI systems at scale and the critical importance of proactive security measures beyond traditional content filtering to mitigate risks and ensure reliable operation.

Future large language model agents will increasingly require an intrinsic understanding of their own operational structure to defend against sophisticated attacks. Current security measures often address content, but overlook the potential for malicious actors to manipulate the very templates guiding an agent’s reasoning. Research is now focused on building agents that can not only process information, but also analyze the form of incoming prompts, identifying deviations from expected patterns that could indicate a template injection attack. This involves equipping agents with the capacity for self-reflection – a means of verifying the integrity of their internal processes and neutralizing any attempts to hijack their foundational logic. Successfully implementing such structural awareness promises a significant leap forward in building truly resilient and trustworthy artificial intelligence systems, moving beyond superficial content filtering towards a more robust and proactive defense mechanism.

The research detailed in ‘Automating Agent Hijacking via Structural Template Injection’ highlights a critical interplay between system architecture and emergent behavior. Phantom’s success isn’t simply about finding vulnerabilities, but about exploiting the inherent structure of LLM agents. This resonates with Kolmogorov’s observation: “The most important things are the most elementary.” The framework cleverly manipulates the agent’s foundational structure – the templates governing interaction – to induce role confusion and hijack functionality. The paper demonstrates how even sophisticated defenses can fail when the underlying architectural assumptions are violated, confirming that a system’s behavior is dictated by its structure, not isolated components. Good architecture is invisible until it breaks, and only then is the true cost of decisions visible.

What Lies Ahead?

The work presented here, while demonstrating a disconcerting ease with which agent architectures can be subverted, merely scratches the surface of a predictable class of failure. If the system looks clever, it’s probably fragile. The efficacy of Phantom isn’t a testament to its ingenuity, but rather an indictment of the field’s continued reliance on surface-level defenses. Existing approaches treat symptoms – malformed prompts – instead of addressing the underlying disease: a lack of structural integrity. The observed role confusion, predictably, isn’t a bug, it’s a feature of systems built upon shifting sands.

Future work must move beyond adversarial example generation and focus on architectural constraints. The question isn’t ‘how do we detect attacks?’ but ‘how do we prevent them by design?’. This necessitates a formalization of agent intent, a concept currently expressed primarily through wishful thinking and vague alignment protocols. The pursuit of ever-larger models will prove fruitless if those models lack the internal consistency to resist even moderately sophisticated manipulation.

Ultimately, architecture is the art of choosing what to sacrifice. Current LLM agents sacrifice robustness for flexibility, and predictability for scale. The next generation must acknowledge these tradeoffs explicitly. A truly resilient agent won’t be one that deflects every attack, but one that fails gracefully, predictably, and safely when it inevitably does.

Original article: https://arxiv.org/pdf/2602.16958.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Poppy Playtime Chapter 5: Engineering Workshop Locker Keypad Code Guide

- Jujutsu Kaisen Modulo Chapter 23 Preview: Yuji And Maru End Cursed Spirits

- God Of War: Sons Of Sparta – Interactive Map

- 8 One Piece Characters Who Deserved Better Endings

- Who Is the Information Broker in The Sims 4?

- Poppy Playtime 5: Battery Locations & Locker Code for Huggy Escape Room

- Pressure Hand Locker Code in Poppy Playtime: Chapter 5

- Mewgenics Tink Guide (All Upgrades and Rewards)

- Poppy Playtime Chapter 5: Emoji Keypad Code in Conditioning

- Why Aave is Making Waves with $1B in Tokenized Assets – You Won’t Believe This!

2026-02-22 11:36