Author: Denis Avetisyan

This review explores the challenges and solutions for building robust rate limiting systems capable of handling massive traffic spikes.

A deep dive into algorithms, architectural patterns, and distributed implementations leveraging Redis and Lua scripting for high availability.

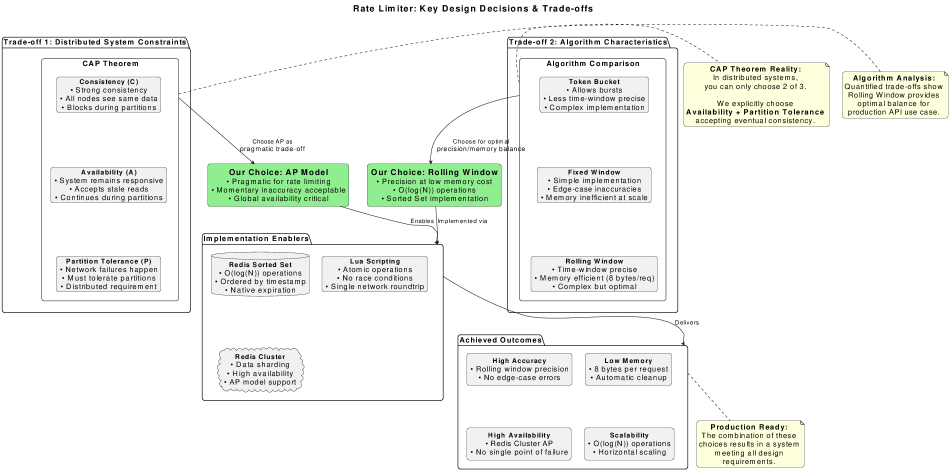

Achieving both accuracy and scalability in rate limiting presents a fundamental challenge in modern distributed systems. This is addressed in ‘Designing Scalable Rate Limiting Systems: Algorithms, Architecture, and Distributed Solutions’, which details a production-ready architecture leveraging Redis Sorted Sets and Lua scripting to efficiently manage request rates. The core contribution lies in quantifying the trade-offs between accuracy and memory usage for a rolling window algorithm-compared to token bucket and fixed window approaches-while prioritizing availability and partition tolerance as dictated by the CAP theorem. How can such a system be further optimized to handle increasingly complex and dynamic rate limiting requirements in cloud-native environments?

Decoding the System: Why Rate Limiting Matters

Contemporary distributed systems are increasingly challenged by escalating user demand and the proliferation of interconnected services. This relentless growth necessitates proactive traffic regulation to prevent system overload and maintain operational stability. Without effective controls, a surge in requests can quickly exhaust critical resources – such as processing power, memory, and network bandwidth – leading to degraded performance, service outages, and a compromised user experience. Sophisticated rate limiting mechanisms act as a crucial defense, intelligently managing incoming traffic by restricting the number of requests accepted within a specific timeframe. This ensures equitable resource allocation, protects vital services from malicious attacks, and ultimately guarantees a reliable and responsive system, even under peak load conditions.

Without effective controls, a surge in traffic can quickly overwhelm system resources, leading to performance degradation and even complete service outages. This resource exhaustion manifests as slowed response times, failed requests, and ultimately, an inability to serve legitimate users. Consequently, robust rate limiting mechanisms are no longer simply a best practice, but a necessity for maintaining availability and a positive user experience. These mechanisms intelligently monitor and restrict the rate of incoming requests, preventing any single source from monopolizing resources and ensuring equitable access for all. By proactively managing traffic flow, systems can gracefully handle peak loads and maintain stability, safeguarding against denial-of-service attacks and unintentional overload scenarios.

The Building Blocks: Algorithms and Data Structures

Rate limiting algorithms such as Fixed Window Counter, Token Bucket, and Rolling Window each present distinct performance characteristics. The Fixed Window Counter is the simplest to implement, tracking requests within discrete time windows, but suffers from potential burst traffic at window boundaries. The Token Bucket algorithm provides smoother rate limiting by replenishing tokens at a fixed rate, allowing bursts up to the bucket size, but requires careful parameter tuning. Rolling Window algorithms offer improved accuracy by considering a sliding window of time, averaging the request rate over that period, though they introduce greater computational complexity due to the need to maintain and query a continuously updated window of timestamps or request counts; the choice of algorithm depends on the specific application’s tolerance for burstiness versus computational cost.

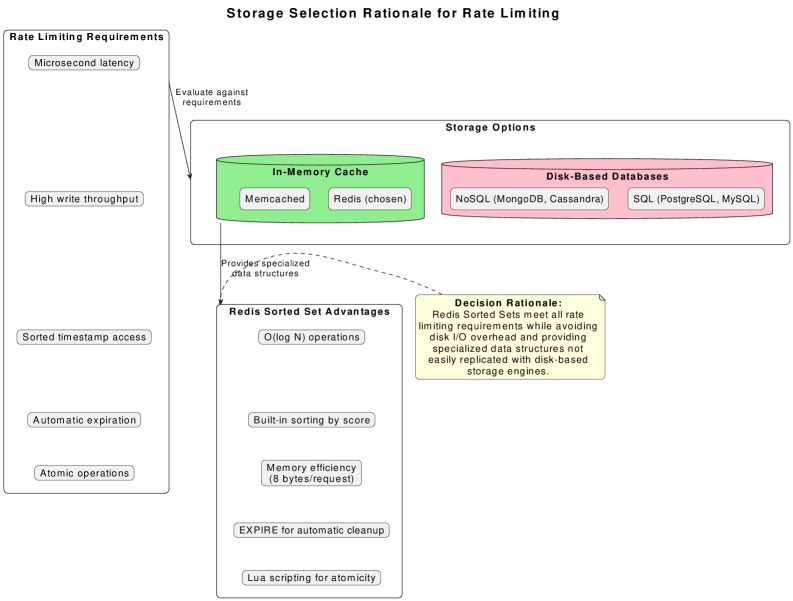

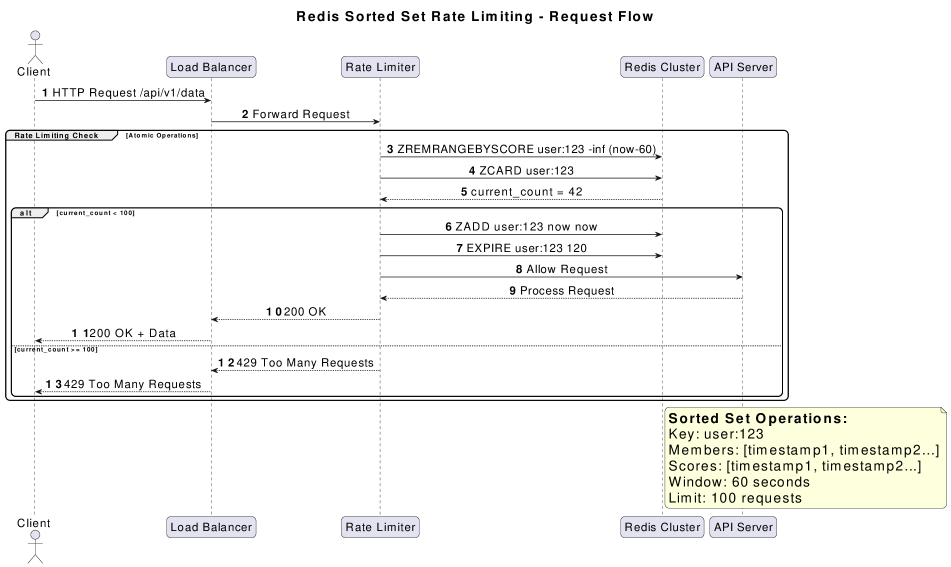

Redis Sorted Sets are particularly well-suited for rate limiting algorithms due to their ability to store members associated with a score, and retrieve them efficiently by score range. In the context of rate limiting, the member can represent a request identifier, and the score can represent the request’s timestamp. This allows for efficient identification of requests falling within a specific time window – essential for Fixed Window Counter, Token Bucket, and Rolling Window algorithms. The inherent sorting of the set, combined with Redis’s ability to quickly retrieve elements within a score range, enables O(log(n)) time complexity for key operations like determining if a request should be allowed or rejected, and for cleaning up expired requests based on their timestamps. This contrasts with using lists or other data structures which would necessitate iteration and significantly increase computational cost for timestamp-based calculations.

Redis, being a single-threaded application, avoids many concurrency issues by default; however, implementing rate limiting requires multiple operations – incrementing a counter, checking against a limit, and potentially resetting the counter – which, when executed as separate commands, are not atomic. Lua scripting within Redis allows these operations to be encapsulated within a single script executed atomically by the Redis server. This atomicity is critical because it prevents race conditions where concurrent requests could bypass the rate limit due to interleaved execution of individual commands. Without atomic operations, multiple clients could simultaneously read the counter value, determine it’s below the limit, and then increment it before any other client has a chance, leading to an inaccurate count and potential service abuse. Lua scripting ensures that the entire rate limiting logic is executed as a single, indivisible unit, guaranteeing data consistency and accurate enforcement of limits in a concurrent environment.

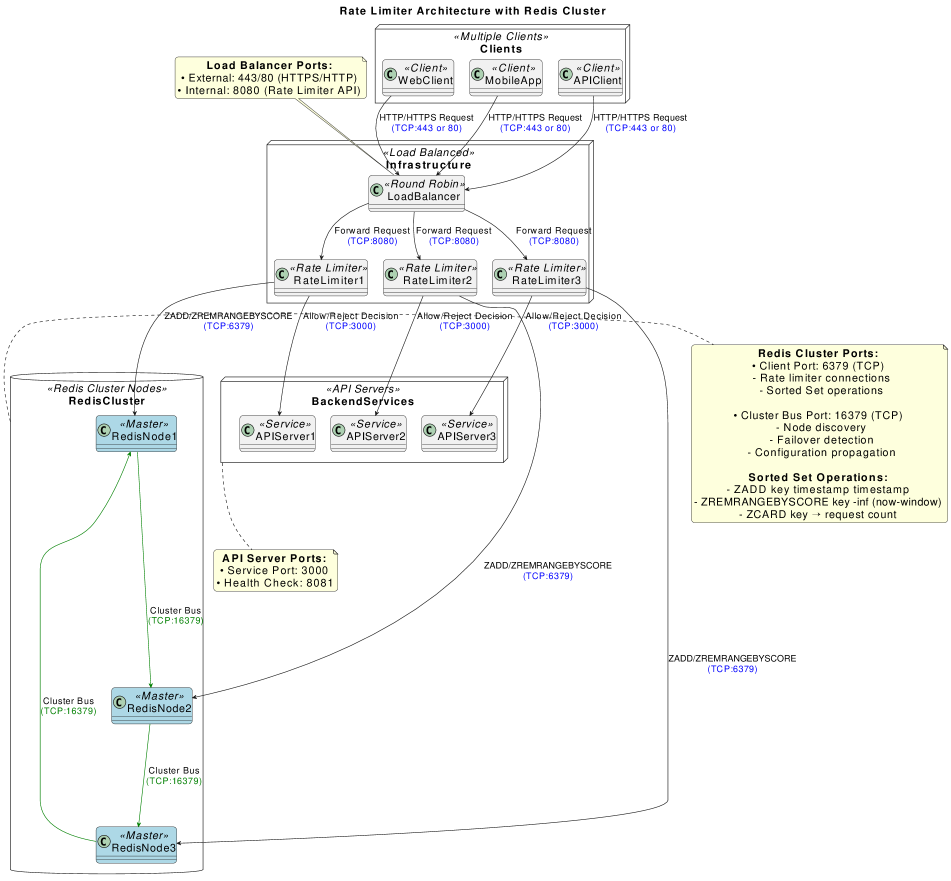

Scaling the Walls: High Availability with Redis Cluster

Redis Cluster implements horizontal scalability by automatically sharding data across multiple Redis nodes. This distributed architecture allows rate limiting systems to handle significantly higher traffic volumes than a single Redis instance. Data is partitioned using a hashing function, and each node is responsible for a subset of the overall key space. As traffic increases, additional nodes can be added to the cluster, proportionally increasing the system’s capacity. This scaling is achieved without requiring application-level code changes, as the cluster manages data distribution transparently. The cluster’s ability to distribute load across multiple servers prevents single points of failure and improves overall system resilience when handling peak request rates.

Hash tags, also known as virtual slots, within Redis Cluster enable consistent key distribution across all nodes by mathematically mapping each key to a specific slot out of 16384 available. This mapping is performed using the formula CRC16(key) mod 16384, ensuring that a given key consistently maps to the same node unless a cluster rebalance occurs. This consistent hashing is fundamental to rate limiting because it guarantees that all requests for a specific key, and therefore the associated rate limit counter, are directed to the same node, preventing counter inconsistencies and ensuring accurate enforcement of limits across the entire cluster. Without hash tags, key-to-node assignment would be arbitrary, leading to fragmented rate limit tracking and potential service disruptions.

Redis Sentinel monitors Redis instances and automatically performs failover operations when a master node is no longer reachable. This process involves detecting unresponsive masters through periodic checks, selecting a suitable slave to promote as the new master, and reconfiguring other nodes in the cluster to point to the new master. Sentinel achieves high availability by minimizing downtime, as failover is typically completed within seconds, and ensuring continuous service even in the event of node failures. Multiple Sentinel instances are deployed to provide redundancy and prevent a single point of failure in the monitoring and failover process; consensus is reached through a voting mechanism to determine the correct state of the cluster and initiate failover only when a majority of Sentinels agree on the necessity.

The CAP Theorem, also known as Brewer’s Theorem, posits that distributed data stores cannot simultaneously guarantee all three of Consistency, Availability, and Partition Tolerance. Consistency ensures every read receives the most recent write, Availability guarantees every request receives a response, and Partition Tolerance defines the system’s behavior when network partitions occur – inevitable in distributed systems. In the context of distributed rate limiting, a system must choose a trade-off; for example, prioritizing Availability might lead to occasional inconsistent rate limit enforcement during a partition, while prioritizing Consistency could result in service unavailability. Understanding this trade-off is critical when designing a rate limiting system, as it directly impacts the system’s behavior under failure conditions and influences architectural decisions regarding data replication and conflict resolution strategies.

Beyond the Limits: Advanced Patterns and Adaptive Rate Limiting

Concurrent request limiting addresses a critical challenge in modern distributed systems: preventing resource exhaustion due to an overwhelming number of simultaneous operations. These patterns don’t simply queue requests; instead, they actively govern the number of requests that can proceed concurrently, effectively establishing a dynamic threshold for active work. By intelligently controlling concurrency, the system avoids cascading failures and maintains responsiveness even under peak load. This is achieved through techniques like token buckets or leaky buckets applied on a per-user or per-service basis, ensuring that critical resources aren’t overwhelmed, and allowing the system to gracefully handle bursts of traffic without compromising stability. The result is a more predictable and reliable experience, even when facing substantial demand.

A dynamic approach to request handling, the Worker Utilization Load Shedder intelligently governs incoming requests based on real-time system load. Rather than rigidly enforcing fixed limits, this mechanism monitors the capacity of worker processes and adjusts acceptance rates accordingly. When system load is low, more requests are permitted, maximizing resource utilization and throughput. Conversely, as workers become saturated, the load shedder proactively reduces acceptance, preventing cascading failures and maintaining system stability. This adaptive strategy ensures that available resources are consistently allocated to actively processing requests, optimizing performance even under fluctuating demand, and delivering a consistently responsive user experience.

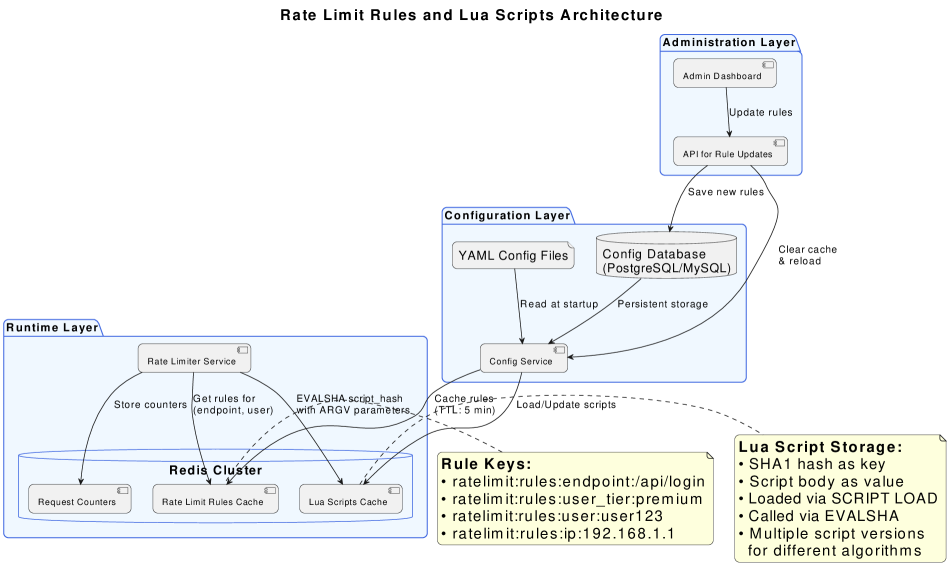

A robust rate limiting system hinges on adaptable and manageable rules, and this architecture delivers that through a three-layer approach. The first layer focuses on configuration, allowing administrators to define rate limits based on various criteria – user ID, API key, or even request attributes – with granular control. This configuration is then passed to the enforcement layer, a real-time component that intercepts requests and applies the defined limits. Crucially, this layer is designed for speed and minimal overhead. Finally, the administration layer provides tools for monitoring, auditing, and dynamically adjusting these rules without service interruption. This separation of concerns not only simplifies maintenance and updates but also facilitates complex rule combinations and A/B testing of different rate limiting strategies, ensuring the system remains responsive to evolving application needs and usage patterns.

The system’s design prioritizes efficiency and scalability through remarkably low resource consumption and optimized state management. Each request is tracked with a minimal memory footprint of just 8 bytes, enabling the system to handle a substantial volume of concurrent requests without incurring excessive overhead. Furthermore, state management operations, crucial for tracking rate limits and applying rules, achieve a logarithmic time complexity of O(log(N)), where N represents the number of active requests. This logarithmic scaling ensures that performance remains consistently high, even as the system expands and handles increasing traffic loads, providing a robust and adaptable solution for managing request rates.

The convergence of concurrent request limiting, dynamic load shedding, and a tiered rule management system, when coupled with Redis technology, yields substantial benefits for application performance and reliability. This combination actively safeguards system stability by proactively preventing overload scenarios and ensuring consistent service availability, even under peak demand. Beyond simple uptime, the architecture directly translates to an improved user experience, as responsiveness is maintained and errors are minimized. Crucially, resource allocation becomes significantly more efficient; the system intelligently accepts and processes requests based on actual capacity, minimizing wasted cycles and maximizing throughput, ultimately lowering operational costs and boosting overall system health.

The pursuit of scalable rate limiting, as detailed in this exploration of Redis and distributed systems, echoes a fundamental principle: true comprehension demands rigorous testing of boundaries. This work doesn’t simply implement a solution; it dissects the inherent trade-offs within the CAP theorem and actively engineers around them. As Henri Poincaré observed, “Mathematics is the art of giving reasons.” Similarly, this paper gives reason to the choices made in prioritizing availability and partition tolerance, demonstrating that a deep understanding of a system isn’t achieved through passive observation, but through intentional stress-testing and reverse-engineering its limitations. The Lua scripting and Sorted Set implementation are not merely tools, but instruments for probing the very fabric of distributed rate limiting.

Beyond the Throttled Gate

The presented work establishes a functional, if predictable, exploit of comprehension regarding rate limiting in distributed systems. The reliance on Redis Sorted Sets, while pragmatic, exposes the inherent limitations of externally-managed state. Future investigations should not focus on optimizing within this paradigm, but on dismantling it. True scalability isn’t about faster sorting; it’s about systems that inherently resist overload without requiring explicit policing. The CAP theorem looms, of course, but it’s a constraint to be cleverly circumvented, not perpetually acknowledged.

The current approaches treat rate limiting as a defensive measure. A more interesting problem lies in re-framing it as an emergent property of well-designed interactions. What if a system naturally decelerated under stress, not through imposed limits, but through intelligent request shaping and prioritized execution? Such a system would not merely tolerate partitions; it would become a partition, gracefully degrading rather than collapsing.

Ultimately, the field needs to move beyond algorithms that count and block. The next significant advance won’t be a faster rate limiter; it will be a system that renders rate limiting unnecessary – a system that absorbs, adapts, and ultimately, ignores the flood.

Original article: https://arxiv.org/pdf/2602.11741.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- How to Build Muscle in Half Sword

- Top 8 UFC 5 Perks Every Fighter Should Use

- One Piece Chapter 1174 Preview: Luffy And Loki Vs Imu

- Epic Pokemon Creations in Spore That Will Blow Your Mind!

- How to Play REANIMAL Co-Op With Friend’s Pass (Local & Online Crossplay)

- All Pistols in Battlefield 6

- Mewgenics Tink Guide (All Upgrades and Rewards)

- Gears of War: E-Day Returning Weapon Wish List

- Bitcoin Frenzy: The Presales That Will Make You Richer Than Your Ex’s New Partner! 💸

- How To Get Axe, Chop Grass & Dry Grass Chunk In Grounded 2

2026-02-14 19:56