Author: Denis Avetisyan

A new framework combines quantum and classical techniques to address the critical privacy challenges of decentralized machine learning.

This review details a multi-protocol privacy design for quantum federated learning, leveraging differential privacy, data condensation, and quantum key distribution to secure collaborative model training.

Despite the promise of distributed machine learning, realizing robust privacy in quantum systems remains a significant hurdle. This is addressed in ‘Scaling Trust in Quantum Federated Learning: A Multi-Protocol Privacy Design’, which proposes a novel framework for privacy-preserving quantum federated learning. By integrating techniques like singular value decomposition, quantum key distribution, and analytic quantum gradient descent, the authors demonstrate a multi-layered protocol safeguarding both data and model confidentiality during collaborative training. Will this approach pave the way for scalable and secure quantum machine learning applications in sensitive domains?

The Privacy Imperative in Distributed Learning

Conventional machine learning approaches frequently necessitate the aggregation of data into centralized repositories, a practice that introduces substantial privacy risks and restricts access to valuable information. This centralization creates a single point of failure, vulnerable to breaches and misuse, and raises concerns about data ownership and control. Sensitive data, such as medical records or personal financial details, becomes a target for malicious actors, potentially leading to identity theft, discrimination, or other harms. Furthermore, legal and ethical constraints, like GDPR and HIPAA, often limit the ability to collect and share such data, hindering the development of beneficial machine learning applications. Consequently, the reliance on centralized datasets presents a significant bottleneck for innovation and responsible data science, prompting exploration into alternative, privacy-preserving methodologies.

While federated learning emerges as a promising technique for collaborative model training without direct data exchange, it isn’t immune to privacy breaches. A significant vulnerability lies in membership inference attacks, where adversaries attempt to determine if a specific data point was used in the model’s training. These attacks exploit information revealed through the model’s predictions – even without accessing the training data itself – to infer the presence of sensitive information. The success of such attacks hinges on the model’s sensitivity to particular data points and the attacker’s ability to observe its behavior. Consequently, ongoing research focuses on developing robust defenses, such as differential privacy and secure aggregation, to mitigate these risks and ensure the practical, ethical deployment of federated learning systems in privacy-sensitive applications.

The successful implementation of federated learning hinges on safeguarding the contributions of each participating dataset, as vulnerabilities in this area pose both ethical dilemmas and practical roadblocks. While the premise of decentralized training mitigates some privacy risks associated with centralized data storage, individual data points can still be inadvertently revealed through model updates – a phenomenon known as information leakage. Protecting these contributions isn’t simply a matter of adhering to privacy regulations; it’s fundamental to fostering trust and encouraging broader participation in these learning systems. Without robust safeguards – such as differential privacy or secure multi-party computation – individuals may be hesitant to contribute their data, limiting the scope and effectiveness of the resulting models. Consequently, ongoing research focuses on developing techniques that balance model accuracy with stringent privacy guarantees, ensuring that the benefits of distributed learning are realized without compromising individual rights or fostering data exploitation.

Quantum Federated Learning: A Paradigm Shift in Collaborative Intelligence

Quantum Federated Learning (QFL) represents a departure from classical federated learning by incorporating principles of quantum computing to enhance both security and computational efficiency. Traditional federated learning, while preserving data locality, remains vulnerable to various attacks; QFL aims to address these vulnerabilities through quantum cryptographic protocols and algorithms. The integration of quantum key distribution (QKD) enables the establishment of provably secure communication channels between participating clients and the central server, mitigating risks associated with data transmission. Furthermore, QFL utilizes quantum algorithms, such as Variational Quantum Circuits (VQC), to potentially accelerate model training and improve model accuracy while operating on encrypted data, thus reducing the need for centralized data access and improving privacy.

Quantum Key Distribution (QKD) provides a mechanism for establishing secure communication channels in Quantum Federated Learning (QFL) by leveraging the principles of quantum mechanics. Unlike classical key exchange protocols which rely on computational complexity, QKD’s security is based on the laws of physics, specifically the uncertainty principle and the no-cloning theorem. In QFL, QKD is used to distribute encryption keys between participating clients and the central server. These keys are then used to encrypt data during transmission, ensuring confidentiality. Any attempt to intercept or eavesdrop on the key exchange will inevitably disturb the quantum states, alerting the legitimate parties to the intrusion and preventing the compromise of the encryption key. Protocols such as BB84 and E91 are frequently implemented for QKD, providing provable security against various attack strategies.
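The eavesdropping-detection property described above can be illustrated with a purely classical simulation of BB84 basis sifting. This is a minimal sketch, not the framework's implementation: the function names `bb84_sift` and `error_rate` are hypothetical, the channel is assumed noiseless, and the eavesdropper is assumed to use a simple intercept-and-resend strategy.

```python
import numpy as np

def bb84_sift(n_bits, eavesdrop=False, seed=0):
    """Classically simulate BB84 sifting; returns (alice_key, bob_key)."""
    rng = np.random.default_rng(seed)
    alice_bits = rng.integers(0, 2, n_bits)
    alice_bases = rng.integers(0, 2, n_bits)  # 0 = rectilinear, 1 = diagonal
    bob_bases = rng.integers(0, 2, n_bits)

    # State in flight: the encoded bit and the basis it was prepared in.
    bits, bases = alice_bits.copy(), alice_bases.copy()

    if eavesdrop:
        # Intercept-and-resend (assumed attack): Eve measures in a random
        # basis; a wrong basis yields a random bit, which she then resends.
        eve_bases = rng.integers(0, 2, n_bits)
        wrong = eve_bases != bases
        bits = np.where(wrong, rng.integers(0, 2, n_bits), bits)
        bases = eve_bases

    # Bob recovers the in-flight bit when his basis matches, else a random bit.
    wrong = bob_bases != bases
    bob_bits = np.where(wrong, rng.integers(0, 2, n_bits), bits)

    # Sifting: keep only positions where Alice and Bob chose the same basis.
    keep = alice_bases == bob_bases
    return alice_bits[keep], bob_bits[keep]

def error_rate(a, b):
    """Fraction of sifted key bits on which Alice and Bob disagree."""
    return float(np.mean(a != b))
```

In this model the sifted keys agree exactly when nobody listens, while an intercept-and-resend attacker pushes the disagreement rate toward roughly one in four bits. Comparing a published sample of the sifted key is how the legitimate parties would notice the intrusion.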

Quantum Federated Learning (QFL) utilizes Variational Quantum Circuits (VQCs) as a key component for computation directly on encrypted data, enhancing privacy protection. VQCs are hybrid quantum-classical algorithms consisting of a parameterized quantum circuit and a classical optimization loop. Data is encoded into a quantum state and processed by the VQC, and the measurement results are used to update the circuit parameters via a classical optimizer. This allows complex computations, such as model training, to be performed without decrypting the data, as the quantum circuit operates on the encrypted quantum states. The use of VQCs, alongside encryption techniques, minimizes the risk of data breaches during the learning process and enables privacy-preserving machine learning on distributed, sensitive datasets.

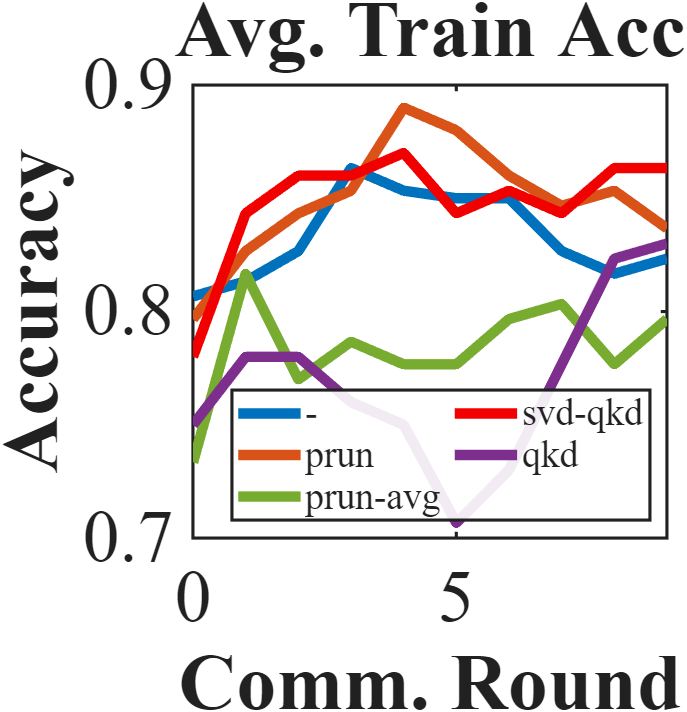

The Privacy-Preserving Quantum Federated Learning (QFL) Framework employs a multi-faceted approach to data security. Data condensation reduces the volume of data requiring transmission, thereby minimizing communication overhead. Principal Component Analysis with Differential Privacy (PCA-DP) and Analytic Quantum Gradient Descent with Differential Privacy (AQGD-DP) further obfuscate individual data points during model training. Singular Value Decomposition combined with Quantum Key Distribution (SVD-QKD) establishes encrypted communication channels for sharing decomposed data vectors, while model pruning reduces the model’s complexity and the potential for memorization of sensitive training data. These techniques, implemented in concert, aim to minimize privacy risks associated with data transmission and model updates throughout the federated learning process.

Optimizing QFL Performance and Scalability: A Systems Perspective

Data condensation techniques are employed in Quantum Federated Learning (QFL) systems to substantially reduce the volume of data requiring transmission, thereby minimizing communication overhead. Specifically, these methods reduce datasets from an initial size of 20,000 samples down to 400 samples. This reduction is achieved through algorithms that identify and retain only the most representative data points, effectively creating a condensed dataset that preserves essential information for model training. By decreasing the amount of data exchanged between participating clients and the central server, data condensation improves the scalability and efficiency of QFL, particularly in bandwidth-constrained environments.
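The article does not say which condensation algorithm the framework uses, so the following is only a sketch of one plausible stand-in: per-class k-means, where each class is summarized by a fixed number of cluster centroids. The names `kmeans_centroids` and `condense` are hypothetical. Note that this variant emits synthetic prototypes rather than retaining original samples.

```python
import numpy as np

def kmeans_centroids(X, k, n_iter=10, seed=0):
    """Plain Lloyd's k-means; returns k centroids summarizing the rows of X."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Squared Euclidean distance from every point to every centre.
        d2 = ((X ** 2).sum(1)[:, None]
              - 2 * X @ centers.T
              + (centers ** 2).sum(1)[None, :])
        labels = d2.argmin(axis=1)
        for j in range(k):
            members = X[labels == j]
            if len(members):  # keep the old centre if a cluster empties
                centers[j] = members.mean(axis=0)
    return centers

def condense(X, y, per_class, seed=0):
    """Replace each class with `per_class` prototypes (assumed scheme)."""
    X, y = np.asarray(X, dtype=float), np.asarray(y)
    Xc, yc = [], []
    for c in np.unique(y):
        Xc.append(kmeans_centroids(X[y == c], per_class, seed=seed))
        yc.append(np.full(per_class, c))
    return np.vstack(Xc), np.concatenate(yc)
```

With a two-class dataset of 20,000 rows, calling `condense(X, y, per_class=200)` would yield the 400-sample scale quoted above. How the 400 samples are split across classes is an assumption here.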

Singular Value Decomposition (SVD) is employed as a dimensionality reduction technique within Quantum Federated Learning (QFL) systems to enhance the efficiency of quantum algorithms. SVD decomposes the original data matrix into three separate matrices, allowing for the identification and retention of the most significant features – those with the highest singular values – while discarding less important, noisy data. This reduction in data dimensionality directly translates to a decrease in the number of quantum gates required for computation, lowering the computational cost and accelerating algorithm execution. Specifically, by reducing the size of the input data to quantum circuits, SVD minimizes the resources needed for quantum state preparation and processing, improving overall QFL performance and scalability.
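As a rough illustration of the decomposition described above, the sketch below truncates an SVD to the top `k` singular values with NumPy. The helper name `truncated_svd` and the choice of returning the retained "energy" fraction are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def truncated_svd(X, k):
    """Keep the k largest singular directions of X.

    Returns the reduced features Z (n x k), the rank-k reconstruction
    X_hat, and the fraction of squared singular values retained.
    """
    U, s, Vt = np.linalg.svd(X, full_matrices=False)  # X = U @ diag(s) @ Vt
    Z = U[:, :k] * s[:k]          # coordinates in the top-k subspace
    X_hat = Z @ Vt[:k]            # best rank-k approximation of X
    retained = float((s[:k] ** 2).sum() / (s ** 2).sum())
    return Z, X_hat, retained
```

The reduced matrix `Z` has only `k` columns, so a circuit that encodes one feature per qubit or per rotation angle would need correspondingly fewer resources. The `retained` value gives a quick check on how much of the data's variation survives the cut.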

Analytic Quantum Gradient Descent (AQGD) represents an optimization technique specifically designed for training quantum circuits. Traditional gradient descent methods require numerous circuit evaluations to approximate gradients, incurring significant computational cost. AQGD analytically calculates the gradient of the cost function with respect to circuit parameters, eliminating the need for repeated measurements and thereby accelerating the learning process. This analytical approach reduces the computational complexity associated with parameter updates, enabling faster convergence and lower overall costs for training quantum machine learning models. The method achieves this by leveraging mathematical properties of the quantum circuit and cost function to derive a closed-form expression for the gradient, significantly improving training efficiency compared to methods relying on estimation via circuit sampling.
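The article does not spell out how AQGD derives its gradients. One widely used analytic rule for rotation gates is the parameter-shift rule, sketched below on a one-qubit circuit simulated with NumPy. The circuit, the cost function (the expectation of Pauli-Z after an RY rotation), and all function names are illustrative assumptions. With this rule the gradient is exact rather than a finite-difference approximation, at the cost of two evaluations of the circuit per parameter.

```python
import numpy as np

PAULI_Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def ry(theta):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def expectation(theta):
    """Cost: <Z> after applying RY(theta) to |0>, which equals cos(theta)."""
    state = ry(theta) @ np.array([1.0, 0.0])
    return float(state @ PAULI_Z @ state)

def parameter_shift_grad(f, theta, shift=np.pi / 2):
    """Exact derivative of f for a Pauli-generated rotation gate."""
    return (f(theta + shift) - f(theta - shift)) / (2.0 * np.sin(shift))

def train(theta=0.3, lr=0.4, steps=60):
    """Gradient descent on <Z>; the minimum is at theta = pi."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(expectation, theta)
    return theta
```

For this toy cost the rule reproduces the closed-form derivative, the negative sine of `theta`, and the descent loop drives the expectation toward its minimum of -1.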

Principal Component Analysis with Differential Privacy (PCA-DP) integrates data privacy safeguards directly into the dimensionality reduction process. Traditional PCA can inadvertently reveal sensitive information through the exposure of principal components. PCA-DP addresses this by adding carefully calibrated noise to the PCA calculations, specifically during the computation of eigenvectors and eigenvalues. This noise ensures that the contribution of any single data point to the resulting principal components is limited, thereby satisfying the formal definition of $\epsilon$-Differential Privacy. The level of privacy is controlled by the privacy parameter $\epsilon$, with smaller values indicating stronger privacy guarantees but potentially reduced data utility. Implementation involves modifying the standard PCA algorithm to incorporate noise addition, enabling dimensionality reduction while preserving data confidentiality.
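One common way to realize this idea is to perturb the second-moment matrix with Laplace noise before the eigendecomposition. The sketch below follows that route under stated assumptions: rows are clipped to unit L2 norm, neighboring datasets differ by adding or removing one row, and the matrix is left uncentred so the sensitivity bound stays simple. The function name `dp_pca_components` is hypothetical, and the paper's actual noise placement may differ.

```python
import numpy as np

def dp_pca_components(X, k, epsilon, seed=None):
    """Return k principal directions computed from a noised second-moment matrix."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)

    # Clip every row to L2 norm <= 1 so one record has bounded influence.
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    X = X / np.maximum(norms, 1.0)

    d = X.shape[1]
    A = X.T @ X  # uncentred second-moment matrix (assumption: no centring)

    # Adding or removing one clipped row x changes A by x x^T, whose
    # entrywise L1 norm is ||x||_1^2 <= d. Laplace noise with scale
    # d / epsilon on the upper triangle therefore gives epsilon-DP.
    upper = np.triu(rng.laplace(0.0, d / epsilon, size=(d, d)))
    noise = upper + np.triu(upper, 1).T  # mirror to keep the matrix symmetric

    eigvals, eigvecs = np.linalg.eigh(A + noise)
    order = np.argsort(eigvals)[::-1][:k]  # largest eigenvalues first
    return eigvecs[:, order]
```

Only the returned directions carry the privacy guarantee. A client would project its own data locally with `X @ W` and would not treat that projection as privatized. Smaller `epsilon` values inflate the noise scale, which is the privacy-utility trade-off described above.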

When evaluated on a Genomic dataset, the Quantum Federated Learning (QFL) system utilizing Analytic Quantum Gradient Descent (AQGD) and Differential Privacy (DP) – designated QFL-AQGD-DP – achieved a G+ Test Accuracy ranging from approximately 0.85 to 0.90. This performance level is statistically comparable to results obtained from standard QFL implementations without the inclusion of AQGD and DP. This indicates that the integration of these optimization and privacy-preserving techniques does not significantly degrade the model’s predictive capability when applied to genomic data, while simultaneously enhancing training efficiency and data security.

Evaluation on the IRIS dataset indicates that accuracy remains consistently high, ranging from approximately 0.90 to 0.95, regardless of the privacy-preserving technique employed. This consistent performance was observed across various methods, including Quantum Federated Learning (QFL) and QFL implementations incorporating pruning techniques. These results demonstrate that the application of these privacy methods to the IRIS dataset introduces minimal degradation in predictive accuracy, suggesting a viable trade-off between data privacy and model performance for this particular dataset.

Real-World Applications and Future Directions: Expanding the Horizon of QFL

Quantum Federated Learning (QFL) presents a paradigm shift in machine learning, poised to unlock insights from sensitive data previously inaccessible due to privacy concerns. This innovative approach allows for the collaborative training of machine learning models across multiple devices or institutions – such as hospitals, financial institutions, or genomic research centers – without directly exchanging raw data. Instead, devices locally compute model updates and share only encrypted parameters, safeguarding individual privacy while still benefiting from the collective knowledge embedded within distributed datasets. The implications are particularly profound in fields like genomics, where analyzing patient data is crucial for disease understanding and personalized medicine, and in finance, where fraud detection and risk assessment rely on vast amounts of customer information; QFL promises to enable these advancements without compromising data security or regulatory compliance, ultimately fostering a future where data-driven innovation and privacy coexist.

A significant advancement enabled by Quantum Federated Learning (QFL) lies in its potential to unlock insights from distributed genomic datasets while upholding stringent patient privacy. Traditional machine learning approaches often require centralized data collection, posing substantial risks to sensitive genetic information; however, QFL facilitates model training across multiple institutions or devices without directly exchanging raw data. This decentralized paradigm allows researchers to collaboratively analyze vast genomic resources – crucial for understanding complex diseases and personalized medicine – without compromising individual confidentiality. By leveraging the principles of quantum mechanics, QFL enhances the security of data contributions, offering a pathway towards breakthroughs in genomic research previously hindered by privacy concerns and regulatory restrictions. This approach promises to accelerate discoveries in areas like cancer genomics, rare disease identification, and pharmacogenomics, all while maintaining ethical data handling practices.

Beyond sensitive data like genomic information, Quantum Federated Learning (QFL) demonstrates substantial promise in standard classification tasks, including image recognition. Utilizing benchmark datasets such as MNIST (handwritten digit images) and IRIS (tabular flower measurements), QFL offers a pathway to train machine learning models on decentralized data without direct data exchange, thereby bolstering security and user privacy. This distributed approach not only mitigates the risks associated with centralized data storage but also facilitates a more efficient learning process by leveraging the computational resources of multiple devices. The application of QFL to these benchmarks signifies a broader applicability of this technology, extending its benefits beyond specialized fields and potentially revolutionizing how machine learning models are developed and deployed in a privacy-conscious manner.

Recent evaluations of Quantum Federated Learning (QFL) employing the AQGD-DP mechanism on complex genomic datasets reveal a compelling level of performance. The system consistently achieved a Server Score ranging from approximately 0.75 to 0.85, indicating its ability to effectively learn from decentralized data while preserving privacy. Importantly, this score demonstrates a performance level comparable to that of standard, non-quantum Federated Learning approaches. This suggests that the incorporation of quantum principles does not necessarily sacrifice model accuracy, opening avenues for privacy-enhancing machine learning in sensitive fields like genomics where data access is highly restricted and maintaining utility is paramount.

Evaluations utilizing the IRIS dataset demonstrate a consistent level of model accuracy achieved across various privacy-preserving techniques. Specifically, device training consistently yields accuracy scores ranging from approximately 0.90 to 0.95, irrespective of the specific privacy method employed. This suggests that the implementation of privacy mechanisms does not substantially diminish the model’s ability to effectively learn and categorize the data within this benchmark dataset. The sustained high performance across diverse privacy approaches highlights the potential for deploying accurate machine learning models in sensitive applications without significant compromise to predictive power, even when data is distributed and locally trained.

Continued investigation into Quantum Federated Learning (QFL) necessitates a multifaceted approach centered on strengthening its core capabilities. Future studies are poised to prioritize the development of more resilient privacy mechanisms, moving beyond current differential privacy techniques to address evolving adversarial threats and data vulnerabilities. Simultaneously, research will concentrate on minimizing the substantial computational demands inherent in quantum processes, potentially through novel algorithm optimization and hardware acceleration. Crucially, the scalability of QFL must be rigorously tested with increasingly large and complex datasets, such as those found in global-scale financial modeling or comprehensive genomic analyses, to ensure its practical applicability and widespread adoption as a secure and efficient machine learning paradigm.

The pursuit of scalable trust in quantum federated learning, as detailed in this work, necessitates a holistic view of system design. One must consider not merely the individual privacy mechanisms (differential privacy, data condensation, or quantum key distribution) but their interplay within the broader framework. Robert Tarjan aptly observed, “Complexity hides opportunity.” This rings true; the inherent complexity of combining quantum computation with federated learning, while daunting, unlocks opportunities for enhanced privacy and security. The paper’s multi-protocol approach reflects this principle, acknowledging that a robust solution demands the careful orchestration of diverse techniques to address the unique vulnerabilities arising from quantum machine learning systems.

What Lies Ahead?

This work, while a necessary confluence of established and emerging techniques, highlights the inherent fragility of distributed trust. The system’s strength resides in layering defenses – differential privacy, data condensation, quantum key distribution – but such architectures only postpone the inevitable encounter with unforeseen vulnerabilities. Boundaries, after all, are not static; they shift with the evolution of both attack vectors and computational capability. The elegance of a multi-protocol approach is seductive, yet it introduces new points of failure – interfaces between protocols, assumptions about their correct implementation, and the ever-present specter of side-channel leakage.

Future efforts must move beyond simply adding layers. A more fruitful path lies in a deeper understanding of the information theoretic limits of privacy in federated settings. How much information must leak, even with perfect cryptographic tools, due to the very act of learning a shared model? Addressing this requires a move towards provably secure designs, where privacy guarantees are mathematically demonstrable, not merely empirically observed. The current focus on gradient descent as the optimization engine also feels limiting; alternative methods, less susceptible to inversion attacks, deserve exploration.

Ultimately, the true challenge is not merely technical. It is a systemic one. The relentless push for model accuracy often overshadows the equally important need for verifiable privacy. Systems break along invisible boundaries – if one cannot see them, pain is coming. Anticipating those weaknesses demands a shift in mindset, from building complex fortifications to designing inherently resilient structures.

Original article: https://arxiv.org/pdf/2512.03358.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-12-04 19:38