Author: Denis Avetisyan

A new encoding method boosts the performance of quantum neural networks by ensuring crucial structural information isn’t lost when converting images into a quantum format.

This review introduces Fidelity-Preserving Quantum Encoding (FPQE), a technique utilizing structural similarity metrics to improve the fidelity of image representation on Noisy Intermediate-Scale Quantum (NISQ) devices.

Efficiently translating high-dimensional classical data into quantum states presents a fundamental challenge, as existing encoding schemes often sacrifice crucial spatial and semantic information. This limitation is addressed in ‘Fidelity-Preserving Quantum Encoding for Quantum Neural Networks’, which introduces a framework designed to minimize data loss during the classical-to-quantum conversion. By employing a convolutional encoder-decoder to learn compact, reconstructable representations and then mapping these into quantum states via amplitude encoding, the proposed method preserves fidelity and improves accuracy by up to 10.2% on Cifar-10 compared to conventional approaches, with the gains most pronounced on complex datasets. Could this fidelity-focused encoding unlock the full potential of quantum neural networks for increasingly sophisticated visual data analysis?

The Limits of Classical Vision: Why Dimensionality Matters

Traditional machine learning algorithms demonstrate remarkable proficiency when analyzing visual data, such as images and videos. However, these algorithms encounter significant challenges as the dimensionality of the data increases – a phenomenon known as the ‘curse of dimensionality’. This curse arises because the volume of the data space grows exponentially with each added dimension, requiring an exponentially larger amount of data to achieve statistically meaningful results. Consequently, algorithms become computationally expensive, require vast datasets, and often struggle to generalize effectively to unseen data. The problem isn’t simply a matter of needing more processing power; it’s that the fundamental relationships within the data become increasingly sparse and difficult to discern as dimensionality increases, hindering the algorithm’s ability to extract meaningful patterns and make accurate predictions.

Quantum computation presents a paradigm shift in information processing, moving beyond the classical bit, which is either 0 or 1, to the qubit. A qubit can exist in a superposition, a weighted combination of the 0 and 1 states. Entanglement, in turn, correlates qubits so that the measurement outcomes of one are statistically linked to those of another, regardless of the distance separating them, although this correlation cannot be used to transmit information. These quantum phenomena offer the potential for exponential speedups on specific tasks. By encoding data into quantum states, algorithms can work in a state space that grows exponentially with the number of qubits, offering the possibility of overcoming limitations inherent in processing complex, high-dimensional data, and potentially benefiting fields like machine learning and data analysis.

The transition from classical to quantum machine learning hinges on a critical initial step: the encoding of classical visual data into the language of quantum states. This process isn’t simply a digital conversion; it requires translating complex visual information – pixels, edges, and features – into the superposition and entanglement of qubits. Effective encoding schemes are paramount, as they determine how faithfully the original data is represented within the quantum realm. A poorly designed encoding can introduce errors or obscure crucial patterns, hindering the performance of subsequent quantum algorithms. Researchers are actively exploring various encoding strategies, such as amplitude, angle, and basis encoding, each with its own advantages and disadvantages concerning resource utilization – the number of qubits required – and the preservation of data integrity. Ultimately, the success of quantum machine learning relies on developing encoding techniques that can efficiently and accurately bridge the gap between the classical visual world and the quantum computational landscape.

The translation of classical visual data into the language of quantum computation necessitates careful consideration of encoding strategies, as each approach introduces distinct compromises. Amplitude encoding maximizes data density by mapping values to the amplitudes of a quantum state, so that N values fit into roughly log2(N) qubits, but preparing such a state generally requires deep circuits whose cost grows with the data dimension. Angle encoding, conversely, represents data as qubit rotation angles, typically one feature per qubit, which keeps circuits shallow at the cost of more qubits and a more limited representation of each value. Basis encoding represents data as computational basis states, using one qubit per classical bit, which affects the computational pathways available for processing. The choice between these and other emerging techniques hinges on balancing the demands of the specific machine learning task against the practical limitations of current and near-future quantum hardware: a trade-off between the fidelity of the encoded information and the computational resources required for effective quantum processing.
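As a rough illustration of the qubit budgets involved, the sketch below encodes the same six values with amplitude encoding and with angle encoding. It is a plain-Python toy, not the paper's implementation: the function names `amplitude_encode` and `angle_encode`, the zero-padding step, and the choice of an RY rotation with angle pi * v are assumptions made for this example.

```python
import math

def amplitude_encode(values):
    """Map a real vector to the amplitudes of a normalized quantum state.

    The vector is zero-padded to the next power of two, so n values
    need about log2(n) qubits (hypothetical helper for illustration).
    """
    n_qubits = max(1, (len(values) - 1).bit_length())
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0.0:
        raise ValueError("cannot amplitude-encode an all-zero vector")
    return [v / norm for v in padded], n_qubits

def angle_encode(values):
    """Encode each value in [0, 1] as an RY(pi * v) rotation on its own qubit.

    RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so each qubit is
    returned as its (amplitude of |0>, amplitude of |1>) pair.
    """
    return [(math.cos(math.pi * v / 2), math.sin(math.pi * v / 2)) for v in values]

pixels = [0.1, 0.5, 0.9, 0.3, 0.7, 0.2]

state, n_qubits = amplitude_encode(pixels)
qubits = angle_encode(pixels)

print("amplitude encoding:", n_qubits, "qubits,", len(state), "amplitudes")
print("angle encoding:    ", len(qubits), "qubits")
```

Amplitude encoding packs the six values into three qubits, while angle encoding spends one qubit per value; what the sketch does not show is the circuit depth needed to prepare the amplitude-encoded state on hardware.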

Quantum Neural Networks: Escaping the Constraints of Classical Computation

Quantum Neural Networks (QNNs) represent a departure from classical neural networks by leveraging principles of quantum mechanics to address computational bottlenecks. Classical neural networks are limited by the constraints of bit-based computation and face challenges with high-dimensional data and complex pattern recognition. QNNs, however, utilize quantum bits, or qubits, which can exist in superpositions and exhibit entanglement, enabling the processing of exponentially larger data spaces and the potential for faster computation. This is achieved through the manipulation of quantum states using parameterized quantum circuits, allowing for complex transformations and the learning of intricate patterns that are difficult for classical networks to model effectively. The potential benefits include increased computational speed, improved generalization capabilities, and the ability to tackle previously intractable problems in areas such as image recognition, natural language processing, and materials discovery.

Quantum Neural Networks (QNNs) employ parameterized quantum circuits – sequences of quantum gates with adjustable parameters – to process information encoded in quantum states. These circuits function as the trainable components of the network, transforming input quantum data through unitary transformations defined by the gate parameters. Learning occurs via optimization algorithms, such as gradient descent, which adjust these parameters to minimize a defined loss function, effectively mapping input quantum states to desired output states. This allows QNNs to identify patterns and make predictions based on the learned transformations, analogous to the weight adjustments in classical neural networks but leveraging the principles of quantum mechanics for potentially enhanced computational capabilities and representation power.
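To make the idea of a parameterized circuit concrete, here is a minimal single-qubit sketch simulated in plain Python. The circuit layout (an RY gate that encodes the input followed by one trainable RY gate), the two-point dataset, and the helper names are all assumptions chosen for illustration; real QNNs use many qubits and entangling gates.

```python
import math

def ry(theta):
    """2x2 matrix of the single-qubit RY rotation gate."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

def apply(gate, state):
    """Apply a 2x2 gate to a single-qubit state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def expect_z(x, theta):
    """Encode x with RY(x), apply the trainable RY(theta), return <Z>."""
    state = apply(ry(theta), apply(ry(x), [1.0, 0.0]))
    return state[0] ** 2 - state[1] ** 2

def loss(theta, data):
    """Mean squared error between <Z> and labels in {-1, +1}."""
    return sum((expect_z(x, theta) - y) ** 2 for x, y in data) / len(data)

# Toy dataset: input 0 is labeled +1, input pi is labeled -1.
data = [(0.0, 1.0), (math.pi, -1.0)]

print("loss at theta = 0:   ", round(loss(0.0, data), 6))
print("loss at theta = pi/2:", round(loss(math.pi / 2, data), 6))
```

The loss depends on the gate parameter in the same way a classical network's loss depends on its weights, which is what lets an optimizer adjust `theta` toward the value that fits the data.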

Quantum Convolutional Neural Networks (QCNNs) represent a significant class of Quantum Neural Networks (QNNs) designed to leverage the principles of classical Convolutional Neural Networks (CNNs) within a quantum computational framework. QCNNs utilize parameterized quantum circuits to perform operations analogous to convolutional layers, pooling layers, and fully connected layers found in traditional CNNs. This is achieved by encoding input data into quantum states and then applying a series of quantum gates to extract relevant features. The key distinction lies in the use of quantum superposition and entanglement to potentially process information more efficiently and identify complex patterns that may be difficult for classical CNNs to discern. While maintaining architectural similarities to their classical counterparts, QCNNs aim to improve performance in image recognition and processing tasks by exploiting quantum mechanical properties.

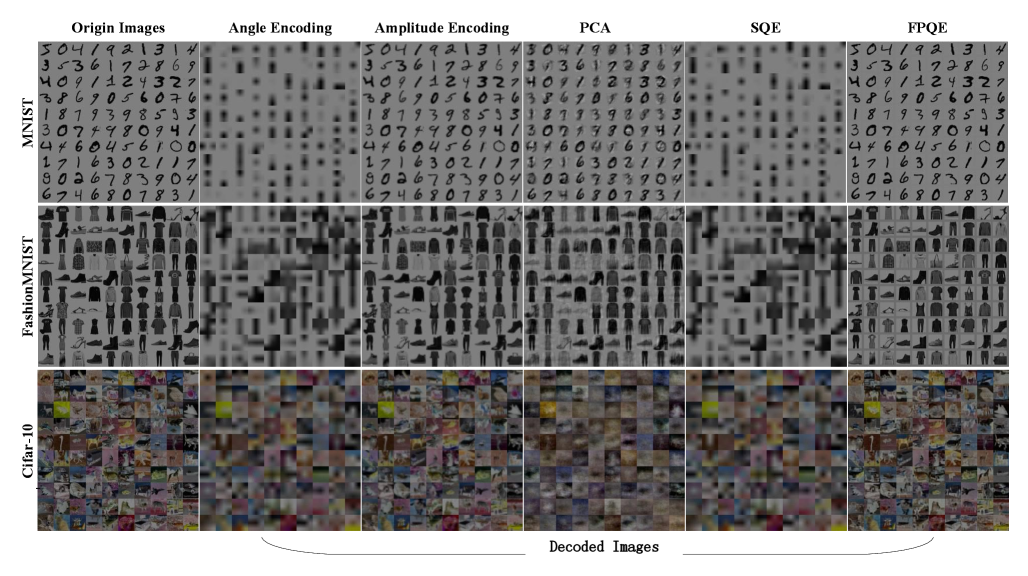

The performance of Quantum Neural Networks (QNNs) is fundamentally dependent on the method used to translate classical data into quantum states, a process known as data encoding. Information loss during this transformation severely limits QNN capabilities; therefore, maintaining high fidelity, the degree to which the original information is preserved, is critical. The proposed Fidelity-Preserving Quantum Encoding (FPQE) method addresses this challenge by minimizing information loss during the classical-to-quantum conversion and maximizing the retention of input data characteristics. Comparative analysis across the MNIST, FashionMNIST, and Cifar-10 datasets indicates that FPQE outperforms established quantum encoding techniques, with the clearest gains on the more complex data, pointing to its effectiveness in preserving data integrity throughout the quantum computation.

Preserving the Essence of Vision: Quantifying Fidelity in Quantum Encoding

Accurate representation of original visual information is a critical requirement for successful quantum encoding, as the encoded quantum state serves as a proxy for the image data. Loss of fidelity during the encoding process introduces errors that propagate through subsequent quantum computations and ultimately degrade the quality of any reconstructed image. Therefore, quantitative metrics are essential for evaluating the degree to which the encoded quantum state preserves the essential features of the original visual data; simply achieving a low-error encoding is insufficient if it does not correspond to a perceptually similar image. Maintaining high fidelity ensures that the quantum representation remains a reliable substitute for the original data, enabling effective data processing and retrieval.

Structural Fidelity is a quantitative metric used to evaluate the preservation of essential image features – edges, textures, and overall form – during the quantum encoding process. Unlike pixel-wise comparisons offered by metrics such as Mean Squared Error (MSE), Structural Fidelity focuses on the relationships between pixels, providing a more perceptually relevant assessment of image quality. It operates on the principle that the human visual system is highly adapted to extract structural information, making its preservation crucial for maintaining visual fidelity after encoding and decoding. A high Structural Similarity Index (SSIM) score indicates a strong correlation between the structure of the original and encoded images, signifying minimal distortion of key visual elements.

While Mean Squared Error (MSE) and Peak Signal-to-Noise Ratio (PSNR) quantify pixel-wise differences, they often fail to correlate well with perceived visual quality. The Structural Similarity Index (SSIM) addresses this by modeling the human visual system’s sensitivity to structural information, providing a more perceptually relevant assessment of image fidelity. In evaluations using the Cifar-10 dataset, an SSIM score of 0.85 was achieved, indicating high preservation of image structure. This result was obtained alongside an MSE of 0.002 and a PSNR of 25.27 dB, so the structural and the pixel-wise metrics both point to a close reconstruction.
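The three metrics can be sketched in a few lines of plain Python. This is an illustrative approximation rather than the evaluation code behind the reported numbers: `global_ssim` computes SSIM over a single window covering the whole image, whereas standard SSIM averages the same formula over local windows, and the pixel values below are made up. The constants 0.01 and 0.03 are the commonly used SSIM stabilizers.

```python
import math

def mse(a, b):
    """Mean squared error between two equally sized pixel lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def psnr(a, b, max_val=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    err = mse(a, b)
    return math.inf if err == 0 else 10 * math.log10(max_val ** 2 / err)

def global_ssim(a, b, max_val=1.0):
    """SSIM over one window spanning the whole image (a simplification)."""
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    numerator = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
    denominator = (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    return numerator / denominator

# Made-up pixel intensities in [0, 1] for an original and its reconstruction.
original = [0.0, 0.25, 0.5, 0.75]
reconstructed = [0.05, 0.2, 0.55, 0.7]

print("MSE: ", mse(original, reconstructed))
print("PSNR:", psnr(original, reconstructed), "dB")
print("SSIM:", global_ssim(original, reconstructed))
```

Because PSNR is a direct function of MSE, the two always move together; SSIM is the metric that can disagree with them, since it compares means, variances, and covariance rather than individual pixel errors.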

Convolutional Encoder-Decoders are utilized to enhance the preservation of visual data during quantum encoding by leveraging the spatial dependencies inherent in images. These architectures employ convolutional layers within both the encoder and decoder to extract and reconstruct features, minimizing information loss compared to fully connected networks. The convolutional layers reduce the number of trainable parameters and effectively capture local correlations, leading to improved fidelity. Specifically, the encoder compresses the input image into a compact latent representation, which is then mapped into a quantum state via amplitude encoding, while the decoder reconstructs the image from that representation so that reconstruction quality can be measured. On the Cifar-10 dataset, the reported Structural Similarity Index (SSIM) of 0.85, Mean Squared Error (MSE) of 0.002, and Peak Signal-to-Noise Ratio (PSNR) of 25.27 dB support the efficacy of this approach in maintaining high visual fidelity during the quantum encoding process.

From Benchmarks to Breakthroughs: Validating Quantum Performance on Standard Datasets

Quantum neural networks (QNNs) are undergoing rigorous testing on well-established image classification datasets, including MNIST, FashionMNIST, and Cifar-10, to assess their potential as viable machine learning tools. These datasets, comprising handwritten digits, fashion articles, and diverse object images respectively, provide standardized benchmarks for comparing the performance of various QNN architectures and, crucially, the effectiveness of different encoding strategies. The ability to translate classical image data into quantum states – a process known as encoding – is paramount to QNN functionality, and researchers are actively exploring methods to optimize this translation for improved accuracy and efficiency. By evaluating QNNs on these familiar datasets, the field aims to demonstrate progress towards practical quantum machine learning applications and establish a clear understanding of the advantages and limitations of this emerging technology.

The utilization of established datasets – such as MNIST, FashionMNIST, and Cifar-10 – represents a crucial step in objectively assessing the capabilities of emerging Quantum Neural Networks (QNNs). These datasets aren’t merely collections of images; they function as standardized benchmarks, enabling researchers to compare the performance of diverse QNN architectures and encoding schemes under consistent conditions. By evaluating networks on these common grounds, the strengths and weaknesses of different approaches become readily apparent, fostering iterative improvements and accelerating progress in the field. This rigorous testing process allows for direct comparisons not only between various QNN designs but also against established classical machine learning algorithms, providing a clear measure of quantum advantage – or identifying areas where further refinement is needed.

Evaluating quantum neural networks frequently centers on binary classification tasks, a deliberate choice that facilitates meaningful comparison with established classical machine learning algorithms. This approach simplifies the assessment process by focusing on scenarios where the network must categorize inputs into one of two distinct classes – for example, identifying the presence or absence of a specific feature in an image. By utilizing these standardized tasks, researchers can directly quantify the performance of quantum models against their classical counterparts using metrics like accuracy, precision, and recall. Such direct comparisons are crucial for demonstrating the potential advantages of quantum machine learning and identifying areas where quantum algorithms may outperform traditional methods, ultimately driving advancements in the field and paving the way for practical applications.
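For reference, the three metrics named above reduce to simple counts over the confusion matrix. The sketch below uses made-up labels and a hypothetical helper `binary_metrics`; it applies equally to predictions from a quantum or a classical model.

```python
def binary_metrics(y_true, y_pred):
    """Return (accuracy, precision, recall) for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Made-up ground truth and predictions for eight test images.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]

accuracy, precision, recall = binary_metrics(y_true, y_pred)
print(f"accuracy={accuracy:.3f} precision={precision:.3f} recall={recall:.3f}")
```

Reporting all three matters when classes are imbalanced, since accuracy alone can look strong for a model that rarely predicts the minority class.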

Recent investigations demonstrate that the Fidelity-Preserving Quantum Encoding (FPQE) method significantly enhances the accuracy of quantum neural networks when applied to challenging image classification tasks. On the Cifar-10 dataset, FPQE achieves an improvement of up to 10.2% in accuracy compared to existing quantum encoding techniques. This performance boost is further amplified through the integration of dimensionality reduction strategies, notably Principal Component Analysis and Adaptive Threshold Pruning. These techniques streamline the encoding process, reducing computational overhead and improving the overall efficiency of the quantum network without substantial loss of information, ultimately leading to more robust and accurate classification results.

The Path Forward: NISQ Devices and the Promise of Variational Quantum Algorithms

Contemporary quantum computers, while demonstrating the potential for revolutionary computation, are currently constrained by both the limited number of qubits – the quantum equivalent of bits – and the pervasive presence of noise. These machines are therefore classified as Noisy Intermediate-Scale Quantum (NISQ) devices. The relatively small qubit count restricts the complexity of problems that can be tackled, while noise – arising from environmental factors and imperfections in quantum control – introduces errors into calculations. This noise doesn’t simply add random variation; it fundamentally disrupts the delicate quantum states necessary for computation, leading to unreliable results. Consequently, developing algorithms and error mitigation techniques that can function effectively within these limitations is a central challenge in the field, and a key focus for realizing the promise of quantum computing in the near term.

To address the challenges posed by current Noisy Intermediate-Scale Quantum (NISQ) devices, researchers are increasingly focused on Variational Quantum Algorithms (VQAs). These algorithms ingeniously combine the strengths of both classical and quantum computation. A VQA employs a quantum circuit with adjustable parameters, optimized through a classical feedback loop to minimize a specific cost function. This hybrid approach allows for the execution of complex computations even with limited qubits and in the presence of noise. By shifting the computational burden away from demanding, long quantum circuits and towards classical optimization, VQAs unlock the potential for practical applications of Quantum Neural Networks (QNNs) in areas like pattern recognition and data analysis, paving the way for advancements despite the constraints of today’s quantum hardware. The optimization process relies on iterative parameter adjustments, effectively ‘training’ the quantum circuit to achieve desired outcomes, and offering a path towards leveraging quantum capabilities in the near term.
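The hybrid loop described above can be sketched with a one-parameter toy problem. Everything here is an assumption made for illustration: the circuit is a single RY gate on one qubit, the cost is the expectation value of Z, the gradient comes from the parameter-shift rule (which is exact for this gate), and the learning rate and step count are arbitrary. On real hardware, `cost` would be estimated from repeated measurements instead of computed exactly.

```python
import math

def cost(theta):
    """'Quantum' step: prepare RY(theta)|0> and return the expectation of Z."""
    amp0, amp1 = math.cos(theta / 2), math.sin(theta / 2)
    return amp0 ** 2 - amp1 ** 2

def parameter_shift_gradient(theta):
    """Gradient of the cost from two extra circuit evaluations."""
    return (cost(theta + math.pi / 2) - cost(theta - math.pi / 2)) / 2

def train(theta, learning_rate=0.4, steps=100):
    """Classical step: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta

theta_opt = train(theta=0.5)
print("optimized theta:", theta_opt)
print("final cost:     ", cost(theta_opt))
```

The quantum device is only asked to evaluate a short circuit at a handful of parameter values per step, while the optimizer that decides the next parameter runs entirely on classical hardware.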

Continued advancements in quantum neural networks (QNNs) necessitate focused research across several key areas. Optimizing encoding strategies – the method by which classical data is translated into quantum states – is crucial for maximizing the information a QNN can process and minimizing computational overhead. Simultaneously, the development of more robust quantum algorithms is essential; these algorithms must be resilient to the inherent noise present in current Noisy Intermediate-Scale Quantum (NISQ) devices. This includes exploring error mitigation techniques and designing algorithms with intrinsic fault tolerance. Ultimately, improving the overall performance of QNNs requires a synergistic approach, combining innovative encoding schemes, resilient algorithms, and hardware-aware optimization to unlock the full potential of quantum machine learning and overcome the limitations of classical computing paradigms.

Quantum neural networks (QNNs) represent a potentially transformative approach to machine learning, poised to address challenges that currently constrain classical algorithms. While classical computers struggle with the exponential growth of data dimensionality in tasks like image recognition and complex pattern analysis, QNNs leverage the principles of quantum mechanics – superposition and entanglement – to explore vastly larger solution spaces. This capability suggests the possibility of significantly improved performance in areas such as image processing, where QNNs could enable faster and more accurate feature extraction, object detection, and image classification. Beyond image processing, the promise extends to other computationally intensive machine learning tasks, including natural language processing and materials discovery, offering a pathway to overcome the inherent limitations of classical computing and unlock new frontiers in artificial intelligence.

The pursuit of Fidelity-Preserving Quantum Encoding (FPQE) reveals a fundamental truth about modeling – it isn’t simply about achieving technical accuracy, but about addressing the inherent limitations of representation. The article highlights how retaining structural similarity, measured by SSIM, improves quantum neural network performance, suggesting that the way information is encoded matters as much as the information itself. This resonates with a core tenet of understanding complex systems: the map is not the territory. As Richard Feynman observed, “The first principle is that you must not fool yourself – and you are the easiest person to fool.” FPQE’s focus on preserving structural information is, at its heart, an attempt to minimize self-deception in the translation from classical image to quantum representation, acknowledging that even the most sophisticated model is built on approximations and susceptible to perceptual biases.

The Illusion of Progress

This pursuit of fidelity-preserving encoding, while technically elegant, merely shifts the locus of inevitable degradation. The structural similarity metric, SSIM, offers a comforting illusion of retained information, a quantifiable reassurance against the chaos of quantum decoherence. But it’s a human construct, a weighting of perceived importance. The network doesn’t ‘see’ structure; it processes gradients, and those gradients will, ultimately, reflect the biases embedded within the training data and the limitations of the encoding itself. Every strategy works, until people start believing in it too much.

The true challenge isn’t preserving an image’s structure, but acknowledging the inherent loss of information during quantum translation. Future work will likely focus on robust training methodologies that anticipate these losses, building resilience into the network architecture rather than attempting to prevent the inevitable. A shift in focus from encoding fidelity to algorithmic forgiveness seems a more pragmatic path, accepting imperfection as a fundamental property of the system.

One suspects the real breakthroughs won’t come from more sophisticated encodings, but from a deeper understanding of how these networks actually learn with noisy, incomplete data. Until then, this remains a beautifully engineered attempt to postpone the reckoning with reality – a familiar pattern in any field driven by the promise of perfect information.

Original article: https://arxiv.org/pdf/2511.15363.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2025-11-20 21:05