Author: Denis Avetisyan

Integrating post-quantum cryptography presents a significant usability challenge for developers lacking specialized security knowledge.

An empirical study reveals critical issues in the design of post-quantum cryptography APIs and proposes a path toward improved developer experience.

Despite the looming threat of quantum computing to current cryptographic standards, widespread adoption of Post-Quantum Cryptography (PQC) remains slow, often hindered by developer challenges. This research, ‘When Security Meets Usability: An Empirical Investigation of Post-Quantum Cryptography APIs’, presents an empirical evaluation of how developers interact with PQC APIs and documentation during routine software development tasks. Findings reveal that usability issues, particularly regarding terminology and workflow integration, significantly impact developer performance when working with these novel cryptographic primitives. How can the PQC ecosystem prioritize developer experience to accelerate secure adoption and mitigate the risks of implementation vulnerabilities?

The Inevitable Quantum Reckoning

The foundation of modern digital security relies heavily on public-key cryptography, with algorithms like RSA serving as the workhorse for protecting online transactions, securing email communications, and safeguarding sensitive data. This system enables secure exchange of information by utilizing a pair of mathematically linked keys – a public key for encryption, widely distributed, and a private key, kept secret, for decryption. The strength of RSA, and similar algorithms, historically stemmed from the computational difficulty of factoring large numbers – a problem considered intractable for classical computers. Consequently, countless systems, from e-commerce platforms to government infrastructure, have been built upon this cryptographic bedrock, creating a pervasive reliance that underscores the urgency of addressing emerging threats to its stability.

The security of much of modern digital life relies on the computational difficulty of certain mathematical problems, specifically the factorization of large numbers and the discrete logarithm problem – the foundations of algorithms like RSA and Elliptic Curve Cryptography. However, the anticipated arrival of sufficiently powerful quantum computers threatens to dismantle this security. Peter Shor’s algorithm, developed in 1994, provides a polynomial-time solution to these previously intractable problems. This means a quantum computer running Shor’s algorithm could break widely used encryption schemes in a matter of hours, or even minutes, rendering sensitive data – from financial transactions to state secrets – vulnerable. The implications are profound; current cryptographic protocols, considered secure for decades, would become obsolete, necessitating a rapid and comprehensive overhaul of digital security infrastructure before quantum computers become a practical reality.
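To make the dependence on factoring concrete, here is a minimal toy sketch in Python. It assumes a textbook RSA key built from two tiny primes, and it uses trial division as a stand-in for Shor's algorithm; the function names are hypothetical and chosen only for illustration. The point is that once the modulus is factored, the private exponent follows from the public key alone.

```python
# Toy illustration only: real RSA moduli are 2048 bits or more and cannot be
# factored by trial division. Tiny primes are used so each step is visible.
from math import isqrt


def factor(n):
    """Split n = p * q by trial division (a stand-in for Shor's algorithm)."""
    for candidate in range(2, isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no non-trivial factors")


def recover_private_exponent(n, e):
    """Derive the RSA private exponent d using only the public key (n, e)."""
    p, q = factor(n)
    phi = (p - 1) * (q - 1)
    return pow(e, -1, phi)  # modular inverse of e mod phi (Python 3.8+)


# Public key, visible to everyone including the attacker.
n, e = 3233, 17  # n = 53 * 61
message = 65
ciphertext = pow(message, e, n)

# The attacker factors n, rebuilds d, and decrypts without ever seeing the key.
d = recover_private_exponent(n, e)
recovered = pow(ciphertext, d, n)
```

In this sketch `recovered` equals `message`. On a classical machine the `factor` step is what becomes infeasible at real key sizes, and that is the step Shor's algorithm would make tractable.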

The anticipated arrival of sufficiently powerful quantum computers demands a fundamental overhaul of current cryptographic strategies. Existing public-key systems, relied upon for secure online transactions, data encryption, and digital signatures, are demonstrably vulnerable to Shor’s algorithm, a quantum algorithm capable of efficiently factoring large numbers – the mathematical foundation of much modern cryptography. Consequently, a proactive transition to Post-Quantum Cryptography (PQC) is not merely advisable, but essential. This involves developing and implementing cryptographic algorithms believed to be resistant to attacks from both classical and quantum computers. Research focuses on diverse mathematical problems, like lattice-based cryptography, code-based cryptography, and multivariate cryptography, offering potential replacements. The National Institute of Standards and Technology (NIST) is currently leading a standardization process to identify and certify these next-generation algorithms, ensuring a future where digital communications remain secure even in a post-quantum world.

Standardization: A Necessary, If Slow, Response

The National Institute of Standards and Technology (NIST) initiated a standardization process for post-quantum cryptography (PQC) algorithms in 2016, responding to the potential threat posed by quantum computers to currently used public-key cryptosystems. This effort, driven by the need to transition to algorithms resistant to attacks from both classical and quantum computers, involves a multi-round evaluation process open to international submissions. NIST’s role extends beyond algorithm selection; it includes developing guidelines for implementation and integration, and facilitating collaboration amongst researchers and industry stakeholders to ensure a smooth transition to PQC standards. The agency aims to publish standardized PQC algorithms to replace vulnerable algorithms like RSA and ECC, thereby safeguarding sensitive data and communications in the future.

The evaluation of Post-Quantum Cryptography (PQC) candidate algorithms by the National Institute of Standards and Technology (NIST) centers on both performance and security. Specifically, the algorithms ML-KEM (Module-Lattice Key Encapsulation Mechanism) and ML-DSA (Module-Lattice Digital Signature Algorithm), which NIST published as FIPS 203 and FIPS 204 in August 2024, have undergone detailed analysis, including assessments of their resistance to known quantum and classical attacks. This evaluation incorporates a multi-faceted approach encompassing cryptanalysis by the research community, implementation security evaluations, and benchmarking of computational performance across various platforms. NIST’s criteria emphasize both the theoretical soundness of the algorithms and their practicality for widespread deployment, with ongoing refinement based on public feedback and newly discovered vulnerabilities.

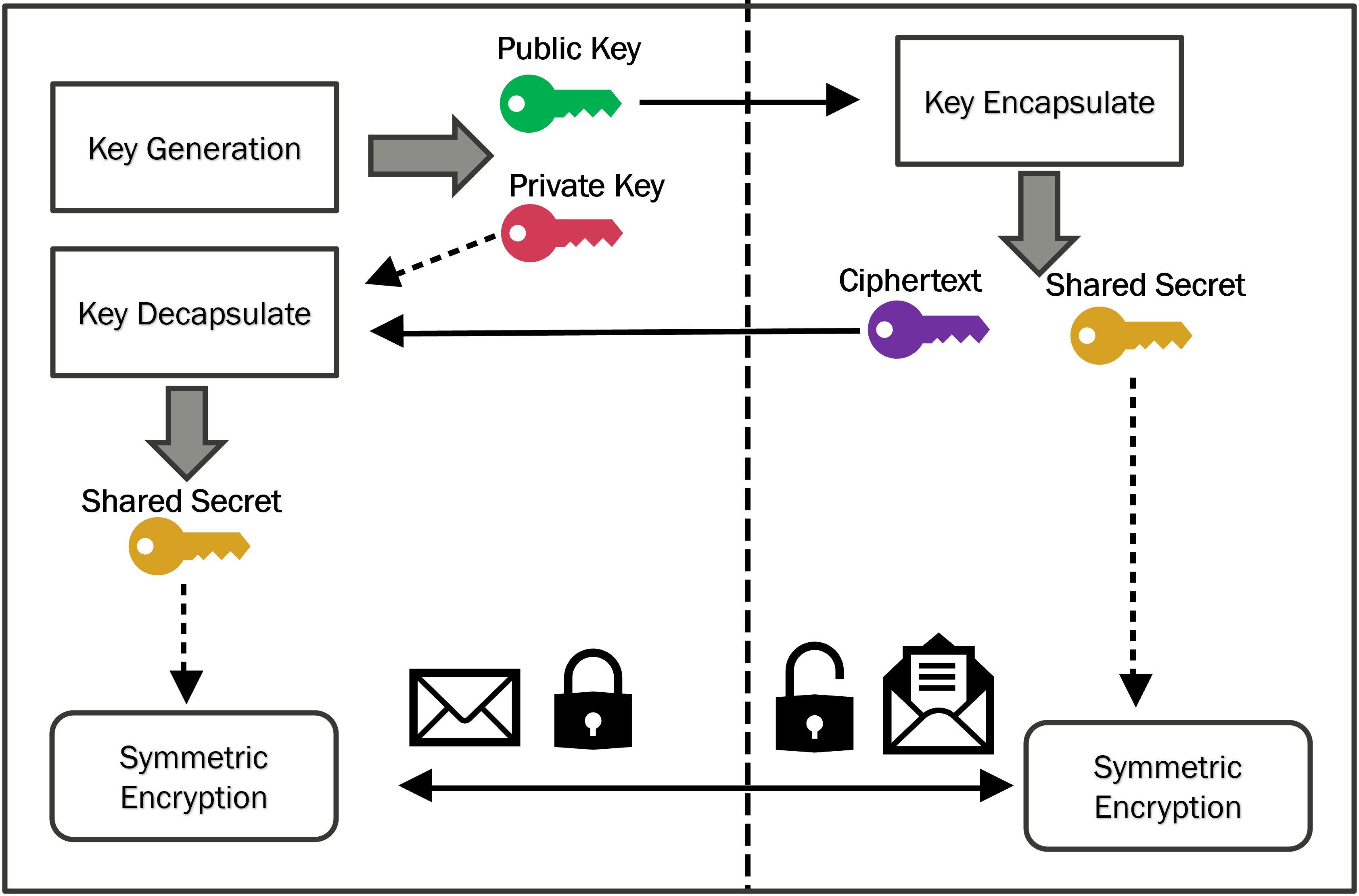

The Key Encapsulation Mechanism/Data Encapsulation Mechanism (KEM/DEM) framework represents a hybrid approach to public-key cryptography designed to enhance security and adaptability. KEMs are responsible for securely establishing a shared secret, while DEMs utilize this secret with a symmetric encryption algorithm to encrypt the actual message. This separation of concerns provides flexibility; different KEMs can be swapped without impacting the DEM, and vice versa, allowing for algorithm agility and easier adaptation to evolving cryptographic landscapes. The framework also offers resistance to various attacks; a compromised DEM does not necessarily expose the key encapsulation process, and vice versa, improving overall system robustness. Furthermore, KEM/DEM simplifies integration with existing systems as symmetric encryption is widely implemented and understood.
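The division of labor between the two halves can be sketched in a few lines of Python. This is a toy under loud assumptions: the KEM below is a classical Diffie-Hellman construction over a small prime field (not post-quantum, and not ML-KEM), and the DEM is a SHA-256 keystream with an HMAC tag built from the standard library. All function names are hypothetical. Only the shape of the interface is the point: `kem_encaps` and `kem_decaps` agree on a secret, and the DEM never needs to know how.

```python
import hashlib
import hmac
import secrets

# Toy KEM: Diffie-Hellman in a prime field. NOT post-quantum; a real
# deployment would substitute a vetted KEM such as ML-KEM here.
P = 2**255 - 19  # a known prime, used only as a toy modulus
G = 2


def kem_keygen():
    private_key = secrets.randbelow(P - 2) + 1
    return pow(G, private_key, P), private_key


def kem_encaps(public_key):
    """Return (KEM ciphertext, shared secret) for the holder of public_key."""
    ephemeral = secrets.randbelow(P - 2) + 1
    shared = pow(public_key, ephemeral, P)
    return pow(G, ephemeral, P), hashlib.sha256(shared.to_bytes(32, "big")).digest()


def kem_decaps(private_key, kem_ciphertext):
    shared = pow(kem_ciphertext, private_key, P)
    return hashlib.sha256(shared.to_bytes(32, "big")).digest()


# Toy DEM: hash-based keystream plus HMAC tag (encrypt-then-MAC).
# Each KEM secret is used once, so no nonce is carried in this sketch.
def _keystream(key, length):
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


def _subkeys(secret):
    return hashlib.sha256(secret + b"enc").digest(), hashlib.sha256(secret + b"mac").digest()


def dem_encrypt(secret, plaintext):
    enc_key, mac_key = _subkeys(secret)
    body = bytes(a ^ b for a, b in zip(plaintext, _keystream(enc_key, len(plaintext))))
    return body + hmac.new(mac_key, body, hashlib.sha256).digest()


def dem_decrypt(secret, blob):
    enc_key, mac_key = _subkeys(secret)
    body, tag = blob[:-32], blob[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, body, hashlib.sha256).digest()):
        raise ValueError("authentication failed")
    return bytes(a ^ b for a, b in zip(body, _keystream(enc_key, len(body))))


# Hybrid composition: the KEM fixes the secret, the DEM protects the message.
def seal(public_key, plaintext):
    kem_ciphertext, secret = kem_encaps(public_key)
    return kem_ciphertext, dem_encrypt(secret, plaintext)


def open_sealed(private_key, kem_ciphertext, blob):
    return dem_decrypt(kem_decaps(private_key, kem_ciphertext), blob)


public_key, private_key = kem_keygen()
kem_ct, blob = seal(public_key, b"hello")
plaintext = open_sealed(private_key, kem_ct, blob)
```

Because `seal` and `open_sealed` touch the KEM only through the three `kem_*` functions, replacing the toy KEM with a post-quantum one would leave the DEM code unchanged, which is the agility argument made above.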

![The PQ-Sandbox documentation contains a documented mistake, as highlighted in source [20].](https://arxiv.org/html/2602.14539v1/Picture/Typo_Quantcrypt.png)

Usability: The Forgotten Dimension of Security

The integration of Post-Quantum Cryptography (PQC) into existing systems is contingent upon developers’ ability to correctly and efficiently utilize these algorithms within their applications. Successful deployment requires that PQC APIs are designed with usability as a primary concern, minimizing the learning curve and potential for implementation errors. Incorrect implementation, even with mathematically sound algorithms, can negate the security benefits of PQC and introduce vulnerabilities. Therefore, ease of use directly impacts the rate of PQC adoption and the overall security posture of systems transitioning to quantum-resistant cryptography.

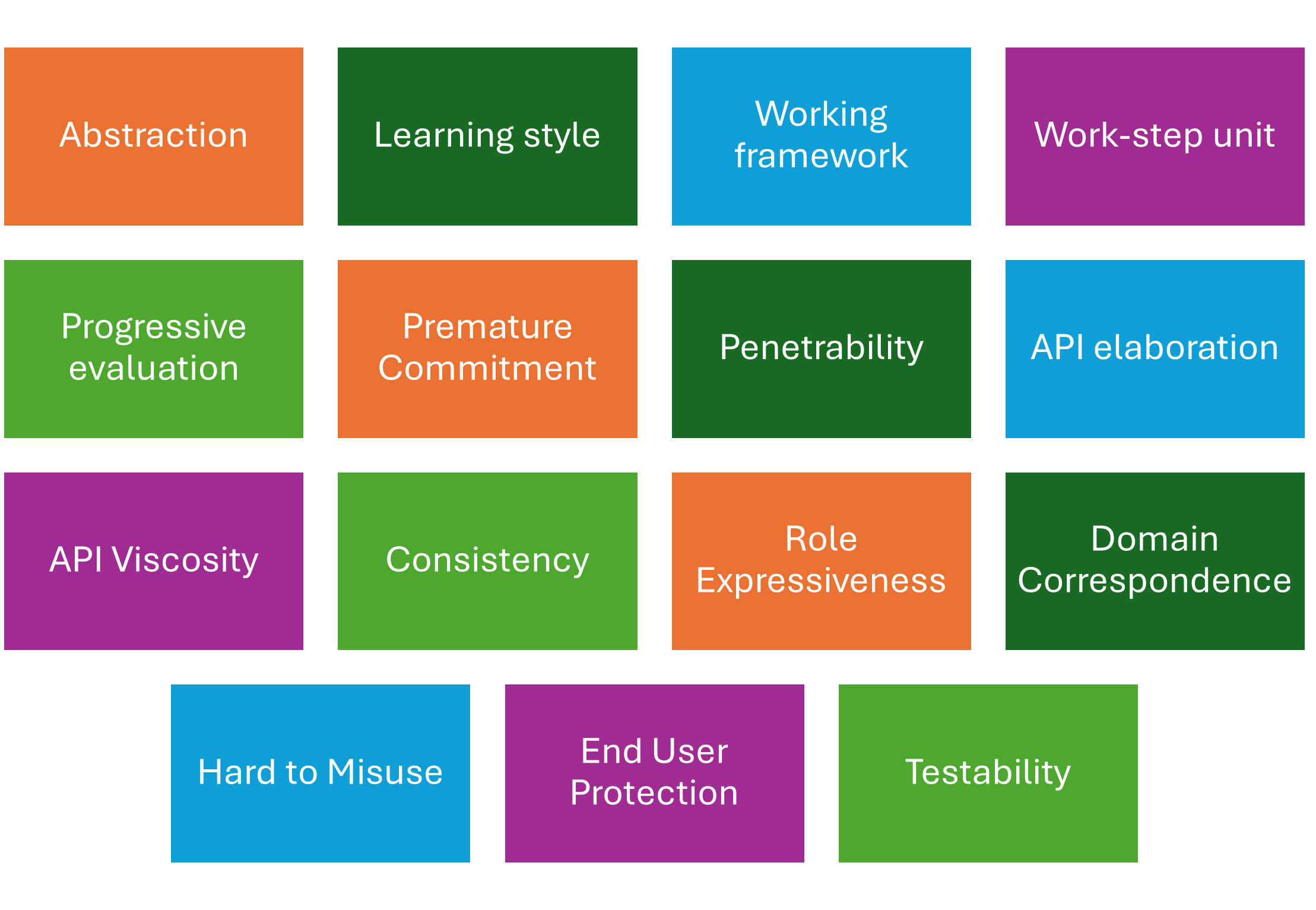

Usability of cryptographic APIs is critical for widespread Post-Quantum Cryptography (PQC) adoption, as developer experience directly impacts correct implementation and security. Evaluating this usability requires systematic approaches; the Cognitive Dimensions Framework (CDF) provides a structured methodology for analyzing the cognitive demands placed on developers interacting with an API. CDF assesses factors such as learnability, efficiency, and error rates, offering quantifiable metrics for comparison. Utilizing such frameworks allows for objective assessment of API design choices and identification of potential barriers to effective use, ultimately contributing to more secure and readily deployable cryptographic solutions.

Quantitative analysis revealed a statistically significant difference in developer task completion time when utilizing two Post-Quantum Cryptography (PQC) APIs. Specifically, developers completed Task 1 in an average of 39.37 minutes using the QuantCrypt API, while the PQ-Sandbox API required an average of 65.38 minutes – a difference of 26 minutes (p=0.0079). This indicates a measurable usability barrier associated with the PQ-Sandbox API for this particular task, suggesting potential difficulties in implementation or increased cognitive load for developers.

Analysis of Task 2 completion times revealed a statistically significant difference between the two APIs, this time in favor of PQ-Sandbox. Developers completed Task 2 in an average of 25.43 minutes using PQ-Sandbox, compared to 41.43 minutes with QuantCrypt (p=0.0015). This 16-minute difference indicates that, for this specific cryptographic operation, the PQ-Sandbox API presented a more efficient workflow or required fewer steps for developers to achieve the desired outcome. A p-value this small makes it unlikely that the difference arose by chance alone.
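The arithmetic behind the two comparisons is easy to check. The short Python sketch below uses only the mean completion times quoted above; the variable and function names are illustrative, and no attempt is made to reproduce the p-values, since the statistical test used is not stated here.

```python
# Mean task completion times in minutes, as reported in the text above.
mean_minutes = {
    "Task 1": {"QuantCrypt": 39.37, "PQ-Sandbox": 65.38},
    "Task 2": {"QuantCrypt": 41.43, "PQ-Sandbox": 25.43},
}


def compare(times):
    """Return (faster API, gap in minutes, slower/faster ratio)."""
    faster = min(times, key=times.get)
    slower = max(times, key=times.get)
    gap = times[slower] - times[faster]
    ratio = times[slower] / times[faster]
    return faster, round(gap, 2), round(ratio, 2)


summary = {task: compare(times) for task, times in mean_minutes.items()}
for task, (faster, gap, ratio) in summary.items():
    print(f"{task}: {faster} was faster by {gap:.2f} min ({ratio:.2f}x)")
```

The direction of the gap reverses between the two tasks, so neither API is uniformly faster; each has a task on which the slower API took roughly 1.6 times as long.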

Secure by Default: A Necessary Shift in Mindset

A foundational principle for Post-Quantum Cryptography (PQC) Application Programming Interfaces (APIs) lies in a secure-by-default design philosophy, recognizing that developers, even security experts, are prone to error. This approach proactively minimizes opportunities for misconfiguration or incorrect implementation by establishing sensible, secure defaults for all parameters and functions. Rather than requiring developers to actively enforce security best practices, a secure-by-default API subtly guides them towards secure outcomes, significantly reducing cognitive load and the potential for introducing vulnerabilities. By handling complex security considerations internally, developers can focus on the core logic of their applications, ultimately enhancing the overall security posture and fostering wider adoption of PQC technologies.
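One way to picture secure-by-default is a configuration object whose zero-argument form is already safe and whose less conservative options require an explicit, visible opt-in. The sketch below is hypothetical: `KemConfig`, `min_category`, and `allow_lower_category` are invented names, not part of QuantCrypt, PQ-Sandbox, or any real library. The parameter-set names and security categories follow FIPS 203, and choosing ML-KEM-768 as the default is an assumption made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class ParameterSet(Enum):
    # Values are the NIST security categories listed in FIPS 203.
    ML_KEM_512 = 1
    ML_KEM_768 = 3
    ML_KEM_1024 = 5


@dataclass(frozen=True)
class KemConfig:
    """Hypothetical configuration for a PQC library (illustration only)."""

    parameter_set: ParameterSet = ParameterSet.ML_KEM_768  # assumed default
    min_category: int = 3
    allow_lower_category: bool = False  # must be set deliberately

    def __post_init__(self):
        below_floor = self.parameter_set.value < self.min_category
        if below_floor and not self.allow_lower_category:
            raise ValueError(
                f"{self.parameter_set.name} is below category {self.min_category}; "
                "pass allow_lower_category=True to accept this explicitly"
            )


default_config = KemConfig()  # safe without reading any documentation
explicit_config = KemConfig(
    parameter_set=ParameterSet.ML_KEM_512,
    allow_lower_category=True,  # the trade-off is visible in code review
)
```

The design choice is that the easy path and the safe path coincide: a developer who supplies nothing gets the conservative setting, and a developer who wants something else has to say so in a way a reviewer can see.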

A robust application security posture is significantly bolstered by Post-Quantum Cryptography (PQC) APIs designed to minimize common developer errors. These APIs proactively reduce the potential for misconfiguration or misuse, which are often exploited by attackers. By simplifying complex cryptographic operations and providing clear, unambiguous interfaces, developers are less likely to inadvertently introduce vulnerabilities. This focus on error prevention isn’t merely about convenience; it directly addresses the escalating threat landscape, particularly the ‘Harvest Now, Decrypt Later’ scenario where adversaries accumulate encrypted data anticipating future decryption capabilities. Consequently, APIs prioritizing correctness become a critical line of defense, safeguarding applications against both present and future cryptographic attacks and fostering a more secure digital ecosystem.

A recent comparative study of Post-Quantum Cryptography (PQC) APIs demonstrated varying levels of susceptibility to developer error, revealing critical trade-offs in design choices. QuantCrypt exhibited two instances of data exposure during testing, indicating potential vulnerabilities in its handling of sensitive information; this contrasts sharply with DSA verification, which resulted in five errors. PQ-Sandbox, designed with a focus on simplified integration, experienced an intermediate error rate of three. These findings underscore that even seemingly minor API design decisions can significantly impact the robustness of cryptographic implementations, and highlight the necessity of prioritizing error mitigation strategies within PQC APIs to ensure secure and reliable operation, especially considering the long-term implications of the Harvest Now, Decrypt Later threat.
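The text above does not say what the verification errors were, so the following is only a sketch of one widely discussed pitfall: a verify function that returns a boolean the caller can forget to check. The names are hypothetical, and HMAC from the Python standard library is used as a stand-in (it is a symmetric MAC, not a digital signature such as ML-DSA) purely so the example runs without third-party packages.

```python
import hashlib
import hmac


class InvalidSignature(Exception):
    """Raised when a tag does not match the message."""


def toy_sign(key, message):
    # Stand-in only: HMAC is a MAC, not a public-key signature scheme.
    return hmac.new(key, message, hashlib.sha256).digest()


def verify_bool(key, message, signature):
    """Boolean style: nothing stops a caller from ignoring the result."""
    return hmac.compare_digest(toy_sign(key, message), signature)


def verify_or_raise(key, message, signature):
    """Raising style: the message is only returned once it has been verified."""
    if not verify_bool(key, message, signature):
        raise InvalidSignature("signature check failed")
    return message


key = b"demo-key"
message = b"transfer 10 credits"
good_signature = toy_sign(key, message)
forged_signature = bytes(len(good_signature))  # all zero bytes

verify_bool(key, message, forged_signature)  # returns False, silently discarded
verified = verify_or_raise(key, message, good_signature)
```

With the raising style, code that forgets to handle a failed check stops with an exception instead of continuing with unverified data, which is the kind of error mitigation the paragraph above argues for.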

The escalating threat of “Harvest Now, Decrypt Later” (HNDL) attacks necessitates a proactive shift in cryptographic API design. This scenario envisions adversaries collecting encrypted data today, anticipating future advancements in cryptanalysis or quantum computing that could break the encryption. Secure-by-default principles directly counter this risk by prioritizing correctness and minimizing opportunities for developers to introduce vulnerabilities – even seemingly minor errors can leave data exposed for years to come. By building APIs that guide developers towards secure configurations and automatically mitigate common pitfalls, the longevity of data confidentiality is enhanced, effectively raising the bar for future decryption attempts and safeguarding information against yet-unknown attacks. This focus on long-term resilience is no longer simply best practice, but a fundamental requirement for cryptographic systems deployed in an era where data persistence far outstrips the lifespan of current algorithms.

The study meticulously details the friction introduced by Post-Quantum Cryptography APIs, and it’s a familiar story. These APIs, intended to fortify security, often demand a level of cryptographic expertise most developers simply don’t possess. It echoes a pattern observed repeatedly: elegant theory colliding with the messy reality of production code. As Marvin Minsky once said, “You can make anything by writing code, but it doesn’t mean it’s a good idea.” The research confirms that even with theoretically sound algorithms, poor API design can render them unusable, effectively creating a new layer of vulnerability: one born not of broken cryptography, but of developer frustration and inevitable workarounds. The ‘Key Encapsulation Mechanism’ integration challenges are merely today’s expensive complication.

What’s Next?

This investigation into the usability of post-quantum cryptography APIs merely documents the inevitable. The transition from mathematically elegant constructions to something a working developer can actually use reveals, predictably, a chasm of practical difficulty. One suspects that any API lauded as ‘intuitive’ simply hasn’t faced a production incident yet. The current focus on key encapsulation mechanisms and digital signature algorithms feels… optimistic. It assumes developers will meticulously weigh cryptographic agility against performance, when, historically, they’ve usually chosen ‘fast enough’ and moved on.

Future work will undoubtedly involve more ‘developer-centric’ design. This usually translates to adding layers of abstraction that obscure the underlying complexity, and therefore, the actual security properties. It’s a trade-off, naturally. A cleaner interface is appealing until the first subtle bug reveals that ‘easy’ doesn’t equal ‘safe’. The real challenge isn’t making PQC accessible; it’s convincing someone, somewhere, to actually read the documentation when things go wrong.

Perhaps the most honest next step is a large-scale, longitudinal study. Deploy these APIs in real applications, under real load, with real developers. Then, in five years, publish the post-mortem. It won’t be pretty, but it will be honest. And, frankly, a little bit of honest assessment is more valuable than another paper promising ‘seamless integration’.

Original article: https://arxiv.org/pdf/2602.14539.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 08:55