Author: Denis Avetisyan

Researchers have uncovered a sophisticated attack that exploits attention mechanisms in federated self-supervised learning to inject hidden backdoors into models.

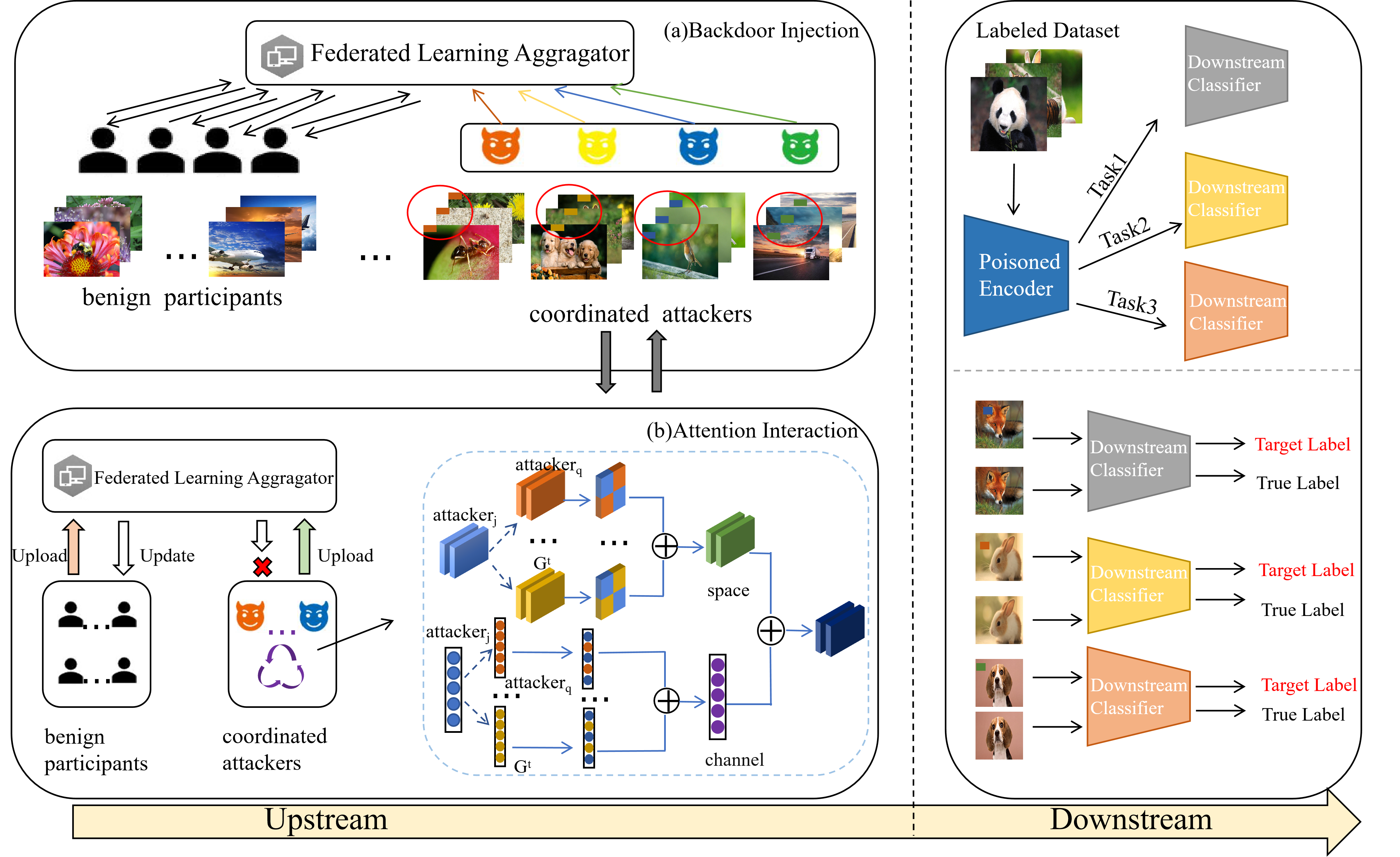

This paper details ADCA, an attention-driven multi-party collusion attack that decomposes triggers to enhance stealth and effectiveness against existing defenses in federated learning systems.

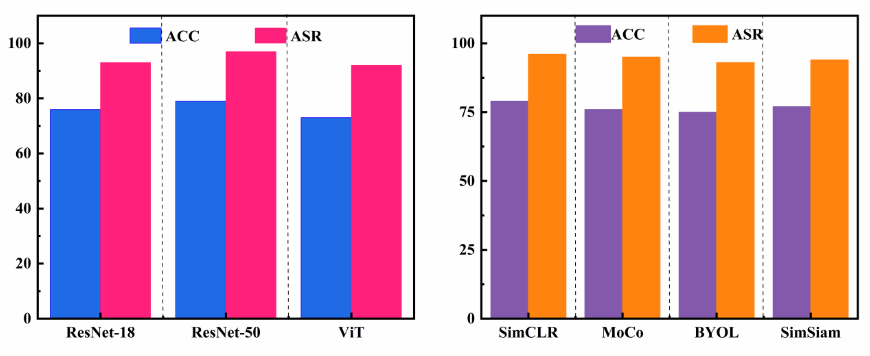

While Federated Self-Supervised Learning (FSSL) offers a promising path toward privacy-preserving model training with unlabeled data, its vulnerability to backdoor attacks remains a significant concern. This paper introduces ‘ADCA: Attention-Driven Multi-Party Collusion Attack in Federated Self-Supervised Learning’, a novel attack strategy that overcomes limitations of existing methods through local trigger decomposition and a collaborative optimization framework guided by an attention mechanism. Experimental results demonstrate that ADCA substantially improves attack success and persistence across diverse FSSL scenarios. Could this attention-driven collusion represent a new benchmark in stealthy, robust attacks against federated learning systems, and what defenses will be required to mitigate its impact?

Federated Learning’s Silent Threat: Backdoors and the Illusion of Security

Federated learning, designed to train machine learning models on decentralized data while preserving privacy, faces a critical vulnerability: backdoor attacks. These attacks don’t aim to disrupt the learning process outright, but rather to subtly manipulate the resulting global model. Malicious participants, or clients, introduce hidden “triggers” – specific patterns or modifications within their locally held data. When the global model is trained using updates from these compromised clients, it learns to associate these triggers with incorrect classifications. Consequently, the model performs normally on standard data, but misbehaves predictably when presented with data containing the injected trigger, potentially leading to targeted errors and significant security breaches. This insidious form of attack poses a unique challenge because the malicious intent remains concealed within the model’s parameters, requiring sophisticated detection methods beyond traditional security measures.

Conventional security measures designed for centralized machine learning often prove inadequate when applied to federated learning environments. The distributed nature of FL, where model training occurs across numerous decentralized devices, introduces unique vulnerabilities that attackers readily exploit. Sophisticated attacks leverage the inherent complexities of data heterogeneity – the varying distributions and characteristics of data held by each client – to subtly inject backdoors without detection. These attacks don’t rely on a single point of failure, but instead distribute malicious influence across multiple clients, making it difficult to identify and neutralize the threat using traditional anomaly detection or data sanitization techniques. Consequently, defenses must move beyond centralized approaches and embrace strategies specifically designed to address the challenges posed by decentralized, heterogeneous data landscapes.

The potential for compromised model accuracy represents a core security risk in federated learning systems susceptible to backdoor attacks. These attacks don’t necessarily manifest as widespread failures; instead, malicious actors can subtly manipulate the global model to misclassify specific, targeted inputs. This means a self-driving car, trained with a backdoored model, might consistently misinterpret a particular sticker on a stop sign, or a medical diagnosis system could overlook a critical indicator in certain patient scans. The precision of these targeted misclassifications, achieved without significantly impacting overall performance, makes backdoor attacks particularly insidious and difficult to detect, raising substantial concerns for applications where reliability and trustworthiness are paramount. The consequence isn’t simply reduced efficacy, but the potential for predictable, exploitable failures with real-world ramifications.

ADCA: The Art of Distributed Deception

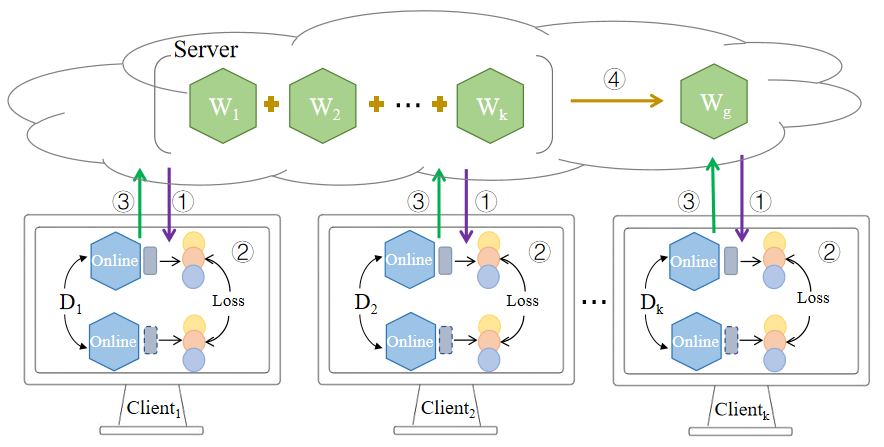

The Attention-Driven Multi-party Collusion Attack (ADCA) represents a novel approach to model backdoor injection by distributing the trigger across multiple participants. Unlike traditional attacks utilizing a single, easily detectable global trigger, ADCA decomposes this trigger into individual, localized patterns assigned to several malicious clients. These clients then contribute their respective patterns during federated learning, collectively forming the complete backdoor trigger within the global model. This distributed approach aims to evade conventional defenses that focus on identifying singular, prominent trigger signatures and reduces the suspicion raised by any single client’s contribution. The collaboration aspect is key, as the combined effect of these distributed patterns is only malicious when aggregated, enabling a stealthier and more resilient backdoor implementation.

ADCA achieves stealth through this fragmentation. Each malicious client contributes only a subtle modification which, combined with the contributions of the other malicious clients, reconstructs the complete backdoor trigger. Because each individual contribution has a small effect, anomaly detection techniques that look for a single, large-scale trigger activation are less likely to flag it. Distributing the trigger also obscures the origin of the backdoor, making attribution and mitigation significantly more challenging.
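The decomposition idea can be illustrated with a toy sketch. It only shows how one global trigger mask might be split into disjoint local pieces whose union restores the original; the function name `decompose_trigger`, the 8x8 mask, and the even split are all assumptions made for illustration, not the paper's actual procedure.

```python
import numpy as np

def decompose_trigger(mask, n_parts):
    """Split a boolean trigger mask into n_parts disjoint local masks.

    Hypothetical helper: the paper's real decomposition is optimized,
    whereas this one simply divides the trigger pixels evenly.
    """
    coords = np.argwhere(mask)                # (row, col) of every trigger pixel
    parts = []
    for chunk in np.array_split(coords, n_parts):
        local = np.zeros_like(mask, dtype=bool)
        local[chunk[:, 0], chunk[:, 1]] = True
        parts.append(local)
    return parts

# A 2x4 trigger patch in the top-left corner of an 8x8 image (illustrative).
global_mask = np.zeros((8, 8), dtype=bool)
global_mask[0:2, 0:4] = True

local_masks = decompose_trigger(global_mask, n_parts=4)
union = np.logical_or.reduce(local_masks)     # what the pieces cover together
```

Each local mask here covers only a quarter of the trigger pixels, which is the property the text describes: no single participant holds the full pattern.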

The ADCA attack leverages the attention mechanism inherent in transformer-based models to significantly amplify backdoor effects and increase persistence. Specifically, malicious clients strategically craft local triggers designed to activate specific attention heads during both training and inference. This targeted activation concentrates the influence of the backdoor within critical parts of the model, increasing the likelihood of successful manipulation of predictions. Because the backdoor signal is distributed across multiple attention heads and subtly embedded within the model’s normal operation, standard backdoor detection techniques – which often rely on identifying large-scale parameter changes or singular trigger patterns – are less effective at identifying or removing the ADCA-induced vulnerability. The distributed nature also complicates mitigation, as removing a single trigger component does not neutralize the attack.

Signal Dilution: The Achilles’ Heel of Federated Backdoors

Backdoor signal dilution arises in federated learning settings due to the aggregation of model updates from both compromised (malicious) and legitimate (benign) clients. During the model averaging process, the influence of the malicious updates, which carry the backdoor trigger, is lessened by the larger volume of benign updates. This dilution effect can reduce the strength of the injected backdoor, potentially lowering the attack success rate and making the attack less reliable. Dilution grows as the share of malicious clients shrinks: the smaller the ratio of malicious clients to the total number of participating clients, the weaker the backdoor signal that survives aggregation.
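A small numerical sketch makes the dilution effect concrete. It assumes plain, equally weighted averaging of client updates (a simplified stand-in for FedAvg) and uses two made-up two-dimensional update vectors; `backdoor_share` is a hypothetical helper, not something from the paper.

```python
import numpy as np

def fedavg(updates):
    """Equal-weight average of client updates (simplified FedAvg)."""
    return np.mean(np.stack(updates), axis=0)

# Illustrative assumption: malicious updates push along axis 0,
# benign updates push along axis 1.
backdoor_direction = np.array([1.0, 0.0])
benign_update = np.array([0.0, 1.0])

def backdoor_share(n_malicious, n_total):
    """Component of the aggregated update along the backdoor direction."""
    updates = ([backdoor_direction] * n_malicious
               + [benign_update] * (n_total - n_malicious))
    return float(fedavg(updates) @ backdoor_direction)

share_small = backdoor_share(1, 10)   # 1 malicious client out of 10
share_large = backdoor_share(5, 10)   # 5 malicious clients out of 10
```

Under these assumptions the surviving backdoor component equals the malicious fraction of clients, so one attacker in ten retains a tenth of the signal while five in ten retain half.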

The Attention-Driven Multi-party Collusion Attack (ADCA) addresses the challenge of signal dilution through a distributed approach coupled with an attention mechanism. This design is reported to be notably robust, maintaining a peak Attack Success Rate (ASR) of 96.57% on the STL-10 dataset even when only a limited number of clients participate in the malicious update process. The distributed nature of ADCA ensures that the backdoor signal, while potentially weakened by the inclusion of benign updates, is not entirely lost during model aggregation. Furthermore, the attention mechanism prioritizes the contributions of clients exhibiting backdoor characteristics, thereby amplifying the malicious signal and sustaining a high ASR despite signal dilution.

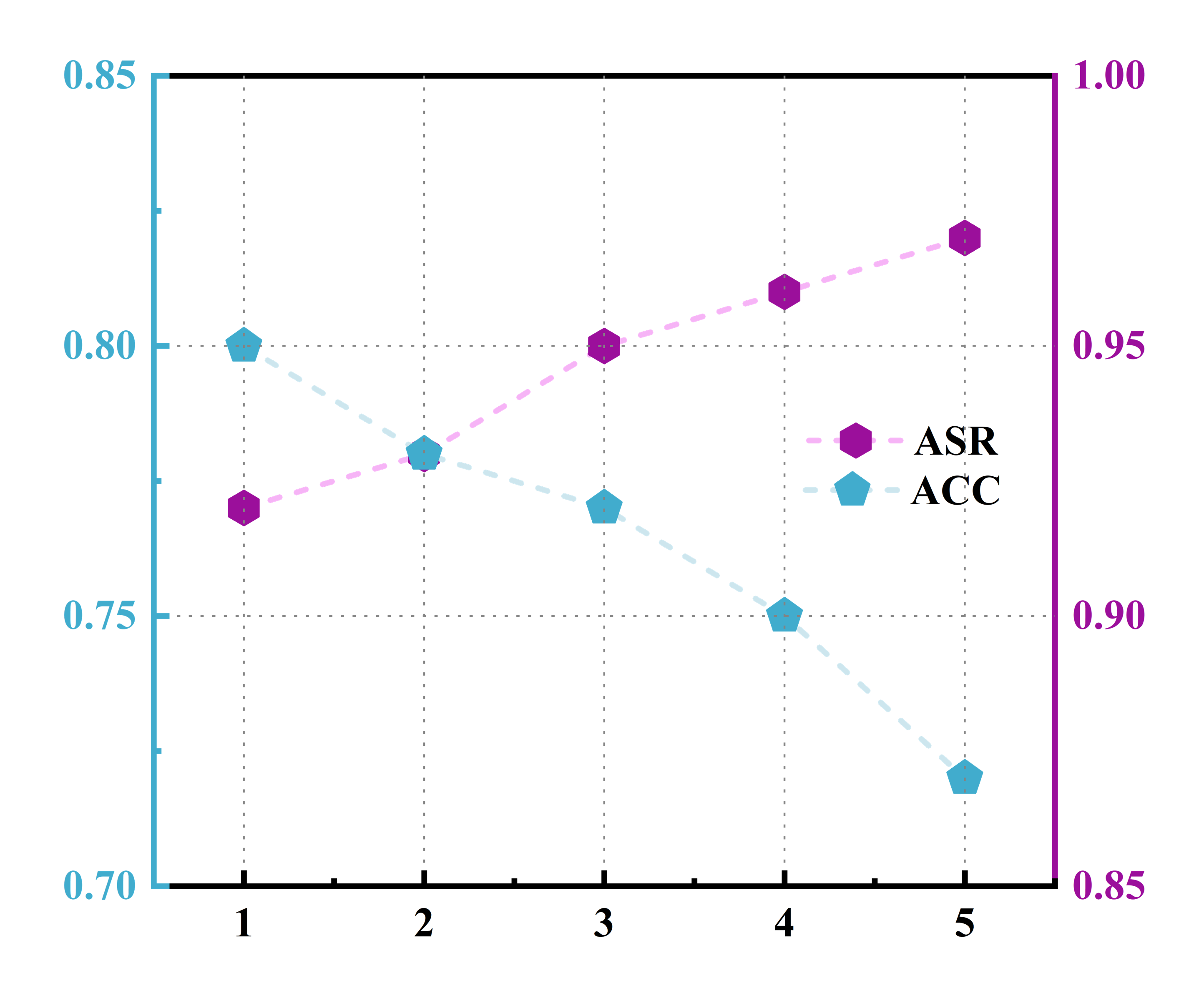

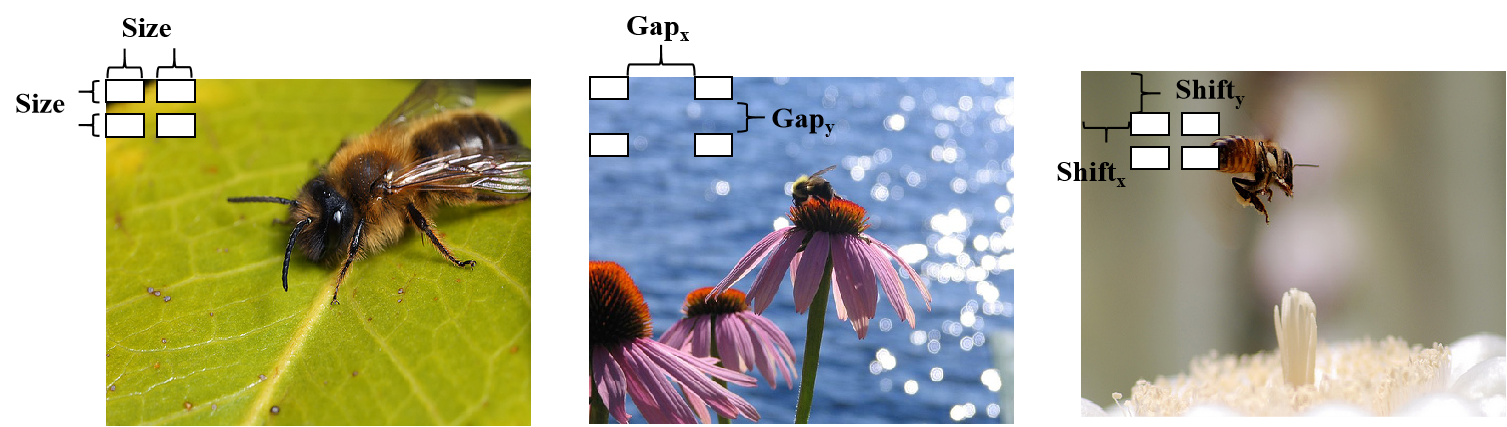

Attack success rate (ASR) is affected by the characteristics of the trigger used in a backdoor attack, specifically its size, its location within the input image, and the gap between trigger elements. Analysis on the STL-10 dataset shows that tuning these trigger parameters is crucial for maximizing attack effectiveness. ADCA is reported to achieve a 40.23% improvement in ASR over the baseline Distributed Backdoor Attack (DBA), which suggests that ADCA benefits more from careful trigger design and optimization than the baseline approach does.
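For readers unfamiliar with the metric, the following is a minimal sketch of how ASR is commonly computed: the fraction of triggered inputs that a classifier assigns to the attacker's target class. Excluding samples whose true label already equals the target is a common convention assumed here, not something the article specifies, and the function name and sample values are made up.

```python
import numpy as np

def attack_success_rate(preds_on_triggered, true_labels, target_label):
    """Fraction of triggered, non-target-class inputs predicted as the target."""
    preds = np.asarray(preds_on_triggered)
    labels = np.asarray(true_labels)
    # Assumed convention: samples already in the target class are ignored,
    # since predicting the target for them is not evidence of a backdoor.
    eligible = labels != target_label
    if not eligible.any():
        raise ValueError("no samples outside the target class")
    return float(np.mean(preds[eligible] == target_label))

# Made-up predictions for five triggered images with target class 3.
asr = attack_success_rate(
    preds_on_triggered=[3, 3, 1, 3, 0],
    true_labels=[0, 1, 1, 3, 2],
    target_label=3,
)
```

In this toy case four samples are eligible and two of them are pushed to the target class, giving an ASR of 0.5.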

The Illusion of Defense: Current Limitations and Future Battles

Current defenses against backdoor attacks such as ADCA, including PatchSearch, PoisonCAM, and EmInspector, largely center on enhancing model robustness and pinpointing potentially compromised training data. These techniques attempt to either correct manipulated samples or discard them before they can influence the global model. However, the subtle nature of ADCA, which leaves performance on clean inputs largely intact and spreads the trigger across several clients, presents a significant challenge. Existing methods often struggle to distinguish naturally noisy data from maliciously crafted perturbations, leading to a high rate of false positives or, critically, a failure to detect sophisticated attacks that prioritize stealth over immediate disruption. Consequently, while these defenses offer some initial protection, their effectiveness is limited against determined adversaries employing ADCA-style strategies.

Noise-based defenses such as FLAME introduce a complex interplay between security and performance. FLAME combines clustering of client updates, norm clipping, and carefully calibrated noise of the kind used in Differential Privacy, added to the aggregated model, to blunt the influence of poisoned contributions. However, this added noise inevitably impacts model accuracy: the more noise is injected, the greater the potential degradation in performance. Researchers are actively exploring methods to optimize this trade-off, seeking to minimize accuracy loss while keeping the safeguards robust, recognizing that a delicate balance is crucial for the practical implementation of secure federated learning systems.
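The noise-versus-accuracy trade-off can be seen in a generic clip-and-noise aggregation step. This is a simplified sketch of the general idea only, not FLAME's actual pipeline; the function name `clip_and_noise` and the parameters `clip_norm` and `noise_std` are assumptions chosen for illustration.

```python
import numpy as np

def clip_and_noise(updates, clip_norm, noise_std, rng):
    """Clip each update to clip_norm, average, then add Gaussian noise."""
    clipped = []
    for u in updates:
        u = np.asarray(u, dtype=float)
        norm = np.linalg.norm(u)
        # Scale down only updates whose L2 norm exceeds the clipping bound.
        scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
        clipped.append(u * scale)
    aggregate = np.mean(clipped, axis=0)
    # Larger noise_std masks outlier influence more, but costs accuracy.
    return aggregate + rng.normal(0.0, noise_std, size=aggregate.shape)

rng = np.random.default_rng(0)
updates = [[3.0, 4.0], [0.6, 0.8]]   # norms 5.0 and 1.0 (made-up values)
no_noise = clip_and_noise(updates, clip_norm=1.0, noise_std=0.0, rng=rng)
with_noise = clip_and_noise(updates, clip_norm=1.0, noise_std=0.1, rng=rng)
```

With `noise_std=0.0` the oversized update is merely clipped to the same norm as the small one; raising `noise_std` perturbs the aggregate itself, which is where the accuracy cost comes from.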

FLTrust presents a proactive defense against adversarial attacks in federated learning by evaluating the trustworthiness of individual client updates before aggregation. The server computes a reference update on a small, clean root dataset and assigns each client update a trust score according to how closely its direction agrees with that reference, diminishing the influence of potentially poisoned updates. However, the efficacy of FLTrust hinges on careful calibration: scoring that is too strict can down-weight legitimate updates as if they were malicious, producing false positives and hindering model convergence. Conversely, scoring that is too lenient may fail to detect sophisticated attacks, leaving the global model vulnerable. Ongoing research focuses on dynamic calibration strategies that balance robust defense against malicious actors with the preservation of valuable contributions from all participating clients.
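A trust-weighted aggregation of this kind can be sketched as follows. As published, FLTrust derives its scores from the cosine similarity between each client update and a reference update the server computes on a small clean dataset; the code below is a simplified illustration of that idea, with a hypothetical function name and made-up vectors, not the reference implementation.

```python
import numpy as np

def trust_weighted_aggregate(client_updates, server_update):
    """Weight client updates by clipped cosine similarity to a server reference."""
    g0 = np.asarray(server_update, dtype=float)
    g0_norm = np.linalg.norm(g0)
    if g0_norm == 0:
        raise ValueError("server reference update must be non-zero")
    weighted = np.zeros_like(g0)
    total_score = 0.0
    for g in client_updates:
        g = np.asarray(g, dtype=float)
        norm = np.linalg.norm(g)
        if norm == 0:
            continue
        cosine = float(g @ g0) / (norm * g0_norm)
        score = max(cosine, 0.0)               # opposing directions get zero trust
        # Rescale each update to the reference magnitude before weighting.
        weighted += score * (g0_norm / norm) * g
        total_score += score
    return weighted / total_score if total_score > 0 else np.zeros_like(g0)

server_ref = [1.0, 0.0]
clients = [[2.0, 0.0], [0.0, 3.0], [-1.0, 0.0]]   # aligned, orthogonal, opposed
aggregate = trust_weighted_aggregate(clients, server_ref)
```

Only the aligned client influences the result here, which also hints at the weakness the article raises: a colluding update that stays roughly aligned with the reference would still receive a positive score.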

The pursuit of ever more sophisticated defenses in federated learning, as detailed in this exploration of the Attention-Driven Multi-Party Collusion Attack, feels predictably cyclical. It’s a constant escalation, a refinement of techniques to bypass the latest safeguards. One recalls Donald Davies stating, “The computer is a universal machine, and it will do what you tell it to do, but it won’t necessarily do what you want it to do.” This sentiment rings particularly true here. The attack’s clever use of trigger decomposition and attention mechanisms isn’t innovation, merely a more intricate way to exploit the inherent vulnerabilities of distributed systems. Everything new is just the old thing with worse docs, and this attack is no different – a complex re-packaging of familiar weaknesses.

The Road Ahead

This exploration of attention-driven collusion attacks in federated learning exposes, yet again, the fragility of distributed systems. It’s comforting, in a bleak way, that even with all the cleverness around self-supervision and privacy-preserving techniques, a well-placed trigger decomposition still throws everything into disarray. If a system crashes consistently, at least it’s predictable. The authors rightly point to the effectiveness against current defenses, but those defenses were, let’s be honest, optimistic at best.

The real challenge isn’t just detecting these attacks – it’s accepting that detection will always be a moving target. Future work will undoubtedly focus on more sophisticated trigger designs, or perhaps attempting to quantify the ‘collusion budget’ of a federation – how much malicious coordination can the system tolerate before collapsing? One suspects it’s a very low number. The pursuit of ‘cloud-native’ security feels particularly absurd given these findings; it’s the same mess, just more expensive.

Ultimately, this research contributes to a growing body of evidence suggesting that we don’t write code – we leave notes for digital archaeologists. They’ll likely marvel at the lengths taken to almost secure a system fundamentally designed to be compromised. Perhaps they’ll even find a use for all those attention mechanisms.

Original article: https://arxiv.org/pdf/2602.05612.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-09 04:02