Author: Denis Avetisyan

A new caching scheme dramatically improves transmission rates by intelligently organizing and delivering data to multiple users simultaneously.

This work introduces a Cyclic Multi-Access Non-Half-Sum Disjoint Packing approach to achieve linear subpacketization and enhance performance in multi-access caching systems.

Achieving both linear subpacketization and low transmission load remains a significant challenge in multi-access coded caching systems. This paper introduces a novel construction, detailed in ‘A New Construction Structure on Multi-access Coded Caching with Linear Subpacketization: Cyclic Multi-Access Non-Half-Sum Disjoint Packing’, leveraging a cyclic multi-access non-half-sum disjoint packing (CMA-NHSDP) structure. The proposed scheme yields a new class of caching schemes that demonstrably reduces transmission load compared to existing linear and, in some cases, exponential subpacketization approaches. Could this combinatorial structure unlock further optimizations in large-scale, multi-user caching networks?

The Inevitable Chaos of Multi-Access Caching

The modern internet experience is fundamentally built upon caching – the temporary storage of frequently accessed data closer to users – to dramatically reduce delays and network congestion. However, traditional caching techniques, designed for single-user or limited-access scenarios, are increasingly challenged by the demands of multi-access edge computing. As content delivery networks serve a growing number of simultaneous users – each requesting diverse and dynamic content – conventional caching strategies struggle to maintain efficiency. This leads to increased bandwidth consumption, higher latency, and a diminished quality of service, particularly during peak demand. Consequently, innovative approaches are needed to intelligently manage cached content in multi-access environments, optimizing resource allocation and ensuring a seamless user experience for all.

Effective content delivery to multiple users hinges on caching strategies that go beyond simply storing popular files. Intelligent caching dynamically prioritizes content based on anticipated demand and user proximity, minimizing redundant transmissions and maximizing the efficient use of limited bandwidth. These systems often employ predictive algorithms – analyzing historical data and real-time network conditions – to proactively cache content before a user even requests it. Furthermore, advanced techniques like cooperative caching, where caches collaborate to share content, and edge caching, bringing content closer to the end-user, significantly reduce latency and improve the overall user experience. The goal is not merely to increase cache hit rates, but to optimize the entire content delivery pipeline, ensuring that each user receives the desired content with minimal delay and resource consumption.

Current content caching techniques, while effective for single users or small groups, demonstrate limitations when confronted with a surge in concurrent requests and constrained network capacity. Traditional caching strategies often rely on replicating popular content across multiple servers, but this approach becomes inefficient as the number of users grows, leading to increased bandwidth consumption for updates and diminished returns on cache hit rates. The core issue lies in the difficulty of predicting which content will be most frequently accessed by a large, diverse user base under bandwidth limitations, resulting in redundant data storage and frequent misses. Consequently, researchers are actively exploring innovative solutions, including intelligent prefetching, collaborative caching, and content placement algorithms, aimed at optimizing resource allocation and delivering a seamless experience even under high-demand conditions.

Placement Delivery Arrays: The Foundation, and the Limits

The Placement Delivery Array (PDA) is a core component of coded caching systems: a single array that dictates both what each user caches and what the server transmits. In the standard formulation, a PDA has $F$ rows, one for each packet into which every file is split, and $K$ columns, one for each user. Each entry is either a star or a positive integer. A star in row $j$, column $k$ means that user $k$ caches packet $j$ of every file; an integer $s$ in that position means that user $k$ obtains packet $j$ of its requested file from the coded message sent in transmission $s$. This structure fundamentally defines the caching scheme: the number of stars per column fixes the cache size, the number of rows fixes the subpacketization, and the number of distinct integers fixes the transmission load.
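To make the array structure concrete, here is a minimal sketch that checks the three conditions usually required of a PDA in the coded caching literature: every column holds the same number of stars, the integers run from 1 to S without gaps, and any two cells sharing an integer lie in different rows and columns with stars at the two "opposite corners". The 3x3 array and the names `EXAMPLE_PDA` and `is_pda` are illustrative assumptions, not taken from the paper.

```python
from itertools import combinations

STAR = "*"

# Hypothetical example: rows are packets, columns are users.
EXAMPLE_PDA = [
    [STAR, 1, 2],
    [1, STAR, 3],
    [2, 3, STAR],
]


def is_pda(array):
    """Return True if `array` satisfies the three standard PDA conditions."""
    num_rows, num_cols = len(array), len(array[0])

    # Condition 1: every column holds the same number of stars,
    # so every user caches the same amount of data.
    stars_per_column = {
        sum(array[j][k] == STAR for j in range(num_rows))
        for k in range(num_cols)
    }
    if len(stars_per_column) != 1:
        return False

    # Record which cells each integer occupies.
    cells = {}
    for j in range(num_rows):
        for k in range(num_cols):
            if array[j][k] != STAR:
                cells.setdefault(array[j][k], []).append((j, k))

    # Condition 2: the integers used are exactly 1..S, none skipped.
    if sorted(cells) != list(range(1, len(cells) + 1)):
        return False

    # Condition 3: two cells with the same integer sit in different rows
    # and columns, and the two opposite corners are stars.
    for positions in cells.values():
        for (j1, k1), (j2, k2) in combinations(positions, 2):
            if j1 == j2 or k1 == k2:
                return False
            if array[j1][k2] != STAR or array[j2][k1] != STAR:
                return False
    return True


example_is_valid = is_pda(EXAMPLE_PDA)
```

The third condition is what makes coded delivery work: every user served by a given transmission already caches the packets intended for the other users served by it.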

Uncoded placement within a Placement Delivery Array (PDA) means that caches store packets of the files exactly as they are, without combining or encoding them before storage; this contrasts with coded placement, where caches hold coded combinations of packets. Complementing uncoded placement, one-shot delivery means that each user recovers any requested packet from a single transmitted message together with its own cache contents, rather than by jointly processing several transmissions. The combination simplifies the caching process by reducing the computational overhead of encoding and decoding, though potentially at the cost of smaller caching gains than more complex schemes achieve.
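The following sketch simulates uncoded placement and one-shot delivery for a small shared-link example. It assumes the usual reading of a PDA (a star means "packet cached by this user", an integer labels the transmission that serves the cell) and uses XOR as the coding operation. The array, the file contents, and the names `simulate_delivery`, `FILES`, and `DEMANDS` are hypothetical.

```python
STAR = "*"

# Hypothetical 3-packet, 3-user array.
PDA = [
    [STAR, 1, 2],
    [1, STAR, 3],
    [2, 3, STAR],
]


def xor_bytes(a, b):
    """Bytewise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def simulate_delivery(pda, files, demands):
    """files[n][j] is packet j of file n; demands[k] is the file user k wants."""
    num_packets, num_users = len(pda), len(pda[0])
    packet_len = len(files[0][0])

    # Uncoded placement: user k stores packet j of every file, unmodified,
    # wherever the array holds a star in row j, column k.
    caches = [
        {(n, j): files[n][j]
         for n in range(len(files))
         for j in range(num_packets) if pda[j][k] == STAR}
        for k in range(num_users)
    ]

    slots = sorted({e for row in pda for e in row if e != STAR})
    recovered = [dict() for _ in range(num_users)]
    for s in slots:
        cells = [(j, k) for j in range(num_packets)
                 for k in range(num_users) if pda[j][k] == s]
        # The server XORs together the requested packets of all cells labelled s.
        message = bytes(packet_len)
        for j, k in cells:
            message = xor_bytes(message, files[demands[k]][j])
        # One-shot decoding: each served user strips the other packets
        # using only this one message and its own cache.
        for j, k in cells:
            packet = message
            for j2, k2 in cells:
                if (j2, k2) != (j, k):
                    packet = xor_bytes(packet, caches[k][(demands[k2], j2)])
            recovered[k][j] = packet

    # Reassemble each user's requested file from cached and decoded packets.
    decoded_files = []
    for k in range(num_users):
        parts = [
            caches[k][(demands[k], j)] if pda[j][k] == STAR else recovered[k][j]
            for j in range(num_packets)
        ]
        decoded_files.append(b"".join(parts))
    return decoded_files, len(slots)


# Three files of three 4-byte packets each; user k requests file k.
FILES = [[bytes([16 * n + j]) * 4 for j in range(3)] for n in range(3)]
DEMANDS = [0, 1, 2]
decoded, transmissions = simulate_delivery(PDA, FILES, DEMANDS)
```

In this toy case the server sends three coded packets, whereas sending every missing packet separately would take six, since each of the three users lacks two packets.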

The Placement Delivery Array (PDA) serves as the foundational structure for implementing and evaluating advanced caching schemes; its design directly impacts system performance metrics such as peak rate, latency, and overall throughput. More complex caching strategies, including those utilizing coding techniques to enhance reliability or capacity, are built upon the PDA’s defined placement of data and delivery mechanisms. Consequently, the complexity of the PDA – specifically, the number of required transmissions and the computational overhead of encoding/decoding – directly translates to the computational and communication overhead of the entire caching system. Optimizing the PDA for a given network topology and user demand is therefore critical for achieving efficient and scalable caching solutions.
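As a rough illustration of how a PDA fixes the headline quantities of a scheme, the sketch below reads them off an array. It assumes the original shared-link setting, where an array with F rows, K columns, Z stars per column, and S distinct integers gives memory ratio Z/F, load S/F, and subpacketization F; how these map onto cache-node memory in the multi-access setting is not covered here. The function name `pda_parameters` and the example array are hypothetical.

```python
from fractions import Fraction

STAR = "*"

# Hypothetical 3-packet, 3-user array.
EXAMPLE_PDA = [
    [STAR, 1, 2],
    [1, STAR, 3],
    [2, 3, STAR],
]


def pda_parameters(pda):
    """Read K, F, Z, S and the derived ratios off a valid PDA."""
    num_packets = len(pda)       # F: subpacketization
    num_users = len(pda[0])      # K: number of users
    # Z: stars per column (a valid PDA has the same count in every column,
    # so the first column is representative).
    stars = sum(row[0] == STAR for row in pda)
    # S: number of distinct integers, i.e. number of coded transmissions.
    num_slots = len({e for row in pda for e in row if e != STAR})
    return {
        "K": num_users,
        "F": num_packets,
        "Z": stars,
        "S": num_slots,
        "memory_ratio": Fraction(stars, num_packets),  # M/N = Z/F
        "load": Fraction(num_slots, num_packets),      # R = S/F
    }


params = pda_parameters(EXAMPLE_PDA)
```

Here F equals K, which is the kind of linear subpacketization the paper targets; many classical constructions instead need F to grow exponentially with K.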

Non-Half-Sum Disjoint Packing: A Clever Trick, But Not a Panacea

Non-half-sum disjoint packing (NHSDP) is a combinatorial structure utilized in the construction of efficient coded caching schemes. It functions by partitioning a set of files into disjoint subsets, ensuring that the sum of the sizes of any two subsets does not exceed the total cache size. This constraint is written $\sum_{i \in S} |f_i| \leq C$, where $C$ is the total cache size, $f_i$ represents a file, and the sum runs over the file indices $i$ in $S$. This property allows for the creation of coded packets containing combinations of files, enabling multiple users to access different files from the same packet, thereby reducing overall transmission load. The core principle of NHSDP is to maximize the number of files that can be cached while minimizing redundancy, which directly translates to improved cache performance and reduced bandwidth requirements in content delivery networks and multi-access caching systems.

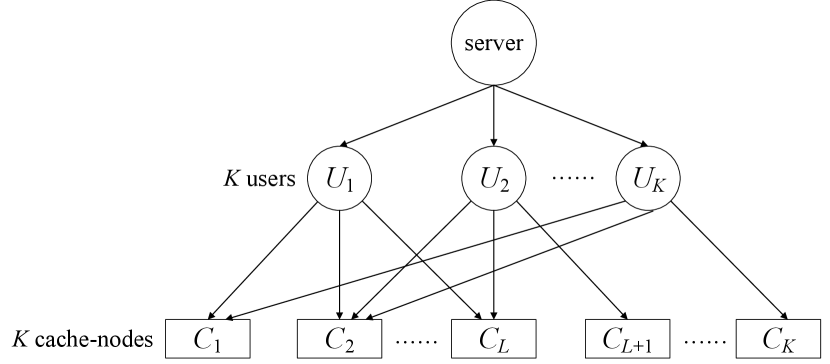

CMA-NHSDP is a caching scheme developed by integrating the principles of Non-Half-Sum Disjoint Packing (NHSDP) with Cyclic Multi-Access (CMA). This integration is specifically designed to address the requirements of multi-access caching scenarios, such as those found in Multi-Access Coded Caching (MACC) systems. By leveraging the cyclic topology inherent in MACC and combining it with the disjoint packing characteristics of NHSDP, CMA-NHSDP aims to improve data delivery efficiency and reduce transmission overhead in environments where multiple users simultaneously request cached content. The method’s core function is to strategically partition and distribute data across caching nodes to maximize the number of satisfied requests with minimal transmission requirements.
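The cyclic topology mentioned above can be sketched in a few lines. This assumes the common wrap-around model in which cache nodes sit on a ring and each user reaches a fixed number of consecutive nodes starting at its own index. The zero-based indexing, the direction of the wrap, and the names `accessible_cache_nodes`, `users_served_by`, and `access_degree` are illustrative assumptions rather than the paper's notation.

```python
def accessible_cache_nodes(user, num_nodes, access_degree):
    """Cache nodes reachable by `user` on a ring of `num_nodes` nodes."""
    return [(user + offset) % num_nodes for offset in range(access_degree)]


def users_served_by(node, num_nodes, access_degree):
    """Users that can read from `node` under the same wrap-around rule."""
    return sorted((node - offset) % num_nodes for offset in range(access_degree))


# Example: 5 cache nodes, each user reaches 2 consecutive nodes.
last_user_nodes = accessible_cache_nodes(4, 5, 2)   # wraps around the ring
node_zero_users = users_served_by(0, 5, 2)
```

Because neighbouring users share cache nodes, the content placed on one node is visible to several users at once, which is the overlap a multi-access construction has to exploit.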

CMA-NHSDP achieves a quantifiable reduction in transmission load when applied to multi-access coded caching (MACC) systems. Specifically, the scheme demonstrates a transmission load reduction of at least $(2 - 2q)^n$ compared to the Recursive Knowledge 1 (RK1) scheme. This result holds under the condition $K \geq 3nL$, where $K$ is the number of cache nodes, $L$ is the number of neighboring cache nodes each user can access, and $n$ is a parameter of the construction. The performance gain is attributed to the cyclic topology of the MACC system, which allows efficient data sharing and reduces redundant transmissions.

Refining the Foundation: Integer Optimization and the Illusion of Control

Integer optimization is utilized within Placement Delivery Array (PDA) construction to identify parameter sets that maximize performance according to defined criteria. This mathematical technique formulates the PDA construction problem as an integer program, where decision variables represent discrete choices regarding caching and data transmission. By defining an objective function, such as minimizing transmission rate, subject to constraints representing system limitations and user demands, integer optimization algorithms can systematically search for the optimal configuration. The resulting integer solutions provide concrete values for the parameters governing the PDA construction process, leading to improved performance metrics compared to heuristic or non-optimized approaches.

Integer optimization is utilized within the CMA-NHSDP framework to determine the optimal allocation of caching resources, directly minimizing the required transmission rate while simultaneously meeting all user data demands. This optimization process involves defining an objective function representing the total transmission rate and a set of constraints ensuring each user’s requested data is satisfied through cached content or reliable transmission. By formulating the caching problem as an integer program, discrete decisions regarding content placement are made to achieve the lowest possible transmission rate, leading to demonstrable improvements in network efficiency and reduced bandwidth consumption. The resulting solution provides a quantifiable reduction in data transmission compared to alternative caching schemes.

The implemented caching scheme, optimized through integer programming, demonstrably reduces subpacketization requirements. Specifically, when the number of caches $K$ equals $qL$, the scheme achieves a subpacketization reduction of $2^n / (q^n - \lfloor q-1 \rfloor^n + 1)$ compared to the conventional Caching with Write-back (CW) scheme. Furthermore, under the same condition ($K = qL$), this approach provides a guaranteed subpacketization reduction of at least 1 when benchmarked against both the RK1 and CW schemes, indicating improved efficiency and scalability for content delivery networks.

Towards Advanced Caching: L-Continuous PDAs and the Never-Ending Quest

The conventional Placement Delivery Array (PDA), the structure underlying the schemes discussed above, operates under constraints that can limit its performance in multi-access settings. Researchers have extended this established framework by incorporating L-continuous constraints, resulting in L-continuous PDAs. This refinement introduces a significant degree of flexibility, allowing the array design to adapt more effectively to the multi-access setting. By relaxing traditional constraints, L-continuous PDAs can explore a wider range of possible placement and delivery arrangements. The resulting optimization potential is substantial, enabling more efficient data delivery and ultimately enhancing the performance of caching systems.

Advanced Placement Delivery Arrays (PDAs) significantly enhance data delivery efficiency, especially within the fluctuating demands of dynamic caching environments. Traditional caching systems often struggle to adapt to rapidly changing user needs and content popularity, leading to increased latency and reduced hit rates. L-continuous PDAs, however, offer a more nuanced approach to organizing cached data, allowing the system to prioritize and deliver content with greater accuracy. This adaptability is crucial in scenarios where network conditions are unstable or user behavior is unpredictable, ensuring a smoother and more responsive experience. By pre-positioning content carefully, these PDAs minimize delays and maximize the utilization of caching resources, ultimately improving overall system performance and user satisfaction.

The convergence of L-continuous Placement Delivery Arrays (PDAs), the CMA-NHSDP caching scheme, and integer optimization techniques establishes a fertile ground for advancements in multi-access caching systems. This combined approach allows for a more nuanced and adaptable caching strategy, moving beyond traditional, static assignments. By leveraging the flexibility of L-continuous PDAs to model data placement, and combining this with the efficiency of CMA-NHSDP in determining cache allocations, researchers can formulate and solve complex caching problems as integer programs. This unlocks the potential for significant gains in data delivery efficiency, reduced latency, and improved resource utilization, particularly in dynamic network environments where content demand and network conditions are constantly changing. Further investigation into this combined methodology promises to yield innovative solutions for next-generation caching architectures.

The pursuit of elegant caching schemes, as demonstrated by this Cyclic Multi-Access Non-Half-Sum Disjoint Packing proposal, invariably invites future complications. This paper aims to optimize transmission rates with linear subpacketization, a laudable goal, yet one likely to introduce new failure modes once deployed at scale. As Edsger W. Dijkstra observed, “It’s not that I’m against documentation, but it’s too often a collective self-delusion.” This neatly encapsulates the problem; a perfectly modeled system on paper rarely survives contact with production realities. The inherent complexity of multi-access caching ensures that some unforeseen interaction will eventually expose a weakness, rendering today’s innovation tomorrow’s technical debt. If a bug is reproducible, one can at least claim a stable system, but perfect prevention is an illusion.

What’s Next?

This Cyclic Multi-Access Non-Half-Sum Disjoint Packing scheme, with its promise of linear subpacketization, feels…familiar. It’s a refinement, certainly, a clever rearrangement of the usual tradeoffs between computation and communication. One suspects production systems will quickly reveal that ‘linear’ isn’t quite as forgiving as the simulations suggest, especially when confronted with actual channel noise and user demand. The core problem, of course, doesn’t vanish – caching is still about anticipating need, and anticipation is always imperfect.

Future work will inevitably focus on extending this to more complex network topologies – because it always does. Expect to see layers of abstraction piled on top, each promising to solve the ‘real world’ issues that inevitably emerge. Then, someone will propose a ‘meta-packing’ algorithm to optimize the optimization algorithms. It’s a beautiful cycle, really.

One anticipates that the true measure of this work won’t be its theoretical gains, but how easily it breaks when someone tries to deploy it at scale. The researchers have moved a few pieces around on the board, and that’s commendable. But everything new is just the old thing with worse docs.

Original article: https://arxiv.org/pdf/2601.10510.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 04:42