Author: Denis Avetisyan

A new approach uses reinforcement learning to automatically tune fully homomorphic encryption, dramatically improving performance and reducing computational overhead.

Researchers introduce CHEHAB, a compiler that leverages AI to optimize code for fully homomorphic encryption, enhancing speed and minimizing noise accumulation.

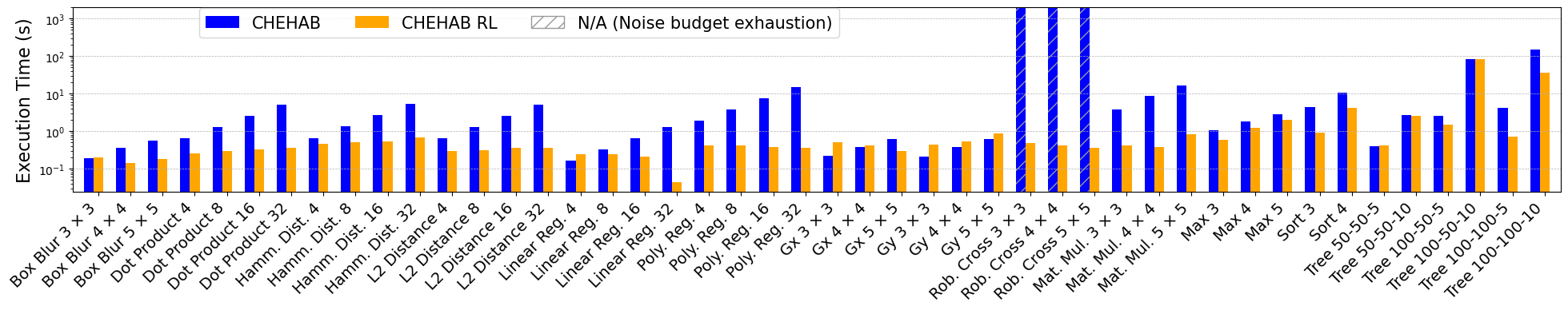

Despite the promise of secure computation on encrypted data, the high computational cost of Fully Homomorphic Encryption (FHE) remains a significant barrier to widespread adoption. The paper "CHEHAB RL: Learning to Optimize Fully Homomorphic Encryption Computations" introduces a framework that uses deep reinforcement learning to automate FHE code optimization, achieving substantial performance gains without requiring specialized cryptographic expertise. By training an RL agent to strategically apply rewriting rules, CHEHAB RL vectorizes scalar FHE code, reducing both execution latency and noise accumulation: the authors report a 5.3x speedup and a 2.54x noise reduction compared to state-of-the-art compilers like Coyote. Can this approach pave the way for practical, efficient FHE deployments across a wider range of applications?

The Privacy Paradox: When Security Becomes the Bottleneck

The increasing reliance on data-driven insights across industries has created a fundamental tension between utility and privacy. Traditional data analysis techniques invariably require plaintext access to sensitive information – be it personal health records, financial transactions, or proprietary business data – exposing it to potential breaches and misuse. While anonymization and differential privacy offer partial solutions, they often come at the cost of analytical accuracy or introduce limitations on the types of queries that can be performed. This necessitates a paradigm shift where computations can be performed directly on encrypted data, eliminating the need for decryption until the final result is obtained – a critical requirement for maintaining data confidentiality in an age of escalating cyber threats and stringent data protection regulations. The demand for robust privacy-preserving technologies is therefore not merely a technical challenge, but a core imperative for fostering trust and enabling responsible data innovation.

Fully Homomorphic Encryption (FHE) represents a paradigm shift in data security by allowing computations to be performed directly on ciphertext – encrypted data – without requiring decryption. This breakthrough fundamentally alters the traditional security-utility trade-off; previously, accessing data for analysis necessitated exposing it in plaintext, creating vulnerabilities. With FHE, sensitive information, such as financial records, personal health data, or proprietary algorithms, remains encrypted throughout the entire processing lifecycle. The result is a secure environment where computations – including complex operations like machine learning inference or statistical analysis – can be validated and performed with guaranteed privacy. This capability unlocks a future where data insights are obtainable without compromising confidentiality, offering transformative potential across numerous sectors, from healthcare and finance to government and cloud computing.

While Fully Homomorphic Encryption (FHE) presents a compelling solution for data privacy, its practical implementation is hindered by substantial performance challenges. The core issue lies in the computational overhead – performing even basic operations on encrypted data requires significantly more processing power than on plaintext. This isn’t simply a matter of scaling; the encryption process itself introduces a form of ‘noise’ that accumulates with each computation. Achieving the ideal of computation on encrypted data, f(E(x), E(y)) = E(f(x, y)), requires complex mathematical techniques to manage this noise and prevent decryption errors. Without careful implementation, the signal becomes lost within the noise, rendering the results unusable. Current research focuses on minimizing this overhead through algorithmic optimizations and the development of noise-reduction techniques, but a significant performance gap remains between FHE and traditional computation methods.
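The identity f(E(x), E(y)) = E(f(x, y)) and the role of noise can be made concrete with a toy example. The sketch below is a stripped-down, insecure, integer-based scheme for single bits, chosen only because it fits in a few lines; it is not the scheme CHEHAB targets, and the function names and parameter sizes are assumptions made for illustration. Adding ciphertexts XORs the hidden bits, multiplying them ANDs the bits, and decryption stays correct only while the noise term is smaller than the secret key.

```python
import random

def keygen(bits=64):
    """Secret key: a random odd integer p with its top bit set (toy size)."""
    return random.getrandbits(bits) | (1 << (bits - 1)) | 1

def encrypt(p, bit, noise_bits=8, mask_bits=128):
    """Hide one bit as p*q + 2*r + bit; the part 2*r + bit is the noise term."""
    q = random.getrandbits(mask_bits)
    r = random.getrandbits(noise_bits)
    return p * q + 2 * r + bit

def noise(p, c):
    """Noise currently carried by ciphertext c (needs the secret key to measure)."""
    return c % p

def decrypt(p, c):
    """Correct only while the noise is smaller than p."""
    return (c % p) % 2

p = keygen()
for x in (0, 1):
    for y in (0, 1):
        cx, cy = encrypt(p, x), encrypt(p, y)
        # Homomorphic addition: the hidden bits are XORed.
        assert decrypt(p, cx + cy) == x ^ y
        # Homomorphic multiplication: the hidden bits are ANDed.
        assert decrypt(p, cx * cy) == x & y
        # Addition adds the noise terms; multiplication multiplies them.
        assert noise(p, cx + cy) == noise(p, cx) + noise(p, cy)
        assert noise(p, cx * cy) == noise(p, cx) * noise(p, cy)
```

In this toy setting a fresh ciphertext carries at most 9 bits of noise and the key has 64 bits, so a few chained multiplications are enough to push the noise past the key and corrupt decryption, which is the failure mode described above.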

The Circuit’s Curse: Depth, Noise, and the Limits of Encryption

Circuit depth in Fully Homomorphic Encryption (FHE) refers to the number of sequential operations required to evaluate a function on encrypted data. Increased circuit depth directly correlates with decreased performance, as each operation introduces latency. Simultaneously, depth exacerbates noise accumulation; FHE schemes operate with noisy ciphertexts, and each gate applied to these ciphertexts adds further noise. Noise growth is not uniform across gates; certain operations, notably multiplications, contribute significantly more noise than additions. If the noise exceeds a predefined threshold, the decryption process will fail, rendering the computation incorrect. Therefore, minimizing circuit depth is crucial for both practical execution speed and maintaining the integrity of the homomorphic computation. As a simplified model, the relationship can be written as: Noise_{total} = Noise_{initial} + \sum_{i=1}^{depth} Noise_{gate_i}, where Noise_{gate_i} represents the noise introduced by the i-th gate in the circuit.
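As a worked instance of the noise formula above, the following sketch tallies noise along two paths through a circuit. The per-gate costs and the threshold are hypothetical values in arbitrary units, picked only to show that a shorter path with more multiplications can fail where a longer path with mostly additions succeeds; real costs depend on the scheme and its parameters.

```python
# Hypothetical per-gate noise costs in arbitrary units (not taken from the paper).
GATE_NOISE = {"add": 1, "mul": 10}

def total_noise(initial, gates):
    """Noise_total = Noise_initial + sum of the noise added by each gate."""
    return initial + sum(GATE_NOISE[g] for g in gates)

def decrypts(initial, gates, threshold):
    """Decryption succeeds only if the accumulated noise stays within the threshold."""
    return total_noise(initial, gates) <= threshold

path_a = ["mul", "mul", "add"]          # 3 gates, 2 multiplications
path_b = ["mul", "add", "add", "add"]   # 4 gates, 1 multiplication

print(total_noise(5, path_a), decrypts(5, path_a, threshold=20))
print(total_noise(5, path_b), decrypts(5, path_b, threshold=20))
```

With these assumed numbers, the three-gate path accumulates 26 units and fails, while the four-gate path accumulates 18 and decrypts correctly.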

Multiplicative depth within a Fully Homomorphic Encryption (FHE) circuit is a primary determinant of successful decryption. Each multiplication operation introduces noise into the ciphertext; this noise accumulates with each subsequent multiplication, increasing the probability of decryption failure. The level of noise introduced is dependent on the chosen FHE scheme and parameters, but fundamentally, a higher multiplicative depth necessitates a larger initial ciphertext modulus to accommodate the amplified noise. If the noise exceeds the threshold defined by the modulus, the decryption process will yield an incorrect result. Therefore, minimizing the number of multiplicative operations – reducing multiplicative depth – is a crucial optimization strategy in FHE circuit design to maintain data integrity and ensure accurate computation.
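Multiplicative depth is easy to compute once a circuit is written as an expression tree. The sketch below uses a hypothetical two-class representation (`Var` and `Op` are illustrative names, not CHEHAB's internal IR) and compares two ways of multiplying four inputs: a left-leaning chain and a balanced tree. Both use three multiplications, but the balanced form needs one fewer level.

```python
from dataclasses import dataclass
from typing import Union

@dataclass(frozen=True)
class Var:
    name: str

@dataclass(frozen=True)
class Op:
    kind: str          # "add" or "mul"
    left: "Expr"
    right: "Expr"

Expr = Union[Var, Op]

def mult_depth(e):
    """Largest number of multiplications on any path from an input to the output."""
    if isinstance(e, Var):
        return 0
    below = max(mult_depth(e.left), mult_depth(e.right))
    return below + 1 if e.kind == "mul" else below

a, b, c, d = (Var(n) for n in "abcd")

chain = Op("mul", Op("mul", Op("mul", a, b), c), d)      # ((a*b)*c)*d
balanced = Op("mul", Op("mul", a, b), Op("mul", c, d))   # (a*b)*(c*d)

print(mult_depth(chain), mult_depth(balanced))
```

The chain has multiplicative depth 3 and the balanced tree has depth 2, so the same product can be evaluated with a smaller noise budget simply by regrouping.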

Effective noise management in Fully Homomorphic Encryption (FHE) is critical because each operation, particularly multiplication, introduces noise that can overwhelm the encrypted data and render decryption impossible. Minimizing circuit depth (the number of sequential operations) directly mitigates noise accumulation; however, reducing depth must not compromise the intended functionality of the computation. Strategies for noise control therefore involve algorithmic choices and circuit optimization techniques aimed at achieving the lowest possible multiplicative depth while maintaining the necessary computational expressiveness. This often requires trade-offs between circuit complexity, computational efficiency, and acceptable error rates, necessitating careful analysis and implementation to ensure the reliability of homomorphic computations.

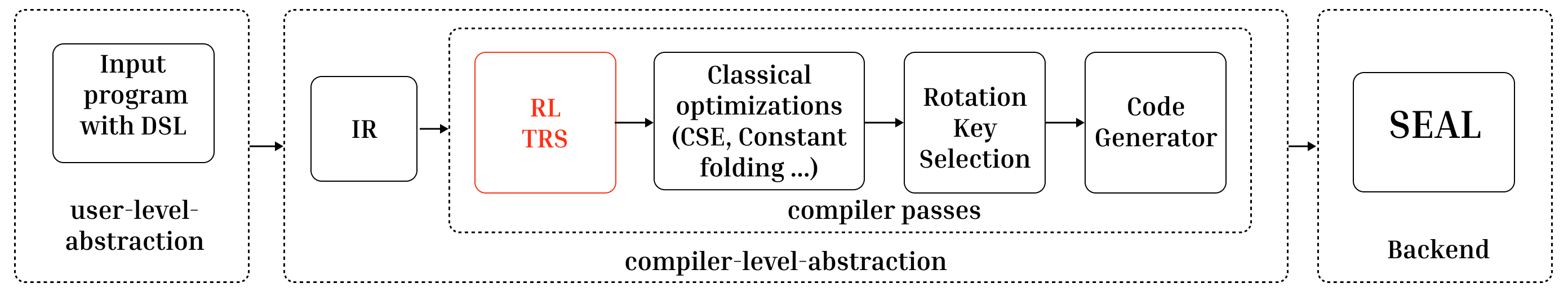

CHEHAB: Teaching the Machine to Encrypt

CHEHAB is a compiler designed to optimize code intended for Fully Homomorphic Encryption (FHE) schemes. Utilizing reinforcement learning (RL), CHEHAB automates the process of performance and accuracy tuning, addressing the complexities of FHE circuit optimization. The compiler learns to apply code transformations – known as rewrite rules – to improve the efficiency of FHE computations without requiring manual intervention. This automated optimization focuses on minimizing both execution time and the accumulation of noise, a critical factor in maintaining the integrity of FHE results. By employing an RL-driven approach, CHEHAB aims to provide a more efficient and accessible solution for deploying FHE in practical applications.

The reinforcement learning (RL) agent within CHEHAB operates by learning a policy that dictates the selection of circuit rewrite rules. These rules are transformations applied to the homomorphic encryption (HE) circuit with the goal of improving performance characteristics. The agent’s decision-making process is directly informed by the current state of the circuit, which is defined by its structural properties and the characteristics of the operations within it. Through repeated interaction with the circuit and evaluation of the resulting changes, the RL agent learns to identify and apply the most effective rewrite rules for a given circuit state, ultimately optimizing the code for both speed and noise reduction.
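The loop described above (inspect the circuit state, pick a rewrite rule, apply it, repeat) can be sketched in a few lines. Everything here is an illustrative assumption: the two rules are generic algebraic identities rather than CHEHAB's actual rule set, circuits are nested tuples, and a greedy cost comparison stands in for the learned policy, which in the real system is a trained network.

```python
def depth(e):
    """Multiplicative depth of a nested-tuple expression; strings are inputs."""
    if isinstance(e, str):
        return 0
    op, left, right = e
    below = max(depth(left), depth(right))
    return below + 1 if op == "mul" else below

def count_mul(e):
    """Total number of multiplications in the expression."""
    if isinstance(e, str):
        return 0
    op, left, right = e
    return (op == "mul") + count_mul(left) + count_mul(right)

def rebalance(e):
    """((x*y)*z)*w -> (x*y)*(z*w), by associativity. None if the root does not match."""
    if isinstance(e, tuple) and e[0] == "mul":
        _, left, w = e
        if isinstance(left, tuple) and left[0] == "mul":
            _, xy, z = left
            if isinstance(xy, tuple) and xy[0] == "mul":
                return ("mul", xy, ("mul", z, w))
    return None

def factor(e):
    """a*b + a*c -> a*(b+c), by distributivity. None if the root does not match."""
    if isinstance(e, tuple) and e[0] == "add":
        _, left, right = e
        if (isinstance(left, tuple) and isinstance(right, tuple)
                and left[0] == right[0] == "mul" and left[1] == right[1]):
            return ("mul", left[1], ("add", left[2], right[2]))
    return None

RULES = {"rebalance": rebalance, "factor": factor}

def cost(e):
    """Lower is better: multiplicative depth first, then multiplication count."""
    return (depth(e), count_mul(e))

def optimize(expr, max_steps=10):
    """Greedy stand-in for the learned policy: apply the best improving rule each step."""
    applied = []
    for _ in range(max_steps):
        best = None
        for name, rule in RULES.items():
            new = rule(expr)
            if new is not None and cost(new) < cost(best[1] if best else expr):
                best = (name, new)
        if best is None:
            break
        applied.append(best[0])
        expr = best[1]
    return expr, applied

chain = ("mul", ("mul", ("mul", "a", "b"), "c"), "d")
shared = ("add", ("mul", "a", "b"), ("mul", "a", "c"))
print(optimize(chain))
print(optimize(shared))
```

A learned policy replaces the inner `for` loop: instead of trying every rule and comparing costs, it maps the circuit state directly to a rule choice, which matters when the rule set and the circuits are too large to search exhaustively.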

The PolicyNetwork is a critical component of CHEHAB, functioning as the decision-making element for applying circuit rewrite rules. This network is trained via a data generation process, where numerous circuit examples are created and evaluated. The network learns to predict optimal rewrite rule sequences based on the current circuit state, effectively mapping circuit characteristics to appropriate transformations. Training data is generated by exploring different rewrite rule applications and assessing their impact on circuit depth and noise accumulation. This allows the PolicyNetwork to develop a learned policy that guides the selection of rewrite rules, maximizing performance and minimizing noise within the fully homomorphic encryption (FHE) circuit.

The CHEHAB compiler utilizes reinforcement learning to achieve significant performance gains through optimizations including vectorization. Benchmarking demonstrates a 5.3x speedup in execution time and a 2.54x reduction in noise accumulation when compared to the Coyote compiler. These improvements are directly attributable to the RL agent’s ability to strategically apply rewrite rules, resulting in a decreased circuit depth and a corresponding reduction in computational overhead during homomorphic encryption operations.
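Why vectorization helps can be seen by counting operations. In FHE schemes that support batching, one ciphertext holds a vector of slots and a single homomorphic multiplication acts on all slots at once. The sketch below models ciphertexts as plain Python lists and only counts operations; there is no encryption involved, and the class and function names are hypothetical.

```python
class OpCounter:
    """Counts homomorphic multiplications issued by a program."""
    def __init__(self):
        self.mul = 0

def scalar_products(xs, ys, counter):
    """Scalar code: one ciphertext per value, one multiplication per pair."""
    out = []
    for x, y in zip(xs, ys):
        counter.mul += 1
        out.append(x * y)
    return out

def packed_products(xs, ys, counter):
    """Vectorized code: all values packed into one ciphertext, one SIMD multiplication."""
    counter.mul += 1
    return [x * y for x, y in zip(xs, ys)]

xs, ys = [1, 2, 3, 4], [5, 6, 7, 8]
scalar_count, packed_count = OpCounter(), OpCounter()

scalar_result = scalar_products(xs, ys, scalar_count)
packed_result = packed_products(xs, ys, packed_count)

assert scalar_result == packed_result
print(scalar_count.mul, packed_count.mul)
```

Both versions produce the same four products, but the scalar version issues four multiplications and the packed version issues one. Since multiplications dominate both latency and noise growth, fewer of them improves both metrics at once.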

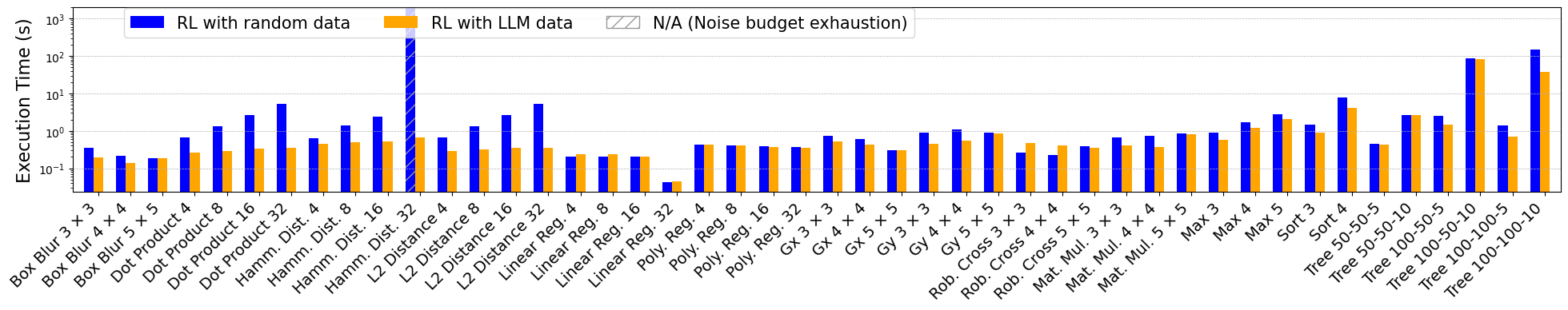

LLMs to the Rescue: Data Generation for a Smarter Compiler

An effective Reinforcement Learning (RL) agent designed for Fully Homomorphic Encryption (FHE) optimization necessitates a robust and varied training dataset comprised of FHE code snippets. The complexity of FHE, coupled with the nuanced interplay between performance and security, demands that the agent be exposed to a wide spectrum of code structures, optimization challenges, and potential noise accumulation scenarios. Without sufficient data diversity, the RL agent risks overfitting to specific code patterns or failing to generalize effectively to unseen FHE implementations. Consequently, a substantial dataset – encompassing diverse FHE schemes, varying data types, and a range of computational complexities – is crucial for enabling the agent to learn a comprehensive optimization strategy and achieve consistently improved performance across a broad set of FHE applications.

Researchers leveraged a Large Language Model (LLM) to generate this training data automatically, crafting a broad spectrum of FHE code scenarios. This approach moves beyond manually created datasets, allowing for the exploration of a significantly larger solution space. The LLM’s ability to produce diverse code examples, encompassing different optimization challenges and potential noise levels, provides the RL agent with robust training material. Consequently, the agent learns to navigate complex FHE optimization problems more effectively. The reported gains include a 27.9x speedup in compilation time compared to the Coyote compiler.

The convergence of LLM-generated training data and reinforcement learning optimization yields substantial gains in fully homomorphic encryption (FHE) application performance. By exposing the RL agent to a diverse dataset of code snippets created by the LLM, the system learns to effectively navigate the complexities of FHE compilation. This synergistic approach delivers a demonstrable improvement in speed; specifically, the resulting compiler achieves a 27.9x faster compilation time when benchmarked against the established Coyote compiler. This leap in efficiency not only accelerates the development of privacy-preserving applications but also expands the practical viability of FHE for computationally intensive tasks, suggesting a pathway towards widespread adoption of secure computation technologies.

The system’s core innovation lies in its ability to navigate the inherent trade-off between performance optimization and noise accumulation within fully homomorphic encryption (FHE). FHE, by its nature, introduces noise during computations; excessive optimization without careful noise management can quickly render the encrypted data unusable. This design intelligently balances aggressive code transformations – aimed at accelerating computations – with strategies to control and minimize noise growth. The result is a practical system capable of delivering substantial performance gains – including a demonstrated 27.9x speedup in compilation – while maintaining the integrity of the encrypted data and ensuring reliable privacy-preserving computation. This equilibrium is crucial for deploying FHE in real-world applications where both speed and security are paramount, moving beyond theoretical possibilities toward tangible, efficient solutions.

The pursuit of optimized fully homomorphic encryption, as detailed in this work with CHEHAB, feels predictably Sisyphean. The compiler, employing reinforcement learning to navigate the treacherous landscape of noise accumulation and circuit optimization, is a fascinating, if temporary, victory. It’s merely automating the process of finding slightly-less-bad solutions. As Donald Knuth observed, “Premature optimization is the root of all evil.” This research, while innovative, simply refines the cycle. It’s a complex wrapper around fundamental limitations, and one can anticipate a future where even CHEHAB becomes the tech debt of a yet-undiscovered, equally flawed successor. The core idea, reducing noise, will remain, but the implementation will inevitably succumb to entropy.

The Road Ahead

The automation of fully homomorphic encryption compilation, as demonstrated by CHEHAB, feels less like a breakthrough and more like the postponement of inevitable complexity. The current approach optimizes for noise accumulation and vectorization, but this is merely shifting the performance bottleneck. Future iterations will undoubtedly reveal new, equally frustrating constraints – memory access patterns, circuit depth, or perhaps the inherent limitations of the reinforcement learning agent itself. The bug tracker, already swollen with the ghosts of failed optimizations, will only grow heavier.

The temptation to view reinforcement learning as a panacea for compiler design should be resisted. Each reward function crafted, each state space defined, represents a prior: an assumption about what constitutes ‘good’ FHE code. These priors will inevitably clash with the messy reality of production data, leading to unexpected regressions and the realization that ‘optimization’ is often just trading one problem for another. The system doesn’t solve compilation; it simply re-encodes the same struggles in a more opaque form.

Ultimately, the pursuit of automated FHE compilation isn’t about achieving perfect performance; it’s about building more elaborate scaffolding around an inherently difficult problem. The next step won’t be a smarter algorithm, but a more honest accounting of the costs: the computational resources required to learn these optimizations, the energy consumed, and the inevitable tech debt accrued. The system doesn’t deploy; it lets go.

Original article: https://arxiv.org/pdf/2601.19367.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 03:31