Author: Denis Avetisyan

Researchers have developed a new method for embedding imperceptible watermarks directly into the stylistic elements of images created by AI, safeguarding intellectual property in the age of diffusion models.

AuthenLoRA entwines copyright protection with image stylization, offering a robust watermarking framework for LoRA-adapted diffusion models against adversarial attacks.

While Low-Rank Adaptation (LoRA) offers an efficient method for customizing diffusion models, its ease of distribution presents challenges for copyright protection and content traceability. This paper introduces ‘AuthenLoRA: Entangling Stylization with Imperceptible Watermarks for Copyright-Secure LoRA Adapters’, a novel framework that embeds imperceptible, traceable watermarks directly into the LoRA training process, preserving stylistic quality during image generation. By jointly optimizing style and watermark distributions with an expanded LoRA architecture and zero-message regularization, AuthenLoRA significantly reduces false positives and ensures robust watermark propagation. Could this approach offer a viable path towards establishing verifiable ownership and mitigating misuse in the rapidly evolving landscape of AI-generated content?

The Illusion of Control: Generative Models and the Ghosts in the Machine

Diffusion models represent a paradigm shift in image generation, achieving levels of realism and diversity previously unattainable. During training, these models progressively add noise to an image until it becomes pure static, then learn to reverse this process, effectively “denoising” randomness into coherent visuals. Unlike earlier generative adversarial networks (GANs), diffusion models excel at capturing intricate details and subtle variations, producing images that can be hard to distinguish from photographs. Because sampling is stochastic, a single prompt can yield an expansive range of outputs, enabling the generation of entirely new and imaginative content. The ability to synthesize high-fidelity images with such flexibility has rapidly propelled diffusion models to the forefront of artificial intelligence, impacting fields from art and design to scientific visualization and beyond.
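To make the "add noise until it becomes static" step concrete, here is a minimal sketch of the standard forward (noising) process used by DDPM-style diffusion models. The linear schedule, the 1000-step horizon, and the four-value toy "image" are illustrative assumptions, not details taken from the AuthenLoRA paper.

```python
import math
import random

def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    alpha_bar, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar

def add_noise(x0, t, alpha_bar, rng):
    """Sample x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps, eps ~ N(0, 1)."""
    signal = math.sqrt(alpha_bar[t])
    noise = math.sqrt(1.0 - alpha_bar[t])
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]

rng = random.Random(0)
alpha_bar = linear_alpha_bar(1000)
pixels = [0.5, -0.25, 1.0, 0.0]                 # toy "image" of four values (assumption)
slightly_noisy = add_noise(pixels, 10, alpha_bar, rng)
nearly_static = add_noise(pixels, 999, alpha_bar, rng)
```

At early steps the signal coefficient is close to 1 and the sample still resembles the input; by the last step it is close to 0 and the sample is almost pure Gaussian noise. The denoising network is trained to undo this corruption one step at a time.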

Current methods for personalizing generative models, such as DreamBooth and Textual Inversion, present significant vulnerabilities despite their effectiveness in adapting these systems to individual preferences or specific subjects. These techniques typically involve fine-tuning the model with a small dataset of target images, inadvertently embedding the characteristics of that dataset, and potentially sensitive information, directly within the model’s parameters. This opens an attack surface: malicious actors may be able to extract the training data or manipulate the model’s output in unintended ways. Because these methods prioritize customization speed and accessibility over robust security measures, verifying the provenance of generated content and protecting intellectual property rights becomes increasingly challenging, highlighting a critical need for improved safeguards in the realm of personalized generative AI.

The remarkable accessibility of generative models, while fostering creative expression, simultaneously presents a significant challenge regarding digital authenticity. The ease with which highly realistic images can be created and manipulated introduces the potential for malicious use, ranging from the spread of misinformation to the fabrication of evidence. Consequently, a pressing need exists for innovative techniques to reliably verify the provenance and ownership of generated content. These methods must go beyond simple watermarking, potentially leveraging cryptographic signatures, robust metadata tracking, or even subtle, imperceptible alterations to the image itself that can confirm its origin and detect tampering. Establishing trust in the digital realm, therefore, depends on the development and widespread adoption of these verification systems, ensuring that the benefits of generative AI are not overshadowed by its potential for abuse.

Beyond Pixels: A Watermark That Lives Within the Model

Traditional digital watermarking techniques, designed for pixel-space images, encounter significant challenges when applied to the compressed latent spaces utilized by diffusion models. The dimensionality reduction inherent in latent space compression exacerbates watermark fragility, resulting in decreased robustness against common image manipulations like JPEG compression, resizing, or cropping. Furthermore, the non-linear transformations within diffusion models often introduce distortions that degrade the watermark signal, leading to increased false positive rates, in which unwatermarked content is incorrectly flagged as watermarked. These limitations stem from the watermark being applied after the compression and encoding processes, making it susceptible to the inherent information loss and distortions present in the latent representation.

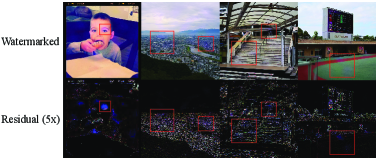

AuthenLoRA implements a watermarking scheme integrated directly into the Low-Rank Adaptation (LoRA) training process. This is achieved by modulating the LoRA update matrices with a pseudo-random noise pattern determined by a secret key. The key governs the generation of this noise, embedding the watermark as a subtle perturbation within the model’s trainable parameters. Unlike post-processing watermarking techniques, this method creates a signature intrinsically linked to the model’s weights, enhancing robustness against standard image manipulations and compression artifacts. The resulting watermark is designed to be statistically undetectable, minimizing the risk of discovery through conventional steganalysis methods while maintaining high accuracy in ownership verification using the corresponding secret key.
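The idea of a key-driven perturbation in trainable parameters can be illustrated with a generic spread-spectrum-style toy. This is not AuthenLoRA's training procedure: the function names, the SHA-256 seeding, the strength value, and the correlation detector are all assumptions made for illustration, and the random list stands in for a real LoRA factor.

```python
import hashlib
import random

def keyed_pattern(secret_key, size):
    """Derive a reproducible +1/-1 pattern from a secret key (hypothetical scheme)."""
    seed = int.from_bytes(hashlib.sha256(secret_key.encode("utf-8")).digest(), "big")
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(size)]

def embed(weights, pattern, strength):
    """Add a small keyed perturbation to a flattened LoRA factor."""
    return [w + strength * p for w, p in zip(weights, pattern)]

def correlation(weights, pattern):
    """Mean product of weights and pattern; rises by `strength` after embedding."""
    return sum(w * p for w, p in zip(weights, pattern)) / len(weights)

# Toy stand-in for a flattened rank-4 LoRA factor A of shape (4, 64).
init_rng = random.Random(0)
lora_a = [init_rng.gauss(0.0, 0.02) for _ in range(4 * 64)]

strength = 0.01                                   # hypothetical watermark strength
owner_pattern = keyed_pattern("owner-secret", len(lora_a))
marked = embed(lora_a, owner_pattern, strength)

owner_score = correlation(marked, owner_pattern)
other_score = correlation(marked, keyed_pattern("someone-else", len(lora_a)))
```

Because every pattern entry is +1 or -1, embedding raises the correlation with the owner's pattern by exactly `strength`, while a pattern derived from a different key is statistically unrelated to the perturbation. A real system would learn the watermark jointly with the style objective rather than adding it after the fact.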

AuthenLoRA utilizes ResNet blocks to improve watermark durability by embedding the signature directly into the Low-Rank Adaptation (LoRA) parameters during training. This approach differs from methods that modify pixel data or latent representations post-generation. Specifically, the watermark is integrated into the weights of the ResNet blocks within the LoRA modules. This integration ensures the watermark persists through various downstream operations, including image compression, noise addition, and even fine-tuning, as the signature becomes an inherent component of the model’s learned parameters. The use of ResNet blocks facilitates this embedding process, allowing for a higher capacity watermark without significantly impacting model performance or perceptual quality.

Testing the Limits: Robustness and Performance of AuthenLoRA

AuthenLoRA achieves a watermark detection bit accuracy exceeding 97% under various conditions that commonly degrade signal fidelity. Testing demonstrates sustained performance even after application of JPEG compression, Gaussian blur, and model-based degradation utilizing both Stable Diffusion 1.5 (SD1.5) and Stable Diffusion 2.1 (SD2.1) models. This robustness indicates the watermark remains reliably detectable despite common image manipulations and alterations introduced by different generative model architectures, representing a significant advancement in watermark resilience.
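Bit accuracy is simply the fraction of message bits that the extractor recovers correctly. A minimal sketch, using a made-up 8-bit message rather than anything from the paper's experiments:

```python
def bit_accuracy(embedded, extracted):
    """Fraction of positions where the extracted bit equals the embedded bit."""
    if len(embedded) != len(extracted):
        raise ValueError("messages must have the same length")
    matches = sum(e == x for e, x in zip(embedded, extracted))
    return matches / len(embedded)

message = [1, 0, 1, 1, 0, 0, 1, 0]      # hypothetical embedded message
recovered = [1, 0, 1, 1, 0, 1, 1, 0]    # hypothetical extraction with one flipped bit
accuracy = bit_accuracy(message, recovered)
print(accuracy)  # 0.875
```

A bit accuracy near 0.5 is what random guessing would produce, so values above 97% after JPEG compression or blur indicate that most of the message survives the distortion.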

The implementation of Zero-Message Regularization within AuthenLoRA demonstrably reduces false positive rates during watermark detection. Evaluations show a precision rate of 0.9887, indicating a high degree of accuracy in identifying watermarked images. This represents a substantial improvement over baseline techniques such as AquaLoRA, which exhibited a precision rate below 0.1 under the same testing conditions. The regularization effectively minimizes incorrect positive identifications, enhancing the reliability of the watermark detection process without requiring additional computational resources.

AuthenLoRA distinguishes itself from model optimization techniques such as pruning and quantization by preserving original model performance characteristics and avoiding the introduction of artifacts. Testing demonstrates AuthenLoRA maintains a false positive rate of 0.0115, a substantial improvement over baseline methods, which exhibit a false positive rate of 1.0. This indicates that AuthenLoRA’s watermark detection process generates significantly fewer incorrect positive identifications, offering a more reliable method for verifying content authenticity without impacting the utility or quality of the underlying generative model.
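Precision and false positive rate are both derived from the same detection counts, which is why a detector that flags every image as watermarked ends up with a false positive rate of 1.0. The sketch below uses invented counts purely to show the arithmetic; they are not the paper's data.

```python
def precision(true_pos, false_pos):
    """Share of images flagged as watermarked that really are watermarked."""
    flagged = true_pos + false_pos
    return true_pos / flagged if flagged else 0.0

def false_positive_rate(false_pos, true_neg):
    """Share of unwatermarked images that are wrongly flagged."""
    clean = false_pos + true_neg
    return false_pos / clean if clean else 0.0

# Hypothetical detector results on 100 watermarked and 200 clean images.
tp, fn = 90, 10
fp, tn = 10, 190

print(precision(tp, fp))              # 0.9
print(false_positive_rate(fp, tn))    # 0.05

# A detector that flags everything: every clean image is a false positive.
print(false_positive_rate(200, 0))    # 1.0
```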

Beyond Detection: Securing Creativity and Building Trust

AuthenLoRA distinguishes itself by integrating stylistic control directly into the process of secure image generation. This means creators aren’t forced to choose between artistic expression and intellectual property protection; they can imbue generated content with unique branding or artistic signatures while simultaneously establishing verifiable ownership. The technology achieves this by encoding stylistic parameters within the authentication process itself, ensuring that any generated image, however customized, retains a traceable link to its originator. This capability is poised to revolutionize digital content creation, enabling a future where personalized and branded visuals can be deployed with confidence, knowing that authenticity and ownership are intrinsically secured, fostering trust and innovation across diverse applications.

Digital content creators now have a powerful new tool to safeguard their work and build confidence in the authenticity of generated media. This technology addresses a critical gap in the rapidly evolving landscape of generative AI, where intellectual property is easily replicated and attribution is often lost. By establishing verifiable ownership of generated content, creators can more effectively monetize their skills and maintain control over their artistic vision. This fosters a more trustworthy digital environment for consumers, allowing them to confidently engage with and support the creators behind the content they enjoy, ultimately incentivizing innovation and artistic expression within the generative AI space.

AuthenLoRA establishes a secure basis for generative models, demonstrated by a True Positive Rate (TPR) exceeding 95% at a False Positive Rate (FPR) of just 10⁻⁶. This level of accuracy is critical for applications demanding verifiable authenticity, opening doors to innovative uses across diverse fields. In advertising, brands can now confidently deploy AI-generated content with assurance against imitation or misuse. The art world benefits from a system that safeguards artistic integrity and provenance. Furthermore, personalized media experiences become more trustworthy, as content origin and modification can be reliably verified, fostering greater user confidence and enabling new forms of digital interaction.
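The article does not say how the 10⁻⁶ operating point is set. A common approach in multi-bit watermarking is to assume that bits extracted from an unwatermarked image match the key at random, so the number of matches follows a Binomial(n, 1/2) distribution, and then to pick the smallest match count whose chance probability stays below the target. The sketch below follows that approach; the 48-bit message length is an assumption chosen for illustration.

```python
from math import comb

def chance_fpr(num_bits, threshold):
    """P(at least `threshold` of `num_bits` bits match by chance), Binomial(n, 1/2)."""
    tail = sum(comb(num_bits, k) for k in range(threshold, num_bits + 1))
    return tail / 2 ** num_bits

def min_threshold(num_bits, target_fpr):
    """Smallest match count whose chance probability is at most `target_fpr`."""
    for threshold in range(num_bits + 1):
        if chance_fpr(num_bits, threshold) <= target_fpr:
            return threshold
    return None  # target is stricter than even a perfect match allows

num_bits = 48                      # hypothetical message length
threshold = min_threshold(num_bits, 1e-6)
print(threshold, chance_fpr(num_bits, threshold))
```

Under this model, an image is declared watermarked only when at least `threshold` of the extracted bits agree with the owner's message, and the TPR is then measured as the share of genuinely watermarked images that clear that bar.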

The pursuit of copyright protection in generative models feels like building sandcastles against the tide. This paper, with its AuthenLoRA framework, attempts to embed imperceptible watermarks within LoRA adapters – a clever, if ultimately temporary, solution. It reminds one of John McCarthy’s observation: “It is often easier to explain why we think something will work than to demonstrate that it actually does.” While AuthenLoRA bolsters defenses against attacks, production environments will inevitably uncover new avenues for circumvention. The elegance of imperceptible watermarks will eventually succumb to the relentless pressure of those seeking to bypass them. One can almost predict the digital archaeologists meticulously extracting these watermarks from increasingly sophisticated forgeries, documenting yet another layer of defeated security. It’s not a failure of ingenuity, merely a predictable consequence of complex systems.

What’s Next?

The promise of AuthenLoRA, like all elegantly constrained systems, resides in a temporary reprieve from entropy. It successfully addresses a pressing concern – copyright in the age of rapidly propagating generative models – but introduces a new set of vulnerabilities. Any watermarking scheme is, at its core, an obfuscation layer. The inevitable arms race will focus on removing that layer, not by breaking the LoRA adaptation itself, but by statistically dissolving the watermark during subsequent generations. It’s a game of diminishing returns, where each refinement of the embedding is met by an equally sophisticated removal technique.

The more interesting, and predictably frustrating, path lies in the inherent limitations of ‘stylization’ as a security feature. The assumption that stylistic drift sufficiently obscures watermark detection is optimistic. Production systems rarely adhere to theoretical noise distributions. Real-world image pipelines – compression, upscaling, color correction – will systematically erode the watermark’s integrity. The real challenge isn’t adversarial attacks, but the mundane brutality of everyday image processing.

Ultimately, AuthenLoRA contributes to a growing body of work demonstrating that perfect copyright protection is a mathematical impossibility. It’s a local maximum in a vast, chaotic search space. The field will likely shift towards probabilistic provenance tracking – accepting that attribution is a matter of degree, not certainty. And, naturally, that tracking will require yet another layer of abstraction, further distancing the original creation from its digital echo. Documentation for that system will, predictably, be nonexistent.

Original article: https://arxiv.org/pdf/2511.21216.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-28 20:12