Author: Denis Avetisyan

A new theoretical analysis reveals fundamental constraints on compressing Chain-of-Thought reasoning within large language models, and proposes a method to overcome signal decay.

This paper investigates the theoretical limits of compressing Chain-of-Thought reasoning, identifying an exponential complexity barrier and introducing the ALiCoT alignment technique to enable efficient implicit reasoning.

Despite the reasoning abilities that Chain-of-Thought (CoT) prompting unlocks in Large Language Models, its computational cost remains a significant barrier. This paper, ‘Chain Of Thought Compression: A Theoritical Analysis’, provides a theoretical analysis of compressing these reasoning steps into latent states, revealing an exponential decay in the learning signal for problems with irreducible logical dependencies. The authors show that skipping intermediate steps introduces high-order interaction barriers, and they propose a novel alignment technique, ALiCoT, that overcomes this signal decay and enables efficient implicit reasoning, achieving a 54.4x speedup without performance loss. Can this understanding of latent reasoning unlock even more efficient and scalable AI systems beyond current limitations?

The Limits of Scale: Unveiling the Reasoning Gap

Large language models represent a substantial leap forward in the field of artificial intelligence, showcasing an unprecedented ability to generate human-quality text, translate languages, and respond to a diverse range of prompts. However, this impressive facade often obscures a critical limitation: the struggle with complex reasoning. While adept at recognizing patterns and recalling information from vast datasets, these models falter when faced with problems requiring multiple sequential steps, logical deductions, or nuanced understanding of cause and effect. This isn’t a matter of simply lacking knowledge, but a fundamental difficulty in processing information in a way that mimics human thought – a disconnect that becomes increasingly apparent as problem complexity increases, revealing the gap between statistical language proficiency and genuine cognitive ability.

The remarkable progress of Large Language Models (LLMs) has largely depended on scaling: increasing model size and training data. However, this approach is encountering diminishing returns and practical constraints, as the computational demands and financial costs of ever-larger models become unsustainable. While scaling initially unlocked emergent abilities in language understanding and generation, complex reasoning tasks, those requiring multiple interconnected inferences, remain challenging. This limitation is not simply a matter of more data or parameters; it signals a fundamental need for architectures and algorithms that prioritize reasoning efficiency over sheer scale. Researchers are actively exploring methods that allow LLMs to reason robustly with fewer computational resources, for example through knowledge distillation, modular networks, or algorithms that make better use of existing knowledge.

Chain-of-Thought (CoT) reasoning represents a substantial advancement in large language model capabilities, enabling models to tackle problems that require intermediate steps; however, this improved performance comes at a cost. While standard LLMs often fail at multi-step tasks, CoT prompts models to explicitly articulate their reasoning process, significantly boosting accuracy. Unfortunately, generating these detailed reasoning chains demands considerably more computation and time than conventional prompting. This cost is a major obstacle to deploying CoT reasoning in practical applications, especially those that require real-time responses or operate under resource constraints. Researchers are therefore exploring ways to distill the benefits of CoT into more efficient models, preserving reasoning ability without the prohibitive computational burden.

Compressing the Thought Process: A Pathway to Efficiency

Chain-of-Thought (CoT) reasoning, while effective, is computationally expensive because every intermediate step must be generated token by token before the answer can be produced. CoT compression techniques address this limitation by internalizing these reasoning steps in the model's latent states rather than spelling them out explicitly during inference. In effect, the logical progression of thought, the chain itself, is represented in a more compact form. By reducing the number of explicit steps needed to traverse the reasoning process, these methods aim to lower both the latency and the resource demands of CoT-based language models without sacrificing performance on complex reasoning tasks. The goal is equivalent reasoning capability with a smaller computational footprint.

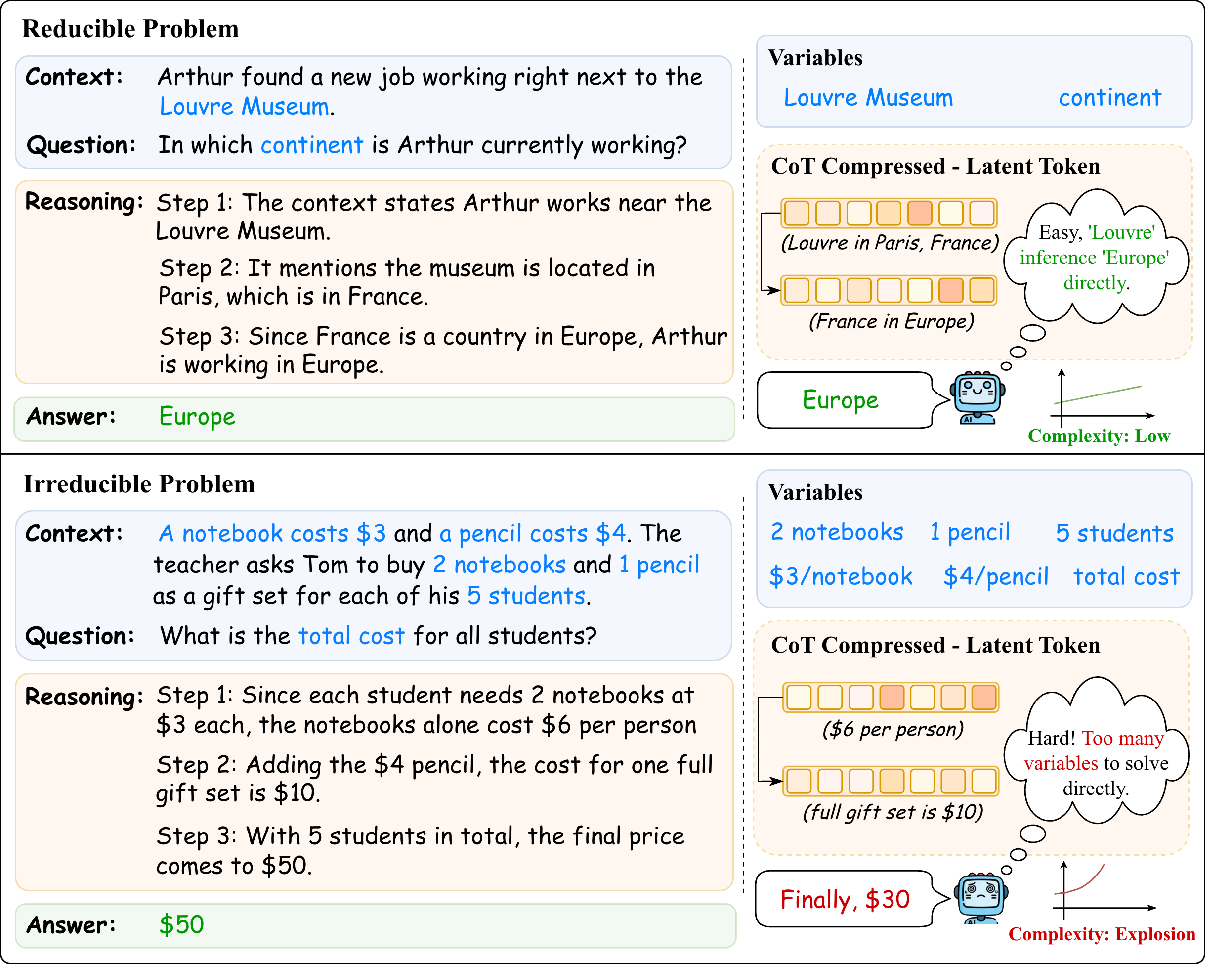

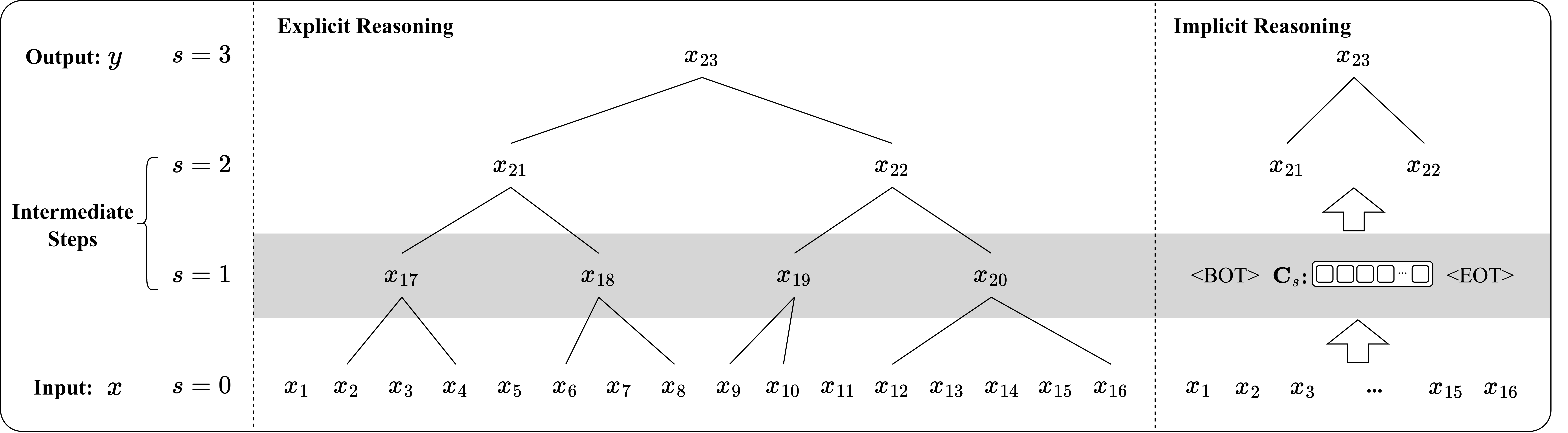

Implicit Chain-of-Thought (CoT) compression utilizes a latent space to represent the intermediate reasoning steps typically articulated in explicit CoT. Rather than directly outputting each step, the model encodes these steps into a sequence of discrete Latent Tokens. These tokens function as a compressed representation of the reasoning process, allowing the model to maintain reasoning ability with a reduced computational footprint. The model is trained to generate and utilize these latent tokens internally, effectively internalizing the intermediate steps and propagating information through the compressed latent space during inference. This approach differs from explicit CoT by minimizing the length of the generated sequence, thereby decreasing the computational cost associated with decoding and processing the reasoning trace.
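To make the sequence-length saving concrete, here is a toy sketch that uses running parity as the reasoning task. The function names, the chunking scheme, and the idea that one latent token absorbs a fixed block of steps are illustrative assumptions, not the paper's method. Explicit CoT emits one token per step. The hypothetical latent version emits one token per block, so its trace is much shorter, but each latent step has to combine many inputs at once.

```python
from functools import reduce
from operator import xor


def explicit_trace(bits):
    """Explicit CoT: emit one reasoning token (the running parity) per input bit."""
    trace, state = [], 0
    for b in bits:
        state ^= b  # each step combines just two values: previous state and next bit
        trace.append(state)
    return trace


def latent_trace(bits, chunk):
    """Hypothetical compressed trace: one latent token per `chunk` input bits."""
    trace, state = [], 0
    for start in range(0, len(bits), chunk):
        block = bits[start:start + chunk]
        # One latent step must fold `chunk` bits plus the previous state at once.
        state = reduce(xor, block, state)
        trace.append(state)
    return trace


bits = [1, 0, 1, 1, 0, 0, 1, 0] * 8  # 64 input bits
full = explicit_trace(bits)
short = latent_trace(bits, chunk=8)
print(len(full), len(short))  # 64 8
print(full[-1] == short[-1])  # True: same final answer
```

The compressed trace is eight times shorter, which is the saving the text describes. The price is that each latent step now computes a higher-order interaction than any single explicit step.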

Effective training of compressed Chain-of-Thought (CoT) models relies on the gradient signal propagating successfully through the reduced, latent representations of the reasoning steps. During backpropagation, the gradient, which indicates the direction and magnitude of the weight adjustments needed for learning, must accurately reflect how the compressed reasoning affects the final output. If this signal is attenuated or distorted, whether by information loss during compression or by architectural constraints, learning stalls and the model cannot refine its compressed reasoning. Careful initialization, architectural choices that preserve information flow, and gradient-control strategies such as clipping are therefore important for training such models. The strength of the gradient signal is closely tied to the model's ability to recover the full reasoning process from the latent space and to improve on complex tasks.
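A toy illustration, not the paper's derivation, shows how the learning signal can decay exponentially with the number of interacting variables. Assume a linear (first-order) learner is fit to an r-bit parity target with squared loss, and that the ±1 inputs are slightly biased, with mean `mu`. At initialization, the gradient for a relevant weight is -2 * E[y * x_j], which equals -2 * mu**(r - 1). With unbiased inputs (mu = 0) it is exactly zero for every r ≥ 2. The setup and function name are assumptions made for illustration.

```python
from itertools import product


def linear_gradient(n, r, mu, j=0):
    """Exact gradient of E[(w.x - y)^2] w.r.t. w_j at w = 0 for an r-bit parity target.

    Inputs x_i are independent +/-1 variables with mean mu (P(x_i = +1) = (1 + mu) / 2).
    The target is y = x_0 * x_1 * ... * x_{r-1}, i.e. parity in +/-1 encoding.
    """
    p_plus = (1 + mu) / 2
    grad = 0.0
    for x in product((1, -1), repeat=n):
        prob = 1.0
        for xi in x:
            prob *= p_plus if xi == 1 else 1 - p_plus
        y = 1
        for xi in x[:r]:
            y *= xi
        # d/dw_j (w.x - y)^2 at w = 0 equals -2 * y * x_j
        grad += prob * (-2 * y * x[j])
    return grad


for r in range(1, 7):
    # Analytically -2 * mu**(r - 1): the signal halves with each extra bit when mu = 0.5.
    print(r, round(linear_gradient(n=6, r=r, mu=0.5), 6))
```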

Deconstructing Complexity: Measuring Reasoning with Interaction and Density

Interaction Order, as a metric for reasoning complexity, assesses the extent to which determining the value of one variable requires knowledge of other variables within a problem. A low Interaction Order indicates variables are largely independent, allowing for parallel processing of information; conversely, a high Interaction Order signifies strong dependencies, necessitating sequential evaluation and increasing computational demands. This dependency is not simply the number of interacting variables, but the degree to which each variable’s value is contingent upon others; a problem with n variables, each dependent on all others, exhibits a significantly higher Interaction Order than one where variables interact only within localized groups. Quantifying Interaction Order provides a means of characterizing the structural complexity of a reasoning task independent of the specific domain or content.
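One concrete way to quantify Interaction Order for a Boolean problem is the Fourier (Walsh) degree of the function: the size of the largest variable subset whose joint product still correlates with the output. Using this as a stand-in for the paper's metric is an assumption; the authors' formal definition may differ. The sketch below computes the degree by brute-force enumeration with ±1 encoding.

```python
from itertools import combinations, product


def walsh_coefficient(f, n, subset):
    """Correlation of f with the product of the variables in `subset` (uniform +/-1 inputs)."""
    total = 0.0
    for x in product((1, -1), repeat=n):
        chi = 1
        for i in subset:
            chi *= x[i]
        total += f(x) * chi
    return total / 2 ** n


def interaction_order(f, n, tol=1e-12):
    """Largest subset size with a nonzero Walsh coefficient (the Fourier degree of f)."""
    for k in range(n, -1, -1):
        if any(abs(walsh_coefficient(f, n, s)) > tol for s in combinations(range(n), k)):
            return k
    return 0


parity3 = lambda x: x[0] * x[1] * x[2]                          # all three bits interact
and2 = lambda x: -1 if (x[0] == -1 and x[1] == -1) else 1       # -1 encodes "true"
dictator = lambda x: x[1]                                       # no interaction at all
print([interaction_order(f, 4) for f in (parity3, and2, dictator)])  # [3, 2, 1]
```

Variables the function ignores (here x[3]) never raise the order, which matches the text's point that the order reflects dependency structure, not merely the number of variables.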

Logical Density, a key factor in determining Chain-of-Thought (CoT) Compression Complexity, refers to the number of logically independent steps required to arrive at a solution; problems with higher Logical Density necessitate more steps and, consequently, exhibit greater resistance to compression. This metric quantifies the inherent difficulty of a reasoning task independent of the number of variables involved. A high Logical Density indicates that each step in the reasoning process is essential and cannot be eliminated without impacting the final result, making it challenging to reduce the CoT length through methods like pruning or simplification. Consequently, evaluating CoT compression techniques requires careful consideration of Logical Density to ensure that observed compression rates are not merely a result of removing redundant or unnecessary reasoning steps from problems that were already logically sparse.
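The text defines Logical Density only informally. One hypothetical proxy is to write a reasoning trace as a dependency graph and measure the fraction of written steps that the answer actually depends on. A low value means the trace contains many removable steps, so high compression rates on it say little about true compressibility. The graph format and function names below are illustrative assumptions.

```python
def required_steps(deps, answer):
    """Steps (and inputs) the answer transitively depends on; anything else could be pruned."""
    needed, stack = set(), [answer]
    while stack:
        step = stack.pop()
        for parent in deps.get(step, ()):
            if parent not in needed:
                needed.add(parent)
                stack.append(parent)
    return needed


def logical_density(deps, answer):
    """Hypothetical proxy: fraction of intermediate steps that cannot be removed.

    `deps` maps each derived step to the steps or inputs it reads; inputs are not keys.
    """
    steps = set(deps) - {answer}
    if not steps:
        return 0.0
    return len(required_steps(deps, answer) & steps) / len(steps)


# A tight chain: every step feeds the next one.
chain = {"s1": ["x"], "s2": ["s1"], "s3": ["s2"], "answer": ["s3"]}
# A padded trace: two steps are written down but never used by the answer.
padded = {"s1": ["x"], "aside": ["x"], "restate": ["s1"], "answer": ["s1"]}
print(logical_density(chain, "answer"), logical_density(padded, "answer"))
```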

The Parity Problem, analyzed through Order-r Interaction, serves as a benchmark for assessing the effectiveness of Chain-of-Thought (CoT) compression techniques. Order-r Interaction describes a target that depends jointly on r variables. The parity of r bits cannot be predicted from any proper subset of them, so the complexity of the problem can be raised in a controlled way simply by increasing r. By comparing how models learn such targets with and without explicit intermediate steps, researchers can measure how far a compression scheme shortens the reasoning chain, and thus its computational cost, and what that shortening costs in learnability. Problems with high Order-r Interaction require longer reasoning chains, which makes them ideal stress tests for the performance gains claimed by CoT compression methods.
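The standard Fourier identity behind this benchmark can be stated compactly. It is included here as a well-known supporting fact, not as the paper's own derivation. With ±1 encoding, XOR becomes multiplication. Distinct parity functions are uncorrelated under uniform inputs, so an order-r parity target has zero correlation with every lower-order feature. Explicit CoT, by contrast, splits the same target into a chain of steps that each multiply only two quantities.

```latex
\chi_S(x) = \prod_{i \in S} x_i, \qquad
\mathbb{E}_{x \sim \{-1,+1\}^n}\left[\chi_S(x)\,\chi_T(x)\right]
  = \begin{cases} 1, & S = T,\\ 0, & S \neq T. \end{cases}

y = \chi_S(x),\ |S| = r
  \quad\Longrightarrow\quad
  \mathbb{E}\left[y\,\chi_T(x)\right] = 0 \ \text{ for every } T \text{ with } |T| < r.

s_1 = x_{i_1}, \qquad s_k = s_{k-1}\,x_{i_k} \quad (k = 2, \dots, r), \qquad y = s_r.
```

Each explicit step s_k is an order-2 interaction, while skipping the chain forces the model to learn the order-r product directly.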

Refining Compression: ALiCoT and the Path Forward

Aligned Implicit Chain-of-Thought (ALiCoT) improves compression efficiency by aligning latent tokens, the internal representations within a language model, with explicit reasoning semantics. This alignment ties the model's internal reasoning more directly to the logical steps required to reach a conclusion. By enforcing this correspondence, ALiCoT makes distillation of the explicit reasoning more effective and reduces redundancy in the latent space, yielding a more compact reasoning trace without significant performance degradation. The technique guides the model to represent reasoning steps in a form that is both concise and interpretable, which improves compression ratios.
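The text describes the alignment only qualitatively. A common way to tie latent tokens to explicit semantics is an auxiliary loss in which a probe decodes each latent token into the explicit step it replaces. The sketch below assumes that form. The function names, the probe, and the weighting are hypothetical, and this is not presented as ALiCoT's exact objective. Logits are plain Python lists so that the example stays self-contained.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for one prediction, computed stably."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[label]


def aligned_loss(answer_logits, answer_label, latent_step_logits, step_labels, align_weight=1.0):
    """Hypothetical ALiCoT-style objective (an assumption, not the paper's exact loss).

    answer_logits: scores for the final answer.
    latent_step_logits: for each latent token, scores from a (hypothetical) probe that
        reads the latent token and predicts the explicit CoT step it stands in for.
    step_labels: the explicit step each latent token should encode.
    """
    task = cross_entropy(answer_logits, answer_label)
    align = sum(cross_entropy(l, y) for l, y in zip(latent_step_logits, step_labels))
    align /= max(len(step_labels), 1)  # average alignment loss over latent tokens
    return task + align_weight * align


# Uninformative latents: every probe prediction is uniform, so alignment adds log(2).
print(aligned_loss([0.0, 0.0], 0, [[0.0, 0.0], [0.0, 0.0]], [1, 0], align_weight=0.5))
```

Latent tokens whose probe predictions match the explicit steps drive the alignment term toward zero, which is the correspondence between latent tokens and reasoning semantics that the text describes.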

The Stop-Gradient Operation, integrated into the ALiCoT framework, prevents gradients from backpropagating through specific latent tokens during training. The forward computation is unchanged; only the backward pass is cut at these points. This deliberate interruption serves two purposes. First, it regulates the learning signal passed between tokens, so that errors further down the chain do not freely rewrite the steps they depend on, which keeps the reasoning steps more controlled. Second, it improves training stability by reducing the potential for oscillation or divergence during optimization. By selectively blocking gradient updates, the Stop-Gradient Operation focuses learning on the most relevant connections within the implicit chain of thought, improving performance and robustness.
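In autodiff frameworks, stop-gradient is a built-in primitive (for example `torch.Tensor.detach` or `jax.lax.stop_gradient`). To keep the sketch self-contained, it is shown here with a tiny forward-mode dual-number class. The toy function standing in for a "latent token" is an assumption, and which tokens ALiCoT actually blocks is not specified here. The example shows the key property: the forward value is kept, but derivatives stop flowing through the blocked path.

```python
class Dual:
    """Forward-mode autodiff value: `val` plus derivative `der` w.r.t. one input."""

    def __init__(self, val, der=0.0):
        self.val, self.der = val, der

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * other.val, self.der * other.val + self.val * other.der)

    __radd__, __rmul__ = __add__, __mul__


def stop_gradient(x):
    """Keep the forward value but treat it as a constant for differentiation."""
    return Dual(x.val, 0.0)


def forward(x, block_latent):
    latent = x * x                      # a "latent token" computed from the input
    if block_latent:
        latent = stop_gradient(latent)  # gradient no longer flows back through it
    return latent * x                   # downstream output that reads the latent


x = Dual(3.0, 1.0)                          # seed derivative dx/dx = 1
print(forward(x, block_latent=False).der)   # 27.0 = d(x^3)/dx at x = 3
print(forward(x, block_latent=True).der)    # 9.0: only the direct path through x remains
```

Both calls return the same forward value (27.0). Only the derivative changes, which mirrors the text's point that stop-gradient reshapes learning without altering what the model computes.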

To rigorously evaluate Implicit Chain-of-Thought (CoT) methods, the authors use synthetic benchmarks constructed from Natural Language Boolean Directed Acyclic Graphs (NatBool-DAG). These DAGs provide a controlled environment for assessing reasoning capabilities: models must correctly resolve Boolean logic expressed in natural language, which gives a quantifiable measure of performance beyond traditional language modeling tasks. On this benchmark, ALiCoT reaches an accuracy of 83.94%, a 12.54% improvement over baseline implicit CoT methods.
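The exact NatBool-DAG format is not described here, so the following is only a hypothetical generator in the same spirit. It builds a random Boolean DAG from AND, OR, and NOT gates, evaluates it to obtain the ground-truth answer, and renders it as natural-language statements. Each gate may only read earlier nodes, which guarantees the graph is acyclic. All names and phrasings are illustrative assumptions.

```python
import random

OPS = ("and", "or", "not")


def make_dag(num_inputs, num_gates, seed=0):
    """Random Boolean DAG; gate g may only read the num_inputs + g earlier nodes."""
    assert num_inputs >= 2, "binary gates need at least two earlier nodes"
    rng = random.Random(seed)
    gates = []
    for g in range(num_gates):
        avail = num_inputs + g  # earlier nodes only, so the graph stays acyclic
        op = rng.choice(OPS)
        args = (rng.randrange(avail),) if op == "not" else tuple(rng.sample(range(avail), 2))
        gates.append((op, args))
    return gates


def evaluate(inputs, gates):
    """Values of every node: the inputs first, then each gate in order."""
    values = list(inputs)
    for op, args in gates:
        a = [values[i] for i in args]
        if op == "and":
            values.append(a[0] and a[1])
        elif op == "or":
            values.append(a[0] or a[1])
        else:
            values.append(not a[0])
    return values


def render(inputs, gates):
    """Natural-language version of the DAG, ending with a question about the last node."""
    lines = [f"v{i} is {'true' if b else 'false'}." for i, b in enumerate(inputs)]
    n = len(inputs)
    for g, (op, args) in enumerate(gates):
        target = f"v{n + g}"
        if op == "not":
            lines.append(f"{target} is true exactly when v{args[0]} is false.")
        elif op == "and":
            lines.append(f"{target} is true exactly when both v{args[0]} and v{args[1]} are true.")
        else:
            lines.append(f"{target} is true exactly when v{args[0]} or v{args[1]} is true.")
    lines.append(f"Question: is v{n + len(gates) - 1} true?")
    return "\n".join(lines)


inputs = [True, False, True]
gates = make_dag(num_inputs=3, num_gates=5, seed=1)
print(render(inputs, gates))
print("Answer:", evaluate(inputs, gates)[-1])
```

The depth and width of such graphs give a natural dial for the number of dependent reasoning steps a model must resolve.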

Toward Sustainable Intelligence: The Future of Efficient Reasoning

Large language models, while powerful, often demand substantial computational resources, which hinders their deployment on devices with limited processing power or energy. Recent advances, however, show that compressing the reasoning process, that is, reducing the number of steps required to reach a conclusion, offers a way around these limitations. Such compression need not sacrifice accuracy; instead, it streamlines the model's reasoning, allowing comparable, or even superior, performance with significantly less computation. The implications are considerable: AI capabilities could become practical on mobile devices, embedded systems, and other resource-constrained environments where complex reasoning was previously out of reach, broadening access to intelligent systems.

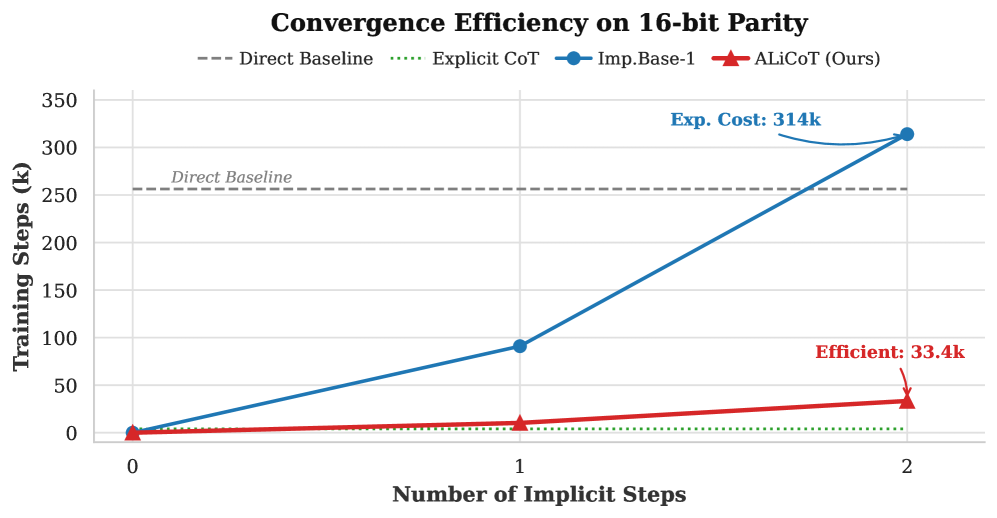

A deeper investigation into the relationship between the difficulty of reasoning tasks and the effectiveness of compression methods is essential for advancing artificial intelligence. Current techniques demonstrate impressive speedups, such as ALiCoT's 54.40x acceleration, but they are not universally applicable; their performance can vary considerably with the inherent complexity of the problem being solved. Future studies should systematically analyze how different compression strategies interact with task difficulty, identifying which approaches remain effective as difficulty increases. Understanding these interactions would enable adaptive compression algorithms that adjust dynamically to the demands of a given task, leading to more robust, efficient, and broadly applicable AI systems. Such targeted research could unlock the full potential of compressed reasoning and extend what is achievable with limited computational resources.

The development of streamlined reasoning processes is paving the way for more sustainable and broadly accessible AI systems. Recent methods such as ALiCoT demonstrate a significant leap in efficiency: ALiCoT achieves a 54.40x speedup over traditional explicit Chain-of-Thought reasoning while preserving near-perfect reasoning accuracy. The gains are not limited to inference. ALiCoT reaches 100% accuracy in just 33.4k training steps, compared with the 314k steps needed by conventional implicit methods, roughly 9.4 times fewer. Faster learning and a lighter computational load suggest a future in which complex reasoning is attainable even on resource-constrained devices, broadening the applications of AI while reducing its environmental impact.

The pursuit of efficient reasoning within large language models, as explored in this analysis of Chain-of-Thought compression, necessitates a focus on systemic integrity. Just as a city’s infrastructure requires evolutionary adaptation rather than wholesale reconstruction, so too must these models embrace structural refinement. Barbara Liskov aptly stated, “It’s one of the great failures of the computer field that we’ve been so focused on what computers can do, and not what they should do.” This sentiment resonates with the paper’s investigation into the limits of compression – acknowledging that maximizing efficiency cannot come at the cost of logical soundness or the preservation of the reasoning process. The ALiCoT alignment technique, proposed to address gradient signal decay, exemplifies this principle – a targeted intervention designed to fortify the underlying structure without necessitating a complete overhaul of the model.

Where Do We Go From Here?

The investigation into Chain-of-Thought compression reveals, predictably, that elegance is not a feature of scale. The exponential complexity identified is less a brick wall, and more a symptom of attempting to force organic processes into rigid architectures. If the system looks clever, it’s probably fragile. The NatBool-DAG, while offering a framework for understanding implicit reasoning, ultimately highlights how much remains obscured – the true cost of ‘compression’ being not simply parameter reduction, but a loss of representational fidelity. One suspects the gradient signal decay isn’t a bug, but a fundamental constraint; nature rarely optimizes for perfect transmission.

Future work will likely focus on architectural innovations – attempts to ‘scaffold’ reasoning without explicitly encoding every step. But a more fruitful avenue might be a reassessment of the objective. Are models meant to mimic human reasoning, or to achieve comparable results through fundamentally different mechanisms? The pursuit of interpretability, while laudable, could be a distraction. After all, a black box that functions reliably is often preferable to a transparent one prone to spectacular failure.

Architecture, it must be remembered, is the art of choosing what to sacrifice. ALiCoT offers a temporary reprieve, a means of delaying the inevitable decay. However, the underlying tension remains: the desire for both expressive power and computational efficiency. Resolving this will require not just better algorithms, but a deeper understanding of the inherent limitations of information processing itself.

Original article: https://arxiv.org/pdf/2601.21576.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-31 12:40