Author: Denis Avetisyan

As neuromorphic systems move from research labs to real-world applications, a comprehensive understanding of their unique security vulnerabilities is becoming critically important.

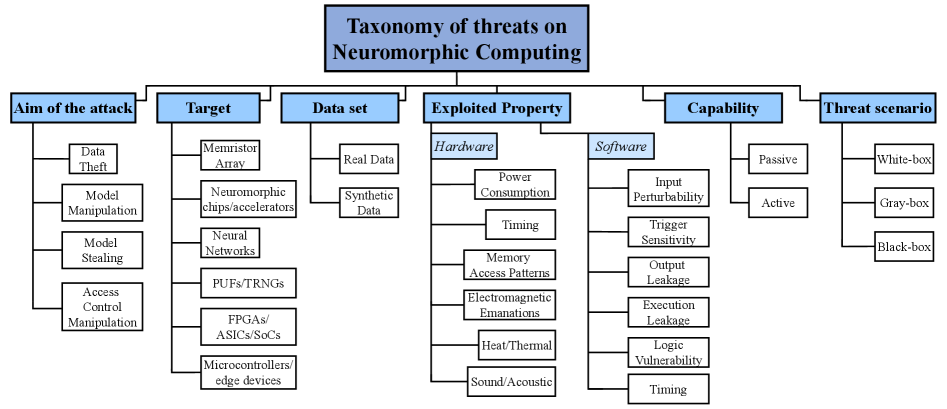

This survey analyzes emerging threats and countermeasures in neuromorphic computing, addressing hardware and software challenges for secure, next-generation platforms.

While neuromorphic computing promises energy-efficient and advanced computation through brain-inspired architectures, its emerging reliance on novel hardware and asynchronous processing introduces a new landscape of security vulnerabilities. This survey, ‘Emerging Threats and Countermeasures in Neuromorphic Systems: A Survey’, systematically analyzes these threats, ranging from hardware attacks targeting memristive devices to side-channel vulnerabilities in spiking neural networks, and explores corresponding countermeasures. Our analysis reveals a critical need for cross-layer security solutions that address both hardware primitives like Physical Unclonable Functions and software implementations of secure computation. Can a unified approach to security be developed to ensure the trustworthiness of these next-generation computing platforms and unlock their full potential?

Deconstructing the von Neumann Bottleneck: A New Neural Architecture

Contemporary computing systems, largely based on the von Neumann architecture, encounter significant bottlenecks as artificial intelligence demands increase. This architecture necessitates constant data transfer between processing units and memory, creating a substantial energy drain and limiting processing speed, a phenomenon known as the “von Neumann bottleneck.” While faster processors and higher memory bandwidth offer temporary relief, these measures are nearing physical limits and incur escalating power consumption. The sequential nature of these operations also hinders the efficient processing of inherently parallel data, such as images or audio, and requires complex software to emulate parallelism. Consequently, advancements in AI, particularly in areas like real-time learning and complex pattern recognition, are increasingly constrained not by algorithmic innovation but by the fundamental limitations of the underlying hardware.

Neuromorphic computing represents a significant departure from traditional computer architecture, seeking to replicate the brain’s remarkable efficiency and adaptability. Instead of shuttling data back and forth between separate processing and memory units, neuromorphic systems aim to perform computation within memory, mirroring the brain’s distributed and parallel processing. This approach promises not only dramatic reductions in power consumption, potentially enabling AI on edge devices, but also a pathway to scalable intelligence that can handle complex, real-world data with greater resilience and speed. By embracing principles of sparsity, asynchronous processing, and localized learning, these biologically inspired systems offer a compelling alternative for tackling increasingly demanding computational tasks and unlocking the full potential of artificial intelligence.

Neuromorphic systems avoid the energy cost of moving data between processor and memory through in-memory computing, performing calculations directly within the memory itself. Crucially, this is enabled by novel devices such as memristors, which act essentially as electronic synapses. Unlike conventional logic transistors, these components retain their resistance value even when power is removed, mimicking the way biological synapses store information in the strength of their connections. Arranged in crossbar arrays, memristors can carry out the multiply-accumulate operations at the heart of neural networks in a massively parallel way. This emulates the brain’s energy-efficient computation and promises significant advances in machine learning at a fraction of the power demanded by conventional architectures.
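To illustrate how a memristor crossbar computes, the sketch below models an ideal array. Each row receives an input voltage, and each device contributes a current equal to its conductance times that voltage (Ohm's law). Each column wire then sums those currents (Kirchhoff's current law), yielding a matrix-vector product in a single read. The function names, the differential-pair mapping for signed weights, and the `g_unit` scale are illustrative assumptions. Real arrays also suffer from wire resistance, sneak paths, device nonlinearity and device variability, none of which are modeled here.

```python
from typing import List, Sequence, Tuple


def crossbar_mvm(conductances: Sequence[Sequence[float]],
                 voltages: Sequence[float]) -> List[float]:
    """Ideal crossbar read: column current I_j = sum_i G[i][j] * V[i].

    Ohm's law gives each device's current (G * V); Kirchhoff's current law
    sums the device currents flowing into each column wire.
    """
    rows, cols = len(conductances), len(conductances[0])
    if len(voltages) != rows:
        raise ValueError("need one input voltage per crossbar row")
    return [sum(conductances[i][j] * voltages[i] for i in range(rows))
            for j in range(cols)]


def signed_weights_to_pair(weights: Sequence[Sequence[float]],
                           g_unit: float) -> Tuple[List[List[float]], List[List[float]]]:
    """Hypothetical mapping of signed weights onto two non-negative arrays.

    A single device cannot have negative conductance, so each weight is
    split as w = (G_plus - G_minus) / g_unit.
    """
    g_pos = [[max(w, 0.0) * g_unit for w in row] for row in weights]
    g_neg = [[max(-w, 0.0) * g_unit for w in row] for row in weights]
    return g_pos, g_neg


def signed_mvm(weights: Sequence[Sequence[float]],
               voltages: Sequence[float], g_unit: float = 1e-6) -> List[float]:
    """Signed dot products recovered from the difference of two column currents."""
    g_pos, g_neg = signed_weights_to_pair(weights, g_unit)
    i_pos = crossbar_mvm(g_pos, voltages)
    i_neg = crossbar_mvm(g_neg, voltages)
    return [(p - n) / g_unit for p, n in zip(i_pos, i_neg)]
```

For example, `signed_mvm([[1.0, -2.0], [0.5, 3.0]], [1.0, 2.0])` returns values very close to `[2.0, 4.0]`. That is the ordinary product of the input vector with the weight matrix, computed here as currents.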

Unlike traditional cameras that capture frames at fixed intervals, event cameras operate on a fundamentally different principle: they detect and report changes in brightness asynchronously. This results in data streams characterized by temporal sparsity – information is only recorded when something actually moves or changes – mirroring the efficient, event-driven processing of the biological nervous system. Consequently, event-based vision provides a natural input modality for neuromorphic systems, drastically reducing data redundancy and computational load. This sparse representation not only lowers power consumption but also allows these biologically inspired architectures to focus on salient features, improving responsiveness and enabling real-time processing of dynamic scenes. The asynchronous nature of event data aligns perfectly with the spike-based communication within neuromorphic chips, paving the way for more energy-efficient and robust artificial intelligence systems.
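A frame-based simulation makes the event-generation principle concrete. The sketch below assumes an idealized sensor in which a pixel emits an event whenever its log intensity has changed by at least a contrast threshold since its last event. The threshold value, the frame sampling, and the one-event-per-pixel-per-frame simplification are all assumptions. Real event cameras respond asynchronously and can emit several events for a single large change.

```python
import math
from typing import List, Sequence, Tuple

Event = Tuple[int, int, float, int]  # (x, y, timestamp, polarity)


def generate_events(frames: Sequence[Sequence[Sequence[float]]],
                    timestamps: Sequence[float],
                    contrast_threshold: float = 0.2) -> List[Event]:
    """Emit (x, y, t, polarity) when a pixel's log intensity has moved by at
    least `contrast_threshold` since that pixel's last event."""
    height, width = len(frames[0]), len(frames[0][0])
    # Per-pixel reference level: log intensity at the pixel's last event.
    reference = [[math.log(frames[0][y][x]) for x in range(width)]
                 for y in range(height)]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                level = math.log(frame[y][x])
                delta = level - reference[y][x]
                if abs(delta) >= contrast_threshold:
                    events.append((x, y, t, 1 if delta > 0 else -1))
                    reference[y][x] = level  # reset reference after firing
    return events
```

A static scene produces no events at all. Only pixels whose brightness changes report anything, which is the temporal sparsity described above. For instance, doubling one pixel of an otherwise constant 2×2 frame yields exactly one positive-polarity event.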

Unveiling the Attack Surface: Neuromorphic System Vulnerabilities

Spiking Neural Networks (SNNs), despite their advantages in energy efficiency, share fundamental vulnerabilities with conventional deep learning models. Specifically, SNNs are susceptible to adversarial attacks where carefully crafted input perturbations cause misclassification; backdoor attacks, enabling attackers to trigger specific, unintended behaviors; membership inference attacks, revealing whether a particular data point was used in training; and model inversion attacks, allowing reconstruction of training data from model parameters or outputs. These attacks leverage the network’s learned weights and biases to compromise its integrity and confidentiality, mirroring the threats faced by Artificial Neural Networks (ANNs). The susceptibility arises from the shared algorithmic foundations of both network types, despite differences in their computational paradigms and data representation.
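The adversarial case takes a particularly simple form in spiking neurons, because their output depends on whether the membrane potential crosses a threshold. The sketch below uses a textbook discrete-time leaky integrate-and-fire (LIF) neuron with hypothetical parameters (`beta`, `threshold` and a constant input current). It shows how a perturbation of about 2% to an input near threshold changes the output from silence to spiking.

```python
def lif_spike_count(current: float, steps: int = 100,
                    beta: float = 0.9, threshold: float = 1.0) -> int:
    """Discrete-time leaky integrate-and-fire neuron with constant input.

    v[t+1] = beta * v[t] + current; a spike is emitted when v >= threshold,
    after which the membrane potential is reset to zero.
    """
    v, spikes = 0.0, 0
    for _ in range(steps):
        v = beta * v + current
        if v >= threshold:
            spikes += 1
            v = 0.0
    return spikes


# The potential settles toward current / (1 - beta): 0.99 vs. 1.01 here.
clean = lif_spike_count(0.099)      # never reaches threshold
perturbed = lif_spike_count(0.101)  # about 2% larger input
print(clean, perturbed)
```

Run as written, the sketch prints `0 2`. The clean input settles just below threshold, while the slightly larger one fires twice within 100 steps. Adversarial attacks on full networks look for input changes with this kind of effect, typically spread across many neurons.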

Neuromorphic systems, due to their specialized hardware, are vulnerable to attacks not typically seen in traditional software-based deep learning. Side-channel attacks exploit information leaked through physical characteristics during computation, such as power consumption, electromagnetic radiation, or timing variations, to infer sensitive data or model parameters. Fault injection attacks, by contrast, actively introduce errors into the hardware (through methods like voltage glitching, laser injection, or electromagnetic pulses) to alter system behavior, bypass security checks, or extract information. Because these attacks exploit the direct coupling between the physical layer and computation, they represent a significant security concern for deployed neuromorphic applications.
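Fault injection is easiest to picture at the level of a single stored bit. The sketch below assumes a neuron whose weights are held as 8-bit two's-complement integers, and it models a successful glitch or laser shot as one flipped bit. The weights, inputs and threshold are hypothetical. Flipping the sign bit of a single weight is enough to silence a neuron that should have fired.

```python
from typing import List, Sequence


def to_int8(value: int) -> int:
    """Wrap an integer into the signed 8-bit range (two's complement)."""
    return ((value + 128) % 256) - 128


def flip_bit(weight: int, bit: int) -> int:
    """Model a single-bit fault: flip one bit of an int8 weight."""
    if not 0 <= bit < 8:
        raise ValueError("bit must be in 0..7 for an 8-bit weight")
    return to_int8((weight & 0xFF) ^ (1 << bit))


def fires(weights: Sequence[int], inputs: Sequence[int], threshold: int) -> bool:
    """Threshold neuron: fire if the weighted input sum reaches the threshold."""
    return sum(w * x for w, x in zip(weights, inputs)) >= threshold


weights: List[int] = [3, 2, -1]
inputs = [1, 1, 1]
faulty = [flip_bit(weights[0], 7)] + weights[1:]  # flip the sign bit of w0
print(weights, fires(weights, inputs, 4))  # weighted sum 4: fires
print(faulty, fires(faulty, inputs, 4))    # weighted sum -124: silent
```

After the fault, the first weight reads as `-125` instead of `3`. A single upset in a high-order bit moves the weight across almost its entire range, which is why such bits are especially attractive targets.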

Neuromorphic systems, leveraging physical phenomena for computation, exhibit inherent device-to-device variations in parameters like threshold voltages, synaptic weights, and interconnect delays. These variations come with the analog, nanoscale devices that make such designs compact and energy-efficient, and they introduce vulnerabilities that attackers can exploit. Subtle alterations in operating conditions, such as temperature, supply voltage, or electromagnetic interference, can amplify these natural variations, causing predictable shifts in neuron firing patterns or synaptic plasticity. Attackers could then leverage these manipulated variations to induce misclassification, extract sensitive information, or even gain control over the system’s functionality. This differs from traditional digital systems, where variations are largely masked by abstraction layers and precise manufacturing processes.
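The interaction between fixed device mismatch and a shared shift in operating conditions can be sketched in a few lines. The model below is hypothetical. Thresholds vary from device to device by a Gaussian relative spread, and a change in temperature or supply voltage is represented as a uniform offset added to every threshold. Neurons whose thresholds happen to sit close to their input drive flip first, which is the kind of predictable shift an attacker could aim to induce.

```python
import random
from typing import List


def sample_thresholds(n: int, nominal: float, sigma: float, seed: int) -> List[float]:
    """Per-device thresholds with Gaussian relative mismatch (hypothetical model)."""
    rng = random.Random(seed)
    return [nominal * (1.0 + sigma * rng.gauss(0.0, 1.0)) for _ in range(n)]


def firing_pattern(drive: float, thresholds: List[float], offset: float = 0.0) -> List[bool]:
    """A neuron fires if its drive reaches its (possibly shifted) threshold."""
    return [drive >= th + offset for th in thresholds]


def count_flips(drive: float, thresholds: List[float], offset: float) -> int:
    """Number of firing decisions that change when every threshold shifts by `offset`."""
    base = firing_pattern(drive, thresholds)
    shifted = firing_pattern(drive, thresholds, offset)
    return sum(b != s for b, s in zip(base, shifted))


thresholds = sample_thresholds(1000, nominal=1.0, sigma=0.05, seed=7)
for offset in (0.0, -0.01, -0.05):
    print(offset, count_flips(1.0, thresholds, offset))
```

With a zero offset no decisions change. As the offset grows more negative, the number of flipped neurons can only increase, because every neuron that flips at a smaller shift also flips at a larger one in the same direction.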

Physical Unclonable Functions (PUFs) are emerging as a hardware security primitive for neuromorphic systems. These functions leverage inherent, random physical variations introduced during device manufacturing to create a unique ‘fingerprint’. A recent implementation uses Convolutional Neural Networks (CNNs) to classify the frequency-domain responses of neuromorphic hardware, achieving a reported uniqueness of 97%. This figure indicates a high degree of differentiation between individual devices and suggests potential for device authentication or key generation. The CNN-based classification method thus offers a promising and potentially scalable way to assess and exploit the inherent randomness of these physical characteristics for security purposes.
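Uniqueness is not defined identically across the PUF literature. A widely used metric is the average pairwise Hamming distance between the responses of different devices to the same challenge, expressed as a percentage with 50% as the ideal. Since 97% would be far from ideal on that scale, the figure reported for the CNN-based scheme evidently follows a different, classification-based notion of uniqueness. The sketch below computes the conventional Hamming-distance metric from response bit strings. The function names and example responses are hypothetical.

```python
from itertools import combinations
from typing import Sequence


def hamming_distance(a: str, b: str) -> int:
    """Number of bit positions where two equal-length responses differ."""
    if len(a) != len(b):
        raise ValueError("responses must have equal length")
    return sum(x != y for x, y in zip(a, b))


def uniqueness(responses: Sequence[str]) -> float:
    """Mean pairwise inter-device Hamming distance in percent (ideal: 50%)."""
    if len(responses) < 2:
        raise ValueError("need responses from at least two devices")
    n_bits = len(responses[0])
    pairs = list(combinations(responses, 2))
    total = sum(hamming_distance(a, b) / n_bits for a, b in pairs)
    return 100.0 * total / len(pairs)
```

For three 4-bit responses `"0011"`, `"0101"` and `"0110"`, every pair differs in exactly two bits, so `uniqueness` returns `50.0`.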

Fortifying the Synthetic Brain: Defenses and Countermeasures

Robust training methodologies are increasingly employed to improve the resilience of spiking neural networks (SNNs) against adversarial attacks. These methods typically augment the training dataset with adversarial examples (inputs intentionally perturbed to cause misclassification), so that the SNN learns to classify such manipulated inputs correctly. Several techniques have been reported to improve SNN accuracy under attack. Adversarial retraining repeatedly retrains the network with newly generated adversarial examples, and defensive distillation smooths the network’s decision boundaries, although stronger attacks have been shown to circumvent defensive distillation in conventional networks. Incorporating noise injection during training can further increase robustness by simulating real-world input variations and preventing overfitting to specific adversarial patterns. While complete immunity remains a challenge, these approaches substantially mitigate the impact of adversarial perturbations on SNN performance.
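Adversarial examples for the augmentation step are often produced with the fast gradient sign method (FGSM). FGSM nudges each input feature by a fixed amount ε in the direction that increases the loss. The sketch below applies FGSM to a hypothetical logistic-regression model rather than an SNN. Spike generation is not differentiable, so SNN training usually substitutes surrogate gradients, but the augmentation logic is the same once an input gradient is available.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Linear score; the model predicts class 1 when it is positive."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def input_gradient(x, y, w, b) -> List[float]:
    """Gradient of binary cross-entropy w.r.t. the input: (p - y) * w."""
    p = sigmoid(score(x, w, b))
    return [(p - y) * wi for wi in w]


def fgsm(x, y, w, b, eps: float) -> List[float]:
    """Fast gradient sign method: step each feature by eps along sign(grad)."""
    grad = input_gradient(x, y, w, b)
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def adversarial_batch(batch: List[Tuple[List[float], int]], w, b, eps: float):
    """Augment a batch with one FGSM copy per example (labels unchanged)."""
    return list(batch) + [(fgsm(x, y, w, b, eps), y) for x, y in batch]


w, b = [1.0, -2.0], 0.0
x, y = [1.0, 0.0], 1
x_adv = fgsm(x, y, w, b, eps=0.5)
print(score(x, w, b), score(x_adv, w, b))
```

With these values the perturbed input is `[0.5, 0.5]`, whose score drops from `1.0` to `-0.5` and flips the prediction. `adversarial_batch` returns the clean examples plus one perturbed copy of each, ready for a retraining step.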

Watermarking techniques for machine learning models embed a signal within the model’s parameters, allowing verification of ownership and detection of unauthorized copies. These methods typically modify model weights subtly during training, introducing a pattern that is detectable only with knowledge of the embedding key. Detection extracts this pattern to confirm the model’s provenance. Watermarks can be either fragile, designed to be destroyed by even minor modifications, or robust, persisting through transformations like quantization or pruning. A watermark’s reliability is evaluated by two error rates. Its false positive rate is the probability of flagging an independently developed model as a copy, and its false negative rate is the probability of missing the watermark in a genuine copy. Keeping both low is crucial for effective intellectual property protection as machine learning models become increasingly accessible and easy to replicate.
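Here is a minimal sketch of key-based weight watermarking under simplifying assumptions. The secret key assigns each watermark bit a disjoint random group of weights with random signs, and a bit is read as the sign of the signed sum over its group. Embedding nudges each group just far enough past zero to encode the desired bit. Published schemes typically embed such bits through a regularization term during training rather than by editing weights afterwards. All names and parameters below (`make_key`, `margin`, the group size) are hypothetical.

```python
import random
from typing import List, Tuple

Key = List[Tuple[List[int], List[int]]]  # per bit: (weight indices, signs)


def make_key(n_weights: int, n_bits: int, group: int, seed: int) -> Key:
    """Secret key: disjoint random index groups, each with random +/-1 signs."""
    if n_bits * group > n_weights:
        raise ValueError("not enough weights for disjoint groups")
    rng = random.Random(seed)
    idx = list(range(n_weights))
    rng.shuffle(idx)
    key: Key = []
    for j in range(n_bits):
        members = idx[j * group:(j + 1) * group]
        key.append((members, [rng.choice((-1, 1)) for _ in members]))
    return key


def projection(weights: List[float], members: List[int], signs: List[int]) -> float:
    return sum(s * weights[i] for i, s in zip(members, signs))


def embed(weights: List[float], bits: List[int], key: Key, margin: float = 0.05) -> List[float]:
    """Shift each group so its projection has the bit's sign with at least `margin`."""
    w = list(weights)
    for bit, (members, signs) in zip(bits, key):
        target = 1 if bit else -1
        gap = margin - target * projection(w, members, signs)
        if gap > 0:
            delta = gap / len(members)  # spread the change evenly over the group
            for i, s in zip(members, signs):
                w[i] += target * s * delta
    return w


def extract(weights: List[float], key: Key) -> List[int]:
    return [int(projection(weights, m, s) > 0) for m, s in key]


rng = random.Random(0)
weights = [rng.gauss(0.0, 0.1) for _ in range(256)]
key = make_key(len(weights), n_bits=16, group=8, seed=42)
message = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]
marked = embed(weights, message, key)
print(extract(marked, key) == message)
```

Extraction with the right key recovers the embedded message exactly. The `margin` parameter trades robustness against distortion: a larger margin tends to make the bits harder to flip through pruning, quantization or fine-tuning, but it changes the weights more.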

Differential privacy addresses data security concerns during machine learning model training by adding carefully calibrated noise to the training data or the learning process itself. This bounds how much any single training example can influence the model, so the model learns general patterns without memorizing individual data points. As a result, it provably limits membership inference and mitigates model inversion attacks, in which an attacker attempts to reconstruct sensitive information about training examples from the model’s parameters or outputs. The level of privacy is controlled by a privacy parameter, ε, with lower values indicating stronger privacy guarantees but potentially reduced model utility. Implementations include clipping per-example gradients and adding Gaussian (or Laplace) noise during stochastic gradient descent, as well as input and output perturbation. All of these obscure individual contributions to the learning process while bounding the potential information leakage.
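The gradient-perturbation approach can be sketched as a single DP-SGD aggregation step. Each example's gradient is first clipped to a maximum L2 norm, which bounds any one example's influence. The clipped gradients are then summed, Gaussian noise scaled to that bound is added, and the result is averaged. This sketch assumes gradients are plain Python lists. Turning `noise_multiplier` into a concrete ε additionally requires a privacy accountant across all training steps, which is not shown.

```python
import math
import random
from typing import List, Sequence


def clip(grad: Sequence[float], clip_norm: float) -> List[float]:
    """Scale a per-example gradient so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def dp_sgd_gradient(per_example_grads: Sequence[Sequence[float]], clip_norm: float,
                    noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        for k, g in enumerate(clip(grad, clip_norm)):
            total[k] += g
    # Noise standard deviation is tied to the per-example sensitivity (clip_norm).
    sigma = noise_multiplier * clip_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [v / len(per_example_grads) for v in noisy]
```

Consider the gradients `[3.0, 4.0]` and `[0.3, 0.4]` with `clip_norm=1.0` and the noise disabled (`noise_multiplier=0.0`). They average to approximately `[0.45, 0.6]`: the first is scaled down to norm 1, while the second already lies within the bound.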

True Random Number Generators (TRNGs) are crucial hardware-based security primitives that provide non-deterministic randomness sourced from physical phenomena. Unlike Pseudo-Random Number Generators (PRNGs), which expand a seed with a deterministic algorithm, TRNGs offer the unpredictability essential for cryptographic applications and security protocols. TRNG output is commonly evaluated with statistical test suites, notably the NIST Statistical Test Suite. High pass rates across its tests, including those for frequency, runs, and serial correlations, show that the output cannot be distinguished from a truly random source with respect to the properties tested. Passing does not by itself prove unpredictability, so test results are usually complemented by an analysis of the underlying entropy source. Consistently high pass rates nonetheless indicate a robust source of randomness suitable for security-critical applications requiring unpredictable keys, initialization vectors, or nonces.
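Two of the tests mentioned above, the frequency (monobit) test and the runs test, are short enough to sketch directly from their definitions in NIST SP 800-22. Each returns a P-value, and a sequence is commonly judged to pass when the P-value is at least 0.01. This is an illustrative re-implementation, not the NIST reference code. The suite recommends sequences of at least 100 bits for these tests.

```python
import math
from typing import Sequence

ALPHA = 0.01  # significance level recommended in SP 800-22


def monobit_p_value(bits: Sequence[int]) -> float:
    """Frequency (monobit) test: are ones and zeros roughly balanced?"""
    n = len(bits)
    s = sum(1 if b else -1 for b in bits)  # +1 per one, -1 per zero
    s_obs = abs(s) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


def runs_p_value(bits: Sequence[int]) -> float:
    """Runs test: is the number of uninterrupted runs as expected?

    Returns 0.0 when the frequency prerequisite fails, as SP 800-22 specifies.
    """
    n = len(bits)
    pi = sum(bits) / n
    tau = 2 / math.sqrt(n)
    if pi in (0.0, 1.0) or abs(pi - 0.5) >= tau:
        return 0.0
    runs = 1 + sum(bits[k] != bits[k + 1] for k in range(n - 1))
    num = abs(runs - 2 * n * pi * (1 - pi))
    den = 2 * math.sqrt(2 * n) * pi * (1 - pi)
    return math.erfc(num / den)
```

SP 800-22 gives 10-bit worked examples: `1011010101` for the monobit test and `1001101011` for the runs test. On these, the functions give P-values of about 0.527 and 0.147, matching the published values.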

Current research focuses on minimizing information leakage through side-channel attacks targeting spiking neural networks (SNNs). These attacks exploit unintended emissions, such as power consumption or electromagnetic radiation, to infer sensitive data or model parameters. While SNNs, including lightweight implementations, offer potential advantages in energy efficiency, studies also demonstrate their susceptibility to adversarial inputs designed to degrade performance or extract information. Consequently, the development of robust defense mechanisms, alongside techniques to reduce side-channel leakage, is crucial for the secure deployment of SNNs in sensitive applications.
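One way to reduce activity-dependent leakage is to make the amount of work independent of the input. The sketch below uses the number of synaptic updates as a crude stand-in for power and latency, which is an assumption. It compares an event-driven layer, whose work scales with how many inputs spike, against a hypothetical balanced variant that performs dummy updates for silent inputs. The balanced version gives identical outputs with a constant operation count, but it gives up the sparsity that makes SNNs efficient.

```python
from typing import List, Sequence, Tuple


def event_driven_layer(spikes: Sequence[int],
                       weights: Sequence[Sequence[float]]) -> Tuple[List[float], int]:
    """Accumulate weights only for active inputs; return (outputs, op_count)."""
    n_out = len(weights[0])
    acc = [0.0] * n_out
    ops = 0
    for i, s in enumerate(spikes):
        if s:  # work scales with input activity: this is the leak
            for j in range(n_out):
                acc[j] += weights[i][j]
                ops += 1
    return acc, ops


def balanced_layer(spikes: Sequence[int],
                   weights: Sequence[Sequence[float]]) -> Tuple[List[float], int]:
    """Same outputs, but silent inputs update a discarded scratch buffer."""
    n_out = len(weights[0])
    acc = [0.0] * n_out
    scratch = [0.0] * n_out  # dummy target; its contents are never used
    ops = 0
    for i, s in enumerate(spikes):
        # In real hardware this selection itself must not leak (timing, memory access).
        target = acc if s else scratch
        for j in range(n_out):
            target[j] += weights[i][j]
            ops += 1
    return acc, ops
```

In this model, a quiet input and a busy input cost different numbers of operations in the event-driven layer but the same number in the balanced one. A real implementation would also need the dummy updates to be indistinguishable in timing, memory access pattern and electromagnetic profile, which an operation count does not capture.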

The survey meticulously details the inherent vulnerabilities within neuromorphic systems, revealing a landscape where traditional security measures falter. It’s a compelling demonstration of how understanding a system necessitates probing its limits, essentially attempting to break it to reveal its weaknesses. This approach aligns perfectly with the sentiment expressed by Vinton Cerf: “If you can’t break it, you don’t understand it.” The article’s exploration of side-channel attacks and the need for novel countermeasures, like Physical Unclonable Functions, isn’t simply about building defenses; it’s about reverse-engineering the very fabric of these in-memory computing architectures to truly comprehend their security posture. The work isn’t just about preventing breaches, but about knowing how they could occur.

What Lies Ahead?

The exercise of securing neuromorphic systems reveals, predictably, that the very innovations intended to mimic biological efficiency introduce entirely novel failure modes. A reliance on inherent device variability – the quirks of fabrication considered ‘features’ – proves a double-edged sword. Physical Unclonable Functions, born from this variability, offer a certain elegance, yet the temptation to predict that unpredictability remains strong. The field now faces a peculiar paradox: how to rigorously characterize randomness without, in the process, diminishing it. The current focus on hardware-level defenses, while necessary, feels… incomplete. It’s akin to fortifying a castle built on sand, ignoring the tides of algorithmic exploitation.

True Random Number Generators, a cornerstone of any secure system, are particularly intriguing in this context. If genuine randomness arises from the chaotic dynamics within the neuromorphic substrate itself, then the challenge shifts from generation to extraction – and proving that extraction hasn’t subtly altered the underlying process. The current trend towards cross-layer security is a sensible response, but it feels less like a solution and more like a postponement of the inevitable. Someone, somewhere, will inevitably find a way to use the system’s strengths against itself.
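A classic example of the extraction step is von Neumann debiasing. Suppose the raw bits are independent and share a fixed bias. Then the pairs `01` and `10` are equally likely, so mapping them to `0` and `1` and discarding `00` and `11` yields unbiased output. The sketch below uses a simulated source with a hypothetical 80% bias. It does not correct correlations between bits, which is exactly why an extractor must be justified against the physical process it consumes.

```python
import random
from typing import List, Sequence


def von_neumann_extract(bits: Sequence[int]) -> List[int]:
    """Read non-overlapping pairs: 01 -> 0, 10 -> 1, drop 00 and 11."""
    out = []
    for a, b in zip(bits[0::2], bits[1::2]):
        if a != b:
            out.append(a)
    return out


# Usage: a simulated, hypothetical biased source with P(1) = 0.8.
rng = random.Random(1)
raw = [int(rng.random() < 0.8) for _ in range(20000)]
clean = von_neumann_extract(raw)
print(sum(raw) / len(raw), sum(clean) / len(clean), len(clean))
```

The fraction of ones in the output lands close to 0.5, but only about 16% as many bits survive (2 · 0.8 · 0.2 = 0.32 per pair, or 0.16 per raw bit). This illustrates the throughput cost of post-processing.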

Ultimately, the future likely lies not in preventing attacks, but in building systems resilient enough to absorb them. Neuromorphic computing, in its attempt to model the brain, may inadvertently require a similar level of redundancy and self-repair. The goal shouldn’t be perfect security – a fantasy – but graceful degradation. A system that fails…interestingly. Perhaps, then, it will have truly learned something.

Original article: https://arxiv.org/pdf/2601.16589.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-26 11:41