Author: Denis Avetisyan

A new compilation framework addresses the challenges of mid-circuit measurements, enhancing the fidelity of quantum algorithms on near-term hardware.

This work introduces MERA, a framework integrating mid-circuit measurement error awareness into quantum circuit compilation for improved performance and qubit reuse.

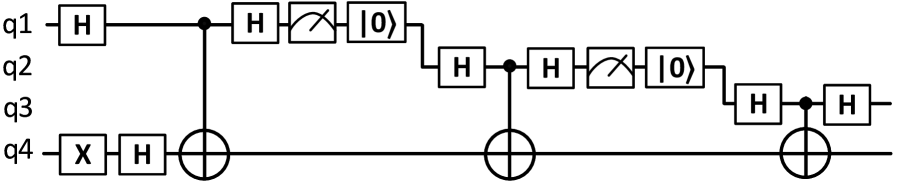

While mid-circuit measurement (MCM) offers pathways to more efficient quantum algorithms and error correction, its implementation introduces significant, qubit-dependent errors often overlooked by existing compilation tools. This work presents ‘A Compilation Framework for Quantum Circuits with Mid-Circuit Measurement Error Awareness’, introducing MERA—a novel framework that integrates MCM error profiling into qubit mapping, routing, and scheduling via dynamic decoupling. Experimental results on benchmark circuits demonstrate that MERA achieves substantial fidelity improvements—up to 52.00% over standard compilers and 122.58% on circuits generated by state-of-the-art qubit reuse compilers—without increasing circuit overhead. Could MERA-like error-aware compilation become a crucial step toward realizing practical, fault-tolerant quantum computation on near-term devices?

The Noise Within: Confronting Limitations in Quantum Compilation

The pursuit of quantum computation hinges on the potential to solve problems currently beyond the reach of classical computers, offering transformative advancements in fields like materials science, drug discovery, and cryptography. However, this promise is currently constrained by the realities of the Noisy Intermediate-Scale Quantum (NISQ) era. Qubits, the fundamental units of quantum information, are extraordinarily sensitive to environmental disturbances – stray electromagnetic fields, temperature fluctuations, and even cosmic rays – leading to rapid decoherence and computational errors. This inherent fragility necessitates extremely precise control and isolation of qubits, a significant engineering challenge. While the number of qubits in experimental devices is steadily increasing, maintaining their coherence long enough to perform complex calculations remains a critical obstacle, effectively limiting the depth and reliability of quantum algorithms achievable with present-day technology. The pursuit of error correction and fault-tolerant quantum computing is ongoing, but for the foreseeable future, computations will be susceptible to noise, demanding novel strategies to mitigate these effects and extract meaningful results.

Current quantum compilers, such as the Qiskit compiler, focus predominantly on gate-level errors and largely overlook how mid-circuit measurement (MCM) errors degrade overall computation fidelity. On superconducting hardware, these errors arise when qubits are measured and reset partway through a circuit. They accumulate as circuit depth and the number of MCMs grow. Gate errors receive considerable attention in error mitigation. Imperfect MCM operations, which determine whether conditional logic and iterative algorithms succeed, remain largely unaddressed. This omission matters because MCM errors can come to dominate the error budget. When they do, complex algorithms become unreliable, and the gains from gate error reduction can be masked. A compiler that explicitly models and mitigates MCM errors is therefore needed to realize the full potential of near-term quantum computers.

Superconducting qubits are promising building blocks for quantum computers, but they are particularly susceptible to errors during mid-circuit measurement and reset. These MCM errors are not simply random bit flips, and their magnitude varies from qubit to qubit. After measurement, a qubit may fail to return to a known state, or the readout may misassign $|0\rangle$ and $|1\rangle$. Either imperfection propagates through the rest of the circuit. Every additional MCM adds another opportunity for error, so the accumulated noise limits the depth (the number of sequential operations) a circuit can reach before its output becomes unreliable. As the signal-to-noise ratio falls with circuit complexity, even theoretically powerful algorithms become impractical on near-term devices.
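The following minimal sketch shows why MCM errors compound with depth. It assumes each mid-circuit measurement is misassigned independently with a fixed probability. That is a simplification: it ignores reset failures and crosstalk onto neighboring qubits. The error rates used are hypothetical, not device data.

```python
from math import prod


def all_readouts_correct(error_rates):
    """Probability that every mid-circuit measurement in a sequence is
    assigned correctly, assuming independent errors (a simplification that
    ignores reset failures and measurement crosstalk)."""
    return prod(1.0 - e for e in error_rates)


# Hypothetical rates: ten MCMs at 2% each, then five MCMs on a "good"
# qubit (2%) versus a "bad" qubit (8%), illustrating qubit dependence.
print(f"{all_readouts_correct([0.02] * 10):.3f}")
print(f"{all_readouts_correct([0.02] * 5):.3f} vs {all_readouts_correct([0.08] * 5):.3f}")
```

Even a modest per-measurement error shrinks the joint success probability geometrically. This is also why the choice of *which* physical qubit hosts the measurements matters.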

MERA: An MCM-Aware Compilation Strategy

MERA is a compiler designed to mitigate the impact of mid-circuit measurement (MCM) errors during quantum circuit compilation. Existing quantum compilers generally do not account for MCM errors explicitly, even though their magnitude differs from qubit to qubit. MERA addresses this gap by building MCM error modeling into the compilation workflow itself. Qubit placement, the routing of quantum operations, and gate scheduling are all chosen to limit the propagation and accumulation of MCM-induced errors. Error mitigation is thus treated as a core compilation objective rather than a post-compilation concern.

MERA departs from conventional quantum compilers by incorporating MCM error models at three distinct stages. Existing compilers typically address error mitigation after compilation or not at all. MERA instead begins at the layout stage, where MCM errors influence qubit placement so as to minimize potential error propagation. Next comes an error-aware routing stage, which uses a modified SABRE algorithm to favor SWAP gate arrangements that accumulate less error. Finally, the MCM error model extends to the scheduling stage, where gate order and timing are optimized to further reduce the effect of these errors on circuit fidelity. This three-stage approach lets MERA address MCM errors proactively rather than reacting to them after circuit execution.

MERA's layout stage uses a cost function to optimize qubit placement with respect to predicted MCM errors. The function penalizes qubit adjacencies according to the anticipated frequency and severity of crosstalk. It takes into account both the physical distance between qubits and the estimated error probability of two-qubit gate operations. Minimizing this cost favors physical arrangements that reduce the error rate arising from MCM interactions and so inherently limit error propagation during circuit execution.
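The minimal sketch below illustrates the layout idea. It is not the paper's actual cost function. The sketch assumes a hypothetical cost with two terms:

* per-qubit MCM error, weighted by how often each logical qubit is measured mid-circuit;
* two-qubit gate error, with a flat per-hop penalty (`swap_penalty`, an invented constant) when a gate's qubits are not adjacent.

The sketch then searches all placements exhaustively. Exhaustive search is only feasible for tiny devices, and real compilers use heuristics instead.

```python
from itertools import permutations
from statistics import mean


def layout_cost(layout, mcm_counts, gate_pairs, mcm_err, cx_err, dist, swap_penalty=0.05):
    """Hypothetical MCM-aware layout cost (lower is better).
    layout: logical -> physical; mcm_counts: logical -> number of MCMs;
    gate_pairs: logical two-qubit gates; cx_err: frozenset edge -> error."""
    mean_cx = mean(list(cx_err.values()))
    cost = 0.0
    # Term 1: expected MCM error on the physical qubits chosen for measured qubits.
    for lq, n_meas in mcm_counts.items():
        cost += n_meas * mcm_err[layout[lq]]
    # Term 2: two-qubit gate error; non-adjacent pairs get a per-hop SWAP penalty.
    for a, b in gate_pairs:
        p, q = layout[a], layout[b]
        d = dist(p, q)
        if d == 1:
            cost += cx_err[frozenset((p, q))]
        else:
            cost += (d - 1) * swap_penalty + mean_cx
    return cost


def best_layout(logical, physical, **params):
    """Brute-force the placement with minimum cost (tiny devices only)."""
    candidates = (dict(zip(logical, perm)) for perm in permutations(physical, len(logical)))
    return min(candidates, key=lambda lay: layout_cost(lay, **params))


def line_distance(p, q):
    # Hypothetical 4-qubit line device: 0 - 1 - 2 - 3.
    return abs(p - q)


params = dict(
    mcm_counts={"a": 3},                      # logical qubit "a" is measured 3 times mid-circuit
    gate_pairs=[("a", "b"), ("b", "c")],
    mcm_err={0: 0.010, 1: 0.020, 2: 0.080, 3: 0.015},  # made-up per-qubit MCM errors
    cx_err={frozenset((0, 1)): 0.010, frozenset((1, 2)): 0.012, frozenset((2, 3)): 0.009},
    dist=line_distance,
)
best = best_layout(["a", "b", "c"], [0, 1, 2, 3], **params)
print(best, round(layout_cost(best, **params), 3))
```

With these made-up numbers, the frequently measured qubit `a` avoids physical qubit 2, which has the worst MCM error, while `b` still sits between its two partners.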

MERA's routing stage extends the SABRE routing method with a two-level ranking of candidate SWAP gates. The first level ranks SWAPs by their estimated error contribution to the circuit, based on the physical connectivity and error rates of the underlying hardware. The second level favors SWAPs that shorten the error propagation path, which limits how far errors introduced by these gates spread to subsequent qubits. According to the authors, this two-tiered ranking lets MERA choose SWAP placements that reduce the overall circuit error rate compared with traditional routing methods.
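The two-level ranking might look roughly like the sketch below. This is one plausible reading, not MERA's actual router, and all names are hypothetical.

* As in SABRE, only SWAPs touching qubits of the front layer (gates waiting to execute) are considered.
* A filter keeps only SWAPs that reduce the front layer's total distance, which guarantees progress.
* Primary key: the SWAP's error cost, approximated as 3 × the CNOT error on that edge.
* Secondary key: the remaining front-layer distance, used here as a stand-in for "error propagation path length".

```python
def rank_swaps(front_layer, layout, edges, dist, cx_err):
    """Rank candidate SWAPs by (error cost, remaining front-layer distance).
    front_layer: list of (logical_a, logical_b) gates ready to run.
    layout: logical -> physical; edges: iterable of physical couplings."""

    def front_distance(lay):
        return sum(dist(lay[a], lay[b]) for a, b in front_layer)

    base = front_distance(layout)
    active = {layout[q] for gate in front_layer for q in gate}
    phys_to_log = {p: lq for lq, p in layout.items()}
    scored = []
    for p, q in edges:
        if p not in active and q not in active:
            continue  # SABRE-style: only SWAPs touching front-layer qubits
        trial = dict(layout)
        if p in phys_to_log:
            trial[phys_to_log[p]] = q
        if q in phys_to_log:
            trial[phys_to_log[q]] = p
        new_dist = front_distance(trial)
        if new_dist >= base:
            continue  # keep only SWAPs that make routing progress
        error_cost = 3 * cx_err[frozenset((p, q))]  # a SWAP decomposes into 3 CNOTs
        scored.append(((error_cost, new_dist), (p, q)))
    scored.sort()  # level 1: error cost; level 2: remaining distance
    return [swap for _, swap in scored]


def line_distance(p, q):
    return abs(p - q)  # hypothetical line device 0 - 1 - 2 - 3


CX_ERR = {frozenset((0, 1)): 0.010, frozenset((1, 2)): 0.012, frozenset((2, 3)): 0.009}
swaps = rank_swaps([("a", "b")], {"a": 0, "b": 3}, [(0, 1), (1, 2), (2, 3)], line_distance, CX_ERR)
print(swaps)
```

Both surviving SWAPs shorten the distance equally, so the lower-error edge (2, 3) is ranked first. A distance-only router would have no preference between them.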

Orchestrating Quantum Circuits Through Intelligent Scheduling

The scheduling stage of MERA uses As Late As Possible (ALAP) scheduling. ALAP postpones each gate to the latest time slot that still respects data dependencies without lengthening the overall schedule. Superconducting qubits start in the ground state, so delaying their first gates keeps them out of fragile superposition states for longer. This shortens the idle windows in which they can decohere. ALAP can also reduce the time during which neighboring qubits are active simultaneously, which lowers exposure to crosstalk. Together, these effects help preserve quantum information and improve overall circuit fidelity.
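ALAP scheduling can be sketched as a backward pass over the circuit. In this generic sketch (not MERA's implementation), gates are given in program order as `(qubits, duration)` tuples with made-up durations. The forward pass (ASAP) fixes the total schedule length. The backward pass then pushes every gate as late as its successors allow.

```python
def asap(gates):
    """Earliest start times; gates are (qubits, duration) in program order."""
    ready, start = {}, []
    for qubits, dur in gates:
        t = max((ready.get(q, 0) for q in qubits), default=0)
        start.append(t)
        for q in qubits:
            ready[q] = t + dur
    return start, max(ready.values(), default=0)


def alap(gates):
    """Latest start times that keep the ASAP makespan and all dependencies."""
    _, makespan = asap(gates)
    deadline, start = {}, [0] * len(gates)
    for i in range(len(gates) - 1, -1, -1):  # walk the circuit backwards
        qubits, dur = gates[i]
        end = min((deadline.get(q, makespan) for q in qubits), default=makespan)
        start[i] = end - dur
        for q in qubits:
            deadline[q] = start[i]
    return start


# Qubit 0 runs three 100 ns gates; qubit 1 runs one, then both share a 200 ns gate.
gates = [((0,), 100), ((0,), 100), ((0,), 100), ((1,), 100), ((0, 1), 200)]
print(asap(gates)[0], alap(gates))
```

Only qubit 1's lone gate moves, from t = 0 to t = 200 ns. Qubit 1 therefore stays in its ground state instead of idling in superposition while qubit 0 finishes its gates.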

MERA combines ALAP scheduling with cost-aware dynamic circuit allocation. The allocation component assigns qubits to operations according to their individual error characteristics and recomputes this assignment dynamically throughout scheduling. By favoring lower-error qubits and keeping idle time before measurement short, the combined strategy aims to support deeper circuits with lower error rates.
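The paper's summary ties MERA's scheduling to dynamic decoupling (DD). DD fills the idle windows of waiting qubits with pulse sequences that refocus slowly varying dephasing noise. For example, neighbors may sit idle while a long mid-circuit measurement completes. The sketch below is a generic placement helper, not MERA's code. The helper name and the window and pulse durations are hypothetical. It spreads `n_pulses` X pulses over a window with the balanced spacing used by CPMG-style sequences: half-gaps at both ends and full gaps between pulses. It returns `None` when the window is too short, which is one simple way to make DD insertion cost-aware.

```python
def dd_delays(window, pulse, n_pulses=2):
    """Free-evolution delays around n_pulses X pulses in an idle window.
    Returns [d0, d1, ..., dn] with balanced spacing [s/2, s, ..., s, s/2],
    or None if the pulses do not fit (skip DD for that window)."""
    if n_pulses < 1:
        raise ValueError("need at least one pulse")
    free = window - n_pulses * pulse
    if free < 0:
        return None
    s = free / n_pulses
    return [s / 2] + [s] * (n_pulses - 1) + [s / 2]


# Hypothetical: a neighbor idles for 4000 ns during a mid-circuit readout; X pulses take 50 ns.
print(dd_delays(4000, 50))
print(dd_delays(60, 50))  # too short for two pulses
```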

Existing qubit-reuse compilers, such as CaQR and QR-Map, typically assume uniform error rates across all qubits during circuit mapping and scheduling. This simplification ignores the variation in manufacturing and operational characteristics among individual qubits on a chip, in particular their differing MCM error rates. As a result, these compilers may assign logical qubits to physical qubits with comparatively high error rates, which increases the overall circuit error probability and limits achievable circuit depth. MERA, by contrast, explicitly models per-qubit MCM error rates during compilation, enabling better-informed qubit allocation and scheduling decisions.
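The difference between a uniform-error assumption and per-qubit MCM error awareness can be shown with a toy reuse decision. This is a hypothetical illustration, not the logic of CaQR, QR-Map, or MERA.

* The error-blind policy takes the first available qubit.
* The error-aware policy takes the qubit with the lowest MCM error.

Success over repeated measure-and-reset cycles is estimated under the same independence assumption used earlier, and the error rates are invented.

```python
def pick_reuse_target(candidates, mcm_err, error_aware=True):
    """Choose a physical qubit to measure, reset, and reuse.
    error_aware=False mimics a compiler that treats all qubits as equal."""
    if not error_aware:
        return candidates[0]
    return min(candidates, key=lambda q: mcm_err[q])


def chain_success(qubit, n_reuses, mcm_err):
    """Probability that n_reuses mid-circuit measurements on one qubit all
    succeed, assuming independent errors (a simplification)."""
    return (1.0 - mcm_err[qubit]) ** n_reuses


mcm_err = {4: 0.060, 7: 0.015, 9: 0.030}  # hypothetical per-qubit MCM error rates
blind = pick_reuse_target([4, 7, 9], mcm_err, error_aware=False)
aware = pick_reuse_target([4, 7, 9], mcm_err)
print(blind, round(chain_success(blind, 5, mcm_err), 3))
print(aware, round(chain_success(aware, 5, mcm_err), 3))
```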

Performance evaluations show that MERA's integrated strategy substantially improves quantum circuit fidelity. That strategy combines error modeling with physical layout, routing optimization, and scheduling. MERA achieves average fidelity gains ranging from 24.94% to 52.00% over the Qiskit compiler, and an average gain of 29.26% over the QR-Map compiler. These results suggest that considering layout, routing, and scheduling together, coupled with error modeling, significantly reduces the impact of hardware imperfections on circuit performance.
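The reported gains are relative fidelity improvements. The small helper below shows the arithmetic and what a figure such as 122.58% implies. The fidelities plugged in are hypothetical, not measurements from the paper.

```python
def relative_gain(f_new, f_base):
    """Relative fidelity improvement in percent: 100 * (f_new - f_base) / f_base."""
    if f_base <= 0:
        raise ValueError("baseline fidelity must be positive")
    return 100.0 * (f_new - f_base) / f_base


def improved_fidelity(f_base, gain_percent):
    """Fidelity implied by a baseline and a relative gain."""
    return f_base * (1.0 + gain_percent / 100.0)


# Hypothetical baseline of 0.50 improved to 0.62:
print(f"{relative_gain(0.62, 0.50):.2f}%")
# A 122.58% gain means fidelity is multiplied by 2.2258, possible only from a low baseline:
print(f"{improved_fidelity(0.30, 122.58):.4f}")
```

Relative gains above 100% therefore indicate that the baseline fidelity was low, not that fidelity exceeds 1.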

Towards Robust and Scalable Quantum Computation

In the Noisy Intermediate-Scale Quantum (NISQ) era, more complex quantum computations are fundamentally limited by measurement and control errors, including the errors introduced by mid-circuit measurements (MCM). MERA addresses this challenge directly at compile time and reduces the disruptive influence of these errors. This should let researchers run circuits of greater depth, that is, circuits with more sequential operations, more reliably. It could open up algorithms previously considered impractical because of error accumulation. By keeping fidelity higher as circuits grow deeper, MERA-style compilation can help researchers test and refine quantum algorithms on real hardware and so accelerate progress toward practical quantum computing.

Achieving higher fidelity is paramount for dynamic circuits, a cornerstone of advanced quantum processing. Unlike static circuits, dynamic circuits use mid-circuit measurements and classical feedback to adapt during execution, enabling complex algorithms and error correction protocols. This adaptability underpins fault-tolerant quantum computation, in which errors are actively detected and corrected to ensure reliable results. It also underpins distributed quantum computing, which links multiple quantum processors to increase computational power. Without better control over the errors that dynamic circuits introduce, such as MCM errors, their added complexity brings unacceptable levels of noise and renders these ambitious architectures impractical. Advances in fidelity therefore translate directly into the feasibility of building larger, more powerful, and more useful quantum computers capable of tackling previously intractable problems.

MERA is not limited to a single quantum computing platform. It is compatible with a range of superconducting qubit architectures, including IBM's Eagle and Heron processors. This matters because leading processors use varied designs. MERA works from per-qubit MCM error profiles and the device's connectivity, so its approach is not tied to a particular qubit type or connectivity scheme. This broad applicability simplifies deploying MCM-aware compilation across different processors and supports a more unified approach to improving fidelity and scalability. Its potential to integrate with existing and future superconducting platforms makes MERA a useful tool for progress toward robust and reliable quantum computation.

Evaluations on repeat-until-success (RUS) benchmarks show a significant performance advantage for MERA over the Qiskit compiler. MERA consistently achieved higher success rates and therefore required fewer attempts to obtain a successful outcome, which shows its greater robustness. In addition, the hardware's per-qubit MCM error distribution remained stable for over 24 hours. This suggests that an error profile collected once can guide compilation over an extended period rather than requiring constant re-profiling. Such stability is valuable for complex computations, because it keeps compilation decisions consistent as algorithms and circuit depths grow.
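The link between per-round success rate and the number of attempts follows from a standard model. Assume each RUS round succeeds independently with probability `p`. The number of rounds is then geometrically distributed with mean 1/p, and the chance of success within k rounds is 1 - (1 - p)^k. The helpers and the example rates below are illustrative assumptions, not the paper's benchmark data.

```python
def expected_attempts(p):
    """Mean number of RUS rounds until success (geometric distribution)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("success probability must be in (0, 1]")
    return 1.0 / p


def success_within(p, k):
    """Probability that at least one of k independent rounds succeeds."""
    return 1.0 - (1.0 - p) ** k


# Hypothetical per-round success rates for two compilations of the same RUS circuit:
for p in (0.40, 0.50):
    print(p, expected_attempts(p), round(success_within(p, 3), 3))
```

Because MCM errors lower `p` directly, even a modest per-round improvement reduces the expected number of repetitions.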

Mitigating mid-circuit measurement (MCM) errors is a step toward applying quantum computation to complex, real-world problems. More reliable quantum operations could benefit fields currently limited by classical computational power. Materials science could gain more accurate simulations of molecular interactions, accelerating the discovery of materials with tailored properties. In drug discovery, better quantum algorithms could refine the modeling of drug candidates and their interactions with biological targets, potentially shortening development timelines. Financial modeling could likewise benefit through portfolio optimization and more precise risk assessment, provided quantum algorithms reach the necessary fidelity and scale.

MERA aims at efficient quantum circuit compilation by addressing the challenges posed by mid-circuit measurement (MCM) errors. It avoids unnecessary complexity in the layout, routing, and scheduling phases. This aligns with a sentiment attributed to Paul Dirac: “I have not the slightest idea what I am doing.” Paradoxical as it seems, the quote speaks to a relentless pursuit of fundamental truths amid uncertainty. MERA likewise confronts the inherent uncertainties of noisy intermediate-scale quantum (NISQ) devices through error awareness. It improves fidelity through targeted optimization rather than exhaustive, potentially wasteful computation. Its emphasis on qubit reuse reflects the same economy: extracting maximum utility from limited resources.

What Lies Ahead?

MERA addresses a critical failing in current quantum compilation, the disregard for mid-circuit measurement (MCM) errors. It does not, of course, resolve the underlying problem of imperfect hardware; it merely shifts the focus. Future work should not concentrate solely on error mitigation within compilation. It should also rigorously quantify the cost of error mitigation itself. Every added gate and every qubit reuse strategy introduces a new potential source of decoherence. The field needs a calculus of trade-offs: a demonstrable understanding of when simplification is genuinely intelligent and when it is merely obfuscation.

A natural next step is to develop error profiles that extend beyond single-qubit and two-qubit gates. MCM errors are not isolated events; they are entangled with the operations that precede and follow them. A holistic error model that accounts for the temporal correlation of errors is therefore essential. Further, today's heuristic scheduling algorithms should give way to methods grounded in formal verification. Such methods could guarantee, where possible, a provable improvement in circuit fidelity rather than a merely statistical one.

Ultimately, the pursuit of increasingly complex error mitigation schemes risks becoming a self-defeating exercise. The true challenge is not to bandage the flaws of current hardware, but to transcend them. Simplicity, in both algorithm design and hardware implementation, remains the most elegant, and likely, the most effective path forward.

Original article: https://arxiv.org/pdf/2511.10921.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2025-11-17 16:03