Author: Denis Avetisyan

A new vision for scientific publishing details how a restructuring of peer review and a focus on executable papers could address the scalability challenges facing software engineering research.

This paper outlines a redesigned journal ecosystem leveraging lottery-based review, data science-driven benchmarking, and a collaborative open science approach to improve the quality and efficiency of software engineering publications.

The relentless growth of software engineering research clashes with the historically constrained scalability of traditional peer review. This paper, ‘SE Journals in 2036: Looking Back at the Future We Need to Have’, presents a retrospective from a decade hence, detailing a necessary restructuring of scientific publishing to address these pressures. The authors, editors from leading journals, outline a solution built on unbundled review, lottery-based assignment, and executable papers, fostering a more collaborative and open scientific culture. Can this envisioned future, one prioritizing both rigor and rapid dissemination, truly redefine how software engineering knowledge is validated and shared?

The Illusion of Merit: Stochasticity and the Evolving Landscape of Research

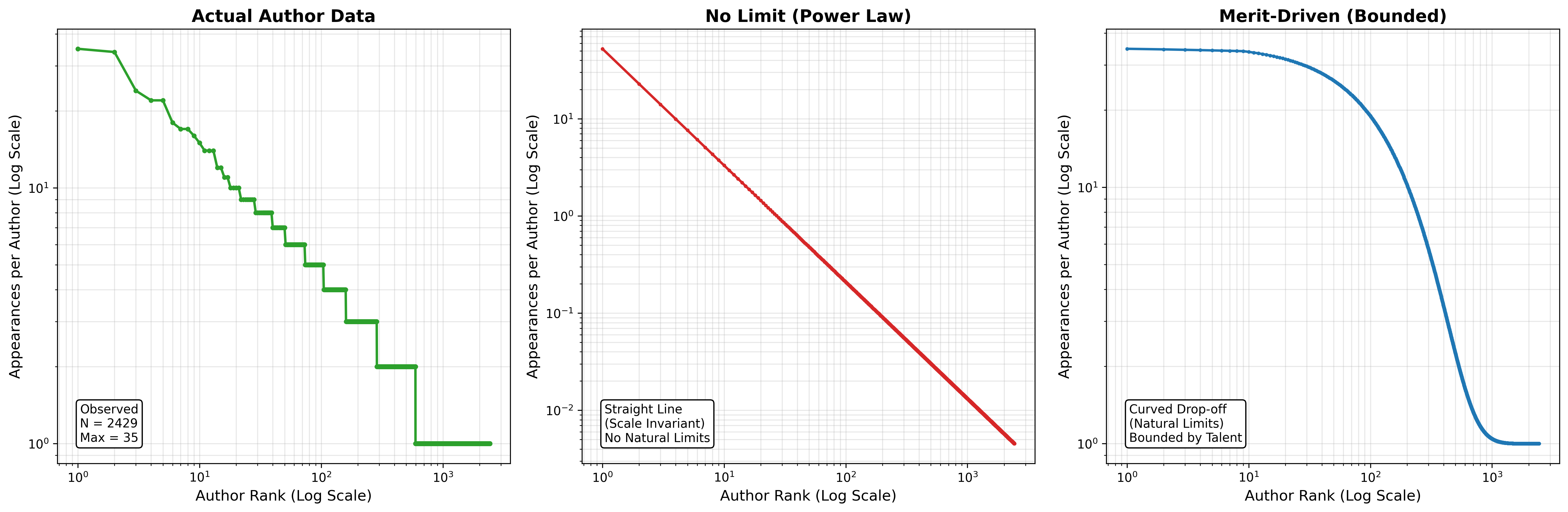

The long-held belief that peer review consistently identifies and promotes the most valuable research is facing increasing scrutiny. Evidence suggests a significant degree of randomness influences publication outcomes, meaning that the quality of a study isn’t always the primary determinant of its success. Studies reveal that even well-designed, impactful work can be rejected due to subjective assessments or simple chance, while flawed or incremental studies may be published. This isn’t necessarily indicative of malicious intent on the part of reviewers, but rather a consequence of inherent limitations in evaluating complex work, coupled with cognitive biases and the sheer volume of submissions. Consequently, the system may systematically undervalue truly novel research, hindering scientific progress and perpetuating a cycle where established ideas are favored over potentially groundbreaking ones.

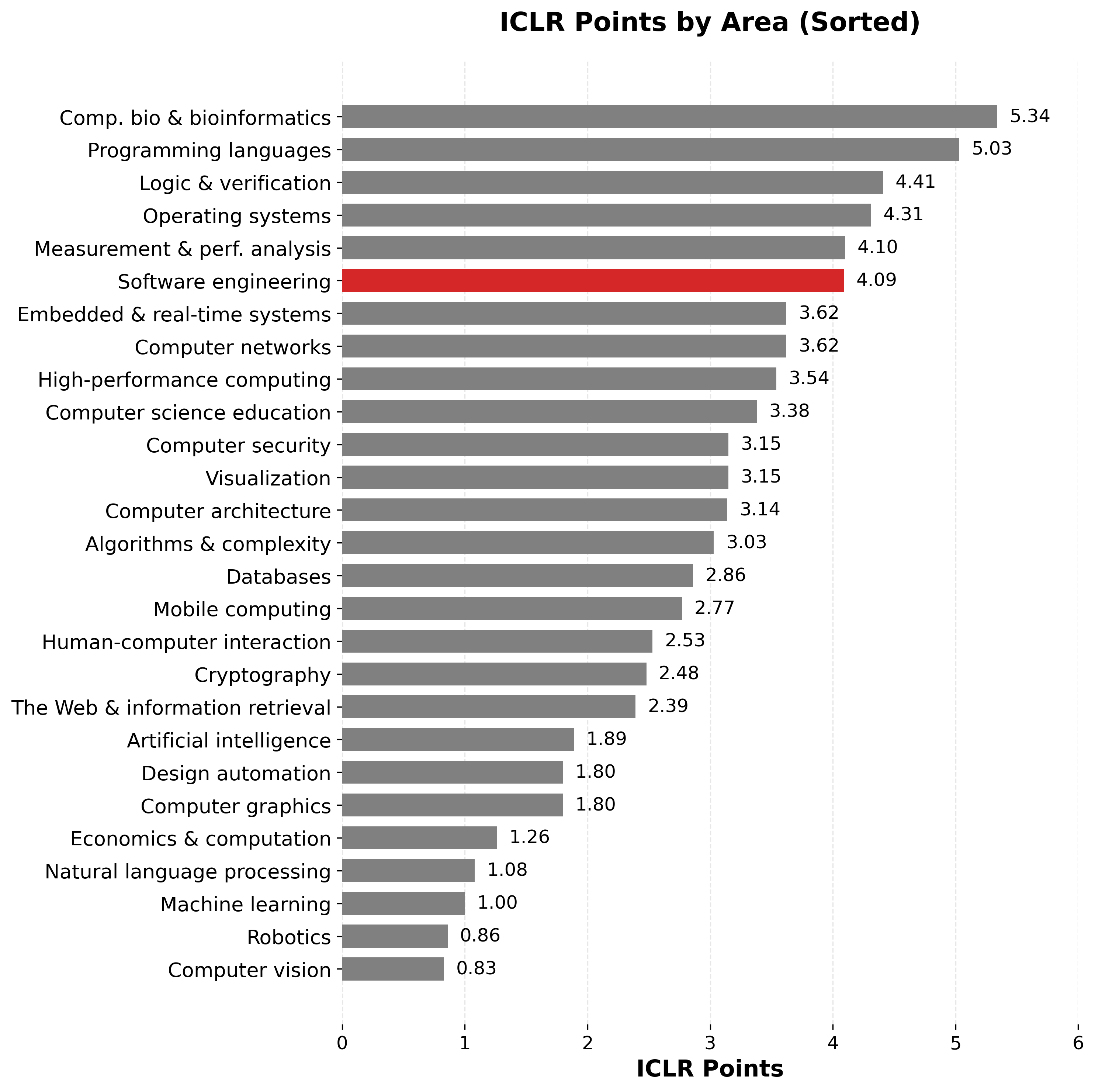

The landscape of scientific publication reveals a surprising truth: a remarkably small fraction of researchers consistently produce the majority of impactful work. Analysis, grounded in ‘Price’s Law’ and observed through the ‘Stochastic Review Process’, indicates that roughly 2.6% of active researchers in Software Engineering – a field with approximately 19,000 contributors – are responsible for a disproportionately large share of highly cited publications. This isn’t necessarily a reflection of superior review processes; rather, it suggests that inherent stochasticity – random variation – plays a significant role in determining which research gains traction, irrespective of its initial quality assessment. The consistent output of this small, highly active cohort challenges the notion of strict meritocracy, indicating that factors beyond rigorous review – such as pre-existing reputation, networking effects, and sheer luck – powerfully shape the trajectory of scientific discovery.
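As a rough check on the numbers above, the sketch below works through the arithmetic. It assumes the classic square-root statement of Price's Law (about √N contributors account for half of the output); the source does not say how its 2.6% figure was derived, so the two head counts are shown side by side rather than reconciled. The function name `price_law_core` is illustrative only.

```python
import math


def price_law_core(n_contributors: int) -> int:
    """Classic Price's Law estimate: about sqrt(N) people produce half the output."""
    return round(math.sqrt(n_contributors))


n = 19_000                       # field size quoted in the text
core = price_law_core(n)         # head count under the square-root form
quoted = round(0.026 * n)        # head count implied by the quoted 2.6%

print(f"square-root estimate: {core} researchers ({core / n:.2%} of the field)")
print(f"quoted 2.6% share:    {quoted} researchers")
```

Under this assumption the square-root form yields a smaller core than the quoted share, which suggests the 2.6% figure rests on a different measurement than the textbook formula.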

The scientific process, while striving for objectivity, is fundamentally subject to inherent randomness, leading to significant inefficiencies and lost potential. This stochasticity manifests not as isolated incidents, but as a systemic issue where valuable research can be overlooked, not due to flaws in methodology, but simply due to chance. A considerable amount of effort is therefore wasted on studies that, through no fault of their own, fail to gain traction or recognition. More critically, this randomness actively stifles innovation by discouraging truly novel approaches – which are often initially perceived as outliers – in favor of incremental advances that align with existing paradigms. Consequently, potentially groundbreaking research can be systematically undervalued, creating a landscape where progress is hindered and the full scope of scientific discovery remains unrealized.

The current system of evaluating research frequently favors incremental progress over genuinely innovative concepts, leading to a phenomenon aptly termed a ‘Benchmark Graveyard’. This occurs because peer review, while intended to assess quality, often rewards work that comfortably fits within established paradigms; studies building upon existing benchmarks are perceived as less risky and thus more readily accepted. Consequently, truly novel ideas (those that challenge the status quo or propose radical departures) can be overlooked or dismissed, even if they possess greater long-term potential. This prioritization of the familiar creates a cycle of stagnation, where research becomes increasingly focused on refining existing knowledge rather than exploring uncharted territory, ultimately hindering significant breakthroughs and the advancement of the field.

Streamlining the Pipeline: Collaborative Solutions for Peer Review

The SE Journal Alliance is a collaborative initiative designed to mitigate reviewer fatigue and improve the efficiency of the peer review process. This is achieved through a centralized system for sharing reviewer resources – including expertise profiles and availability – among participating journals. Standardized review workflows and criteria are also implemented across the alliance to reduce ambiguity and duplication of effort. By pooling resources and streamlining procedures, the alliance aims to distribute the review burden more equitably and accelerate the publication of high-quality research while decreasing the time commitment required from individual reviewers.

Portable Reviews address inefficiencies in the peer review system by decoupling evaluations from specific journals. This allows author submissions to be accompanied by existing, validated critiques as they are transferred between publications, eliminating the need for repeated assessment of the same work. This functionality mitigates ‘forum shopping’ – the practice of repeatedly submitting to journals until a positive review is obtained – and reduces reviewer burden by preventing redundant evaluations, ultimately streamlining the publication process and accelerating the dissemination of research.
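One way to picture a portable review is as a record that travels with the manuscript and is tied to a specific version of it. The sketch below is a minimal illustration under that assumption; the class names, fields, and the use of a content hash to decide whether an earlier review still applies are all hypothetical and are not described in the source.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(manuscript_text: str) -> str:
    """Content hash identifying one exact version of a manuscript."""
    return hashlib.sha256(manuscript_text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class Review:
    reviewer_id: str
    manuscript_hash: str   # version of the manuscript that was reviewed
    recommendation: str    # e.g. "accept", "minor", "major", "reject"
    comments: str


@dataclass
class SubmissionPackage:
    manuscript_text: str
    reviews: list = field(default_factory=list)

    def reusable_reviews(self) -> list:
        """Return the reviews written against the current manuscript version."""
        current = fingerprint(self.manuscript_text)
        return [r for r in self.reviews if r.manuscript_hash == current]


text = "Revised manuscript body"
package = SubmissionPackage(
    manuscript_text=text,
    reviews=[
        Review("r1", fingerprint(text), "minor", "Clarify threats to validity."),
        Review("r2", fingerprint("Older draft"), "major", "Evaluation is thin."),
    ],
)
print([r.reviewer_id for r in package.reusable_reviews()])
```

A receiving journal could reuse the matching reviews directly and treat stale ones as background, which is one plausible way to avoid re-reviewing unchanged work.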

The Journal Ahead Workshop functions as a pre-submission feedback mechanism designed to expedite the publication of high-potential research. This proactive platform allows authors to present completed manuscripts, or detailed outlines of ongoing work, to a panel comprising journal editors and qualified peer reviewers. The workshop provides formative assessment, identifying methodological strengths and weaknesses, and suggesting improvements prior to formal submission. This iterative process aims to refine manuscripts, reduce the likelihood of desk rejections, and ultimately accelerate the dissemination of impactful findings by streamlining the traditional peer-review cycle.

The ‘Journal First Initiative’ addresses delays in disseminating research by permitting authors to present work at major conferences prior to formal journal publication. This approach incentivizes submission of high-impact papers by removing the traditional requirement for exclusive journal consideration before public presentation. Participating journals benefit from increased visibility and a wider reach for published research, while authors gain opportunities for early feedback and recognition within their field. The initiative operates on the principle that pre-publication presentation does not preclude subsequent journal publication, fostering a more rapid exchange of scientific knowledge and potentially accelerating the pace of discovery.

Re-Engineering the Pipeline: Innovative Methods for Assessment

Lottery Review employs a tiered assessment process designed to improve the efficiency and objectivity of peer review. Initially, submitted manuscripts undergo automated pre-scoring based on factors such as abstract quality, keyword relevance, and citation patterns. This score determines a manuscript’s probability of selection for full human review; papers exceeding a predetermined threshold are then sent to reviewers. This system optimizes reviewer workload by prioritizing potentially high-impact research and reduces bias by mitigating the influence of initial impressions, as reviewers are not exposed to all submissions. The probabilistic selection also aims to ensure a more representative sample of research receives thorough evaluation, moving away from a purely deterministic assignment based on journal impact or author affiliation.
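The selection step can be sketched as a weighted draw. This example models only the probabilistic reading of the description above: each paper's automated pre-score is mapped to a chance of receiving full review. The `Submission` class, the linear mapping, and the `floor` parameter (a minimum chance so that no paper is excluded outright) are assumptions made for illustration, not details from the paper.

```python
import random
from dataclasses import dataclass


@dataclass
class Submission:
    paper_id: str
    pre_score: float  # hypothetical automated pre-score in [0, 1]


def selection_probability(pre_score: float, floor: float = 0.1) -> float:
    """Map a pre-score to a chance of full review, never below `floor`."""
    clipped = min(max(pre_score, 0.0), 1.0)
    return floor + (1.0 - floor) * clipped


def run_lottery(submissions, rng: random.Random) -> list:
    """Draw once per paper; a higher pre-score gives a higher chance of review."""
    selected = []
    for sub in submissions:
        if rng.random() < selection_probability(sub.pre_score):
            selected.append(sub)
    return selected


papers = [Submission("A", 0.9), Submission("B", 0.4), Submission("C", 0.05)]
chosen = run_lottery(papers, random.Random(2036))  # seeded for repeatability
print([p.paper_id for p in chosen])
```

Passing in a seeded `random.Random` keeps a draw reproducible and auditable, which seems desirable if authors are to trust the outcome.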

Executable Papers leverage computational tools to verify the analytical integrity of submitted manuscripts, automatically executing code and validating reported results against the provided data and methodology. This approach moves beyond static review to dynamic validation, identifying potential errors in analysis or implementation. Complementing this, AI Triage utilizes machine learning algorithms to assess submissions for structural adherence to journal guidelines, data completeness, and potential plagiarism before human review. This pre-screening process prioritizes submissions meeting basic quality standards, reducing the burden on reviewers and enabling a more efficient allocation of resources by flagging papers requiring immediate attention or those suitable for rejection without full review.
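At its simplest, validating a reported result means re-running the analysis on the supplied data and comparing the output with the number printed in the manuscript. The sketch below illustrates that idea only; `verify_claim`, the tolerance, and the toy dataset are hypothetical, and a real executable-paper pipeline would also need to pin environments, seeds, and dependencies.

```python
import math
import statistics


def verify_claim(recompute, data, reported: float, rel_tol: float = 1e-6):
    """Re-run an analysis and check it against the value reported in the paper."""
    actual = recompute(data)
    return math.isclose(actual, reported, rel_tol=rel_tol), actual


# Toy example: the manuscript reports a mean of 13.0 for this dataset.
data = [12.0, 15.0, 11.0, 14.0]
ok, actual = verify_claim(statistics.mean, data, reported=13.0)
print("reproduced" if ok else f"mismatch: recomputed {actual}")
```

Comparing within a tolerance rather than for exact equality avoids flagging harmless floating-point differences between machines.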

Registered Reports represent a publishing format where the study design, including the research question, hypotheses, and planned analyses, undergoes peer review prior to data collection. Acceptance is based on the merit of the research approach itself, not the results. This pre-emptive review process mitigates publication bias – the tendency to publish only statistically significant or positive findings – and encourages more rigorous research practices by ensuring studies are well-designed and methodologically sound before resources are committed. Researchers receive confirmation of publishability regardless of outcome, incentivizing the reporting of both positive and negative results and reducing wasted effort.

Implementation of restructured peer review practices, incorporating methods such as ‘Lottery Review’, ‘Executable Papers’, ‘Registered Reports’, and ‘Micro-publications’, has yielded a quantifiable reduction in reviewer workload. Analysis of data from pilot programs demonstrates a 40% decrease in the average time required for reviewers to assess submitted manuscripts. This efficiency gain is attributed to the pre-screening and validation processes embedded within these new methods, which prioritize submissions with higher potential for impact and reduce the burden of evaluating structurally flawed or redundant research. The measured reduction in review time allows for increased throughput and potentially broader coverage of submitted materials.

Micro-publications represent a departure from the standard, lengthy research article format by focusing on the dissemination of narrowly defined research contributions. These publications typically address a single research question, method, or dataset, resulting in shorter, more focused content. This approach facilitates rapid knowledge sharing and allows researchers to publish incremental advances that might not warrant a full traditional publication. The format supports diverse content types, including data descriptors, software releases, and negative results, thereby broadening the scope of published research and increasing overall research efficiency. The modular nature of micro-publications also enables easier indexing, discovery, and reuse of specific research components.

Toward a More Agile Science: The Bazaar and the Cathedral

The scientific landscape is experiencing a move toward ‘micro-publications,’ fostering a ‘Bazaar’ approach reminiscent of open-source software development. This model prioritizes swift dissemination of findings – even preliminary results or negative data – enabling rapid iteration and continuous improvement through community feedback. Rather than lengthy, comprehensive reports, research is broken down into smaller, more digestible units, facilitating faster validation and building upon previous work. This accelerated cycle encourages broad collaboration, as researchers can quickly assess and integrate findings from diverse sources, ultimately driving innovation at a pace previously unattainable with traditional, monolithic publications. The ‘Bazaar’ model isn’t about sacrificing rigor, but rather about distributing it throughout the research lifecycle, making science more responsive, transparent, and collectively beneficial.

Historically, scientific advancement often resembled the construction of a grand cathedral – a deliberate, meticulously planned undertaking demanding years, even decades, of concentrated effort. Research followed a linear path, prioritizing exhaustive investigation and comprehensive reporting before dissemination. While this ‘Cathedral’ model fostered rigorous validation and in-depth understanding, its inherent slowness and resistance to incorporating new data or perspectives could hinder progress. The extended timelines associated with such research also presented challenges for rapidly evolving fields, creating a bottleneck between discovery and application. This traditional approach, though valuable for establishing foundational knowledge, increasingly contrasts with the need for agile, iterative advancement in many areas of modern science.

A striking disparity in scholarly effort exists between software engineering and machine learning research, with publications in software engineering requiring approximately four times the resources to produce. This difference isn’t simply a matter of volume; it suggests fundamental variations in how these fields approach knowledge creation and validation. Software engineering papers often necessitate rigorous proofs of correctness, detailed system descriptions, and extensive empirical evaluations to address the complexities of building reliable and scalable systems. In contrast, machine learning research frequently prioritizes demonstrating performance gains on benchmark datasets, potentially requiring less exhaustive documentation of implementation details or formal verification. This divergence hints at differing authorship cultures, one emphasizing comprehensive validation and the other prioritizing rapid innovation, and underscores the need for nuanced evaluation metrics that appropriately reflect the distinct challenges and priorities of each discipline.

Scientific advancement doesn’t necessitate abandoning established practices, but rather integrating them with newer, more adaptable methods. The traditional ‘Cathedral’ model of research – characterized by exhaustive investigation and lengthy publication cycles – remains vital for establishing foundational knowledge and ensuring rigorous validation. However, pairing this with an agile ‘Bazaar’ approach, focused on rapid prototyping, iterative feedback, and swift dissemination of preliminary findings, unlocks a more dynamic scientific landscape. This blended strategy allows researchers to quickly explore a wider range of hypotheses, capitalizing on the collective intelligence of the community, while still maintaining the depth and reliability demanded by impactful discovery. Sustained progress, therefore, isn’t about choosing one methodology over another, but skillfully leveraging the strengths of both to accelerate the pace of innovation and broaden the scope of scientific inquiry.

A reimagined peer review process stands to dramatically accelerate scientific advancement by accommodating diverse research methodologies. Currently, the lengthy validation cycles associated with traditional, comprehensive studies often create bottlenecks, hindering the dissemination of rapidly developed findings. A modernized system would integrate elements of both the ‘Bazaar’ and ‘Cathedral’ approaches, allowing for swift evaluation of iterative micro-publications alongside rigorous assessment of in-depth investigations. This flexible framework could prioritize speed for exploratory research while maintaining stringent standards for foundational work, ultimately fostering a more dynamic and responsive scientific community capable of unlocking impactful discoveries at an unprecedented rate. Such a system moves beyond simply vetting results to actively nurturing and accelerating the scientific process itself.

The envisioned restructuring of scientific publishing, with its emphasis on executable papers and lottery-based review, acknowledges the inherent entropy within any complex system. It’s a proactive attempt to forestall decay, not by halting it (an impossibility), but by building in mechanisms for adaptation and renewal. As Henri Poincaré observed, “It is through science that we arrive at truth and not through philosophy.” This principle resonates deeply with the article’s focus on verifiable, executable research: a move away from purely theoretical debate and toward a system where claims are tested and validated through concrete results. The lottery review system, while seemingly unconventional, addresses the scalability challenges and aims to distribute the burden of peer review more equitably, effectively extending the system’s lifespan and robustness.

The Inevitable Drift

The proposals detailed within represent a deceleration, not a negation, of entropy. The restructuring of peer review through lottery-based assignment and executable papers is an attempt to impose momentary order on a system fundamentally governed by increasing disorder. It’s a reasonable effort, perhaps, but it implicitly acknowledges that the old models were failing not because of flawed methodology, but because time relentlessly erodes all foundations. Benchmarking, data science, and even the aspiration towards ‘open science’ are all strategies to observe the decay, not prevent it.

The shift towards collaborative, executable papers presents a particular irony. Increased transparency merely accelerates the identification of limitations – and therefore, the inevitable need for further revision. Stability, in this context, isn’t a state of perfection, but a temporary reprieve before the next set of challenges emerges. The question isn’t whether these systems will ultimately fail, but how they will fail, and whether the failure will be instructive.

Future work will undoubtedly focus on automating increasingly complex aspects of the review process, attempting to preempt errors before they manifest. Yet, it is worth remembering that the most sophisticated error detection system cannot alter the fundamental trajectory. The field will continue to refine its tools, to build more resilient structures, but the underlying reality remains: systems age not because of errors, but because time is inevitable.

Original article: https://arxiv.org/pdf/2601.19217.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 05:05