Author: Denis Avetisyan

Researchers have developed a new AI-powered technique that uses natural language documentation to automatically verify the correctness of software patches and identify potential vulnerabilities.

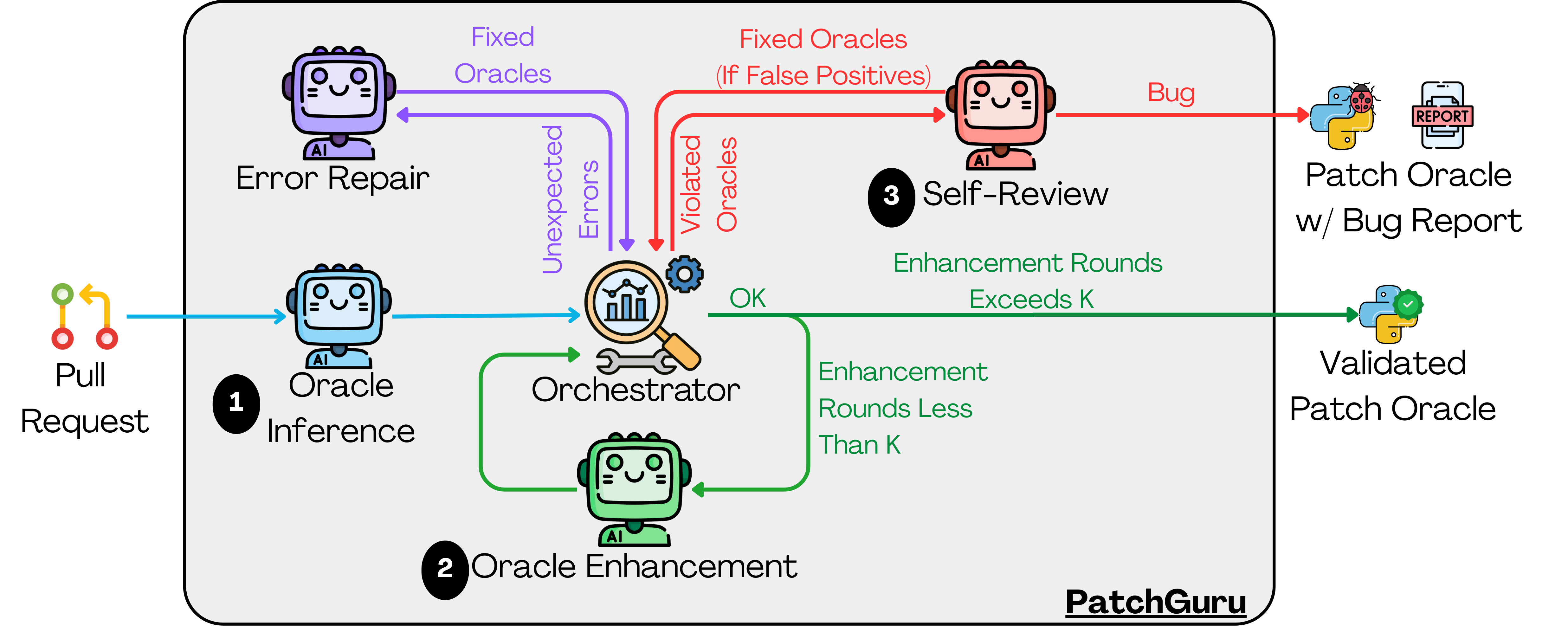

PatchGuru leverages large language models to infer executable patch oracles, enhancing dynamic analysis and mutation testing for improved software validation.

Despite increasing reliance on software patches, validating their correctness remains challenging due to incomplete testing and ambiguous natural language descriptions of intended changes. To address this, the paper ‘PatchGuru: Patch Oracle Inference from Natural Language Artifacts with Large Language Models’ presents an automated technique that leverages large language models to synthesize executable patch specifications, or oracles, from developer documentation. PatchGuru identifies patch-relevant behaviors, revealing inconsistencies and previously unknown bugs with a precision of 0.62 and detecting 17 more bugs than state-of-the-art methods. Could this approach fundamentally shift the balance between proactive bug detection and reactive patching in modern software development?

The Patch Validation Paradox: Why Testing Often Misses the Point

Validating software patches presents a significant challenge due to the inherent difficulty in establishing a reliable “test oracle” – a mechanism for definitively determining the expected output for a given input after the patch is applied. Traditional testing often falters not because it cannot find bugs, but because it is exceptionally difficult to verify that a patch has neither introduced new issues nor subtly altered intended behavior. Doing so means accurately predicting how the software should function after modification, which requires a comprehensive understanding of both the original code and the patch’s intended effects. Without a robust test oracle, developers risk accepting patches that appear to pass tests but still contain latent errors, potentially leading to unpredictable software behavior and diminished system reliability. The problem is compounded by the sheer scale of modern software, where exhaustively verifying every possible outcome after a patch is simply impractical.

The introduction of new code defects through patches is frequently amplified not by technical errors alone, but by a disconnect between implemented code and its original purpose. Often, documentation lags behind development, failing to accurately reflect the nuanced logic or intended behavior of a code segment, or is simply absent altogether. This lack of clarity creates space for misinterpretation during patch creation; developers may correctly address a reported issue but inadvertently introduce regressions or unintended side effects because the broader context of the code – the ‘Developer Intent’ – remains poorly defined. Consequently, even seemingly minor patches can become sources of instability, underscoring the critical need for robust mechanisms to capture and verify not just what code does, but why it exists in the first place.

The growing intricacy of contemporary software systems amplifies the challenges of patch validation. Modern applications are rarely monolithic; instead, they consist of interwoven components and dependencies, creating a ripple effect where a seemingly isolated fix can inadvertently disrupt unrelated functionality. This complexity is further compounded by the increasing trend towards ‘Multi-Function Patches’ – updates designed to address multiple issues simultaneously. While efficient for developers, these patches obscure the precise impact of any given change, making it considerably more difficult to isolate the root cause of potential regressions. Consequently, thorough testing becomes far more demanding: it requires extensive coverage to account for the many interactions and side effects these multifaceted updates introduce, and the likelihood of overlooking critical errors rises.

PatchGuru: Automating Intent with LLMs and Dynamic Analysis

PatchGuru automates the creation of ‘Patch Oracle’ specifications by processing ‘Natural Language Artifacts’ – typically commit messages, bug reports, and documentation – directly associated with a software patch. This approach bypasses the need for manually crafted oracles, which are traditionally used to verify the correctness of a patch. The system analyzes these textual descriptions to identify the intended behavior changes introduced by the patch, then translates that understanding into a formal specification suitable for automated testing. By extracting intent from natural language, PatchGuru aims to reduce the effort and potential for error inherent in manual oracle creation, enabling more efficient and reliable patch verification.

PatchGuru utilizes Large Language Models (LLMs) to translate natural language descriptions of patch intent – such as commit messages or bug reports – into executable code representations. This process involves prompting the LLM to generate test cases or code snippets that reflect the described functionality. The LLM’s ability to understand semantic meaning allows it to infer the intended behavior of the patch, effectively bridging the gap between human-readable descriptions and the concrete actions a program performs. This synthesized code serves as a ‘Patch Oracle’, a programmatic specification of the expected behavior, and enables automated validation of the patch’s correctness without relying solely on pre-existing test suites.
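To make the idea of an executable patch oracle concrete, here is a minimal sketch in Python. Everything in it is hypothetical and chosen for illustration: the functions `normalize_old`, `normalize_new`, and `normalize_buggy`, and the stated intent ("strip surrounding whitespace"), are not taken from the paper. It shows only the general shape such an oracle could take: a check that pins down the intended change on patch-relevant inputs and requires unchanged behavior everywhere else.

```python
from typing import Callable, Iterable, List

StrFn = Callable[[str], str]

def normalize_old(s: str) -> str:
    """Hypothetical pre-patch behavior: lowercase only."""
    return s.lower()

def normalize_new(s: str) -> str:
    """Hypothetical post-patch behavior: also strip surrounding whitespace."""
    return s.strip().lower()

def normalize_buggy(s: str) -> str:
    """Hypothetical faulty patch: strips leading whitespace only."""
    return s.lstrip().lower()

def oracle_holds(old: StrFn, new: StrFn, s: str) -> bool:
    """Executable form of the assumed intent 'strip surrounding whitespace'."""
    if s != s.strip():
        # Patch-relevant input: the new result is the old result, stripped.
        return new(s) == old(s).strip()
    # Any other input: behavior must be unchanged.
    return new(s) == old(s)

def violations(old: StrFn, new: StrFn, inputs: Iterable[str]) -> List[str]:
    """Return the inputs on which the patched function violates the oracle."""
    return [s for s in inputs if not oracle_holds(old, new, s)]

INPUTS = ["Foo", "  Foo", "Foo  ", " a b "]
print(violations(normalize_old, normalize_new, INPUTS))    # []
print(violations(normalize_old, normalize_buggy, INPUTS))  # ['Foo  ', ' a b ']
```

The oracle relates pre-patch and post-patch behavior instead of hard-coding expected outputs, so the same check can be applied to any generated input.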

Dynamic analysis within PatchGuru operates by executing the patched code with a suite of automatically generated test inputs. These inputs are designed to exercise the code regions affected by the patch, and the resulting runtime behavior is then compared against the inferred oracle – the specification derived from the patch’s natural language artifacts via the LLM. Discrepancies between observed behavior and the oracle trigger a refinement process, where the LLM re-evaluates the inferred specification based on the execution trace. This iterative process of execution and refinement continues until the oracle accurately reflects the patched code’s behavior, thereby increasing confidence in its correctness and robustness against unforeseen inputs. The system prioritizes minimizing false positives and negatives in oracle predictions through this runtime validation loop.
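The execute-and-refine loop described above can be sketched roughly as follows. This is an illustration under stated assumptions, not PatchGuru's implementation: `patched_clamp` is a made-up patched function, `stub_refine` is a hard-coded stand-in for the LLM refinement step, and the round limit is arbitrary.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# An oracle judges one (input, observed output) pair.
Oracle = Callable[[int, int], bool]

def patched_clamp(x: int) -> int:
    """Hypothetical patched code: clamp x into the range [0, 100]."""
    return max(0, min(x, 100))

def failing_inputs(oracle: Oracle, inputs: Sequence[int]) -> List[int]:
    """Run the patched code and collect inputs whose output the oracle rejects."""
    return [x for x in inputs if not oracle(x, patched_clamp(x))]

def refine_loop(
    oracle: Oracle,
    refine: Callable[[List[int]], Optional[Oracle]],
    inputs: Sequence[int],
    max_rounds: int = 3,
) -> Tuple[Oracle, List[int]]:
    """Alternate execution and refinement until no failures remain,
    the refiner gives up, or the round limit is reached."""
    failures = failing_inputs(oracle, inputs)
    for _ in range(max_rounds):
        if not failures:
            break
        revised = refine(failures)
        if revised is None:
            break  # remaining failures would be reported as warnings
        oracle = revised
        failures = failing_inputs(oracle, inputs)
    return oracle, failures

def first_guess(x: int, out: int) -> bool:
    """Initial, overly strict oracle: output must equal the input."""
    return out == x

def stub_refine(failures: List[int]) -> Optional[Oracle]:
    """Stand-in for the LLM: returns a fixed, corrected oracle."""
    return lambda x, out: 0 <= out <= 100 and (out == x or x < 0 or x > 100)

INPUTS = [-5, 50, 150]
print(failing_inputs(first_guess, INPUTS))                # [-5, 150]
print(refine_loop(first_guess, stub_refine, INPUTS)[1])   # []
```

In a real system the refiner would also have to decide whether a failure points to a wrong oracle or to a genuine bug in the patch; this sketch models only the former case.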

Demonstrating PatchGuru’s Effectiveness: Mutation Testing and Coverage

PatchGuru’s architecture is designed to accommodate patches ranging in complexity from single-function alterations to multi-function changes affecting numerous code paths. This capability is achieved through a modular design that consistently applies the same inference and validation techniques regardless of patch size or scope. Initial evaluations demonstrate that the system’s performance does not degrade significantly when processing multi-function patches, indicating scalability beyond simple code modifications and adaptability to real-world software maintenance scenarios involving broader system-level changes.

Mutation testing was employed to evaluate how well the tests generated from the inferred oracle detect deliberately injected faults. Results indicate that PatchGuru achieved a mutation score of 0.70. This surpasses the mutation score of 0.58 attained by developer-written regression tests when evaluated independently. When PatchGuru-generated tests were combined with developer-written tests, the overall mutation score increased to 0.82, indicating that the two sets of tests are complementary and catch different mutants.
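For readers unfamiliar with the metric, a mutation score is the fraction of injected faults (mutants) that at least one test detects ("kills"). The sketch below uses invented mutant IDs, not data from the paper, to show why combining two test suites can score higher than either one alone: the combined suite kills the union of what each suite kills.

```python
from typing import Set

def mutation_score(killed: Set[int], total_mutants: int) -> float:
    """Fraction of mutants killed by a test suite."""
    if total_mutants <= 0:
        raise ValueError("total_mutants must be positive")
    return len(killed) / total_mutants

# Toy example with 10 mutants (IDs 0..9); the kill sets are made up.
TOTAL = 10
killed_by_dev_tests = {0, 1, 2, 3, 4, 5}
killed_by_generated_tests = {2, 3, 4, 5, 6, 7, 8}

# A mutant counts as killed by the combined suite if either suite kills it.
killed_by_combined = killed_by_dev_tests | killed_by_generated_tests

print(mutation_score(killed_by_dev_tests, TOTAL))        # 0.6
print(mutation_score(killed_by_generated_tests, TOTAL))  # 0.7
print(mutation_score(killed_by_combined, TOTAL))         # 0.9
```

The combined score exceeds both individual scores only when each suite kills some mutants the other misses.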

PatchGuru’s validation process incorporates established techniques to broaden test coverage beyond the inferred oracle. ‘Shadow’ testing executes the patched code alongside the original, comparing outputs for discrepancies. ‘ChaCo’ (Compositional Change Overlap) analyzes the changes introduced by the patch to identify potentially affected areas requiring further testing. Finally, ‘Metamorphic Relations’ leverage the principle that certain relationships between a program’s outputs should hold predictably when its inputs are transformed, which allows new test cases to be generated and unexpected regressions to be detected. These methods work in conjunction to provide a robust evaluation of patch correctness and behavior.
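Two of these ideas are easy to illustrate in a few lines. The sketch below is not the Shadow tool or any code from the paper; it uses hypothetical functions (`total_old`, `total_new`, `first_two`) to show a side-by-side comparison of old and new versions and one simple metamorphic relation: an order-insensitive function should return the same result when its input is reversed.

```python
from typing import Callable, List, Sequence

IntListFn = Callable[[List[int]], object]

def total_old(xs: List[int]) -> int:
    """Hypothetical pre-patch code: manual summation loop."""
    acc = 0
    for x in xs:
        acc += x
    return acc

def total_new(xs: List[int]) -> int:
    """Hypothetical post-patch code: refactored to the built-in sum."""
    return sum(xs)

def first_two(xs: List[int]) -> List[int]:
    """Hypothetical order-sensitive function, used as a counterexample."""
    return xs[:2]

def differing_inputs(old: IntListFn, new: IntListFn,
                     inputs: Sequence[List[int]]) -> List[List[int]]:
    """Side-by-side comparison: inputs on which old and new disagree."""
    return [xs for xs in inputs if old(list(xs)) != new(list(xs))]

def reversal_relation_holds(f: IntListFn, xs: List[int]) -> bool:
    """Metamorphic relation: f(xs) == f(reversed xs)."""
    return f(list(xs)) == f(list(reversed(xs)))

INPUTS = [[], [1], [3, 1, 2], [-4, 4, 10]]
print(differing_inputs(total_old, total_new, INPUTS))   # []
print(reversal_relation_holds(total_new, [3, 1, 2]))    # True
print(reversal_relation_holds(first_two, [3, 1, 2]))    # False
```

A metamorphic check needs no expected output, only a relation between two runs, which is why it can generate new test cases cheaply.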

Beyond Simple Correctness: The Future of Automated Patch Validation, and its Limits

PatchGuru represents a significant advancement in software validation by automating the creation of patch oracles – systems that determine whether a code change functions as intended. Traditionally, verifying patches relies heavily on manual testing and human assessment, a process that is both expensive and time-consuming. By shifting this burden to an automated system, PatchGuru reduces the need for human intervention, accelerating the software release cycle and lowering development costs. This automation not only streamlines the testing process but also minimizes the potential for human error, leading to more reliable and robust software updates. The resulting efficiency allows developers to focus on innovation rather than tedious validation tasks, ultimately shortening time-to-market for new features and improvements.

A recent evaluation of PatchGuru’s capabilities revealed its effectiveness in uncovering subtle flaws within existing codebases. Analyzing 400 real-world pull requests, the system successfully identified 24 inconsistencies, representing instances where proposed changes deviated from expected behavior or introduced potential errors. Notably, 12 of these inconsistencies were previously unknown bugs, highlighting PatchGuru’s ability to detect issues that escaped traditional testing methods. This discovery underscores the potential of automated patch validation to improve software quality and reduce the risk of deploying faulty code, offering a proactive approach to maintaining robust and reliable systems.
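The reported figures can be related with a little arithmetic. Precision is the share of reported warnings that turn out to be real. Assuming the precision of 0.62 quoted earlier refers to this same evaluation and that the 24 inconsistencies are its true positives (the article does not state the total number of warnings, so the value derived below is an inference, not a reported number), the implied warning count works out as follows.

```python
def precision(true_positives: int, reported: int) -> float:
    """Share of reported warnings that are genuine."""
    if reported <= 0:
        raise ValueError("reported must be positive")
    return true_positives / reported

def implied_reports(true_positives: int, precision_value: float) -> int:
    """Invert the precision formula; the result is rounded, hence approximate."""
    return round(true_positives / precision_value)

TRUE_POSITIVES = 24        # inconsistencies cited in the article
REPORTED_PRECISION = 0.62  # precision cited in the article

warnings = implied_reports(TRUE_POSITIVES, REPORTED_PRECISION)
print(warnings)                                        # 39
print(round(precision(TRUE_POSITIVES, warnings), 2))   # 0.62
```

Under these assumptions, roughly 39 warnings were raised across the 400 pull requests, of which 24 were genuine.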

The architecture of PatchGuru is designed not as a solution tailored to a single ecosystem, but as a broadly applicable framework for automated patch validation. By decoupling the core logic from language-specific details and leveraging abstract syntax trees, the technique readily adapts to diverse programming languages – initial testing encompassed Java and Python, but extension to C++, Go, and others presents a clear pathway for future development. Furthermore, the system’s modular design allows integration with a variety of software systems, from monolithic applications to microservice architectures, and can be incorporated into existing CI/CD pipelines. This versatility positions PatchGuru as a valuable asset for organizations managing expansive and heterogeneous codebases, facilitating continuous software evolution and reducing the risks associated with large-scale maintenance efforts.

The efficiency of PatchGuru’s automated validation process is notable; each pull request analysis requires, on average, approximately 8.9 minutes to complete. This rapid assessment is achieved with a remarkably low operational cost of $0.07 per pull request, leveraging the capabilities of large language models. This cost-effectiveness positions PatchGuru as a scalable solution for continuous software maintenance, enabling frequent and thorough verification of code changes without substantial resource expenditure. The combination of speed and affordability suggests a pathway toward integrating automated patch validation as a standard practice in modern software development workflows, ultimately reducing technical debt and improving software quality.
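As a back-of-the-envelope check, the per-pull-request averages can be scaled to the 400 pull requests mentioned in the evaluation. The sketch assumes the averages apply uniformly and that analyses run one after another; parallel execution would shorten wall-clock time but not the total cost.

```python
from typing import Tuple

PULL_REQUESTS = 400     # evaluation size cited in the article
MINUTES_PER_PR = 8.9    # average analysis time cited in the article
DOLLARS_PER_PR = 0.07   # average cost cited in the article

def batch_totals(n_prs: int, minutes_per_pr: float,
                 dollars_per_pr: float) -> Tuple[float, float]:
    """Return (total hours, total dollars) for analysing n_prs sequentially."""
    total_hours = n_prs * minutes_per_pr / 60
    total_dollars = n_prs * dollars_per_pr
    return total_hours, total_dollars

hours, dollars = batch_totals(PULL_REQUESTS, MINUTES_PER_PR, DOLLARS_PER_PR)
print(f"{hours:.1f} hours, ${dollars:.2f}")  # 59.3 hours, $28.00
```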

The pursuit of automated patch validation, as demonstrated by PatchGuru, feels predictably ambitious. It attempts to bridge the gap between natural language – inherently ambiguous – and executable code, a task fraught with peril. As John von Neumann observed, “There is no telling what the future holds in store.” This feels acutely relevant; the system strives for a perfect oracle, but production environments are notorious for revealing edge cases unforeseen by even the most rigorous testing. The paper’s success in detecting inconsistencies is noteworthy, yet one anticipates the inevitable accumulation of ‘technical debt’ as patches evolve and the system struggles to maintain its pristine understanding. Better one robust, manually verified patch than a hundred automatically ‘validated’ ones prone to subtle failures, it seems.

What’s Next?

The pursuit of automated patch validation, as demonstrated by PatchGuru, inevitably introduces a new layer of abstraction – and thus, a new surface for failure. The system correctly identifies inconsistencies, but it does not address the fundamental problem: that documentation, the very foundation of this approach, is often a post-hoc rationalization of decisions made under duress. It is a description of what should be, not necessarily what is. The illusion of a self-validating patch oracle is appealing, but production will always find a way to expose the discrepancies between intention and implementation.

Future work will undoubtedly focus on improving the natural language understanding component, attempting to coax more accurate representations from inherently ambiguous sources. However, the real challenge lies not in parsing language, but in modeling the chaotic reality of software development. The focus on large language models risks treating the symptoms (poor documentation) rather than the disease: the constant pressure to ship features before understanding their full implications.

The field does not need more sophisticated techniques for detecting bugs; it needs fewer bugs in the first place. The promise of automated oracles is seductive, but one suspects the ultimate outcome will be a more efficient means of discovering edge cases, a faster route to the same familiar frustrations. Perhaps the next generation of research will prioritize methods for preventing inconsistencies, rather than simply identifying them after the fact.

Original article: https://arxiv.org/pdf/2602.05270.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-08 17:48