Author: Denis Avetisyan

This review explores a robust security architecture designed to protect containerized systems from the growing threat of low-rate Distributed Denial of Service attacks.

A novel system integrating rate limiting, dynamic blacklisting, TCP/UDP header analysis, and a Web Application Firewall within a Zero Trust framework enhances container security.

While increasingly sophisticated defenses exist for high-volume attacks, low-rate Distributed Denial of Service (DDoS) attacks pose a persistent threat to cloud infrastructure resilience. This paper, ‘Multi Layer Protection Against Low Rate DDoS Attacks in Containerized Systems’, introduces a novel multi-layered mitigation system designed to effectively defend containerized environments against these subtle, yet damaging, attacks. By integrating a Web Application Firewall, rate limiting, dynamic blacklisting, TCP/UDP header analysis, and zero trust principles, the proposed system proactively identifies and blocks malicious traffic throughout the attack lifecycle. Could this approach represent a paradigm shift towards more adaptive and robust security architectures for modern cloud deployments?

Unveiling the Subtle Assault: Low-Rate DDoS and the Erosion of Trust

Conventional Distributed Denial of Service (DDoS) defenses were designed to detect and neutralize attacks characterized by massive surges in traffic volume, the kind that saturate network bandwidth. Low-rate DDoS attacks bypass these safeguards through subtlety. Instead of overwhelming a target with sheer force, they send a sustained stream of malicious requests at a pace that closely mimics legitimate user activity and stays just below the thresholds that automated mitigation systems typically flag. The attacker thereby degrades service availability gradually, producing slowdowns and intermittent outages that are hard to distinguish from routine network congestion and hard to attribute to a deliberate attack. Legitimate users experience frustrating delays or access failures, while security teams struggle to find the cause amid the noise of normal network activity. Organizations that rely solely on volumetric detection therefore remain exposed to these stealthier and increasingly prevalent attacks, and defending against them requires detection techniques that analyze traffic patterns and application behavior rather than volume alone.

Contemporary DDoS attacks are no longer characterized solely by massive floods of traffic; attackers increasingly rely on refined, low-profile attack vectors. This calls for a fundamental reassessment of mitigation strategies that depend on simple volumetric thresholds. Modern defenses need to go beyond blocking large-scale surges and incorporate behavioral analysis, anomaly detection, and machine learning. Such systems can recognize malicious traffic hidden among legitimate requests by its deviation from established patterns, and they can adapt as attack techniques evolve. These granular approaches target malicious activity precisely without disrupting genuine users, preserving service availability and allowing attacks to be neutralized before they escalate into significant disruptions.

Organizations vulnerable to low-rate DDoS attacks face consequences that extend well beyond immediate service disruption. Because the attack traffic looks legitimate, it can degrade performance slowly, leading to lost revenue and diminished customer trust. Costs accumulate through emergency mitigation and incident response, with potential legal exposure if the attack coincides with a data compromise. Critically, a low-rate DDoS attack can serve as a distraction that masks data exfiltration or the deployment of more damaging malware, raising the risk of a full-scale data breach and lasting reputational harm. Ignoring this evolving threat is therefore not merely a technical oversight; it is a business risk with potentially severe consequences.

Zero Trust: A Fortress Built on Verification, Not Assumption

The Zero Trust security model operates on the principle of “never trust, always verify,” rejecting the traditional network security approach of perimeter-based trust. This means that no user or device, whether inside or outside the network perimeter, is automatically trusted. Every access request is strictly authenticated, authorized, and continuously validated based on a number of factors, including user identity, device posture, and behavioral analytics. This granular approach minimizes the attack surface and limits the potential damage from compromised credentials or insider threats by enforcing least privilege access and continuously monitoring for anomalous activity. Effectively, Zero Trust eliminates the concept of implicit trust and requires explicit verification for every transaction.
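To make "never trust, always verify" concrete, the sketch below evaluates every access request from scratch against identity, device posture, behavioral risk, and least-privilege grants. It is a minimal illustration, not the paper's implementation: the `AccessRequest` fields, the `GRANTS` table, and the `RISK_THRESHOLD` value are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set

@dataclass(frozen=True)
class AccessRequest:
    user_id: Optional[str]   # authenticated identity; None if authentication failed
    device_compliant: bool   # outcome of a device-posture check
    risk_score: float        # 0.0 (normal) to 1.0 (highly anomalous), from behavioral analytics
    resource: str            # what the caller wants to reach

# Hypothetical least-privilege grants: each user may reach only these resources.
GRANTS: Dict[str, Set[str]] = {
    "alice": {"orders-api"},
    "bob": {"orders-api", "admin-panel"},
}
RISK_THRESHOLD = 0.7  # assumed cutoff, not taken from the paper

def authorize(req: AccessRequest) -> bool:
    """Evaluate every request from scratch; network location grants nothing."""
    if req.user_id is None:               # 1. authenticate
        return False
    if not req.device_compliant:          # 2. verify device posture
        return False
    if req.risk_score >= RISK_THRESHOLD:  # 3. continuous behavioral validation
        return False
    # 4. least privilege: anything not explicitly granted is denied
    return req.resource in GRANTS.get(req.user_id, set())
```

There is deliberately no "internal network" shortcut: a request from inside the perimeter passes through the same four checks as one from outside.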

Micro-segmentation divides the network into isolated segments, limiting communication pathways and reducing the attack surface. This approach prevents attackers who have breached one segment from freely accessing other parts of the network. By enforcing granular access controls and restricting lateral movement, the scope of a successful breach is contained, minimizing data exposure and potential damage. Implementation typically involves defining policies based on user identity, device posture, and application context, effectively creating a “least privilege” environment where only necessary communication is permitted between segments.
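A micro-segmentation policy can be thought of as a default-deny table of permitted flows between segments. The sketch below assumes a hypothetical three-tier layout (frontend, backend, data); host names, segments, and ports are illustrative only and do not come from the paper.

```python
from typing import Dict, Set, Tuple

# Hypothetical segment assignment for each host or container.
SEGMENT_OF: Dict[str, str] = {
    "web-1": "frontend",
    "web-2": "frontend",
    "api-1": "backend",
    "db-1": "data",
}

# Explicitly allowed (source segment, destination segment, port) flows.
# Everything else, including traffic within a segment, is denied.
ALLOWED_FLOWS: Set[Tuple[str, str, int]] = {
    ("frontend", "backend", 8080),
    ("backend", "data", 5432),
}

def flow_permitted(src_host: str, dst_host: str, port: int) -> bool:
    src = SEGMENT_OF.get(src_host)
    dst = SEGMENT_OF.get(dst_host)
    if src is None or dst is None:
        return False  # unknown hosts get no access at all
    return (src, dst, port) in ALLOWED_FLOWS
```

With this table, a compromised web container can reach the API tier on its service port but cannot talk directly to the database, which is exactly the lateral movement the paragraph describes.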

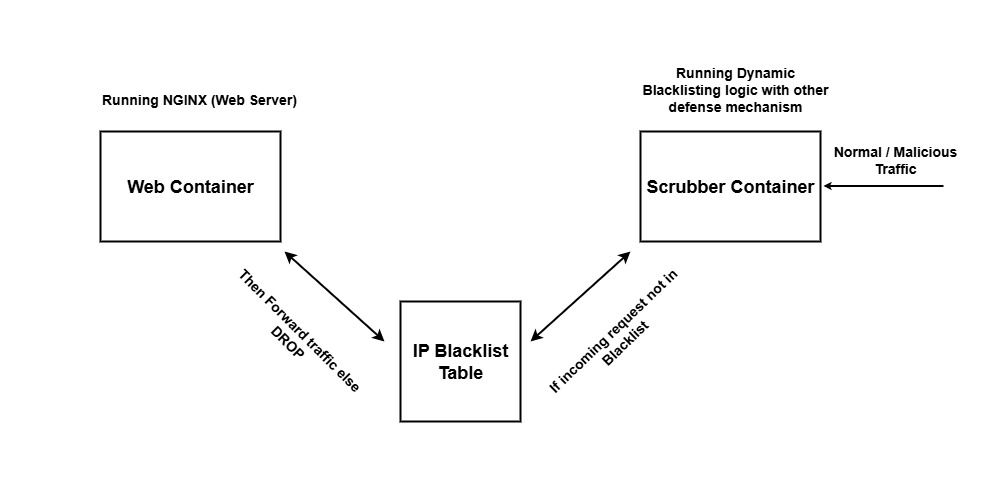

Proactive traffic filtering within a Zero Trust architecture utilizes rate limiting and dynamic blacklisting as key components. Rate limiting functions by restricting the number of requests accepted from a specific source within a defined timeframe, mitigating the impact of brute-force attacks and preventing service exhaustion. Dynamic blacklisting complements this by automatically identifying and blocking IP addresses or patterns associated with malicious activity, sourced from threat intelligence feeds and real-time behavioral analysis. These techniques operate at multiple layers – network, application, and API – and adaptively respond to evolving threat landscapes without requiring manual intervention, reducing the attack surface and minimizing exposure to malicious traffic.
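Dynamic blacklisting can be sketched as a table of temporary bans fed by two sources: violations reported by other layers, and entries imported from a threat-intelligence feed. The thresholds, ban duration, and method names below are hypothetical; the clock is injectable so the expiry logic can be exercised without waiting.

```python
import time
from collections import defaultdict
from typing import Dict, Iterable

class DynamicBlacklist:
    """Ban a source after repeated violations; bans expire after ban_seconds."""

    def __init__(self, max_violations: int = 3, ban_seconds: float = 300.0, clock=time.monotonic):
        self.max_violations = max_violations
        self.ban_seconds = ban_seconds
        self.clock = clock
        self._violations: Dict[str, int] = defaultdict(int)
        self._banned_until: Dict[str, float] = {}

    def is_blocked(self, ip: str) -> bool:
        until = self._banned_until.get(ip)
        if until is None:
            return False
        if self.clock() >= until:  # ban expired: lift it and reset the counter
            del self._banned_until[ip]
            self._violations.pop(ip, None)
            return False
        return True

    def report_violation(self, ip: str) -> None:
        """Called by other layers (rate limiter, header checks, WAF) on misbehavior."""
        self._violations[ip] += 1
        if self._violations[ip] >= self.max_violations:
            self._banned_until[ip] = self.clock() + self.ban_seconds

    def add_from_feed(self, ips: Iterable[str]) -> None:
        """Ingest addresses from a (hypothetical) threat-intelligence feed."""
        now = self.clock()
        for ip in ips:
            self._banned_until[ip] = now + self.ban_seconds
```

Expiring bans keeps the list from growing without bound and limits the damage when a legitimate address (for example, a shared NAT gateway) is blocked by mistake.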

The implementation of a Zero Trust model, coupled with micro-segmentation, rate limiting, and dynamic blacklisting, significantly raises the bar for successful low-rate Distributed Denial of Service (DDoS) attacks. These attacks, characterized by traffic volumes below traditional detection thresholds, attempt to exhaust system resources through persistent, subtle requests. Micro-segmentation limits the blast radius of any compromised host, preventing broad network impact. Rate limiting restricts the number of requests accepted from a single source within a given timeframe, while dynamic blacklisting automatically blocks identified malicious IP addresses. Because each source can send only a capped number of requests before it is throttled or blocked, attackers must spread the same attack volume across a substantially larger botnet and adopt more sophisticated techniques. This increases both the cost and complexity of a successful attack and makes it more likely to be detected and mitigated.
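The botnet-size argument is simple arithmetic: if no single source may exceed a per-source cap, delivering a given attack rate requires at least that rate divided by the cap, rounded up, distinct sources. The example below plugs in the five-requests-per-second limit described later in this review, assuming it is applied per source, and a purely hypothetical attack rate of 2,000 requests per second.

```python
import math

def min_sources_needed(attack_rps: float, per_source_cap_rps: float) -> int:
    """Minimum number of distinct sources needed to deliver attack_rps
    when no single source may exceed per_source_cap_rps."""
    return math.ceil(attack_rps / per_source_cap_rps)

# Hypothetical target: 2,000 requests/s against a 5 requests/s per-source cap.
print(min_sources_needed(2000, 5))  # 400
```

Dynamic blacklisting tightens this further, since every banned source must be replaced to sustain the attack.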

Deep Packet Inspection: Peering Beneath the Surface of Network Traffic

Analysis of TCP and UDP headers provides a method for identifying anomalous network behavior indicative of potential attacks. Specifically, examination of TCP handshake packets – SYN, SYN-ACK, and ACK – enables the detection of SYN flood attacks, where an attacker overwhelms a target server with SYN requests without completing the handshake. Monitoring the rate of incomplete connections and the source of these packets allows for identification of malicious activity. UDP header analysis can reveal anomalies such as unusually large packets or high packet rates originating from a single source, potentially indicating UDP flood attacks or amplification attacks. Beyond simple rate limiting, header analysis allows for stateful inspection, tracking connection status and identifying deviations from expected patterns.
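SYN-flood detection from headers boils down to counting incomplete handshakes per source. The sketch below consumes simplified header events (source address, source port, SYN and ACK flags) and flags sources with too many half-open connections. The threshold and event format are assumptions; a production tracker would also expire stale entries on a timeout.

```python
from collections import defaultdict
from typing import Dict, List, Set

class HalfOpenTracker:
    """Count incomplete TCP handshakes per source IP from inbound header events.

    A connection is half-open after the client's SYN (and the server's SYN-ACK)
    until the client's final ACK arrives. Many half-open entries from one source
    suggest a SYN flood.
    """

    def __init__(self, max_half_open: int = 20):
        self.max_half_open = max_half_open
        self._pending: Dict[str, Set[int]] = defaultdict(set)  # src_ip -> ports awaiting ACK

    def on_packet(self, src_ip: str, src_port: int, syn: bool, ack: bool) -> None:
        if syn and not ack:    # client opens a handshake
            self._pending[src_ip].add(src_port)
        elif ack and not syn:  # client completes it (later data ACKs are harmless no-ops)
            self._pending[src_ip].discard(src_port)

    def half_open(self, src_ip: str) -> int:
        return len(self._pending.get(src_ip, ()))

    def suspicious_sources(self) -> List[str]:
        return [ip for ip, ports in self._pending.items() if len(ports) > self.max_half_open]
```

A source that completes its handshakes never accumulates entries, so only clients that repeatedly abandon connections after the SYN are reported.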

Deep packet inspection (DPI) examines data beyond header information, analyzing payload content and patterns to distinguish malicious traffic from legitimate requests. This capability matters most for low-and-slow attacks, where traffic volume stays below typical thresholds but still exhibits characteristics of hostile intent. By analyzing packet content, DPI can detect subtle anomalies, such as code injection or exploitation attempts, that header-based filtering alone would miss. This finer-grained inspection can improve the separation of malicious from legitimate traffic and reduce false positives, lowering the chance that legitimate users are blocked during low-volume, targeted attacks.
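One low-and-slow pattern that only payload inspection reveals is a Slowloris-style request whose HTTP headers never finish arriving. The sketch below buffers each connection's payload and flags connections that have not sent the blank line ending the header block within a deadline. The class, the timeout value, and the connection IDs are hypothetical; Apache's `mod_reqtimeout` module applies a comparable idea at the server level.

```python
import time
from typing import Dict, List

HEADER_TERMINATOR = b"\r\n\r\n"  # blank line that ends an HTTP/1.x header block

class SlowRequestDetector:
    """Flag connections whose HTTP headers are still incomplete after a deadline."""

    def __init__(self, header_timeout: float = 10.0, clock=time.monotonic):
        self.header_timeout = header_timeout
        self.clock = clock
        self._buffers: Dict[int, bytearray] = {}
        self._started: Dict[int, float] = {}

    def on_data(self, conn_id: int, chunk: bytes) -> None:
        if conn_id not in self._buffers:  # first bytes on this connection
            self._buffers[conn_id] = bytearray()
            self._started[conn_id] = self.clock()
        self._buffers[conn_id] += chunk

    def headers_complete(self, conn_id: int) -> bool:
        return HEADER_TERMINATOR in self._buffers.get(conn_id, b"")

    def stalled_connections(self) -> List[int]:
        now = self.clock()
        return [
            cid for cid, start in self._started.items()
            if not self.headers_complete(cid) and now - start > self.header_timeout
        ]
```

Each stalled connection sends very little data, so a volume-based filter sees nothing unusual; the anomaly is visible only in the content and timing of the payload.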

A Web Application Firewall (WAF) functions as a protective barrier between a web application and incoming network traffic, specifically examining HTTP requests. When enhanced with the OWASP Core Rule Set (CRS), a continually updated collection of signatures and rules, the WAF effectively mitigates a broad spectrum of application-layer attacks, including SQL injection, cross-site scripting (XSS), remote file inclusion (RFI), and other OWASP Top Ten vulnerabilities. The OWASP CRS provides a baseline level of protection and reduces the administrative overhead associated with custom rule creation and maintenance, while also facilitating rapid deployment and consistent security policies across web applications.
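The OWASP CRS typically runs in anomaly-scoring mode: each matching rule adds points according to its severity, and the request is blocked only when the total reaches a threshold. The sketch below reproduces that scoring scheme with severity weights and a threshold modeled on the CRS defaults, but the four regular-expression rules are toy stand-ins invented for illustration, far simpler than the real CRS rules.

```python
import re
from typing import List, Tuple
from urllib.parse import unquote_plus

# Severity weights and inbound blocking threshold modeled on OWASP CRS defaults.
SEVERITY_SCORE = {"CRITICAL": 5, "ERROR": 4, "WARNING": 3, "NOTICE": 2}
INBOUND_THRESHOLD = 5

# Toy rules (hypothetical): (rule name, request part to inspect, pattern, severity)
RULES = [
    ("sqli-tautology", "args", re.compile(r"'\s*or\s+'?\d+'?\s*=\s*'?\d+", re.I), "CRITICAL"),
    ("xss-script-tag", "args", re.compile(r"<script\b", re.I), "CRITICAL"),
    ("path-traversal", "args", re.compile(r"\.\./"), "CRITICAL"),
    ("scanner-user-agent", "user_agent", re.compile(r"sqlmap|nikto", re.I), "WARNING"),
]

def inspect(query_string: str, user_agent: str = "") -> Tuple[int, List[str]]:
    """Return the request's anomaly score and the names of the rules it matched."""
    parts = {"args": unquote_plus(query_string), "user_agent": user_agent}
    score, matched = 0, []
    for name, part, pattern, severity in RULES:
        if pattern.search(parts[part]):
            score += SEVERITY_SCORE[severity]
            matched.append(name)
    return score, matched

def should_block(query_string: str, user_agent: str = "") -> bool:
    score, _ = inspect(query_string, user_agent)
    return score >= INBOUND_THRESHOLD
```

For example, `inspect("q=x", "sqlmap/1.7")` returns `(3, ['scanner-user-agent'])`: a lone warning is recorded but stays below the threshold, while a single critical match such as a URL-encoded `' OR '1'='1` is enough to block. Scoring rather than blocking on the first match is what lets CRS trade sensitivity against false positives.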

Combining TCP/UDP header analysis with Web Application Firewall (WAF) capabilities provides a multi-layered defense against Distributed Denial of Service (DDoS) attacks. Header analysis identifies volumetric attacks and anomalies in network traffic patterns, enabling rate limiting and traffic filtering based on source IP addresses, port numbers, and flags. The WAF, particularly when using a rule set such as the OWASP Core Rule Set, examines the application layer (Layer 7) for malicious payloads and for patterns indicative of application-layer DDoS attacks, such as HTTP floods or Slowloris. Together, the two cover both network-layer and application-layer DDoS vectors and adapt to evolving attack techniques through signature-based detection, behavioral analysis, and continuously updated rule sets.
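Architecturally, the combination can be viewed as an ordered chain of layers in which any layer may drop a request and give a reason. The sketch below is a hypothetical simplification, not the paper's pipeline: the `Request` fields and both layer functions are illustrative, using one well-known header anomaly (SYN and FIN set together, which never occurs in a legitimate handshake) and two crude application-layer checks.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Request:
    src_ip: str
    tcp_flags: str          # flags seen in the transport header, e.g. "S", "A", "SF"
    path: str               # HTTP request target
    headers_complete: bool  # whether the full HTTP header block has arrived

# Each layer returns a reason to drop the request, or None to pass it on.
Check = Callable[[Request], Optional[str]]

def network_layer(req: Request) -> Optional[str]:
    # Header rule: SYN and FIN together are never valid in a normal handshake.
    if "S" in req.tcp_flags and "F" in req.tcp_flags:
        return "invalid TCP flag combination"
    return None

def application_layer(req: Request) -> Optional[str]:
    if "<script" in req.path.lower():
        return "WAF: XSS signature"
    if not req.headers_complete:
        return "WAF: incomplete headers (Slowloris-like)"
    return None

def evaluate(req: Request, layers: List[Check]) -> str:
    for layer in layers:  # cheap network checks run first
        reason = layer(req)
        if reason is not None:
            return f"drop: {reason}"
    return "allow"
```

Ordering the cheap header checks before the more expensive application-layer inspection means malformed traffic is discarded before it consumes WAF resources.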

Containerization: Orchestrating Resilience in a Dynamic Environment

The DDoS mitigation system benefits significantly from containerization, specifically through the use of Docker, which streamlines both packaging and deployment processes. This approach encapsulates the system and its dependencies into standardized units, ensuring consistent operation across diverse environments – from developer laptops to production servers. Such portability eliminates common “it works on my machine” issues and accelerates the deployment pipeline. Moreover, containerization enables efficient resource utilization and facilitates horizontal scalability; as traffic demands fluctuate, additional container instances can be readily deployed to absorb the load, enhancing the system’s resilience against large-scale distributed denial-of-service attacks without requiring substantial infrastructure changes. This agility is crucial for maintaining service availability during peak events and adapting to evolving threat landscapes.

Containerized deployment also shortens setup time and removes dependencies on specific host environments. Each container packages only the essential components (the application, its runtime, and system tools) into a lightweight, isolated unit, which keeps resource consumption low. If one container fails, it can be replaced quickly without affecting the overall service, and scaling reduces to replicating containers as demand fluctuates. Efficient resource use and rapid recovery together make the defense against distributed denial-of-service attacks more stable and robust.

The DDoS mitigation system uses Apache2 as its core web server, a robust and widely adopted platform for hosting the applications it protects. Apache2 runs entirely inside the Dockerized environment, so the whole application stack, web server included, benefits from the portability, scalability, and resource efficiency of containerization. This ensures consistent behavior across deployment environments and simplifies scaling the protected applications as demand fluctuates. Running inside the container also isolates Apache2’s configuration and dependencies, improving stability and reducing conflicts with other services.

The DDoS mitigation system’s effectiveness hinges on a carefully tuned rate limiting mechanism designed to distinguish legitimate traffic from malicious floods. The system accepts a baseline of five requests per second, which leaves ample headroom for typical user activity. Because momentary spikes in traffic are normal, it also allows a burst of ten requests. Short-term surges, such as several clicks during rapid navigation, therefore pass without triggering false positives against genuine users. By accommodating these temporary increases while firmly capping sustained request rates, the system keeps the service accessible to legitimate users while throttling clients that try to overwhelm the server with excessive traffic.
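A token bucket is one common way to express "five requests per second with a burst of ten": the bucket holds up to ten tokens, refills at five tokens per second, and each request spends one. The sketch below assumes that model and assumes limits are kept per client IP; the paper may realize the limit differently (leaky-bucket queues, for instance, delay excess requests instead of rejecting them).

```python
import time
from typing import Dict

class TokenBucket:
    """Allow `rate` requests/second on average, with bursts of up to `burst`."""

    def __init__(self, rate: float = 5.0, burst: int = 10, clock=time.monotonic):
        self.rate = rate
        self.capacity = float(burst)
        self.tokens = float(burst)  # start full so an initial burst is accepted
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False

class PerClientRateLimiter:
    """One bucket per client address (an assumption; the paper does not say how clients are keyed)."""

    def __init__(self, rate: float = 5.0, burst: int = 10, clock=time.monotonic):
        self.rate, self.burst, self.clock = rate, burst, clock
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client_ip: str) -> bool:
        bucket = self._buckets.get(client_ip)
        if bucket is None:
            bucket = self._buckets[client_ip] = TokenBucket(self.rate, self.burst, self.clock)
        return bucket.allow()
```

With these defaults, a client that fires twelve requests at once gets ten through and two rejected; after one idle second it has earned five more tokens, so sustained traffic is held to five requests per second.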

The presented system rejects assumed security, reflecting a fundamental tenet of robust engineering. It does not trust the containerized environment by default; instead, it continuously scrutinizes traffic using techniques such as dynamic blacklisting and TCP/UDP header analysis. This echoes a sentiment attributed to Linus Torvalds: “Most people think that ‘debugging’ means ‘fixing bugs’, but it’s really about finding them.” The multi-layered approach does not aim to prevent every attack; it aims to detect and neutralize malicious traffic quickly, accepting that vulnerabilities are inevitable. The zero-trust architecture, combined with rate limiting and WAF integration, is not a static shield but a dynamic process of controlled failure, a constant cycle of testing and refinement.

What’s Next?

The presented architecture, while demonstrably effective against the subtly disruptive force of low-rate DDoS attacks, implicitly acknowledges a fundamental truth: security isn’t a destination, but a continuous process of controlled demolition. Each layer of defense, from rate limiting to header analysis, functions as a controlled failure point, designed to expose vulnerabilities before an attacker does. The immediate challenge, then, isn’t simply adding more layers, but refining the granularity of those failures. A truly adaptive system would not just detect anomalous traffic, but predict its evolution, anticipating attack vectors before they fully materialize.

Furthermore, the reliance on blacklisting, even dynamic blacklisting, represents a reactive stance. It treats symptoms, not causes. The logical extension of this work lies in proactively profiling legitimate user behavior, building a whitelist of expected interactions, and treating deviations as inherently suspect. This, of course, introduces a different set of problems – false positives, privacy concerns – but those are precisely the interesting problems. Security, at its core, is a trade-off; the art lies in determining where to draw the line.
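A whitelist-style behavioral profile might combine a set of endpoints a client is expected to use with a statistical baseline of its request rate, treating anything outside either as suspect. The sketch below is a hypothetical illustration of that proposal: the training data, the per-minute rate unit, and the one-sided z-score limit of 3 are all assumptions, and tightening that limit is exactly where the false-positive trade-off shows up.

```python
import statistics
from typing import Iterable

class BehaviorProfile:
    """Baseline of one client's normal behavior; deviations are treated as suspect."""

    def __init__(self, training_paths: Iterable[str], training_rates: Iterable[float],
                 z_limit: float = 3.0):
        rates = list(training_rates)                 # requests/minute observed in training
        self.known_paths = set(training_paths)       # whitelist of expected endpoints
        self.mean_rate = statistics.mean(rates)
        self.std_rate = statistics.stdev(rates) or 1e-9  # guard against zero variance
        self.z_limit = z_limit

    def is_suspect(self, path: str, rate: float) -> bool:
        if path not in self.known_paths:             # never-seen endpoint: deviation
            return True
        z = (rate - self.mean_rate) / self.std_rate  # one-sided: only unusually high rates
        return z > self.z_limit
```

`statistics.stdev` needs at least two training samples, so a real profile would also need a warm-up period before it starts judging traffic.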

Finally, the zero-trust framework, while a step in the right direction, can in practice still rest on perimeter-based assumptions. The true test will be extending this model to encompass the entire data lifecycle, ensuring that every packet, every process, every access request is subject to continuous, granular scrutiny. To assume trust is to invite failure. The future isn’t about preventing attacks; it’s about minimizing their impact by embracing the inevitability of compromise.

Original article: https://arxiv.org/pdf/2602.11407.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-16 04:13