Author: Denis Avetisyan

A novel orchestration system, push0, addresses the challenges of reliably and efficiently generating zero-knowledge proofs for demanding blockchain applications.

This paper details push0, a scalable and fault-tolerant framework designed to meet the timing and reliability requirements of zero-knowledge proof generation, demonstrated through production deployment and performance analysis.

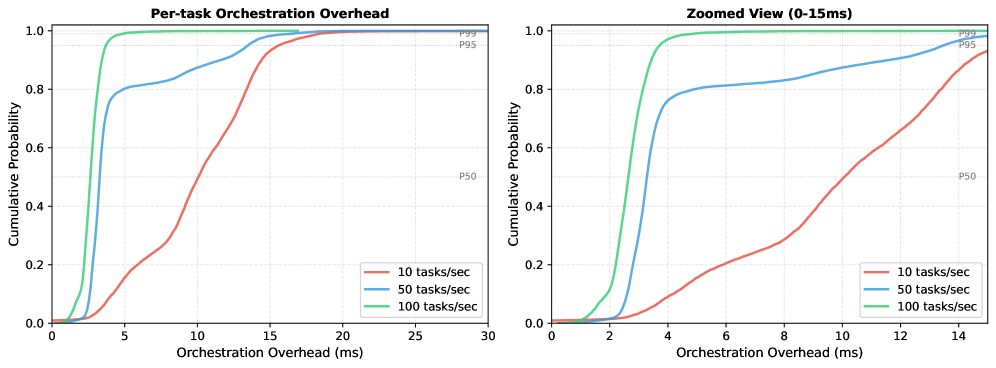

Achieving timely finality and economic efficiency in blockchain systems is increasingly challenged by the computational demands of zero-knowledge (ZK) proof generation. The paper, "push0: Scalable and Fault-Tolerant Orchestration for Zero-Knowledge Proof Generation", introduces a cloud-native system designed to decouple prover binaries from scheduling infrastructure and to address the critical requirements of both ZK-rollups and emerging L1 zkEVMs. Through an event-driven architecture and persistent priority queues, push0 achieves a 5 ms median orchestration overhead, a negligible fraction of typical proof computation times, with near-perfect scaling efficiency, as validated by production deployment on the Zircuit zkrollup and by controlled experiments. Will this decoupling pave the way for truly decentralized and scalable ZK-powered blockchains?

Unveiling the Scaling Paradox: ZK-Rollups and the Proof Generation Bottleneck

ZK-Rollups represent a significant advancement in Ethereum scaling, tackling transaction congestion by shifting computational burdens off the main chain. This is achieved through the execution of transactions on a Layer 2 network, followed by the submission of only a succinct validity proof – a Zero-Knowledge Proof – to the Ethereum mainnet. While this drastically reduces on-chain data requirements and associated costs, the generation of these proofs is inherently computationally demanding. The security of a ZK-Rollup is directly tied to the correctness of this proof; any flaw could compromise the entire system. Consequently, despite offering substantial scalability benefits, the practical implementation of ZK-Rollups hinges on overcoming the challenges associated with efficiently generating and verifying these complex cryptographic proofs, requiring specialized hardware and optimized algorithms to maintain responsiveness and prevent network bottlenecks.

The creation of zero-knowledge proofs, essential for the security and functionality of ZK-Rollups, represents a significant performance hurdle in scaling Ethereum. This proof generation process, while verifying transactions without revealing data, is computationally demanding and directly influences how quickly transactions are finalized – a metric known as finality lag. Without substantial optimization, the time required to generate these proofs can become excessive, creating a bottleneck that limits transaction throughput and potentially halts the rollup chain entirely. A delay in proof generation effectively delays the confirmation of all bundled transactions, impacting user experience and creating systemic risks as the backlog grows. Consequently, research and development efforts are heavily focused on accelerating proof generation through novel algorithms, specialized hardware, and distributed proof systems to ensure ZK-Rollups can meet the demands of a rapidly expanding blockchain ecosystem.

Existing methods for managing and verifying Zero-Knowledge Proofs within ZK-Rollups are increasingly challenged by the escalating demands of a burgeoning decentralized application landscape. As rollup ecosystems expand, the sheer volume of transactions requiring proof generation and validation creates a significant performance bottleneck, threatening scalability gains. Current centralized or simple distributed approaches struggle to efficiently orchestrate the complex computational tasks involved, leading to increased finality times and potential system instability. Consequently, research is heavily focused on developing innovative orchestration frameworks – systems capable of dynamically allocating resources, parallelizing proof generation, and intelligently distributing verification loads – to ensure these scaling solutions can keep pace with growing user activity and maintain the integrity of the Ethereum network.

Push0: Deconstructing the Proof Generation Bottleneck

Push0 is a proof orchestration framework built to address the demands of scalable and fault-tolerant zero-knowledge (ZK) rollup operation. Unlike general-purpose computation platforms, Push0 is specifically designed to manage the complex workflow of generating and verifying the cryptographic proofs required for ZK-rollups. This specialization allows for optimizations in task distribution, dependency management, and failure recovery, all of which are crucial for maintaining rollup throughput and data integrity at scale. The framework abstracts away the underlying prover technology, enabling compatibility with multiple proving systems and hardware accelerators without altering the core rollup logic.

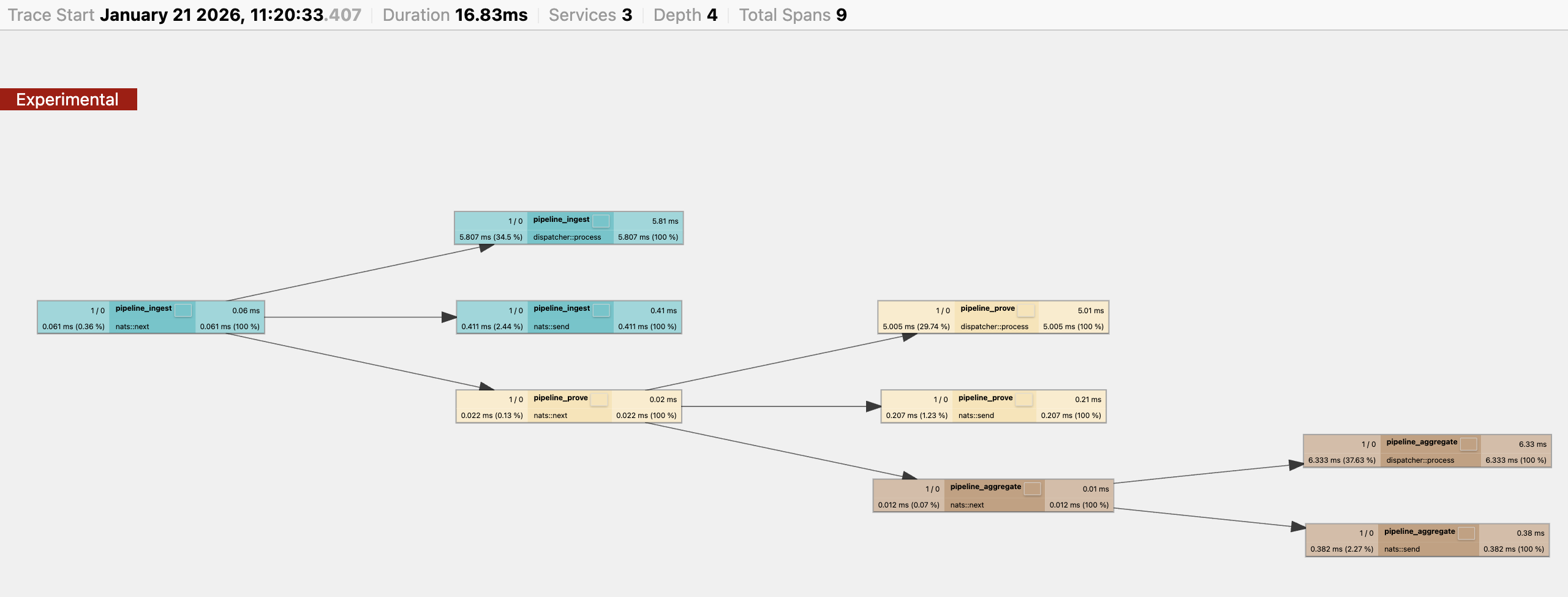

Push0 achieves horizontal scalability through a distributed architecture comprised of a Dispatcher and a Collector. The Dispatcher component is responsible for distributing proof generation tasks across available prover nodes, managing task queues, and ensuring efficient resource utilization. The Collector component aggregates the resulting proofs from these nodes, verifies their validity, and manages dependencies between tasks. This separation of concerns allows Push0 to dynamically scale its proof generation capacity by adding or removing prover nodes without requiring significant architectural changes, and ensures resilience by isolating task failures to individual nodes rather than impacting the entire system.
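To make the Dispatcher and Collector split concrete, here is a minimal in-process sketch. It is not push0's implementation: the class names mirror the article, but `prove`, the method names, and the use of a thread pool in place of remote prover nodes and a message bus are all illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor


def prove(block_number):
    """Hypothetical prover stand-in: returns a placeholder string, not a real proof."""
    return f"proof-{block_number}"


class Dispatcher:
    """Fans proof tasks out to whichever workers are free (assumed interface)."""

    def __init__(self, pool):
        self.pool = pool

    def dispatch(self, block_numbers):
        # One future per block; the pool decides which worker runs it.
        return {n: self.pool.submit(prove, n) for n in block_numbers}


class Collector:
    """Gathers finished proofs and returns them in block order (assumed interface)."""

    def collect(self, futures):
        return [futures[n].result() for n in sorted(futures)]


# max_workers plays the role of the number of prover nodes in this sketch.
with ThreadPoolExecutor(max_workers=4) as pool:
    futures = Dispatcher(pool).dispatch([3, 1, 2])
    proofs = Collector().collect(futures)

print(proofs)
```

In this toy version, adding capacity means raising `max_workers`; neither the Dispatcher nor the Collector changes, which is the property the separation of concerns is meant to provide.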

Push0 achieves high throughput by eschewing synchronous write operations, which commonly introduce performance bottlenecks in distributed systems. Instead of immediately confirming each write, Push0 utilizes an asynchronous approach. To counteract the potential for data inconsistencies inherent in asynchronous systems, Push0 is engineered to guarantee exactly-once semantics. This is accomplished through a combination of techniques including durable task queues, idempotent operations, and conflict detection/resolution, ensuring that each operation is reliably processed exactly one time, even in the event of node failures or network disruptions, thereby preserving data integrity without sacrificing performance.
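One common way to obtain effectively exactly-once behavior is to pair at-least-once delivery with an idempotent handler, so that a redelivered task does not trigger a second execution. The sketch below illustrates that general pattern only; `IdempotentWorker`, its fields, and the in-memory dictionary standing in for a durable store are hypothetical and are not taken from push0.

```python
class IdempotentWorker:
    """Processes each task ID at most once, even if the task is delivered repeatedly."""

    def __init__(self):
        # task_id -> result. A real system would keep this in durable storage;
        # a dict is used here only to keep the sketch self-contained.
        self.completed = {}
        self.executions = 0

    def handle(self, task_id, payload):
        if task_id in self.completed:
            # Redelivery: return the recorded result instead of recomputing.
            return self.completed[task_id]
        self.executions += 1
        result = f"proof({payload})"  # placeholder for the expensive proving step
        self.completed[task_id] = result
        return result


worker = IdempotentWorker()
first = worker.handle("block-101", "witness-101")
# Simulated redelivery, for example after an acknowledgement was lost.
second = worker.handle("block-101", "witness-101")
print(first == second, worker.executions)
```

The queue is free to redeliver after a crash or timeout because the handler makes duplicates harmless, which is how such designs avoid synchronous confirmation on every write.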

The Prover component is fundamental to Push0’s operation, responsible for generating the cryptographic proofs required for ZK-rollup validity. This component currently supports proof generation via platforms including SP1 and Halo2, allowing for flexibility and adaptation to different proving system requirements. Since its deployment, Push0 has successfully orchestrated the generation of proofs for over 14 million mainnet blocks on the Zircuit zkrollup, commencing on March 4, 2025, demonstrating its capacity for high-throughput, production-level operation.

The Inner Workings: Orchestrating Reliability within Push0

Push0 utilizes a priority queue to strictly enforce head-of-chain ordering, a mechanism essential for maintaining data consistency and preventing invalid state transitions within the system. This queue prioritizes operations based on their position within a chain of dependencies; operations higher in the chain – closer to the “head” – are processed before those further down. By processing operations in this predetermined order, Push0 ensures that any given state transition is built upon a valid and previously confirmed foundation, mitigating the risk of applying operations that rely on incomplete or incorrect data. This prioritization is not based on time or external factors, but solely on the inherent logical order defined by the chain structure, guaranteeing deterministic and predictable system behavior.
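The ordering rule can be sketched with a binary heap keyed on chain position. This is an interpretation rather than push0's code: it assumes the "head" is the lowest-numbered block still awaiting a proof, and `ProofTask` and `HeadOfChainQueue` are hypothetical names. It also keeps the queue in memory, whereas the article describes persistent queues.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class ProofTask:
    block_number: int                      # the only field used for ordering
    task_id: str = field(compare=False)    # ignored when comparing tasks


class HeadOfChainQueue:
    """Min-heap on block number: the earliest pending block is always popped first."""

    def __init__(self):
        self._heap = []

    def push(self, task):
        heapq.heappush(self._heap, task)

    def pop(self):
        return heapq.heappop(self._heap)

    def __len__(self):
        return len(self._heap)


queue = HeadOfChainQueue()
for n in (105, 101, 103, 102):             # arrival order differs from chain order
    queue.push(ProofTask(n, f"range-proof-{n}"))

order = [queue.pop().block_number for _ in range(len(queue))]
print(order)
```

Because the key is the block number alone, arrival time never changes which task is served next, matching the article's point that ordering depends only on chain structure.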

Push0 utilizes Recursive Proof Composition to enhance verification efficiency by constructing larger, consolidated proofs from a series of smaller, individual proofs. This process involves chaining these constituent proofs together, allowing a single, succinct result to represent the validity of multiple underlying operations or data points. The aggregation reduces the computational cost and storage requirements associated with verifying each individual proof separately, while maintaining the integrity and verifiability of the overall result. This is particularly beneficial in scenarios involving complex computations or large datasets where verifying numerous individual claims would be impractical.
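The shape of recursive composition can be illustrated with a tree reduction in which pairs of proofs are folded into one until a single result remains. The article does not specify the topology push0 uses, so the binary tree here is an assumption, and `aggregate` is a plain hash used as a placeholder with none of the security properties of a real recursive proof.

```python
import hashlib


def aggregate(left, right):
    """Placeholder for a recursive proof over two child proofs (just a hash here)."""
    return hashlib.sha256((left + right).encode()).hexdigest()


def compose(proofs):
    """Fold a non-empty list of proofs pairwise; return (root, number of rounds)."""
    if not proofs:
        raise ValueError("at least one proof is required")
    level = list(proofs)
    rounds = 0
    while len(level) > 1:
        # Pair up neighbours; every pair in a round could be proven in parallel.
        merged = [aggregate(level[i], level[i + 1])
                  for i in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            merged.append(level[-1])       # odd proof is carried up unchanged
        level = merged
        rounds += 1
    return level[0], rounds


root, rounds = compose([f"proof-{i}" for i in range(8)])
print(rounds, root[:16])
```

With eight leaves the reduction finishes in three rounds, so the verifier checks one result instead of eight, and the depth grows only logarithmically with the number of inputs.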

Push0 utilizes NATS, a high-performance messaging system, to facilitate communication between its internal components. This implementation of a message bus architecture enables asynchronous operation, meaning components are not blocked waiting for responses, improving overall system throughput. NATS also provides inherent resilience; if a component fails, messages remain available for processing by other instances, preventing data loss and maintaining system stability. This decoupling of components allows for independent scaling and updates without impacting the functionality of other parts of the system, contributing to Push0’s operational reliability.

Push0 incorporates OpenTelemetry for the generation and collection of telemetry data, including metrics, logs, and traces, with the metrics ingested by Prometheus for storage and querying. This integration facilitates real-time monitoring of system health, component performance, and operational metrics. In particular, it makes it possible to verify that dispatch latency consistently stays below 10 ms, a key performance indicator for the system. Alerting rules configured within Prometheus trigger notifications based on predefined thresholds, ensuring prompt responses to potential issues and maintaining system reliability.

Challenging the Limits: Amdahl’s Law and the Pursuit of Throughput

Despite the substantial gains offered by Push0’s distributed architecture, the potential for speedup remains fundamentally constrained by Amdahl’s Law. This principle dictates that the maximum reduction in processing time achievable through parallelization is limited by the portion of the task that must be completed sequentially. Even with a highly distributed system, any single, non-parallelizable step in the proof generation pipeline will ultimately bottleneck the overall process. Therefore, while Push0 effectively minimizes sequential dependencies to maximize parallelization, it cannot entirely circumvent the limitations imposed by Amdahl’s Law; gains will diminish as more computational resources are added, highlighting the importance of continually refining the algorithm to reduce inherently sequential components.
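Amdahl's Law gives the speedup on N workers as 1 / ((1 - p) + p / N), where p is the fraction of the work that can run in parallel. The short calculation below shows the diminishing returns described above. The value p = 0.95 is purely illustrative and is not a measurement from push0.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Speedup predicted by Amdahl's Law: 1 / ((1 - p) + p / N)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Illustrative assumption: 95% of the pipeline parallelizes.
for n in (1, 8, 64, 512):
    print(n, round(amdahl_speedup(0.95, n), 2))
```

However many workers are added, the speedup for p = 0.95 stays below 1 / 0.05 = 20, which is why reducing the serial fraction matters more than adding hardware once the pool is large.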

Push0’s architecture deliberately reduces the reliance on strictly sequential operations within its proof generation process. By identifying and restructuring computational steps, the framework isolates tasks that can be executed concurrently, substantially increasing overall throughput. This design prioritizes the parallelizable components of the pipeline, such as individual range proof calculations, allowing them to proceed independently and simultaneously. The result is a system whose performance depends less on a long sequential chain of steps and more on the efficient coordination of numerous parallel computations, ultimately contributing to faster proof finality and a more responsive user experience.

Push0’s architecture deliberately prioritizes the optimization of tasks suitable for parallel processing, shrinking the serial fraction that Amdahl’s Law penalizes and raising overall throughput. This focus reduces the time required for crucial cryptographic operations, leading to faster finality of transactions and a smoother user experience. The reported benchmarks put the median range proof completion time at 24.4 minutes when processing chunks of 100 blocks, a figure presented as evidence of the effectiveness of the parallelized design.

The architectural refinements within the Push0 framework yield benefits beyond simply accelerating proof generation; they substantially bolster the system’s resilience. By distributing computational load and minimizing dependencies, the framework achieves a median aggregation proof completion time of 2.0 minutes for batches containing 1,500 to 2,000 blocks. This distributed approach inherently increases fault tolerance, as the failure of any single component does not halt the entire process; computation seamlessly shifts to remaining operational units. Consequently, Push0 maintains continuous operation even under adverse conditions, ensuring reliable finality and a consistently available service – a critical feature for robust blockchain infrastructure.
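The two reported medians can be combined into a rough back-of-the-envelope estimate of what parallel range proving buys. The model below rests on assumptions the article does not confirm: that a 2,000-block batch is split into 100-block chunks, that every range proof takes the median 24.4 minutes, that range proofs run in full "waves" across the available provers, and that the 2.0-minute aggregation starts only after the last range proof finishes. The function and constant names are hypothetical.

```python
import math

RANGE_PROOF_MIN = 24.4    # reported median for a 100-block chunk
AGGREGATION_MIN = 2.0     # reported median for 1,500 to 2,000 blocks
CHUNK_BLOCKS = 100


def batch_minutes(blocks, provers):
    """Estimated wall-clock minutes for one batch under the assumptions above."""
    chunks = math.ceil(blocks / CHUNK_BLOCKS)
    waves = math.ceil(chunks / provers)        # rounds of parallel range proving
    return waves * RANGE_PROOF_MIN + AGGREGATION_MIN


serial = batch_minutes(2000, 1)      # one prover works through every chunk in turn
parallel = batch_minutes(2000, 20)   # one prover per chunk
print(round(serial, 1), round(parallel, 1), round(serial / parallel, 1))
```

Under these assumptions a single prover would need about 490 minutes per batch, while twenty provers would need about 26.4 minutes. The aggregation step is the serial remainder, so adding provers beyond one per chunk yields no further gain in this model.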

The push0 framework, as detailed in the article, doesn’t simply avoid failure; it actively anticipates and mitigates it through distributed orchestration and fault tolerance. This approach echoes a sentiment attributed to Edsger W. Dijkstra: “Programming is a sport.” The design isn’t about constructing a perfect, unbreakable system, but about building one that gracefully handles inevitable imperfections. Just as a skilled athlete prepares for various setbacks, push0 anticipates potential issues in proof generation, such as timing discrepancies or node failures, and incorporates mechanisms to recover. The article’s focus on scalability and reliability isn’t about eliminating bugs, but about mastering the art of managing them within a complex, distributed environment. The system’s architecture treats failure not as an anomaly, but as an inherent part of the game.

Beyond the Proof: Where Do We Go From Here?

The orchestration of zero-knowledge proofs, as demonstrated by push0, isn’t about achieving perfect reliability, which is a mirage in any complex system. It’s about elegantly containing failure. The system reveals its limits not in ideal conditions, but when pushed: when the network stumbles, or a prover inexplicably halts. Future work shouldn’t focus on eliminating these inevitable hiccups, but on instrumenting for their rapid diagnosis and, crucially, automated recovery. The current emphasis on zkEVM compatibility is a necessary step, but a temporary constraint; true scalability won’t arrive until proof generation transcends the limitations of existing virtual machine architectures.

One wonders if the relentless pursuit of ever-more-complex proofs is a distraction. The real bottleneck might not be computational cost, but the human cost of debugging these systems. A shift towards formal verification of orchestration logic, treating the scheduler itself as a critical piece of hardware, could yield dividends. The field currently prioritizes proving what a computation does; perhaps equal effort should be devoted to proving how the proof system behaves under stress.

Ultimately, the success of zero-knowledge technologies hinges not on cryptographic elegance alone, but on their practical robustness. Push0 represents a move toward that practicality, but it also illuminates the gaps. The next phase requires embracing the chaos: actively seeking out the points of failure, and building systems designed to learn from them, rather than resist them.

Original article: https://arxiv.org/pdf/2602.16338.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 14:37