Author: Denis Avetisyan

A novel approach uses category theory to formally model and verify trust relationships within complex computing systems, moving beyond traditional security paradigms.

This review details a category-theoretic model (‘TRUST’) for representing and reasoning about trust, remote attestation, and verification processes.

Establishing robust trust in complex computational systems remains a fundamental challenge, particularly as reliance on remote attestation grows. This paper, ‘Modelling Trust and Trusted Systems: A Category Theoretic Approach’, introduces a novel framework, ‘TRUST’, that leverages category theory to formally represent trust relationships and verification processes. By modelling elements of trust as objects and operations as morphisms within a category, we establish a rigorous semantics for trustworthiness and justification, and we use a Heyting algebra to extend trust assessments beyond binary verdicts. Can this approach provide a foundation for verifiable and composable security architectures in increasingly interconnected systems?

Unveiling the System: The Foundations of Trust

Contemporary digital infrastructure is fundamentally reliant on the ability to verify the trustworthiness of remote components, a need increasingly met through remote attestation. This process isn’t simply about confirming a device is present, but about rigorously establishing the integrity of its software and hardware, ensuring it hasn’t been tampered with or compromised. From securing cloud computing environments and protecting sensitive data in IoT devices, to enabling confidential computing and building resilient supply chains, attestation serves as a cornerstone of modern security architectures. It allows systems to confidently interact with remote entities, mitigating risks associated with untrusted environments and enabling a new level of assurance in distributed computing paradigms. The demand for robust attestation mechanisms will only intensify as reliance on interconnected and remotely managed systems continues to grow.

The increasing reliance on remote systems necessitates rigorous verification of their integrity, making attestation critical for foundational security processes such as secure boot and comprehensive data protection. However, simply performing attestation is insufficient; a robust framework is essential to ensure its effectiveness, particularly within the complexities of distributed environments. Without such a framework, attestation results can be ambiguous or easily circumvented, leaving systems vulnerable to attack. This framework must account for the diverse challenges inherent in verifying remote components, including establishing chains of trust, handling potential tampering, and managing the scalability required for large-scale deployments. Consequently, the development and implementation of a well-defined attestation framework represent a crucial step in securing modern, interconnected systems.

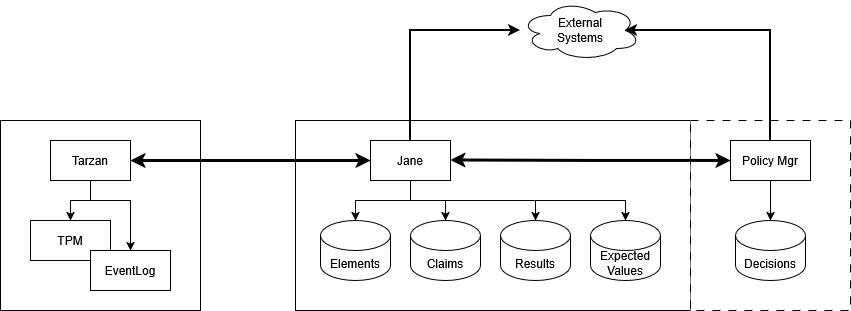

This paper introduces the ‘TRUST Category’, a formal and abstract model designed to provide a foundational understanding of trust and attestation processes within modern computing systems. By establishing a structured framework, it moves beyond ad-hoc security measures to allow for rigorous reasoning about integrity and trustworthiness. The model decomposes attestation into core components, enabling a precise definition of what constitutes ‘trust’ in a given context – a crucial step for building secure systems. This approach allows developers and security researchers to systematically analyze and improve attestation protocols, ultimately bolstering confidence in the reliability of remote devices and software, and forming the basis for verifiable security assurances in increasingly interconnected environments.

At the heart of remote attestation lies the systematic process of gathering and validating assertions about a system’s state. These assertions, termed ‘Claims’, detail specific attributes of a given ‘Element’ – which could be a software component, a hardware module, or even the system’s configuration. The process isn’t simply about collecting data; it demands rigorous verification of these Claims against established policies or trusted sources. Successful attestation hinges on the ability to reliably demonstrate that a system Element possesses the characteristics claimed, effectively establishing a chain of trust. This verification can involve cryptographic proofs, comparisons against known good states, or the use of trusted execution environments to ensure the integrity of both the Claim and the verification process itself.
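A minimal Python sketch can make the Claim/Element vocabulary concrete. It assumes, purely for illustration, that a Claim is an (element, attribute, value) triple and that a policy is a table of expected values keyed by element and attribute; the names `Claim`, `Policy`, and `verify_claims` and the sample values are hypothetical, not the paper's definitions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Claim:
    """Hypothetical Claim: an assertion that an Element has a given attribute value."""
    element: str    # e.g. a software component, hardware module, or configuration item
    attribute: str  # the property being asserted, e.g. "sha256" or "version"
    value: str      # the asserted value

# Hypothetical policy: expected values keyed by (element, attribute).
Policy = dict[tuple[str, str], str]

def verify_claims(claims: list[Claim], policy: Policy) -> dict[Claim, bool]:
    """Check each claim against the policy; a claim the policy does not cover fails."""
    return {c: policy.get((c.element, c.attribute)) == c.value for c in claims}

policy: Policy = {("bootloader", "sha256"): "good-hash", ("kernel", "version"): "6.8"}
claims = [Claim("bootloader", "sha256", "good-hash"), Claim("kernel", "version", "6.1")]
for claim, ok in verify_claims(claims, policy).items():
    print(claim.element, claim.attribute, "ok" if ok else "FAIL")
```

Treating an uncovered claim as a failure is a deliberately conservative choice here; a real verifier might want to distinguish "unknown" from "mismatch".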

Tracing the Evidence: The Attestation Process in Detail

The Trusted Platform Module (TPM) is a dedicated cryptographic co-processor designed to secure hardware by generating and storing cryptographic keys, performing cryptographic operations, and holding sensitive data. A key component is the ‘Endorsement Key’ (EK), a unique asymmetric key pair (RSA, or in TPM 2.0 also ECC) whose public part is typically certified by the TPM manufacturer. The EK anchors the TPM’s identity, letting a verifier confirm that it is dealing with a genuine TPM and thereby establishing a root of trust for subsequent cryptographic operations. The TPM protects its keys behind a tamper-resistant hardware boundary and access control mechanisms, preventing unauthorized duplication or modification. Storage within the TPM ensures that cryptographic material is bound to the specific hardware platform, mitigating risks associated with software-based key storage and potential compromise.

Attestation relies on the collection of system measurements: cryptographic hashes of specific boot components and configurations, including the UEFI firmware, bootloader, and kernel. These measurements are chained into the TPM’s Platform Configuration Registers (PCRs) by an extend operation, $\mathrm{PCR}_{\text{new}} = H(\mathrm{PCR}_{\text{old}} \parallel m)$, so each register holds a hash chain that depends on every measurement $m$ and on the order in which they were recorded. To report this state, the TPM produces a quote: a digital signature over the selected PCR values and a verifier-supplied nonce, generated with a private key that never leaves the TPM. The signature binds the reported measurements to that TPM and to that specific request. Any modification to a measured component yields different PCR values; the TPM will still sign those values faithfully, but they will no longer match what the verifier expects, which exposes the potential compromise.
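In a TPM, measurements are chained by extending a Platform Configuration Register (PCR): the new register value is the hash of the old value concatenated with the new measurement. The Python sketch below models a single SHA-256 PCR under simplifying assumptions (the register starts at all zeros, and each measurement is just the SHA-256 of a placeholder byte string); real firmware measures specific structures and keeps an event log alongside the PCRs. It shows why both a tampered component and a reordered boot sequence change the final value.

```python
import hashlib

PCR_SIZE = 32  # a SHA-256 PCR is 32 bytes; this sketch starts it at all zeros

def measure(component: bytes) -> bytes:
    """Measurement = SHA-256 digest of the component's bytes (simplified)."""
    return hashlib.sha256(component).digest()

def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM-style extend: new value = SHA-256(old value || measurement)."""
    return hashlib.sha256(pcr + measurement).digest()

def replay(components: list[bytes]) -> bytes:
    """Fold the measurement of each boot component, in order, into one PCR value."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, measure(component))
    return pcr

# Placeholder byte strings standing in for real boot images.
boot = [b"uefi-firmware-image", b"bootloader-image", b"kernel-image"]
good = replay(boot)
tampered = replay([b"uefi-firmware-image", b"evil-bootloader", b"kernel-image"])
reordered = replay(list(reversed(boot)))
print(good != tampered, good != reordered)  # True True
```

Because each step hashes the previous value, a verifier only needs the final PCR value (plus the event log, in practice) to detect a change anywhere in the chain.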

Following signature generation, the attested data, comprising the system measurements and the digital signature, is transmitted to a Remote Attestation Server. This server functions as a trusted third party responsible for validating the authenticity and integrity of the reported system state. The server maintains a database of expected values, often representing a known-good configuration or policy. Verification involves two checks: validating the signature with the public part of the TPM’s attestation key, whose binding to a genuine TPM is vouched for through the Endorsement Key (the EK itself is not normally used to sign quotes), and comparing the received measurements against the expected values. Successful verification confirms that the system’s reported state matches the expected configuration, establishing a basis for trust and enabling secure operations.
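The verifier's two checks can be sketched in Python. To stay within the standard library, this sketch substitutes an HMAC for the TPM's signature; that is a swapped-in technique, not how TPM quotes work, since real quotes carry an asymmetric signature from an attestation key and are checked with its public key. The message layout (`nonce || pcr_value`, both fixed-length) and the function names are assumptions for illustration.

```python
import hashlib
import hmac
import secrets

# STAND-IN ONLY: a shared HMAC key plays the role of the TPM attestation key.
# Real quotes carry an asymmetric (RSA/ECC) signature checked with a public key.
AK_SECRET = secrets.token_bytes(32)

def tpm_quote(pcr_value: bytes, nonce: bytes) -> bytes:
    """Simulated device side: authenticate the verifier's nonce together with the PCR value.
    Both fields are fixed-length, so the concatenation is unambiguous."""
    return hmac.new(AK_SECRET, nonce + pcr_value, hashlib.sha256).digest()

def verify_quote(pcr_value: bytes, nonce: bytes, tag: bytes, expected_pcr: bytes) -> bool:
    """Verifier side: check authenticity and freshness first, then compare with the known-good value."""
    expected_tag = hmac.new(AK_SECRET, nonce + pcr_value, hashlib.sha256).digest()
    if not hmac.compare_digest(expected_tag, tag):
        return False  # forged, altered, or produced for a different nonce
    return hmac.compare_digest(pcr_value, expected_pcr)

nonce = secrets.token_bytes(16)  # fresh per request so an old quote cannot be replayed
good_pcr = hashlib.sha256(b"known-good boot").digest()
print(verify_quote(good_pcr, nonce, tpm_quote(good_pcr, nonce), good_pcr))  # True
```

Note that a tampered system still produces a correctly authenticated quote; it is the comparison against the expected value, not the signature check, that rejects it.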

The verification process within remote attestation fundamentally relies on comparing the submitted evidence – comprised of measurements and signatures – against pre-defined, known-good states established as a baseline of trust. These known-good states are typically cryptographic hashes or digital signatures representing the expected configuration of the system’s boot process, firmware, and critical software components. Successful verification occurs when the submitted measurements align with these expected values, confirming the system’s integrity and establishing a level of confidence in its current state. Discrepancies indicate potential tampering or compromise, triggering a failure in the attestation process and denying access or functionality. The specificity of these known-good states, and the cryptographic strength of the comparison, directly impact the robustness of the trust established.

Navigating Trust Levels: Decision Making in Attestation

The decision-making process within an attestation system directly utilizes the result of the preceding verification step as its primary input for determining the overall trust level. This result, which indicates the success or failure of specific integrity checks, is not treated as a simple binary value; rather, it’s analyzed in conjunction with other factors, such as the specific checks passed or failed, and the severity of any identified issues. The outcome of this analysis then informs the assignment of a trust level, representing the degree of confidence in the attested system’s authenticity and integrity. This allows for a graduated scale of trust, rather than a strict pass/fail determination, acknowledging that varying levels of compromise may exist.

Traditional verification systems often produce a binary result, pass or fail, which limits the granularity of trust assessment. A Heyting algebra provides a formal mathematical framework for reasoning about degrees of evidence. Its truth values are not limited to true and false; they form a bounded lattice ordered by strength of evidence, which may be as small as a three-level chain and need not be a continuous scale. Meet ($\land$) and join ($\lor$) give conjunction and disjunction, and implication $a \to b$ is the largest $c$ such that $c \land a \le b$. Because $a \land b$ is never stronger than either $a$ or $b$, combined evidence is only as strong as its weakest part. Unlike Boolean logic, the law of the excluded middle $a \lor \lnot a = \top$ need not hold, so a lack of evidence that a system is trustworthy is not automatically evidence that it is untrustworthy. This enables a more nuanced evaluation of system integrity, where multiple verification results can be combined into a trust level reflecting the overall confidence in the attested system, rather than a simple pass/fail determination.
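The smallest interesting case is a three-level chain, and every finite chain is a Heyting algebra. The Python sketch below assumes hypothetical levels `UNTRUSTED < PARTIAL < TRUSTED` (the paper's own lattice may be richer) and implements meet, join, and Heyting implication, where `a → b` is the largest `c` whose meet with `a` lies at or below `b`; negation is `a → UNTRUSTED`. It shows several results combining by meet, and the law of the excluded middle failing at the middle level.

```python
from enum import IntEnum
from functools import reduce

class Trust(IntEnum):
    """Hypothetical three-level chain UNTRUSTED < PARTIAL < TRUSTED (bottom to top)."""
    UNTRUSTED = 0
    PARTIAL = 1
    TRUSTED = 2

def meet(a: Trust, b: Trust) -> Trust:
    """Conjunction: on a chain, the weaker of the two levels."""
    return min(a, b)

def join(a: Trust, b: Trust) -> Trust:
    """Disjunction: on a chain, the stronger of the two levels."""
    return max(a, b)

def implies(a: Trust, b: Trust) -> Trust:
    """Heyting implication: the largest c with meet(c, a) <= b.
    On a chain this is the top element when a <= b, and b otherwise."""
    return Trust.TRUSTED if a <= b else b

def neg(a: Trust) -> Trust:
    """Pseudo-complement: a -> bottom."""
    return implies(a, Trust.UNTRUSTED)

# Combining several verification results by meet: the weakest result dominates.
results = [Trust.TRUSTED, Trust.PARTIAL, Trust.TRUSTED]
print(reduce(meet, results).name)  # PARTIAL

# Excluded middle fails at the middle level: PARTIAL or not-PARTIAL is still only PARTIAL.
p = Trust.PARTIAL
print(join(p, neg(p)).name)  # PARTIAL
```

Double negation is not the identity either: `neg(neg(Trust.PARTIAL))` is `TRUSTED`, another way the algebra keeps "not known to be bad" distinct from "known to be good".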

The decision-making process incorporates external contextual information to refine trust assessments. This includes evaluating potential threats to the attested system, such as the ‘Evil Maid Attack’, in which an attacker gains brief physical access to a device left unattended and compromises its integrity, for example by tampering with the boot chain to capture a disk-encryption passphrase. Assessing these threats involves analyzing the system’s environment, physical security measures, and potential attack vectors. The identified risks are then factored into the overall trust level calculation, potentially downgrading confidence even if initial verification steps pass. This contextual analysis ensures a more realistic and comprehensive evaluation of system trustworthiness, accounting for real-world vulnerabilities beyond purely technical checks.
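One way to fold context into the decision, sketched in Python under stated assumptions: each hypothetical context factor caps the trust level it still permits, and the final level is the meet (here `min`) of the verification result and all applicable caps. The factor names, the caps, and the three-level scale are illustrative, not taken from the paper.

```python
from enum import IntEnum

class Trust(IntEnum):
    """Hypothetical trust levels, ordered UNTRUSTED < PARTIAL < TRUSTED."""
    UNTRUSTED = 0
    PARTIAL = 1
    TRUSTED = 2

# Hypothetical context factors and the highest trust level each still permits.
CONTEXT_CAPS = {
    "unattended_physical_access": Trust.PARTIAL,  # exposure to an evil-maid style attack
    "tamper_seal_broken": Trust.UNTRUSTED,
}

def decide(verified: Trust, context: set[str]) -> Trust:
    """Final level = meet (min) of the verification result and every applicable cap.
    An unrecognised factor raises KeyError instead of being silently ignored."""
    level = verified
    for factor in context:
        level = min(level, CONTEXT_CAPS[factor])
    return level

print(decide(Trust.TRUSTED, set()).name)                           # TRUSTED
print(decide(Trust.TRUSTED, {"unattended_physical_access"}).name)  # PARTIAL
```

Using the meet means context can only lower confidence, never raise it above what verification established.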

Establishing confidence in system integrity and authenticity necessitates verifying that the system operates as intended and has not been compromised. This involves confirming the system’s components haven’t been tampered with, its configuration matches expectations, and its behavior aligns with defined security policies. Successful verification provides assurances regarding the system’s trustworthiness, allowing users or other systems to rely on its outputs and functionality without undue risk. The degree of confidence established is not necessarily binary; it can be expressed as a graded level reflecting the thoroughness of the verification process and the strength of the evidence supporting the system’s integrity.

Mapping System States: From Origin to Compromise

The concept of an ‘Initial Object’ establishes a crucial baseline for any system undergoing attestation. In category theory, an initial object is one with exactly one morphism to every other object; here it does not represent a state of the system but the complete absence of verified information about it. Prior to attestation, the system exists as an unknown, a blank slate devoid of trustworthiness. This initial lack of knowledge is not a flaw in the process but a necessary starting point: attestation actively transitions the system from this unknown state to one of verified confidence or, conversely, confirmed compromise. The Initial Object therefore functions as a placeholder for uncertainty, enabling a rigorous, formalized assessment of the system’s evolution and current security posture. Understanding this starting point is fundamental to defining what attestation seeks to achieve: a reliable mapping from initial ignorance to informed trust.

The concept of a ‘Terminal Object’, represented as ‘Fire’, captures the ultimate failure state within a system undergoing attestation. In category theory, a terminal object is one that receives exactly one morphism from every other object, which fits the reading that any state can end in compromise. This is not merely a functional error but a complete and irreversible loss of trust. Once a system reaches this state, it is deemed compromised: any data it holds is suspect, and its continued operation poses unacceptable risks. ‘Fire’ signifies that recovery is no longer possible through standard attestation procedures; the system is effectively unusable and must be isolated or decommissioned. Defining this terminal condition is crucial, as it establishes a clear boundary for acceptable risk and dictates the necessary safeguards to prevent a cascade of failures, ensuring the integrity of the broader system it once served.
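A small Python sketch can check the initial/terminal definitions (exactly one morphism out to every object, exactly one morphism in from every object) on a toy model. It assumes, hypothetically, that attestation states form a preorder category: there is an arrow from state `a` to state `b` exactly when `b` is reachable from `a`, so each hom-set has at most one arrow and "exactly one morphism" reduces to "reachable". The intermediate state names are invented for illustration; only the roles of the unknown starting state and ‘Fire’ come from the text.

```python
from itertools import product

# Hypothetical attestation states and one-step transitions; every state can end in Fire.
STATES = ["Unknown", "Measured", "Verified", "Fire"]
STEPS = {("Unknown", "Measured"), ("Measured", "Verified"),
         ("Unknown", "Fire"), ("Measured", "Fire"), ("Verified", "Fire")}

def reachability(states, steps):
    """Reflexive-transitive closure: the hom-sets of a preorder category (at most one arrow each)."""
    hom = {(s, s) for s in states} | set(steps)  # identities plus given arrows
    changed = True
    while changed:
        changed = False
        for (a, b), (c, d) in product(list(hom), repeat=2):
            if b == c and (a, d) not in hom:  # compose a -> b -> d
                hom.add((a, d))
                changed = True
    return hom

def initial_objects(states, hom):
    """Objects with an arrow to every object."""
    return [s for s in states if all((s, t) in hom for t in states)]

def terminal_objects(states, hom):
    """Objects with an arrow from every object."""
    return [t for t in states if all((s, t) in hom for s in states)]

hom = reachability(STATES, STEPS)
print(initial_objects(STATES, hom), terminal_objects(STATES, hom))  # ['Unknown'] ['Fire']
```

A richer model with several distinct morphisms between the same pair of states would need explicit hom-sets rather than a reachability relation.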

Attestation gauges system integrity by locating a system between these two extremes: the initial object, where nothing has been verified, and the terminal ‘Fire’ object, where trust is irrecoverably lost. This process does not simply declare a system trustworthy or untrustworthy but constructs a detailed map of its current standing, so trustworthiness exists on a spectrum that supports nuanced assessments of risk and confidence. This granular approach is particularly vital in complex systems where vulnerabilities may emerge incrementally, and where a clear understanding of the system’s progression towards, or away from, a compromised state is paramount for proactive security measures and informed decision-making.

The pursuit of robust system attestation benefits significantly from richer categorical structure. Specifically, two-category (2-category) theory, in which there are objects, morphisms between objects, and 2-morphisms between morphisms, allows for a precise definition not only of system states and transitions but also of relationships between transitions. This formalism is not merely abstract; it provides a foundation for identifying vulnerabilities and formally verifying security properties. Further refinement comes through Kan extensions, which extend a functor along another functor in a universal way and are used in this framework to compare attestation processes across different system configurations and trust models. By rigorously mapping these relationships, researchers can move beyond ad-hoc security assessments to a mathematically grounded understanding of system trustworthiness, paving the way for more resilient and verifiable digital infrastructure. Attestation then becomes not a binary pass/fail but a nuanced, verifiable assessment of system integrity throughout its lifecycle.

Beyond Isolated Components: Systemic Integrity and the Future of Trust

System integrity relies not on isolated verification of parts, but on a comprehensive assessment of the complete system – its ‘Composition’. Traditional security models often focus on individual component attestation, yet a compromised dependency within a complex system can undermine the trustworthiness of the entire architecture. This holistic perspective recognizes that security is a systemic property, emerging from the interactions between hardware, software, and firmware. A thorough compositional attestation process meticulously maps these interdependencies, establishing a chain of trust that extends from the root of trust to every element within the system. By verifying the integrity of the entire assembly, rather than simply its parts, a more robust and resilient security posture is achieved, safeguarding against both known and emerging threats.
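A minimal Python sketch of the compositional view, assuming (hypothetically) that an element's effective trust is the meet of its own attestation result and the effective trust of everything it depends on. The element names, the three-level scale, and this weakest-link rule are illustrative choices, not the paper's construction.

```python
from enum import IntEnum
from functools import lru_cache

class Trust(IntEnum):
    """Hypothetical trust levels, ordered UNTRUSTED < PARTIAL < TRUSTED."""
    UNTRUSTED = 0
    PARTIAL = 1
    TRUSTED = 2

# Hypothetical system: each element's own attestation result and what it depends on.
OWN = {"tpm": Trust.TRUSTED, "firmware": Trust.TRUSTED, "bootloader": Trust.TRUSTED,
       "kernel": Trust.TRUSTED, "driver": Trust.PARTIAL, "app": Trust.TRUSTED}
DEPS = {"tpm": [], "firmware": ["tpm"], "bootloader": ["firmware"],
        "kernel": ["bootloader"], "driver": ["kernel"], "app": ["kernel", "driver"]}

@lru_cache(maxsize=None)
def effective(element: str) -> Trust:
    """Effective trust = meet of the element's own result and its dependencies' effective trust.
    Assumes DEPS is acyclic (a chain of trust rooted at the TPM)."""
    return min([OWN[element]] + [effective(d) for d in DEPS[element]])

for name in ("kernel", "app"):
    print(name, effective(name).name)
# kernel TRUSTED
# app PARTIAL
```

The application attests cleanly on its own, yet its effective trust drops to `PARTIAL` because it depends on a partially trusted driver: a component-by-component view would miss this.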

Compositional assessment does not replace per-element attestation; it builds on it. System trustworthiness demands rigorous attestation of each element comprising the system, extending beyond software verification to the foundational hardware, firmware, and even the configuration data that dictates operational parameters. Attesting every element establishes a chain of trust, confirming that each piece hasn’t been tampered with and operates as intended. Without this granular verification, a single compromised element, such as a rogue driver, a modified bootloader, or a malicious hardware implant, can undermine the integrity of the entire system, regardless of the strength of higher-level security measures. Consequently, a robust attestation framework is essential for building resilient systems capable of withstanding increasingly sophisticated attacks targeting any point within the technological stack.

A system’s robustness is determined not solely by the security of its individual parts but also by a comprehensive understanding of how those parts interact. Recognizing these interdependencies allows for the development of systems designed to anticipate and neutralize sophisticated attacks that target vulnerabilities arising from component relationships. By mapping these connections, security architects can proactively fortify critical pathways and build redundancy into the system’s architecture, ensuring continued operation even when faced with compromise. This approach moves beyond reactive patching to a proactive design philosophy, fostering resilience and minimizing the impact of increasingly complex and targeted threats. Consequently, systems built on this principle should be better placed to withstand adversarial actions and maintain operational integrity.

The research details a novel application of category theory to establish a rigorous, formal model for trust and attestation: essentially, a mathematical language for describing how security claims are made and verified within a complex system. This framework moves beyond simply checking individual components; it maps the relationships between those components, allowing for a comprehensive understanding of how trust propagates, or fails to propagate, through the entire architecture. By abstracting system elements as ‘objects’ and their dependencies as ‘morphisms’, the model enables precise reasoning about security properties and decision-making processes, offering a foundation for verifying systemic integrity and building systems resilient to increasingly sophisticated attacks. This structured approach allows security professionals to move beyond ad-hoc assessments and towards provable guarantees of trustworthiness, ultimately bolstering confidence in critical infrastructure and sensitive data handling.

The pursuit of formalizing trust, as detailed in this exploration of category theory and system security, inherently demands a willingness to deconstruct existing assumptions. One dismantles established notions of attestation and verification not to create chaos, but to reveal the underlying structure. Donald Davies keenly observed, “It is surprisingly difficult to define what one means by ‘information’.” This resonates deeply with the work presented; the very act of modeling trust necessitates a rigorous definition of its components – a reverse-engineering of a concept often taken for granted. By probing the boundaries of what constitutes ‘trust’ within a system, researchers illuminate potential vulnerabilities and build more resilient frameworks, effectively treating the system as a challenge to be overcome through intellectual exploration.

What Lies Ahead?

The construction of TRUST, as presented, isn’t an endpoint, but a deliberately introduced complexity. Every exploit starts with a question, not with intent. The model, by formalizing trust, immediately highlights the areas where current systems rely on implicit, and therefore fragile, assumptions. The immediate challenge isn’t simply proving systems trustworthy within this framework, but discovering where the framework itself breaks down – where the categories fail to capture the nuance of real-world compromise.

Topos theory, the setting in which truth values naturally form a Heyting algebra, offers a powerful lens for abstraction but necessitates a reckoning with its limitations. Can the inherent ambiguity of natural language, the very basis of security policies, be faithfully represented within a formally rigorous system? Or does the attempt to eliminate all uncertainty necessarily introduce new, subtle vulnerabilities? The focus must shift from verification within the model to the model’s capacity to accurately reflect the systems it purports to represent.

Ultimately, the value of a category-theoretic approach lies not in providing definitive answers, but in framing the right questions. The next iteration shouldn’t seek to solve trust, but to map the boundaries of its insolubility. A truly robust system isn’t one that’s proven secure, but one that anticipates its own failure, and provides a framework for graceful degradation – or, perhaps, intelligent disobedience.

Original article: https://arxiv.org/pdf/2602.11376.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-14 16:59